Gunnar Raetsch

Improving Clinical Predictions through Unsupervised Time Series Representation Learning

Dec 02, 2018

Abstract: In this work, we investigate unsupervised representation learning on medical time series, which bears the promise of leveraging copious amounts of existing unlabeled data in order to eventually assist clinical decision making. By evaluating on the prediction of clinically relevant outcomes, we show that in a practical setting, unsupervised representation learning can offer clear performance benefits over end-to-end supervised architectures. We experiment with using sequence-to-sequence (Seq2Seq) models in two different ways, as an autoencoder and as a forecaster, and show that the best performance is achieved by a forecasting Seq2Seq model with an integrated attention mechanism, proposed here for the first time in the setting of unsupervised learning for medical time series.

mTim: Rapid and accurate transcript reconstruction from RNA-Seq data

Sep 20, 2013

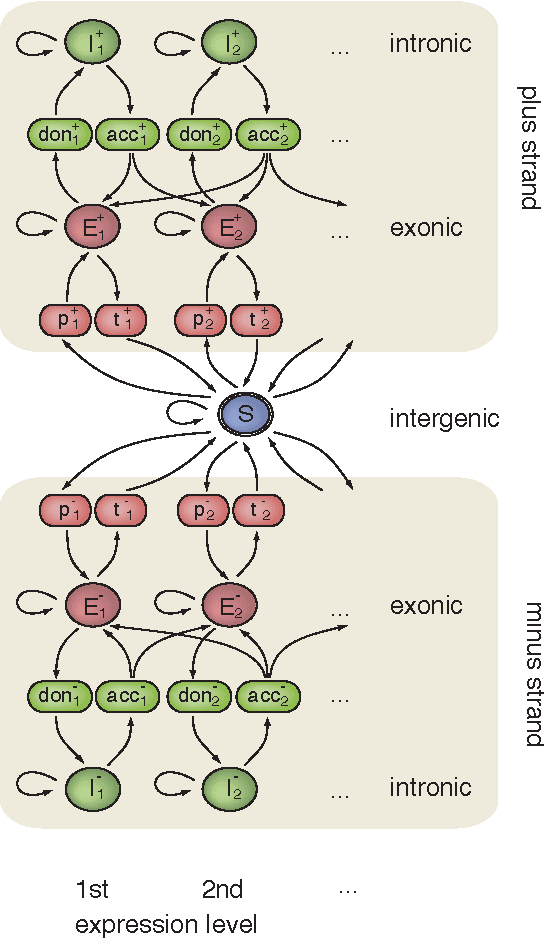

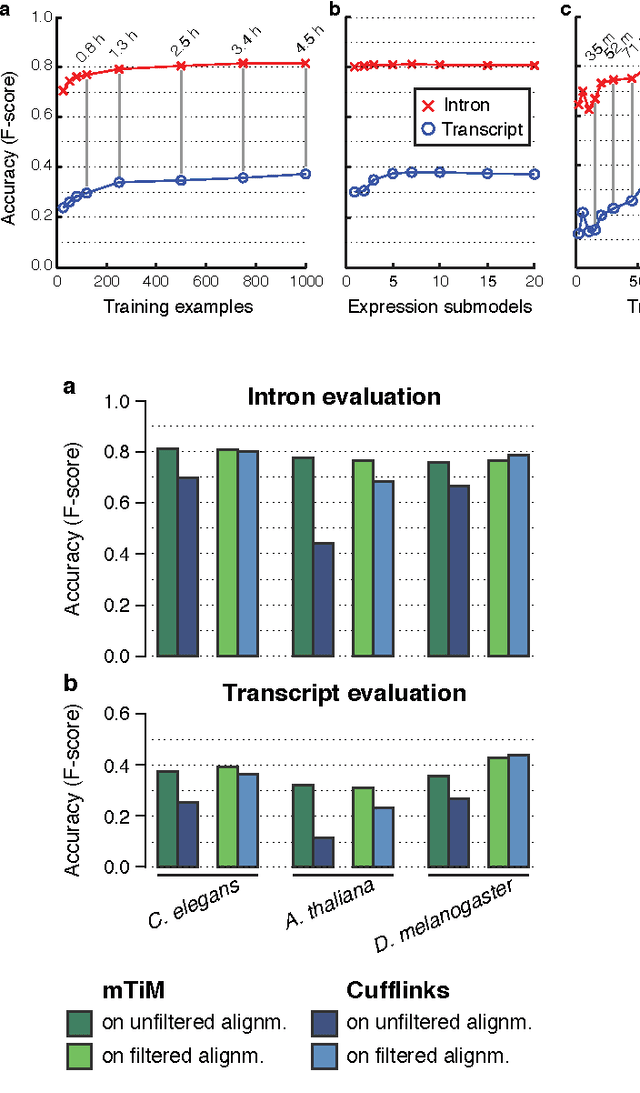

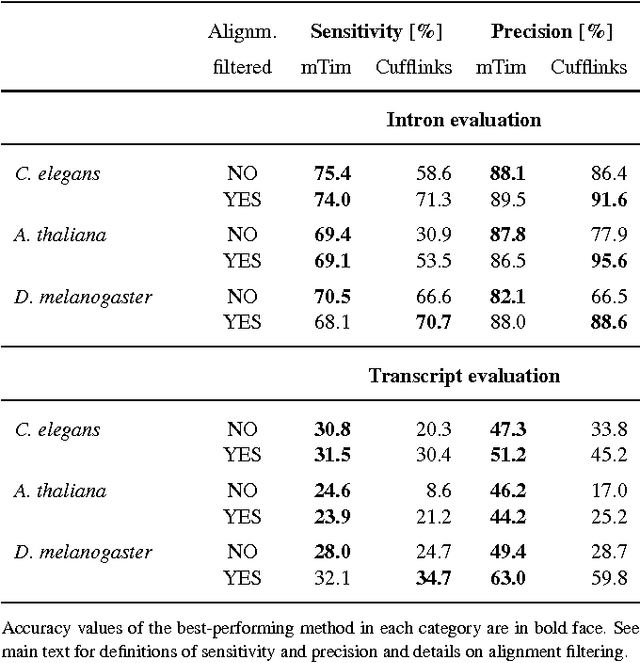

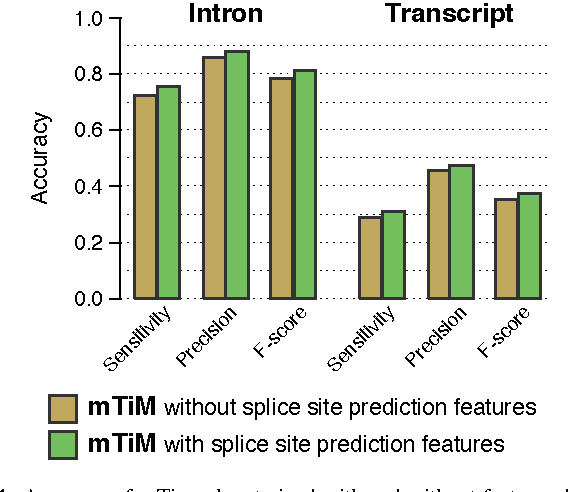

Abstract: Recent advances in high-throughput cDNA sequencing (RNA-Seq) technology have revolutionized transcriptome studies. A major motivation for RNA-Seq is to map the structure of expressed transcripts at nucleotide resolution. With accurate computational tools for transcript reconstruction, this technology may also become useful for genome (re-)annotation, which has mostly relied on de novo gene finding where gene structures are primarily inferred from the genome sequence. We developed a machine-learning method, called mTim (margin-based transcript inference method) for transcript reconstruction from RNA-Seq read alignments that is based on discriminatively trained hidden Markov support vector machines. In addition to features derived from read alignments, it utilizes characteristic genomic sequences, e.g. around splice sites, to improve transcript predictions. mTim inferred transcripts that were highly accurate and relatively robust to alignment errors in comparison to those from Cufflinks, a widely used transcript assembly method.

The Feature Importance Ranking Measure

Jun 23, 2009

Abstract: The most accurate predictions are typically obtained by learning machines with complex feature spaces (e.g., as induced by kernels). Unfortunately, such decision rules are hardly accessible to humans and cannot easily be used to gain insights about the application domain. Therefore, one often resorts to linear models in combination with variable selection, thereby sacrificing some predictive power for presumptive interpretability. Here, we introduce the Feature Importance Ranking Measure (FIRM), which, by retrospective analysis of arbitrary learning machines, makes it possible to achieve both excellent predictive performance and superior interpretation. In contrast to standard raw feature weighting, FIRM takes the underlying correlation structure of the features into account. Thereby, it is able to discover the most relevant features, even if their appearance in the training data is entirely prevented by noise. The desirable properties of FIRM are investigated analytically and illustrated in simulations.

* 15 pages, 3 figures. To appear in the Proceedings of the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML/PKDD), 2009
