Guillaume Noyel

LHC

Logarithmic Mathematical Morphology: theory and applications

Sep 05, 2023

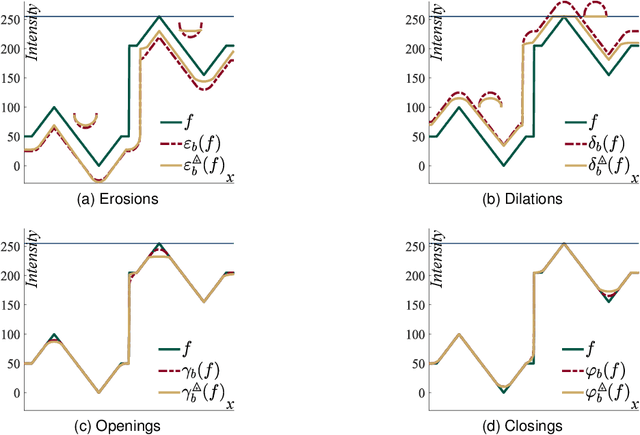

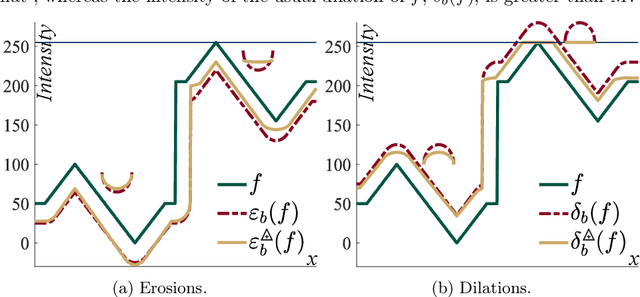

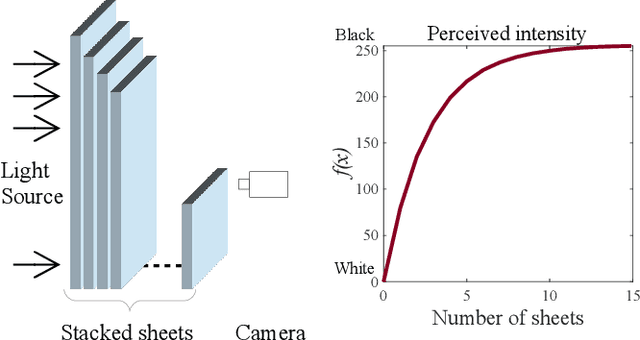

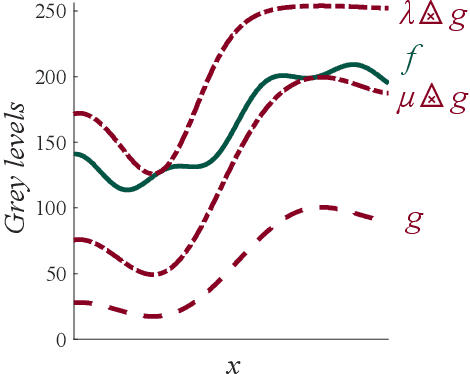

Abstract: Classically, in Mathematical Morphology, an image (i.e., a grey-level function) is analysed by another image, which is named the structuring element or the structuring function. This structuring function is moved over the image domain and added to the image. However, in an image presenting lighting variations, the analysis by a structuring function requires that its amplitude vary according to the image intensity. This property does not hold in Mathematical Morphology for grey-level functions when the structuring function is added to the image with the usual additive law. To address this issue, a new framework is defined with an additive law for which the amplitude of the structuring function varies according to the image amplitude. This additive law is chosen within the Logarithmic Image Processing framework and models lighting variations with a physical cause, such as a change of light intensity or a change of camera exposure-time. The new framework is named Logarithmic Mathematical Morphology (LMM) and allows the definition of operators which are robust to such lighting variations. In images with uniform lighting variations, the new LMM operators perform better than the usual morphological operators. In eye-fundus images with non-uniform lighting variations, an LMM method for vessel segmentation is compared to three state-of-the-art approaches. Results show that the LMM approach is more robust to such variations than the three others.
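The abstract does not state the additive law itself. As a minimal sketch, assuming the standard LIP addition `f + g - f*g/M` with `M = 256` for 8-bit images, the following shows how the amplitude actually added by a structuring function shrinks as the image value approaches `M`. The function names and the 1D dilation (a supremum of LIP sums over a window, with replicated edges) are illustrative assumptions, not the paper's implementation.

```python
import numpy as np

M = 256.0  # assumed upper bound of the grey-level range (8-bit images)

def lip_add(f, g, m=M):
    """LIP addition of two grey-level values or arrays: f + g - f*g/m."""
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    return f + g - f * g / m

def lip_sub(f, g, m=M):
    """LIP subtraction, the inverse of lip_add: m*(f - g)/(m - g)."""
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    return m * (f - g) / (m - g)

def log_dilation_1d(f, b, m=M):
    """Hypothetical 1D logarithmic dilation.

    out[x] = max over h of lip_add(f[x - h], b[h]), where b has odd length
    2r + 1 and is indexed by h = -r..r. Edges of f are replicated.
    """
    f = np.asarray(f, dtype=float)
    b = np.asarray(b, dtype=float)
    r = len(b) // 2
    n = len(f)
    fp = np.pad(f, r, mode="edge")  # fp[i] corresponds to f[i - r]
    out = np.full(n, -np.inf)
    for h in range(-r, r + 1):
        shifted = fp[r - h : r - h + n]  # f[x - h] for every x
        out = np.maximum(out, lip_add(shifted, b[h + r], m))
    return out
```

With these definitions, `lip_add(10, 20)` gives 29.21875 while `lip_add(200, 20)` gives 204.375: the same structuring value of 20 contributes about 19.2 grey levels at a value of 10 but only about 4.4 at a value of 200, which is the intensity-dependent amplitude the abstract refers to.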

Logarithmic Morphological Neural Nets robust to lighting variations

Apr 20, 2022

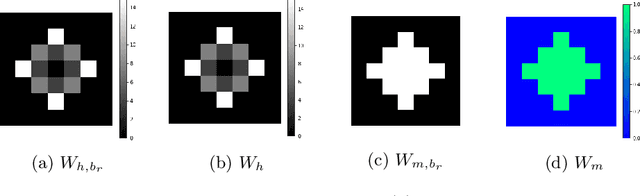

Abstract: Morphological neural networks make it possible to learn the weights of a structuring function given the desired output image. However, those networks are not intrinsically robust to lighting variations with an optical cause, such as a change of light intensity. In this paper, we introduce a morphological neural network which possesses such robustness to lighting variations. It is based on the recent framework of Logarithmic Mathematical Morphology (LMM), i.e. Mathematical Morphology defined with the Logarithmic Image Processing (LIP) model. This model has a LIP additive law which simulates a variation of the light intensity in images. In particular, we learn the structuring function of an LMM operator robust to those variations, namely the map of LIP-additive Asplund distances. Results on images show that our neural network satisfies the required property.

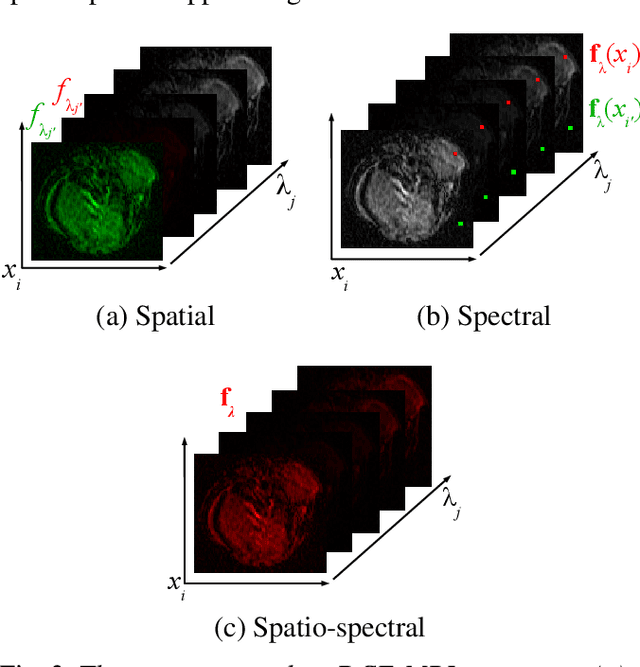

Morphological segmentation of hyperspectral images

Oct 02, 2020

Abstract: The present paper develops a general methodology for the morphological segmentation of hyperspectral images, i.e., images with a large number of channels. This approach, based on the watershed, is composed of a spectral classification to obtain the markers and a vectorial gradient which gives the spatial information. Several alternative gradients are adapted to the different hyperspectral functions. Data reduction is performed either by Factor Analysis or by model fitting. Image segmentation is done on different spaces: factor space, parameter space, etc. On all these spaces the spatial/spectral segmentation approach is applied, leading to relevant results on the image.

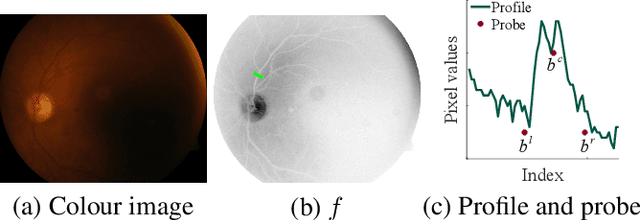

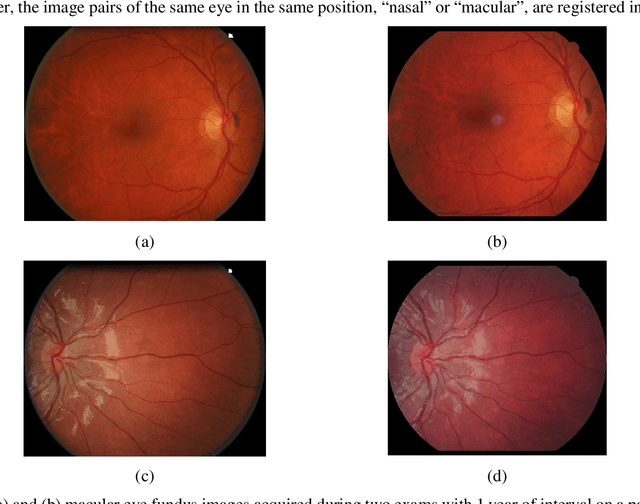

Retinal vessel segmentation by probing adaptive to lighting variations

Apr 29, 2020

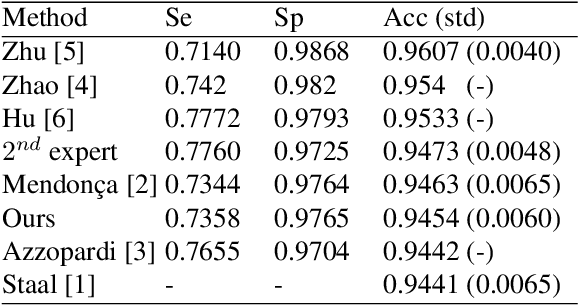

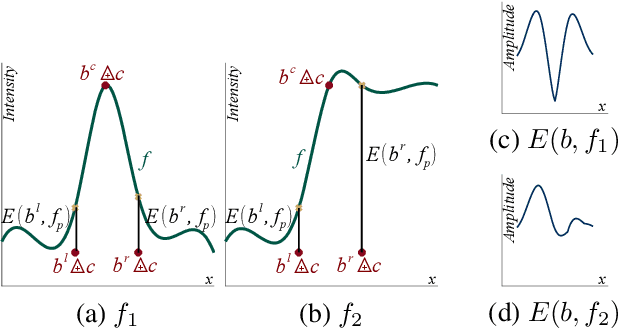

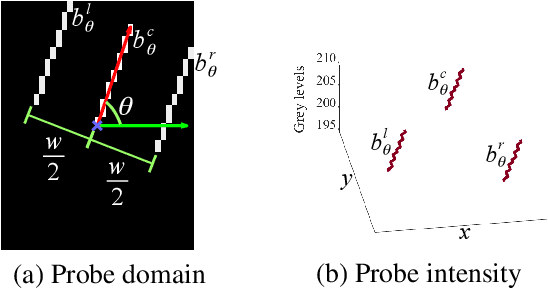

Abstract: We introduce a novel method to extract the vessels in eye fundus images which is adaptive to lighting variations. In the Logarithmic Image Processing framework, a 3-segment probe detects the vessels by probing the topographic surface of an image from below. A map of contrasts between the probe and the image allows the vessels to be detected by a threshold. In a low-contrast image, results show that our method extracts the vessels better than another state-of-the-art method. In a highly contrasted image database (DRIVE) with a reference, our method has an accuracy of 0.9454, which is similar to or better than that of three state-of-the-art methods and below that of three others. The three best methods have a higher accuracy than a manual segmentation by another expert. Importantly, our method automatically adapts to the lighting conditions of the image acquisition.

* Proceedings of 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). To appear in https://ieeexplore.ieee.org

Multivariate mathematical morphology for DCE-MRI image analysis in angiogenesis studies

Oct 28, 2019

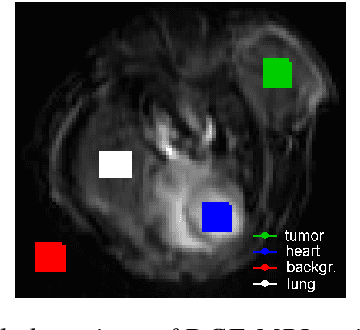

Abstract: We propose a new computer-aided detection framework for tumours acquired on DCE-MRI (Dynamic Contrast Enhanced Magnetic Resonance Imaging) series on small animals. In this approach we consider DCE-MRI series as multivariate images. A full multivariate segmentation method based on dimensionality reduction, noise filtering, supervised classification and stochastic watershed is explained and tested on several data sets. The two key points introduced in this paper are contour-preserving noise reduction and spatio-temporal segmentation by stochastic watershed. Noise reduction is performed by selecting factorial axes of Factor Correspondence Analysis in a way that preserves contours. Then a spatio-temporal approach based on stochastic watershed is used to segment tumours. The results obtained are in accordance with the diagnosis of the medical doctors.

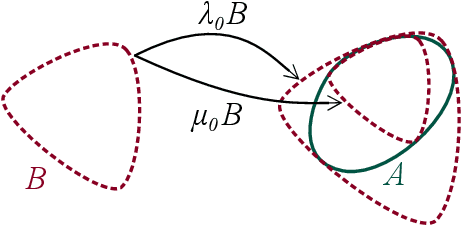

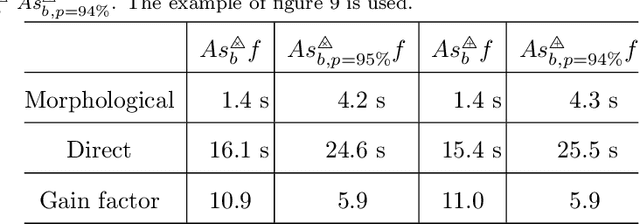

Functional Asplund's metrics for pattern matching robust to variable lighting conditions

Sep 04, 2019

Abstract: In this paper, we propose a complete framework to process images captured under uncontrolled lighting and especially under low lighting. By taking advantage of the Logarithmic Image Processing (LIP) context, we study two novel functional metrics: i) the LIP-multiplicative Asplund's metric, which is robust to object absorption variations, and ii) the LIP-additive Asplund's metric, which is robust to variations of source intensity and exposure-time. We introduce noise-robust versions of these metrics. We demonstrate that the maps of their corresponding distances between an image and a reference template are linked to Mathematical Morphology, which facilitates their implementation. We assess them in various situations with different lightings and movements. Results show that those maps of distances are robust to lighting variations. Importantly, they are efficient at detecting patterns in low-contrast images with a template acquired under a different lighting.
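The abstract does not give the metric's formula. The sketch below assumes the usual double-sided probing definition of the LIP-additive Asplund's metric: with `c1` the smallest constant such that `c1` LIP-added to `g` lies above `f`, and `c2` the largest constant such that `c2` LIP-added to `g` lies below `f`, the distance is the LIP difference of `c1` and `c2`. The value `M = 256` and all function names are assumptions for illustration, and the noise-robust variants mentioned in the abstract are not covered.

```python
import numpy as np

M = 256.0  # assumed upper bound of the grey-level range (8-bit images)

def lip_add(f, g, m=M):
    """LIP addition: f + g - f*g/m."""
    return f + g - f * g / m

def lip_sub(f, g, m=M):
    """LIP subtraction: m*(f - g)/(m - g)."""
    return m * (f - g) / (m - g)

def lip_additive_asplund(f, g, m=M):
    """Assumed LIP-additive Asplund distance between same-size arrays f and g.

    c1 = max(f LIP-minus g) is the smallest constant whose LIP sum with g
    stays above f; c2 = min(f LIP-minus g) is the largest constant whose
    LIP sum with g stays below f. The distance is c1 LIP-minus c2.
    """
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    diff = lip_sub(f, g, m)
    c1 = diff.max()
    c2 = diff.min()
    return float(lip_sub(c1, c2, m))

# A template and a signal, then the same signal after LIP-adding a constant,
# which in the LIP model simulates a change of exposure-time or intensity.
template = np.array([60.0, 100.0, 180.0, 90.0])
signal = np.array([50.0, 120.0, 200.0, 80.0])
relit = lip_add(signal, 40.0)

d_before = lip_additive_asplund(signal, template)
d_after = lip_additive_asplund(relit, template)
```

Under these assumptions `d_before` and `d_after` agree up to floating-point error, because LIP-adding a constant shifts `c1` and `c2` by the same LIP amount, and that shift cancels in their LIP difference.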

A Link Between the Multiplicative and Additive Functional Asplund's Metrics

Jul 17, 2019

Abstract: Functional Asplund's metrics were recently introduced to perform pattern matching robust to lighting changes thanks to double-sided probing in the Logarithmic Image Processing (LIP) framework. Two metrics were defined, namely the LIP-multiplicative Asplund's metric, which is robust to variations of object thickness (or opacity), and the LIP-additive Asplund's metric, which is robust to variations of camera exposure-time (or light intensity). Maps of distances (i.e. maps of these metric values) were also computed between a reference template and an image. Recently, it was proven that the map of LIP-multiplicative Asplund's distances corresponds to mathematical morphology operations. In this paper, the link between both metrics and between their corresponding distance maps will be demonstrated. It will be shown that the map of LIP-additive Asplund's distances of an image can be computed from the map of the LIP-multiplicative Asplund's distances of a transform of this image and vice-versa. Both maps will be related by the LIP isomorphism, which makes it possible to pass from the image space of the LIP-additive distance map to the positive real function space of the LIP-multiplicative distance map. Experiments will illustrate this relation and the robustness of the LIP-additive Asplund's metric to lighting changes.
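The abstract names the LIP isomorphism without writing it out. As a hedged illustration, the standard form `phi(f) = -M * ln(1 - f/M)` maps LIP addition to ordinary addition and LIP scalar multiplication to ordinary multiplication. The sketch below checks only these two properties numerically; it does not reproduce the paper's relation between the two distance maps, and `M = 256` and the function names are assumptions.

```python
import numpy as np

M = 256.0  # assumed upper bound of the grey-level range (8-bit images)

def lip_add(f, g, m=M):
    """LIP addition: f + g - f*g/m."""
    return f + g - f * g / m

def lip_mul(lam, f, m=M):
    """LIP multiplication of f by a real scalar lam: m - m*(1 - f/m)**lam."""
    return m - m * (1.0 - f / m) ** lam

def phi(f, m=M):
    """LIP isomorphism: maps grey levels f < m to real values."""
    return -m * np.log(1.0 - f / m)

def phi_inv(y, m=M):
    """Inverse isomorphism: maps real values back to grey levels below m."""
    return m * (1.0 - np.exp(-y / m))

f = np.array([10.0, 100.0, 200.0])
g = np.array([5.0, 50.0, 30.0])

# phi turns the LIP laws into the usual vector-space laws.
sum_ok = np.allclose(phi(lip_add(f, g)), phi(f) + phi(g))
mul_ok = np.allclose(phi(lip_mul(2.5, f)), 2.5 * phi(f))
```

Both checks hold because `1 - lip_add(f, g)/M` factors as `(1 - f/M) * (1 - g/M)`, so taking the logarithm turns the LIP sum into an ordinary sum.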

Region homogeneity in the Logarithmic Image Processing framework: application to region growing algorithms

Apr 17, 2019

Abstract: In order to create an image segmentation method robust to lighting changes, two novel homogeneity criteria of an image region were studied. Both were defined using the Logarithmic Image Processing (LIP) framework, whose laws model lighting changes. The first criterion estimates the LIP-additive homogeneity and is based on the LIP-additive law. It is theoretically insensitive to lighting changes caused by variations of the camera exposure-time or source intensity. The second, the LIP-multiplicative homogeneity criterion, is based on the LIP-multiplicative law and is insensitive to changes due to variations of the object thickness or opacity. Each criterion is then applied in Revol and Jourlin's (1997) region growing method, which is based on the homogeneity of an image region. The region growing method therefore becomes robust to the lighting changes specific to each criterion. Experiments on simulated and on real images presenting lighting variations demonstrate the robustness of the criteria to those variations. Compared to a state-of-the-art method based on the image component-tree, ours is more robust. These results open the way to numerous applications where the lighting is uncontrolled or partially controlled.

* The original publication is available at www.ias-iss.org

Registration of retinal images from Public Health by minimising an error between vessels using an affine model with radial distortions

Apr 17, 2019

Abstract: In order to estimate a registration model of eye fundus images made of an affinity and two radial distortions, we introduce an estimation criterion based on an error between the vessels. In [1], we estimated this model by minimising the error between characteristic points. In this paper, the detected vessels are selected using the circle and ellipse equations of the overlap area boundaries deduced from our model. Our method successfully registers 96 % of the 271 pairs in a Public Health dataset acquired mostly with different cameras. This is better than our previous method [1] and better than three other state-of-the-art methods. On a publicly available dataset, our method still registers the images better than the reference method.

Superimposition of eye fundus images for longitudinal analysis from large public health databases

Jul 18, 2018

Abstract: In this paper, a method is presented for superimposition (i.e. registration) of eye fundus images from persons with diabetes screened over many years for diabetic retinopathy. The method is fully automatic and robust to camera changes and colour variations across the images both in space and time. All the stages of the process are designed for longitudinal analysis of cohort public health databases where retinal examinations are made at approximately yearly intervals. The method relies on a model correcting two radial distortions and an affine transformation between pairs of images which is robustly fitted on salient points. Each stage involves linear estimators followed by non-linear optimisation. The model of image warping is also invertible for fast computation. The method has been validated (1) on a simulated montage and (2) on public health databases with 69 patients with high quality images (271 pairs acquired mostly with different types of camera and 268 pairs acquired mostly with the same type of camera) with success rates of 92% and 98%, and five patients (20 pairs) with low quality images with a success rate of 100%. Compared to two state-of-the-art methods, ours gives better results.

* This is an author-created, un-copyedited version of an article published in Biomedical Physics & Engineering Express. IOP Publishing Ltd is not responsible for any errors or omissions in this version of the manuscript or any version derived from it. The Version of Record is available online at https://doi.org/10.1088/2057-1976/aa7d16
