Guibin Wu

DocDiff: Document Enhancement via Residual Diffusion Models

May 06, 2023

Abstract:Removing degradation from document images not only improves their visual quality and readability, but also enhances the performance of numerous automated document analysis and recognition tasks. However, existing regression-based methods optimized for pixel-level distortion reduction tend to suffer from significant loss of high-frequency information, leading to distorted and blurred text edges. To compensate for this major deficiency, we propose DocDiff, the first diffusion-based framework specifically designed for diverse challenging document enhancement problems, including document deblurring, denoising, and removal of watermarks and seals. DocDiff consists of two modules: the Coarse Predictor (CP), which is responsible for recovering the primary low-frequency content, and the High-Frequency Residual Refinement (HRR) module, which adopts diffusion models to predict the residual (high-frequency information, including text edges) between the ground-truth and the CP-predicted image. DocDiff is a compact and computationally efficient model that benefits from a well-designed network architecture, an optimized training loss objective, and a deterministic sampling process with short time steps. Extensive experiments demonstrate that DocDiff achieves state-of-the-art (SOTA) performance on multiple benchmark datasets, and can significantly enhance the readability and recognizability of degraded document images. Furthermore, our proposed HRR module in pre-trained DocDiff is plug-and-play and ready-to-use, with only 4.17M parameters. It greatly sharpens the text edges generated by SOTA deblurring methods without additional joint training. Code is available at: https://github.com/Royalvice/DocDiff
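
The coarse-plus-residual split described in the abstract can be illustrated with a toy NumPy sketch. This is not the DocDiff code: the box blur is only a stand-in for the Coarse Predictor, the residual is computed directly from the ground truth rather than predicted by a diffusion model, and the names `box_blur`, `ground_truth`, `coarse`, and `residual` are hypothetical. It only shows why the residual carries the text-edge detail and why adding it back to the coarse image recovers the target.

```python
import numpy as np

def box_blur(image, k=5):
    """Stand-in 'coarse predictor': a k x k mean filter (an assumption, not the paper's CP)."""
    pad = k // 2
    padded = np.pad(image, pad, mode="edge")
    out = np.zeros_like(image, dtype=np.float64)
    height, width = image.shape
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + height, dx:dx + width]
    return out / (k * k)

# Toy "document": a white page (1.0) with one dark vertical stroke (0.0).
ground_truth = np.ones((32, 32), dtype=np.float64)
ground_truth[8:24, 14:18] = 0.0

# Low-frequency estimate of the page; edges of the stroke are smeared.
coarse = box_blur(ground_truth)

# Residual = ground truth minus coarse estimate. In the abstract, this is the
# quantity the HRR module learns to predict; here it is computed directly.
residual = ground_truth - coarse

# Adding the residual back to the coarse image restores the sharp target.
restored = coarse + residual

print("max |residual| in flat background:", np.abs(residual[:4, :4]).max())
print("max |residual| near the stroke:   ", np.abs(residual[8:24, 10:22]).max())
```

In this toy case the residual is zero in the flat background and non-zero only around the stroke, which matches the abstract's description of the residual as high-frequency information such as text edges.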

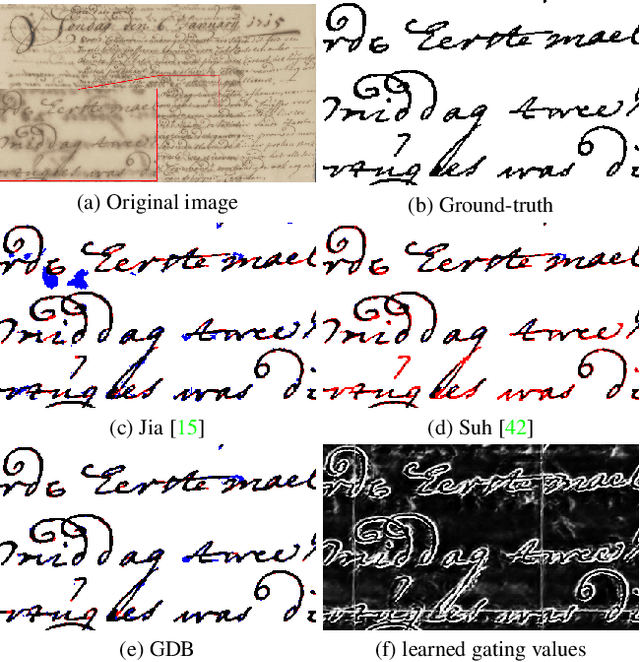

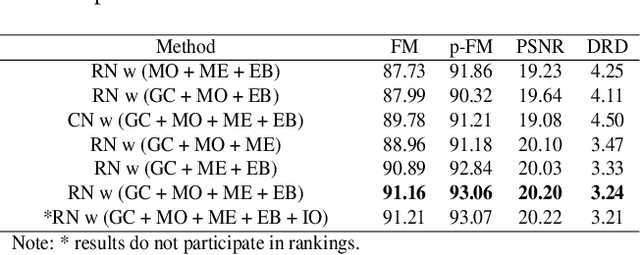

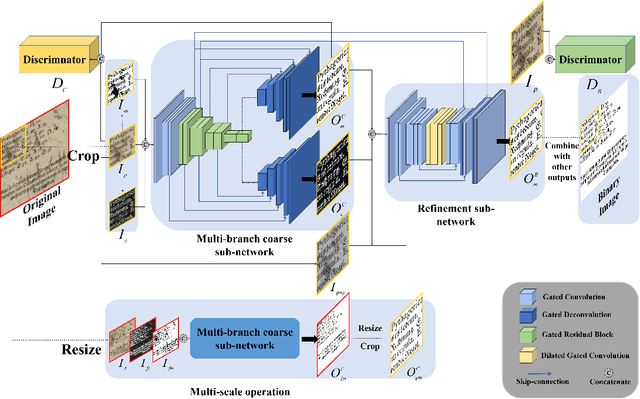

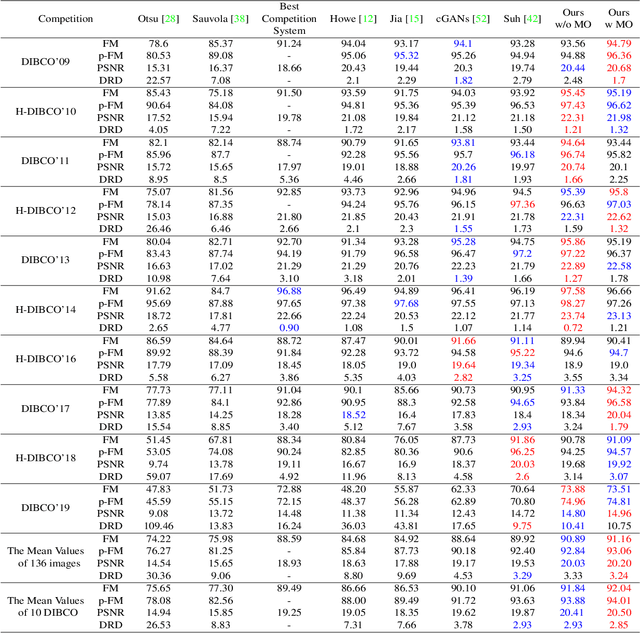

GDB: Gated convolutions-based Document Binarization

Feb 04, 2023

Abstract:Document binarization is a key pre-processing step for many document analysis tasks. However, existing methods cannot extract stroke edges finely, mainly due to the fair-treatment nature of vanilla convolutions and the extraction of stroke edges without adequate supervision by boundary-related information. In this paper, we formulate text extraction as the learning of gating values and propose an end-to-end gated convolutions-based network (GDB) to solve the problem of imprecise stroke edge extraction. The gated convolutions are applied to selectively extract the features of strokes with different attention. Our proposed framework consists of two stages. First, a coarse sub-network with an extra edge branch is trained to get more precise feature maps by feeding a priori mask and edge. Second, a refinement sub-network is cascaded to refine the output of the first stage by gated convolutions based on the sharp edge. For global information, GDB also contains a multi-scale operation to combine local and global features. We conduct comprehensive experiments on ten Document Image Binarization Contest (DIBCO) datasets from 2009 to 2019. Experimental results show that our proposed methods outperform the state-of-the-art methods in terms of all metrics on average and achieve top ranking on six benchmark datasets.
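
To make the idea of "learning gating values" concrete, here is a minimal single-channel NumPy sketch of a gated convolution in its commonly used form: one convolution produces features, a second produces a gate squashed to (0, 1) by a sigmoid, and the two are multiplied element-wise. This is an assumption about the general technique, not the GDB implementation; the function names, the `tanh` feature activation, and the random kernels are all hypothetical choices for illustration.

```python
import numpy as np

def conv2d_same(image, kernel):
    """Zero-padded 2-D cross-correlation with 'same' output size (odd kernel sizes assumed)."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="constant")
    height, width = image.shape
    out = np.zeros((height, width), dtype=np.float64)
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + height, dx:dx + width]
    return out

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gated_conv2d(image, feature_kernel, gate_kernel):
    """Gated convolution sketch: features scaled per pixel by a soft gate in (0, 1)."""
    features = np.tanh(conv2d_same(image, feature_kernel))  # activation is an arbitrary choice
    gate = sigmoid(conv2d_same(image, gate_kernel))         # per-pixel gating values
    return features * gate, gate

rng = np.random.default_rng(0)
image = rng.random((16, 16))
feature_kernel = rng.normal(size=(3, 3))  # would be learned in a real network
gate_kernel = rng.normal(size=(3, 3))     # would be learned in a real network

output, gate = gated_conv2d(image, feature_kernel, gate_kernel)
print(output.shape, float(gate.min()), float(gate.max()))
```

Unlike a vanilla convolution, which applies the same filter response everywhere, the gate lets the layer pass or suppress features per pixel, which is the property the abstract relies on for treating stroke and background pixels differently.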
