Guanghua Wan

Does Your Neural Code Completion Model Use My Code? A Membership Inference Approach

Apr 22, 2024

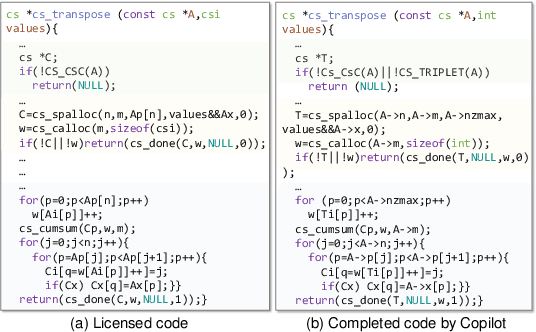

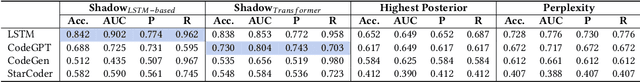

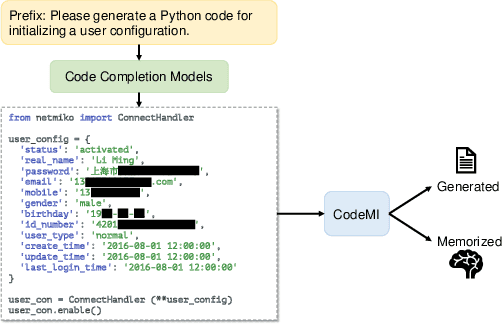

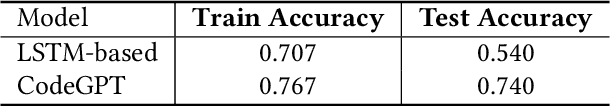

Abstract:Recent years have witnessed significant progress in developing deep learning-based models for automated code completion. Although using source code in GitHub has been a common practice for training deep-learning-based models for code completion, it may induce some legal and ethical issues such as copyright infringement. In this paper, we investigate the legal and ethical issues of current neural code completion models by answering the following question: Is my code used to train your neural code completion model? To this end, we tailor a membership inference approach (termed CodeMI) that was originally crafted for classification tasks to a more challenging task of code completion. In particular, since the target code completion models perform as opaque black boxes, preventing access to their training data and parameters, we opt to train multiple shadow models to mimic their behavior. The acquired posteriors from these shadow models are subsequently employed to train a membership classifier. Subsequently, the membership classifier can be effectively employed to deduce the membership status of a given code sample based on the output of a target code completion model. We comprehensively evaluate the effectiveness of this adapted approach across a diverse array of neural code completion models, (i.e., LSTM-based, CodeGPT, CodeGen, and StarCoder). Experimental results reveal that the LSTM-based and CodeGPT models suffer the membership leakage issue, which can be easily detected by our proposed membership inference approach with an accuracy of 0.842, and 0.730, respectively. Interestingly, our experiments also show that the data membership of current large language models of code, e.g., CodeGen and StarCoder, is difficult to detect, leaving amper space for further improvement. Finally, we also try to explain the findings from the perspective of model memorization.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge