Guangcheng Chen

TPT-Bench: A Large-Scale, Long-Term and Robot-Egocentric Dataset for Benchmarking Target Person Tracking

May 12, 2025

Abstract:Tracking a target person from robot-egocentric views is crucial for developing autonomous robots that provide continuous personalized assistance or collaboration in Human-Robot Interaction (HRI) and Embodied AI. However, most existing target person tracking (TPT) benchmarks are limited to controlled laboratory environments with few distractions, clean backgrounds, and short-term occlusions. In this paper, we introduce a large-scale dataset designed for TPT in crowded and unstructured environments, demonstrated through a robot-person following task. The dataset is collected by a human pushing a sensor-equipped cart while following a target person, capturing human-like following behavior and emphasizing long-term tracking challenges, including frequent occlusions and the need for re-identification from numerous pedestrians. It includes multi-modal data streams, including odometry, 3D LiDAR, IMU, panoptic, and RGB-D images, along with exhaustively annotated 2D bounding boxes of the target person across 35 sequences, both indoors and outdoors. Using this dataset and visual annotations, we perform extensive experiments with existing TPT methods, offering a thorough analysis of their limitations and suggesting future research directions.

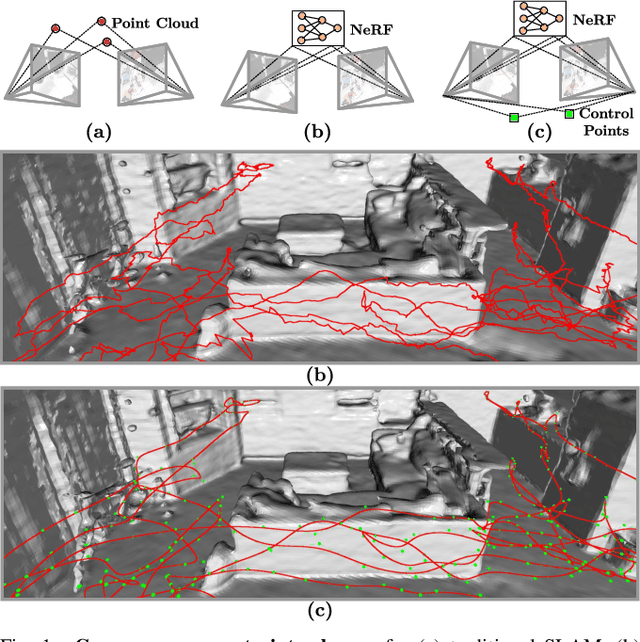

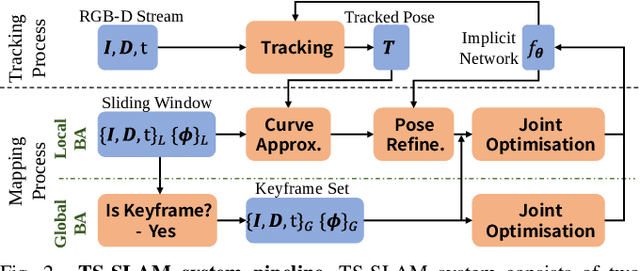

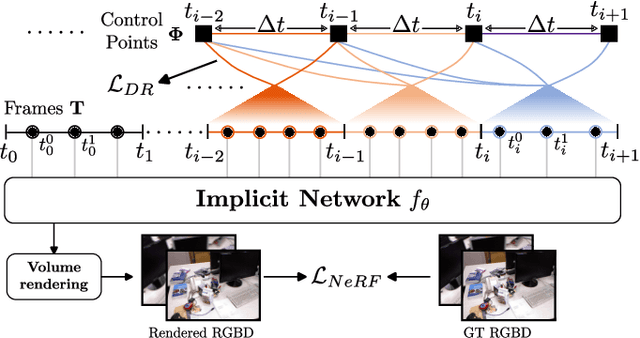

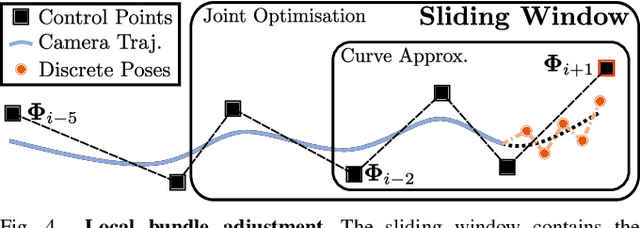

Optimizing NeRF-based SLAM with Trajectory Smoothness Constraints

Oct 11, 2024

Abstract:The joint optimization of Neural Radiance Fields (NeRF) and camera trajectories has been widely applied in SLAM tasks due to its superior dense mapping quality and consistency. NeRF-based SLAM learns camera poses using constraints imposed by the implicit map representation. A widely observed consequence of constraints of this form is jerky and physically unrealistic estimated camera motion, which in turn degrades map quality. To address this deficiency of current NeRF-based SLAM, we propose TS-SLAM (TS for Trajectory Smoothness). It introduces smoothness constraints on camera trajectories by representing them with uniform cubic B-splines, whose continuous acceleration guarantees smooth camera motion. Benefiting from the differentiability and local control properties of B-splines, TS-SLAM can incrementally learn the control points end-to-end using a sliding window paradigm. Additionally, we regularize camera trajectories with a dynamics prior to smooth them further. Experimental results demonstrate that TS-SLAM achieves superior trajectory accuracy and improves mapping quality compared with NeRF-based SLAM that does not employ these smoothness constraints.
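To illustrate the two B-spline properties the abstract relies on (continuous acceleration and local control), here is a minimal sketch of a uniform cubic B-spline over 3D positions only. It is not the TS-SLAM implementation: the function names `basis`, `basis_dd`, and `evaluate` are hypothetical, rotations are ignored, and the knot spacing is assumed to be one time unit.

```python
def basis(u):
    """Uniform cubic B-spline blending weights for local parameter u in [0, 1]."""
    return (
        (1 - u) ** 3 / 6,
        (3 * u**3 - 6 * u**2 + 4) / 6,
        (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6,
        u**3 / 6,
    )


def basis_dd(u):
    """Second derivatives of the blending weights with respect to u."""
    return (1 - u, 3 * u - 2, -3 * u + 1, u)


def evaluate(control_points, t, weights_fn=basis):
    """Evaluate the spline (or a derivative, via weights_fn) at time t.

    Assumes unit knot spacing, so t ranges over [0, len(control_points) - 3].
    Each segment depends on only four consecutive control points.
    """
    n_segments = len(control_points) - 3
    k = min(int(t), n_segments - 1)  # index of the active segment
    u = t - k                        # local parameter inside that segment
    w = weights_fn(u)
    dim = len(control_points[0])
    return tuple(
        sum(w[i] * control_points[k + i][d] for i in range(4))
        for d in range(dim)
    )
```

Because each segment touches only four control points, changing a later control point leaves earlier parts of the trajectory unchanged, which is what makes a sliding-window update practical. Passing `basis_dd` as `weights_fn` gives the acceleration, which agrees on both sides of every knot.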

PISR: Polarimetric Neural Implicit Surface Reconstruction for Textureless and Specular Objects

Sep 22, 2024

Abstract:Neural implicit surface reconstruction has achieved remarkable progress recently. Despite resorting to complex radiance modeling, state-of-the-art methods still struggle with textureless and specular surfaces. Unlike RGB images, polarization images can provide direct constraints on the azimuth angles of the surface normals. In this paper, we present PISR, a novel method that utilizes a geometrically accurate polarimetric loss to refine shape independently of appearance. In addition, PISR smooths surface normals in image space to eliminate severe shape distortions and leverages a hash-grid-based neural signed distance function to accelerate the reconstruction. Experimental results demonstrate that PISR achieves higher accuracy and robustness, with an L1 Chamfer distance of 0.5 mm and an F-score of 99.5% at 1 mm, while converging 4 to 30 times faster than previous polarimetric surface reconstruction methods.
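The two reported metrics can be sketched as follows. This is a brute-force illustration under common definitions, which may differ in detail from the paper's evaluation protocol: the Chamfer distance here is the average of the two directional mean nearest-neighbor Euclidean distances, and the F-score is the harmonic mean of precision and recall at a distance threshold `tau`. The function names are hypothetical.

```python
import math


def nearest_distances(src, dst):
    """Distance from each point in src to its nearest neighbor in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def chamfer_l1(pred, gt):
    """Symmetric Chamfer distance using unsquared Euclidean distances."""
    d_pred_to_gt = nearest_distances(pred, gt)
    d_gt_to_pred = nearest_distances(gt, pred)
    return 0.5 * (
        sum(d_pred_to_gt) / len(d_pred_to_gt)
        + sum(d_gt_to_pred) / len(d_gt_to_pred)
    )


def f_score(pred, gt, tau):
    """Harmonic mean of precision and recall at distance threshold tau."""
    precision = sum(d <= tau for d in nearest_distances(pred, gt)) / len(pred)
    recall = sum(d <= tau for d in nearest_distances(gt, pred)) / len(gt)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

With points expressed in millimeters, `f_score(pred, gt, tau=1.0)` corresponds to an F-score "at 1 mm". The quadratic-time nearest-neighbor search is only suitable for small point sets; a spatial index would be needed for dense reconstructions.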

Perspective Phase Angle Model for Polarimetric 3D Reconstruction

Jul 21, 2022

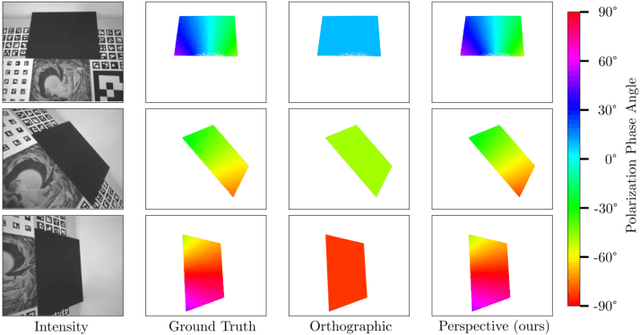

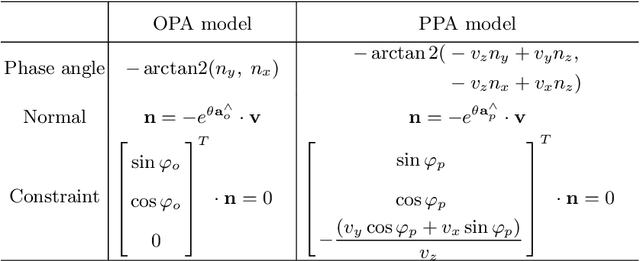

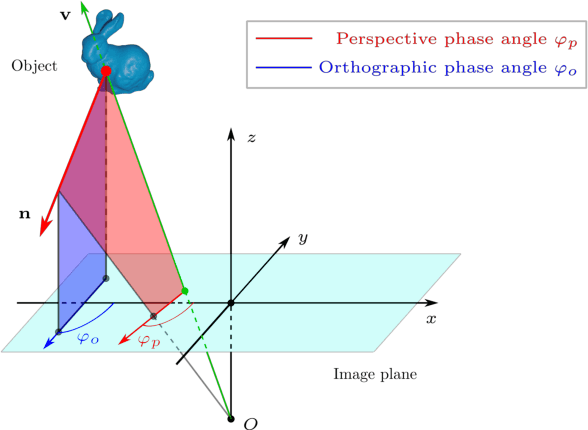

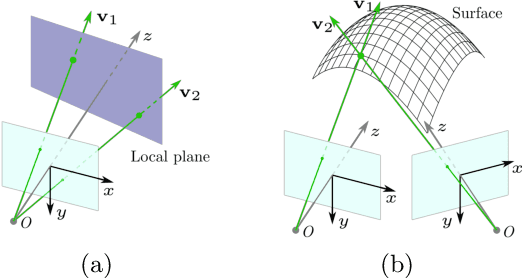

Abstract:Current polarimetric 3D reconstruction methods, including those in the well-established shape from polarization literature, are all developed under the orthographic projection assumption. With a large field of view, however, this assumption does not hold and may lead to significant reconstruction errors. To address this problem, we present the perspective phase angle (PPA) model, which is applicable to perspective cameras. Compared with the orthographic model, the proposed PPA model accurately describes the relationship between polarization phase angle and surface normal under perspective projection. In addition, the PPA model makes it possible to estimate surface normals from a single-view phase angle map and does not suffer from the so-called $\pi$-ambiguity problem. Experiments on real data show that the PPA model is more accurate for surface normal estimation with a perspective camera than the orthographic model.
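For context, the $\pi$-ambiguity mentioned above can be illustrated with the conventional orthographic relation, not the PPA model itself. The sketch assumes diffuse-dominant reflection, where the phase angle equals the azimuth of the surface normal modulo $\pi$; the function name is hypothetical.

```python
import math


def orthographic_phase_angle(normal):
    """Phase angle under the orthographic, diffuse-dominant assumption.

    The phase angle is the azimuth of the normal's image-plane projection,
    but it is only observable modulo pi.
    """
    nx, ny, _ = normal
    return math.atan2(ny, nx) % math.pi


# Two normals whose azimuths differ by pi give the same phase angle,
# so a single orthographic phase map cannot tell them apart.
normal_a = (0.3, 0.4, 0.866)
normal_b = (-0.3, -0.4, 0.866)
same_phase = math.isclose(
    orthographic_phase_angle(normal_a),
    orthographic_phase_angle(normal_b),
)
```

Here `same_phase` evaluates to true, which is the ambiguity that orthographic methods must resolve with additional cues.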

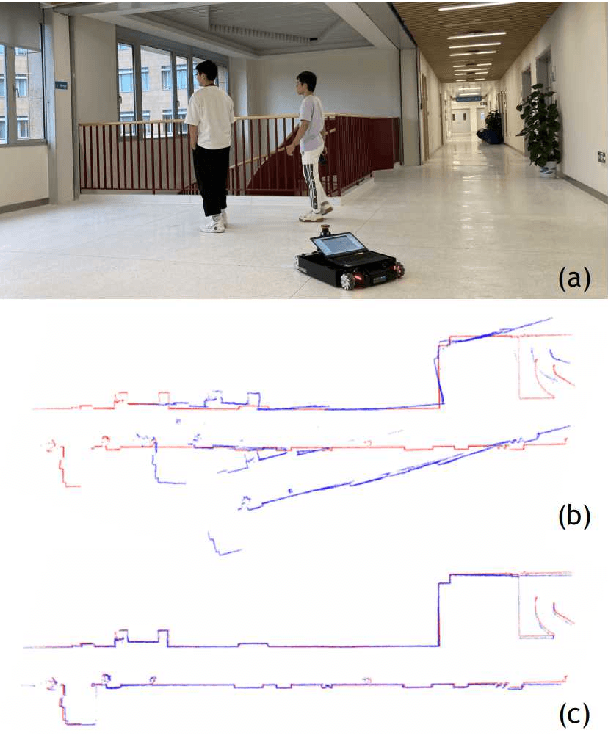

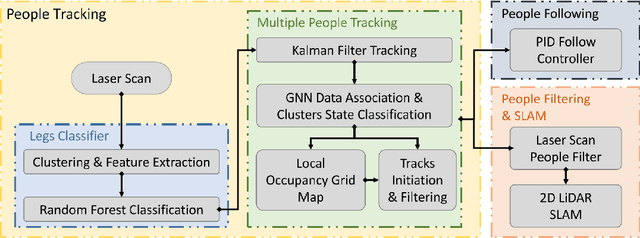

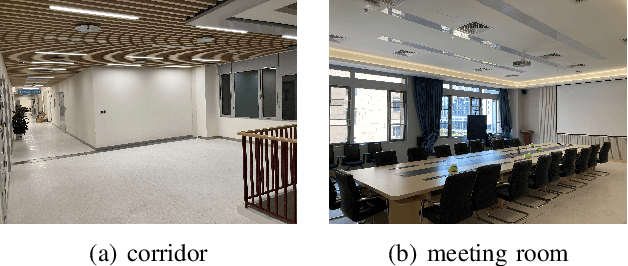

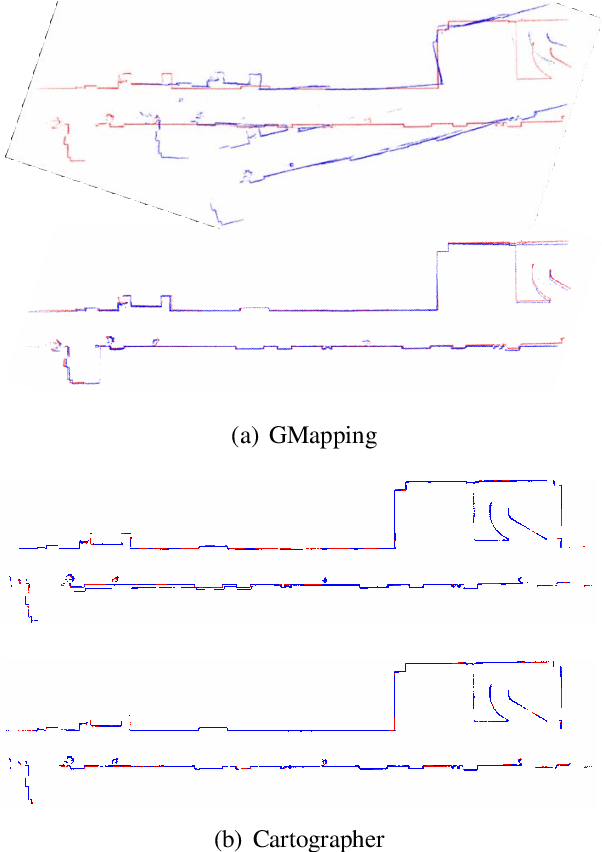

Mapping While Following: 2D LiDAR SLAM in Indoor Dynamic Environments with a Person Tracker

Apr 18, 2022

Abstract:2D LiDAR SLAM (Simultaneous Localization and Mapping) is widely used in indoor environments due to its stability and flexibility. However, its mapping procedure is usually operated with a joystick in static environments, whereas indoor environments are often dynamic, with moving objects such as people. A map generated in the presence of dynamic objects contains noisy points and is usually incomplete and distorted. To address this problem, we propose a framework for 2D-LiDAR-based SLAM without manual control that effectively excludes dynamic objects (people) and simplifies the process for a robot to map an environment. The framework includes three parts: people tracking, filtering, and following. We verify our proposed framework in experiments with two classic 2D-LiDAR-based SLAM algorithms in indoor environments. The results show that this framework is effective in handling dynamic objects and reducing the mapping error.

* Presented at 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO)
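The filtering step of the framework described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the person tracker reports a position `person_xy` in the sensor frame, and it simply discards scan points within a fixed `radius` of that position before the scan is passed to SLAM. The function and parameter names are hypothetical.

```python
import math


def filter_person_points(ranges, angle_min, angle_increment, person_xy, radius):
    """Return scan points (x, y) that lie outside a disc around the tracked person.

    ranges: beam distances in meters, ordered by increasing angle.
    angle_min, angle_increment: beam angles in radians.
    person_xy: tracked person position in the sensor frame (assumed input).
    radius: exclusion radius in meters (assumed tuning parameter).
    """
    kept = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue  # skip beams with no valid return
        theta = angle_min + i * angle_increment
        x, y = r * math.cos(theta), r * math.sin(theta)
        if math.hypot(x - person_xy[0], y - person_xy[1]) > radius:
            kept.append((x, y))
    return kept
```

A fixed exclusion radius is the simplest choice. It may also remove static structure close to the person, so the radius trades off leftover dynamic points against lost map detail.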
