Gregory Goldmacher

OmniMamba4D: Spatio-temporal Mamba for longitudinal CT lesion segmentation

Apr 13, 2025

Abstract: Accurate segmentation of longitudinal CT scans is important for monitoring tumor progression and evaluating treatment responses. However, existing 3D segmentation models focus solely on spatial information. To address this gap, we propose OmniMamba4D, a novel segmentation model designed for 4D medical images (3D images over time). OmniMamba4D utilizes a spatio-temporal tetra-orientated Mamba block to effectively capture both spatial and temporal features. Unlike traditional 3D models, which analyze single time points, OmniMamba4D processes 4D CT data, providing comprehensive spatio-temporal information on lesion progression. Evaluated on an internal dataset comprising 3,252 CT scans, OmniMamba4D achieves a competitive Dice score of 0.682, comparable to state-of-the-art (SOTA) models, while maintaining computational efficiency and better detecting disappeared lesions. This work demonstrates a new framework to leverage spatio-temporal information for longitudinal CT lesion segmentation.
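For readers unfamiliar with the Dice score quoted above, the following is a minimal sketch of how it can be computed on a toy 4D mask. It is not the authors' evaluation code: the function name `dice_score`, the representation of a mask as a set of `(t, z, y, x)` voxel tuples, and the convention of returning 1.0 when both masks are empty (a disappeared lesion correctly predicted as absent) are all assumptions made for illustration.

```python
def dice_score(pred, truth):
    """Dice overlap between two binary masks given as sets of voxel coordinates."""
    pred, truth = set(pred), set(truth)
    if not pred and not truth:
        # Assumed convention: two empty masks (for example, a lesion that has
        # disappeared and is correctly predicted absent) count as perfect agreement.
        return 1.0
    return 2.0 * len(pred & truth) / (len(pred) + len(truth))


def at_time(mask, t):
    """Restrict a 4D mask to the voxels of a single time point."""
    return {voxel for voxel in mask if voxel[0] == t}


# Toy 4D example: voxels are (t, z, y, x) tuples over two time points.
# The lesion is present at t=0 and has disappeared at t=1.
truth = {(0, 0, 0, 0), (0, 0, 0, 1), (0, 0, 1, 0), (0, 0, 1, 1)}
# The prediction misses one voxel at t=0 and adds a false positive at t=1.
pred = {(0, 0, 0, 0), (0, 0, 0, 1), (0, 0, 1, 0), (1, 0, 0, 0)}

overall = dice_score(pred, truth)
follow_up = dice_score(at_time(pred, 1), at_time(truth, 1))
print(f"overall Dice: {overall:.3f}, follow-up Dice: {follow_up:.3f}")
```

Scoring each time point separately, as in the last lines, is one way to make a false positive on a disappeared lesion visible: it drives the follow-up Dice to zero even when the overall score looks reasonable.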

Multi-dimension unified Swin Transformer for 3D Lesion Segmentation in Multiple Anatomical Locations

Sep 04, 2023

Abstract: In oncology research, accurate 3D segmentation of lesions from CT scans is essential for the modeling of lesion growth kinetics. However, following the RECIST criteria, radiologists routinely delineate each lesion only on the axial slice showing the largest transverse area, and delineate only a small number of lesions in 3D for research purposes. As a result, we have plenty of unlabeled 3D volumes and labeled 2D images, but scarce labeled 3D volumes, which makes training a deep-learning 3D segmentation model a challenging task. In this work, we propose a novel model, denoted a multi-dimension unified Swin transformer (MDU-ST), for 3D lesion segmentation. The MDU-ST consists of a Shifted-window transformer (Swin-transformer) encoder and a convolutional neural network (CNN) decoder, allowing it to adapt to 2D and 3D inputs and learn the corresponding semantic information in the same encoder. Based on this model, we introduce a three-stage framework: 1) leveraging a large amount of unlabeled 3D lesion volumes through self-supervised pretext tasks to learn the underlying pattern of lesion anatomy in the Swin-transformer encoder; 2) fine-tuning the Swin-transformer encoder to perform 2D lesion segmentation with 2D RECIST slices to learn slice-level segmentation information; 3) further fine-tuning the Swin-transformer encoder to perform 3D lesion segmentation with labeled 3D volumes. The network's performance is evaluated by the Dice similarity coefficient (DSC) and Hausdorff distance (HD) using an internal 3D lesion dataset with 593 lesions extracted from multiple anatomical locations. The proposed MDU-ST demonstrates significant improvement over the competing models. The proposed method can be used to conduct automated 3D lesion segmentation to assist radiomics and tumor growth modeling studies. This paper has been accepted by the IEEE International Symposium on Biomedical Imaging (ISBI) 2023.
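The Hausdorff distance (HD) named above as an evaluation metric can be illustrated with a small brute-force sketch. This is a generic textbook formulation, not the evaluation code used in the paper: the function names, the `spacing` parameter (assumed voxel size in millimetres along each axis), and the use of raw voxel coordinates rather than extracted surface points are assumptions for illustration.

```python
import math


def to_physical(points, spacing):
    """Scale integer voxel indices (z, y, x) by the voxel spacing."""
    return [tuple(c * s for c, s in zip(p, spacing)) for p in points]


def directed_hausdorff(a, b):
    """Largest distance from any point in a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a, b, spacing=(1.0, 1.0, 1.0)):
    """Symmetric Hausdorff distance between two non-empty voxel sets."""
    if not a or not b:
        raise ValueError("Hausdorff distance is undefined for an empty mask")
    a, b = to_physical(a, spacing), to_physical(b, spacing)
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy masks: the prediction contains one stray voxel three voxels from the truth.
truth = [(0, 0, 0), (0, 0, 1)]
pred = [(0, 0, 0), (0, 3, 1)]
print(hausdorff_distance(pred, truth))
```

Unlike the DSC, which measures volume overlap, the HD is dominated by the single worst boundary error, which is why the two metrics are often reported together. The brute-force loop is quadratic in the number of points, so it is only suitable for small examples like this one.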

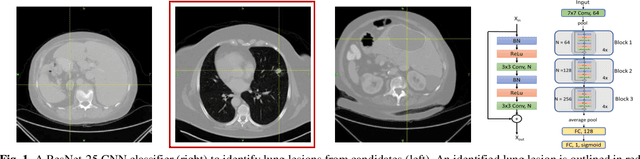

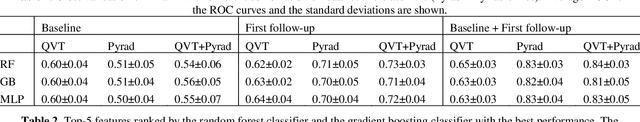

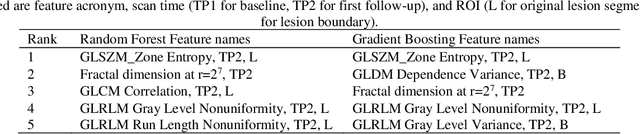

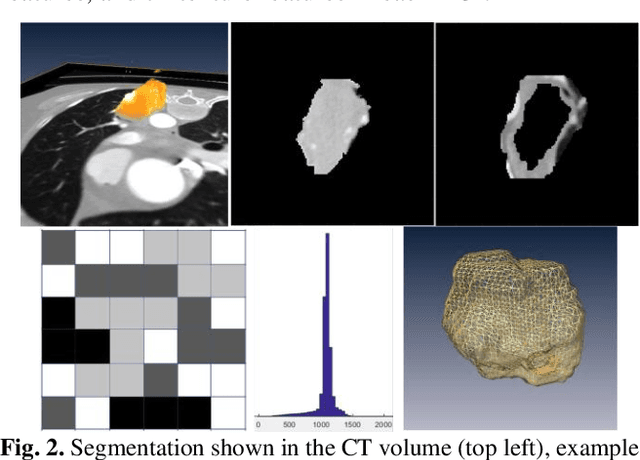

A deep learning-facilitated radiomics solution for the prediction of lung lesion shrinkage in non-small cell lung cancer trials

Mar 05, 2020

Abstract: Herein we propose a deep learning-based approach for the prediction of lung lesion response based on radiomic features extracted from clinical CT scans of patients in non-small cell lung cancer trials. The approach starts with the classification of lung lesions from the set of primary and metastatic lesions at various anatomic locations. Focusing on the lung lesions, we perform automatic segmentation to extract their 3D volumes. Radiomic features are then extracted from the lesion on the pre-treatment scan and the first follow-up scan to predict which lesions will shrink at least 30% in diameter during treatment (either Pembrolizumab or combinations of chemotherapy and Pembrolizumab), which is defined as a partial response by the Response Evaluation Criteria In Solid Tumors (RECIST) guidelines. A 5-fold cross-validation on the training set led to an AUC of 0.84 +/- 0.03, and the prediction on the testing dataset reached an AUC of 0.73 +/- 0.02 for the outcome of 30% diameter shrinkage.
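The prediction target described above, a diameter decrease of at least 30%, can be written as a simple labelling rule. The sketch below is an assumption about how such a per-lesion label could be derived, not the authors' code: the function names, the millimetre units, and the choice of the pre-treatment diameter as the reference are illustrative.

```python
def relative_diameter_change(baseline_mm, on_treatment_mm):
    """Fractional change in lesion diameter relative to the pre-treatment scan."""
    if baseline_mm <= 0:
        raise ValueError("baseline diameter must be positive")
    return (on_treatment_mm - baseline_mm) / baseline_mm


def shrinks_at_least_30_percent(baseline_mm, on_treatment_mm, threshold=0.30):
    """Binary outcome label: True if the diameter decreased by at least the threshold."""
    return relative_diameter_change(baseline_mm, on_treatment_mm) <= -threshold


# Hypothetical lesions: (pre-treatment diameter, diameter during treatment) in mm.
lesions = [(20.0, 13.0), (20.0, 15.0), (32.0, 40.0)]
labels = [shrinks_at_least_30_percent(b, f) for b, f in lesions]
print(labels)
```

A classifier trained on radiomic features would then be scored against labels of this kind, for example with the AUC reported in the abstract.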

A Progressively-trained Scale-invariant and Boundary-aware Deep Neural Network for the Automatic 3D Segmentation of Lung Lesions

Nov 11, 2018

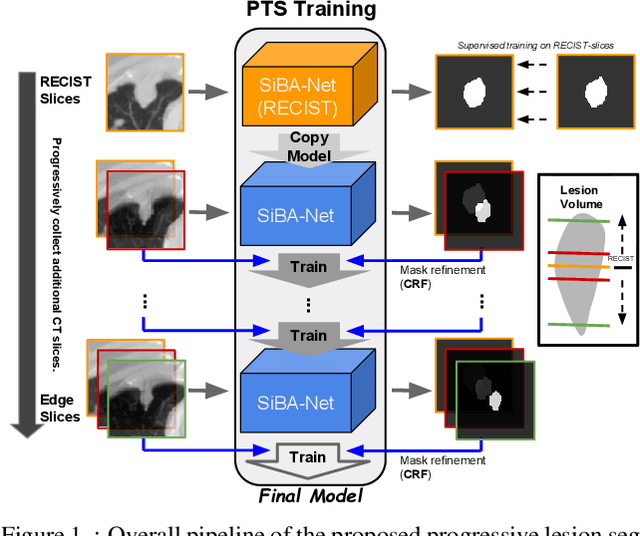

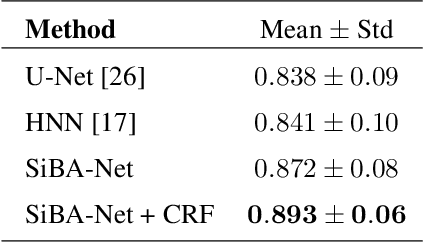

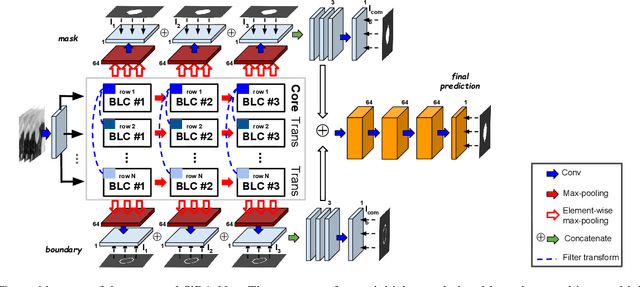

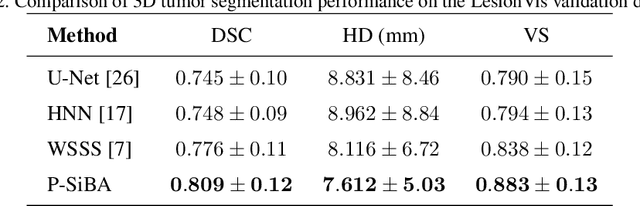

Abstract: Volumetric segmentation of lesions on CT scans is important for many types of analysis, including lesion growth kinetic modeling in clinical trials and machine learning of radiomic features. Manual segmentation is laborious and impractical for large-scale use. For routine clinical use, and in clinical trials that apply the Response Evaluation Criteria In Solid Tumors (RECIST), clinicians typically outline the boundaries of a lesion on a single slice to extract diameter measurements. In this work, we have collected a large-scale database, named LesionVis, with pixel-wise manual 2D lesion delineations on the RECIST-slices. To extend the 2D segmentations to 3D, we propose a volumetric progressive lesion segmentation (PLS) algorithm to automatically segment the 3D lesion volume from 2D delineations using a scale-invariant and boundary-aware deep convolutional network (SiBA-Net). The SiBA-Net copes with the size transition of a lesion when the PLS progresses from the RECIST-slice to the edge-slices, as well as when performing longitudinal assessment of lesions whose size changes over multiple time points. The proposed PLS-SiBA-Net (P-SiBA) approach is assessed on the lung lesion cases from LesionVis. Our experimental results demonstrate that the P-SiBA approach achieves a mean Dice similarity coefficient (DSC) of 0.81, which significantly improves 3D segmentation accuracy compared with previously proposed approaches (highest mean DSC of 0.78 on LesionVis). In summary, by leveraging the limited 2D delineations on the RECIST-slices, P-SiBA is an effective semi-supervised approach to produce accurate lesion segmentations in 3D.
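The idea of progressing from the RECIST-slice to the edge-slices can be sketched as a simple propagation loop. This is only a schematic reading of the abstract, not the PLS algorithm or SiBA-Net itself: `propagate_from_key_slice`, the `segment_slice` callback, the stopping rule (stop when a slice yields an empty mask), and the toy segmenter that stands in for a trained network are all hypothetical.

```python
def propagate_from_key_slice(num_slices, key_index, key_mask, segment_slice):
    """Grow a 3D mask outward from one annotated slice.

    segment_slice(z, prior_mask) is a caller-supplied (hypothetical) 2D segmenter
    returning a set of (y, x) pixels for slice z, given the neighbouring slice's mask.
    """
    masks = {key_index: set(key_mask)}
    for step in (1, -1):  # walk towards one edge of the lesion, then the other
        prior = masks[key_index]
        z = key_index + step
        while 0 <= z < num_slices:
            current = segment_slice(z, prior)
            if not current:  # lesion no longer visible: stop in this direction
                break
            masks[z] = current
            prior = current
            z += step
    return masks


# Toy data: candidate lesion pixels per slice; slice 3 plays the RECIST-slice.
toy_lesion = {
    1: {(2, 2)},
    2: {(2, 2), (2, 3)},
    3: {(2, 2), (2, 3), (3, 2), (3, 3)},
    4: {(2, 2), (3, 3)},
}


def toy_segmenter(z, prior_mask):
    # Stand-in for a trained network: keep candidate pixels that overlap the prior mask.
    return toy_lesion.get(z, set()) & prior_mask


volume = propagate_from_key_slice(6, 3, toy_lesion[3], toy_segmenter)
print(sorted(volume))
```

In this toy run the mask shrinks as the loop moves away from the key slice, which is the size transition that the abstract says the scale-invariant network is designed to handle.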
