Gregory Dobler

There's no difference: Convolutional Neural Networks for transient detection without template subtraction

Mar 14, 2022

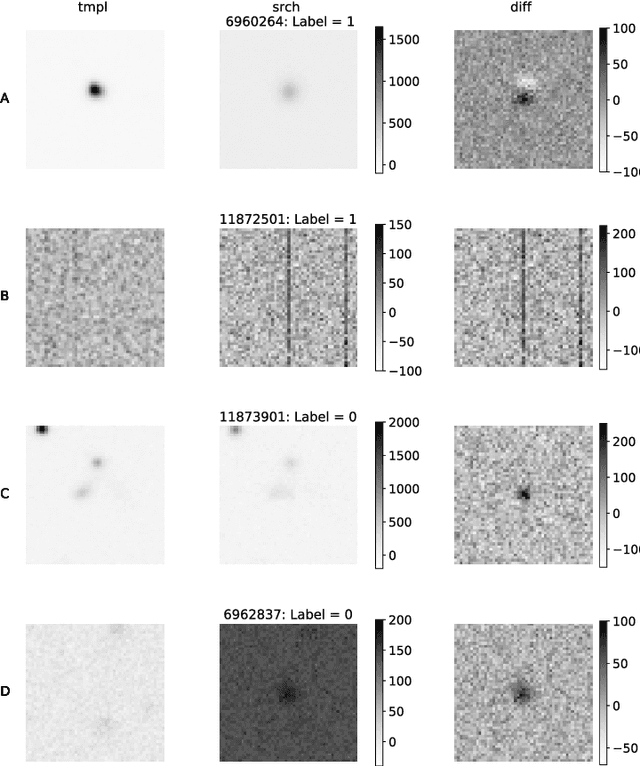

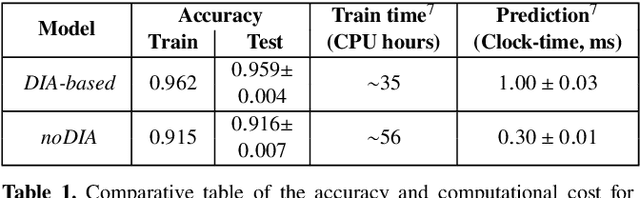

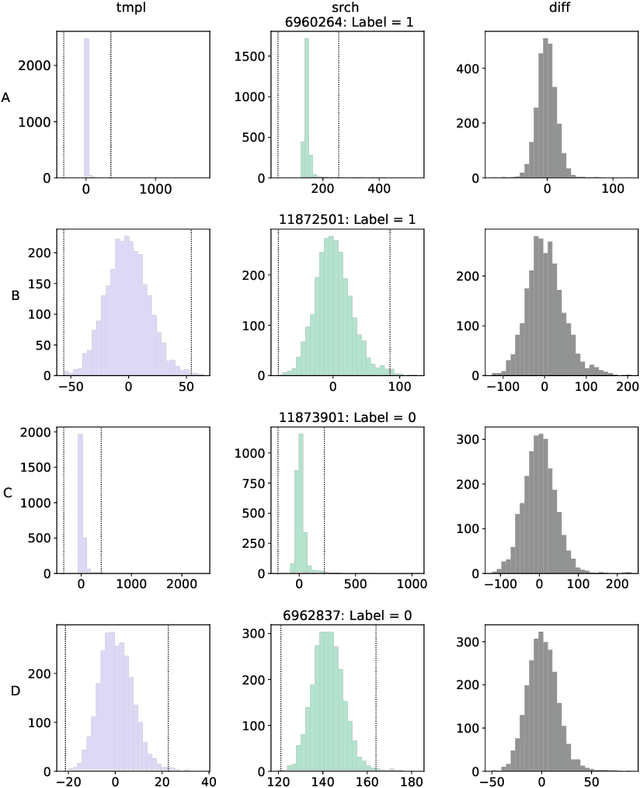

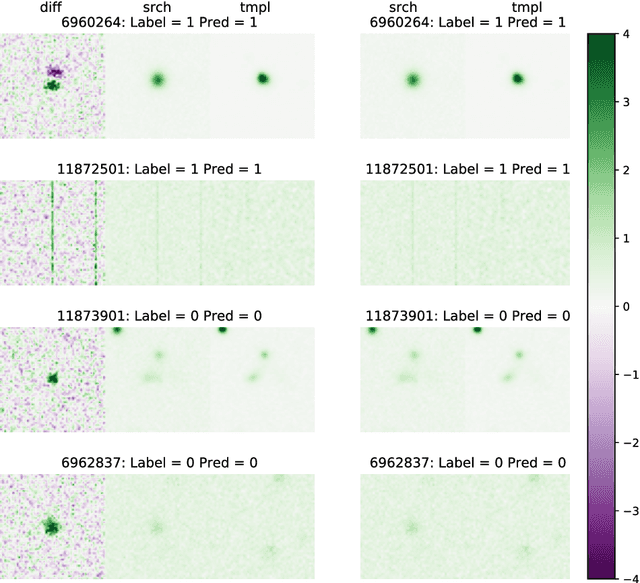

Abstract: We present a Convolutional Neural Network (CNN) model for the separation of astrophysical transients from image artifacts, a task known as "real-bogus" classification, that does not rely on Difference Image Analysis (DIA), a computationally expensive process involving image matching on small spatial scales in large volumes of data. We explore the use of CNNs to (1) automate the "real-bogus" classification and (2) reduce the computational costs of transient discovery. We compare the efficiency of two CNNs with similar architectures, one that uses "image triplets" (template, search, and the corresponding difference image) and one that takes as input the template and search images only. Without substantially changing the model architecture or retuning the hyperparameters to the new input, we observe only a small decrease in model efficiency (97% to 92% accuracy). We further investigate how the model that does not receive the difference image learns the required information from the template and search images by exploring the saliency maps. Our work demonstrates that (1) CNNs are excellent models for "real-bogus" classification that rely exclusively on the imaging data and require no feature engineering; (2) high-accuracy models can be built without the need to construct difference images. Because trained neural networks can generate predictions at minimal computational cost, we argue that future implementations of this methodology could dramatically reduce the computational costs of detecting genuine transients in synoptic surveys like Rubin Observatory's Legacy Survey of Space and Time by bypassing the DIA step entirely.
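The difference between the two input configurations described in the abstract can be illustrated with a minimal sketch of how the image cutouts might be stacked into channels before being passed to a CNN. The function name `build_input`, the 51x51 cutout size, and the channels-last layout are assumptions made for illustration; the abstract does not specify them, and this is not the authors' pipeline.

```python
import numpy as np

def build_input(template, search, difference=None):
    """Stack image cutouts into a channels-last array for a CNN.

    With `difference=None` the result has two channels (template, search),
    i.e. the DIA-free configuration. Passing a precomputed difference image
    yields the three-channel "image triplet" configuration.
    """
    channels = [template, search]
    if difference is not None:
        channels.append(difference)
    return np.stack(channels, axis=-1)

# Hypothetical 51x51 cutouts filled with placeholder values.
template = np.zeros((51, 51))
search = np.ones((51, 51))
placeholder_diff = search - template  # stand-in only, not a real DIA product

two_channel = build_input(template, search)
triplet = build_input(template, search, difference=placeholder_diff)
print(two_channel.shape, triplet.shape)
```

Under these assumptions, the only change between the two models at the input layer is the number of channels, which is consistent with the abstract's statement that the architecture was not substantially changed.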

Do cities have a unique magnetic pulse?

Feb 12, 2022

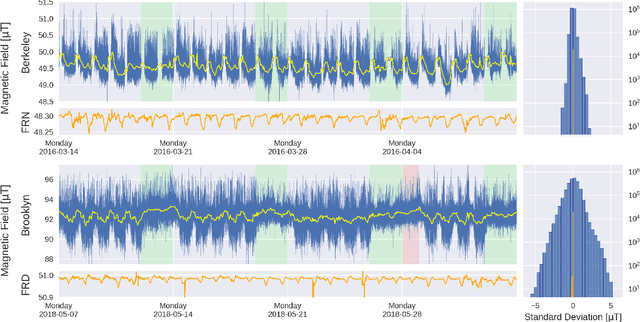

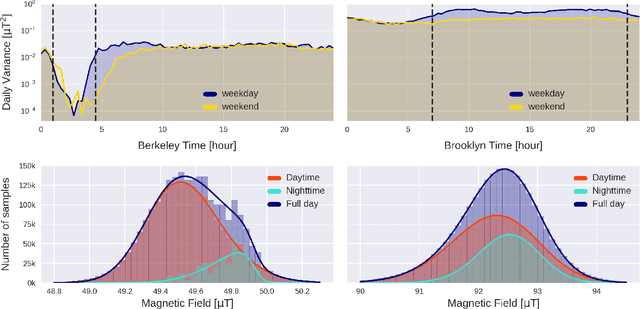

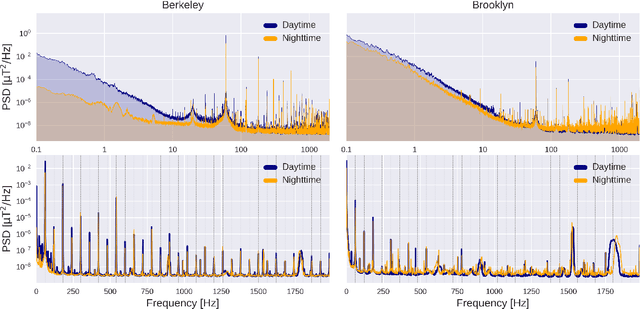

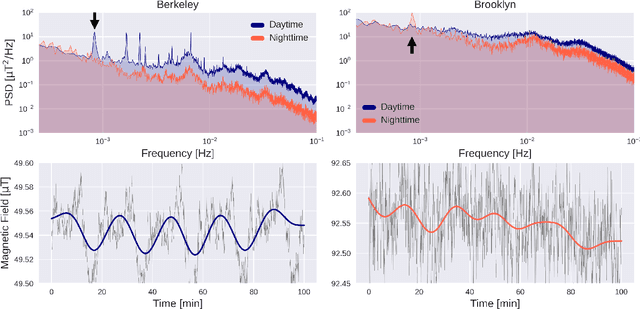

Abstract: We present a comparative analysis of urban magnetic fields between two American cities: Berkeley (California) and the borough of Brooklyn in New York City (New York). Our analysis uses data taken over a four-week period during which magnetic field data were continuously recorded using a fluxgate magnetometer of 70 pT/$\sqrt{\mathrm{Hz}}$ sensitivity. We identified significant differences in the magnetic signatures. In particular, we noticed that Berkeley reaches near-zero magnetic field activity at night, whereas magnetic activity in Brooklyn continues during nighttime. We also present auxiliary measurements acquired using magnetoresistive vector magnetometers (VMR), with a sensitivity of 300 pT/$\sqrt{\mathrm{Hz}}$, and demonstrate how cross-correlation and frequency-domain analysis, combined with data filtering, can be used to extract urban magnetometry signals and study local anthropogenic activities. Finally, we discuss the potential of using magnetometer networks to characterize the global magnetic field of cities and give directions for future development.
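As a rough illustration of the cross-correlation step mentioned in the abstract, the sketch below estimates the time lag between two sensor records. The helper `best_lag` and the synthetic signals are assumptions for illustration only; the abstract does not describe the authors' actual processing chain or filtering.

```python
import numpy as np

def best_lag(x, y):
    """Return the lag (in samples) at which x best matches y.

    A positive result means x is a delayed copy of y.
    """
    x = x - x.mean()
    y = y - y.mean()
    cc = np.correlate(x, y, mode="full")
    # In "full" mode the lags run from -(len(y) - 1) to len(x) - 1.
    lags = np.arange(-(len(y) - 1), len(x))
    return int(lags[np.argmax(cc)])

# Synthetic stand-in for two magnetometer channels: the second sensor
# records the same signal 5 samples later (circular shift for simplicity).
rng = np.random.default_rng(0)
sensor_a = rng.normal(size=500)
sensor_b = np.roll(sensor_a, 5)

lag = best_lag(sensor_b, sensor_a)
print(lag)
```

In practice the records would likely be band-pass filtered before correlation so that the peak reflects the signal of interest rather than broadband noise.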

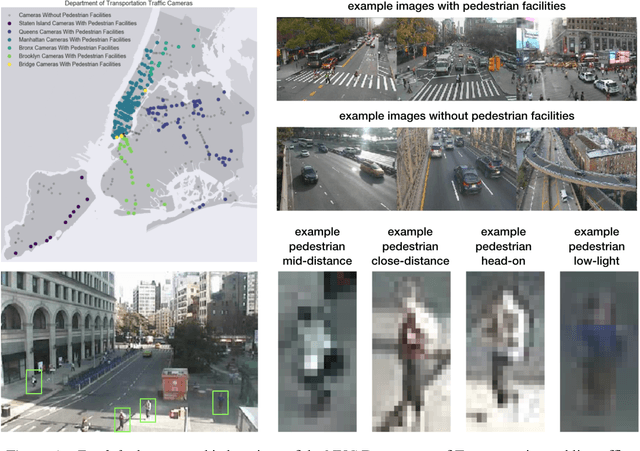

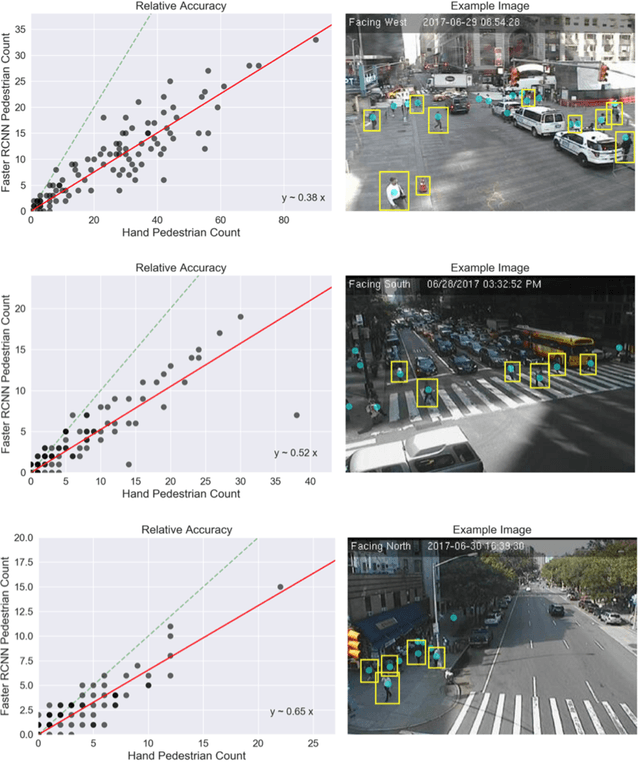

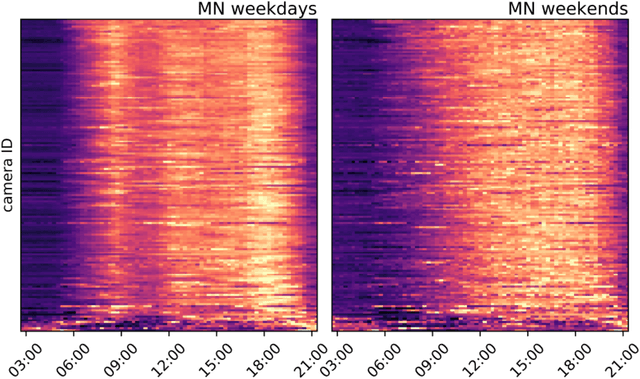

Patterns of Urban Foot Traffic Dynamics

Oct 06, 2019

Abstract: Using publicly available traffic camera data in New York City, we quantify time-dependent patterns in aggregate pedestrian foot traffic. These patterns exhibit repeatable diurnal behaviors that differ for weekdays and weekends but are broadly consistent across neighborhoods in the borough of Manhattan. Weekday patterns contain a characteristic 3-peak structure with increased foot traffic around 9:00am, 12:00-1:00pm, and 5:00pm, aligned with the "9-to-5" work day in which pedestrians are on the street during their morning commute, during lunch hour, and then during their evening commute. Weekend days do not show a peaked structure; instead, foot traffic increases steadily until sunset. Our study period of June 28, 2017 to September 11, 2017 contains two holidays, the 4th of July and Labor Day, and their foot traffic patterns are quantitatively similar to weekend days even though they fell on weekdays. Projecting all days in our study period onto the weekday/weekend phase space (by regressing against the average weekday and weekend day), we find that Friday foot traffic can be represented as a mixture of both the 3-peak weekday structure and the non-peaked weekend structure. We also show that anomalies in the foot traffic patterns can be used for detection of events and network-level disruptions. Finally, we show that clustering of foot traffic time series generates associations between cameras that are spatially aligned with Manhattan neighborhood boundaries, indicating that foot traffic dynamics encode information about neighborhood character.
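The projection onto the weekday/weekend phase space can be sketched as an ordinary least-squares fit of one day's profile against the two average profiles. Everything below is an assumption for illustration: the function name `project_day`, the hourly sampling, and the synthetic templates (three Gaussian bumps for weekdays, a ramp for weekends) are not taken from the paper's data.

```python
import numpy as np

def project_day(day, weekday_avg, weekend_avg):
    """Least-squares coefficients (a, b) with day ~ a*weekday_avg + b*weekend_avg."""
    design = np.column_stack([weekday_avg, weekend_avg])
    coeffs, *_ = np.linalg.lstsq(design, day, rcond=None)
    return coeffs

hours = np.arange(24)

def bump(center, width=1.0):
    """Gaussian bump centered on a given hour (synthetic placeholder shape)."""
    return np.exp(-0.5 * ((hours - center) / width) ** 2)

# Synthetic templates: 3-peak weekday profile and steadily rising weekend profile.
weekday_avg = bump(9) + bump(12.5) + bump(17)
weekend_avg = np.clip((hours - 6) / 14, 0, 1)

# A synthetic "Friday" constructed as a known mixture of the two templates.
friday = 0.6 * weekday_avg + 0.4 * weekend_avg

a, b = project_day(friday, weekday_avg, weekend_avg)
print(a, b)  # approximately 0.6 and 0.4 by construction
```

Each day then maps to a point (a, b); under this sketch, days dominated by the weekday coefficient would look like work days, days dominated by the weekend coefficient would look like weekends or holidays, and mixed days would fall in between.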

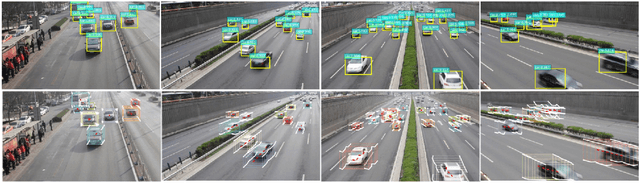

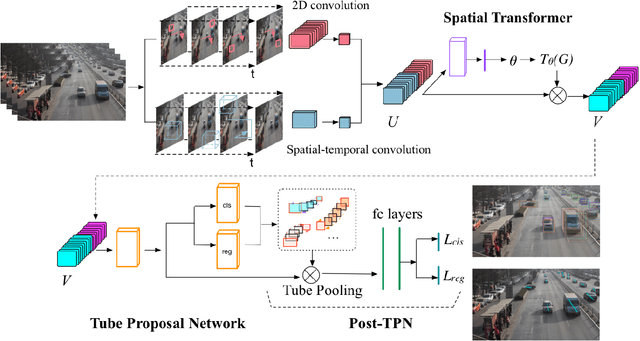

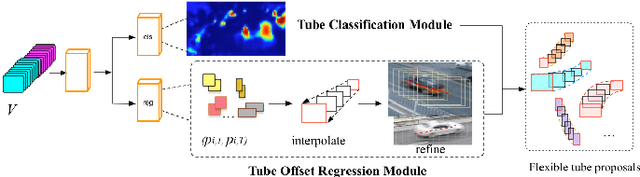

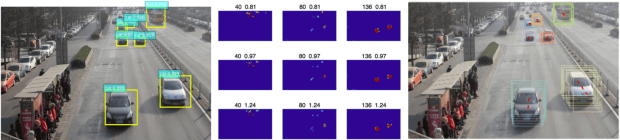

TrackNet: Simultaneous Object Detection and Tracking and Its Application in Traffic Video Analysis

Feb 04, 2019

Abstract: Object detection and object tracking are usually treated as two separate processes. Significant progress has been made for object detection in 2D images using deep learning networks. The usual tracking-by-detection pipeline for object tracking requires that the object is successfully detected in the first frame and all subsequent frames, and tracking is done by associating detection results. Performing object detection and object tracking through a single network remains a challenging open question. We propose a novel network structure named trackNet that can directly detect a 3D tube enclosing a moving object in a video segment by extending the Faster R-CNN framework. A Tube Proposal Network (TPN) inside the trackNet is proposed to predict the objectness of each candidate tube and the location parameters specifying the bounding tube. The proposed framework is applicable to detecting and tracking any object; in this paper, we focus on its application to traffic video analysis. The proposed model is trained and tested on UA-DETRAC, a large traffic video dataset available for multi-vehicle detection and tracking, and obtains very promising results.
