Gregor Kobsik

Learning Fine-to-Coarse Cuboid Shape Abstraction

Feb 03, 2025

Abstract: The abstraction of 3D objects with simple geometric primitives like cuboids makes it possible to infer structural information from complex geometry. It is important for 3D shape understanding, structural analysis and geometric modeling. We introduce a novel fine-to-coarse unsupervised learning approach to abstract collections of 3D shapes. Our architectural design allows us to reduce the number of primitives from hundreds (fine reconstruction) to only a few (coarse abstraction) during training. This allows our network to optimize the reconstruction error and adhere to a user-specified number of primitives per shape while simultaneously learning a consistent structure across the whole collection of data. We achieve this through our abstraction loss formulation, which increasingly penalizes redundant primitives. Furthermore, we introduce a reconstruction loss formulation to account not only for surface approximation but also volume preservation. Combining both contributions allows us to represent 3D shapes more precisely with fewer cuboid primitives than previous work. We evaluate our method on collections of man-made and humanoid shapes, comparing with previous state-of-the-art learning methods on commonly used benchmarks. Our results confirm an improvement over previous cuboid-based shape abstraction techniques. Furthermore, we demonstrate our cuboid abstraction in downstream tasks like clustering, retrieval, and partial symmetry detection.

Multidimensional Byte Pair Encoding: Shortened Sequences for Improved Visual Data Generation

Nov 15, 2024

Abstract: In language processing, transformers benefit greatly from text being condensed. This is achieved through a larger vocabulary that captures word fragments instead of plain characters. This is often done with Byte Pair Encoding. In the context of images, tokenisation of visual data is usually limited to regular grids obtained from quantisation methods, without global content awareness. Our work improves tokenisation of visual data by bringing Byte Pair Encoding from 1D to multiple dimensions, as a complementary add-on to existing compression. We achieve this through counting constellations of token pairs and replacing the most frequent token pair with a newly introduced token. The multidimensionality only increases the computation time by a factor of 2 for images, making it applicable even to large datasets like ImageNet within minutes on consumer hardware. This is a lossless preprocessing step. Our evaluation shows improved training and inference performance of transformers on visual data achieved by compressing frequent constellations of tokens: The resulting sequences are shorter, with more uniformly distributed information content, e.g. condensing empty regions in an image into single tokens. As our experiments show, these condensed sequences are easier to process. We additionally introduce a strategy to amplify this compression further by clustering the vocabulary.
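The counting-and-replacing step described in the abstract can be illustrated with a minimal sketch of one merge round on a 2D token grid. This is not the authors' implementation: the `ABSORBED` marker, the function names, and the restriction to right and down neighbours are all assumptions made for illustration.

```python
from collections import Counter

ABSORBED = -1  # hypothetical marker: cell was merged into a neighbouring token


def count_constellations(grid, offsets=((0, 1), (1, 0))):
    """Count (token_a, token_b, offset) occurrences over a 2D token grid.

    Assumption: only the right (0, 1) and down (1, 0) neighbours are considered.
    """
    counts = Counter()
    rows, cols = len(grid), len(grid[0])
    for r in range(rows):
        for c in range(cols):
            a = grid[r][c]
            if a == ABSORBED:
                continue
            for dr, dc in offsets:
                r2, c2 = r + dr, c + dc
                if r2 < rows and c2 < cols and grid[r2][c2] != ABSORBED:
                    counts[(a, grid[r2][c2], (dr, dc))] += 1
    return counts


def merge_most_frequent(grid, new_token):
    """Replace the most frequent pair constellation with `new_token`.

    Returns the new grid and the learned rule (a, b, offset), or None as the
    rule if no pair is left to merge. `new_token` must be an unused token id.
    """
    counts = count_constellations(grid)
    if not counts:
        return grid, None
    (a, b, (dr, dc)), _ = counts.most_common(1)[0]
    out = [row[:] for row in grid]
    rows, cols = len(out), len(out[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if r2 < rows and c2 < cols and out[r][c] == a and out[r2][c2] == b:
                out[r][c] = new_token   # first cell carries the merged token
                out[r2][c2] = ABSORBED  # second cell no longer emits a token
    return out, (a, b, (dr, dc))


def flatten(grid):
    """Row-major token sequence, skipping absorbed cells."""
    return [t for row in grid for t in row if t != ABSORBED]
```

For a grid whose first two rows are all `0` and whose last row is `1 2 1 2`, one round merges horizontal `0 0` pairs and shortens the flattened sequence from 12 to 8 tokens. Because each rule records both tokens and their offset, the step can be inverted, which is in line with the lossless property the abstract states.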

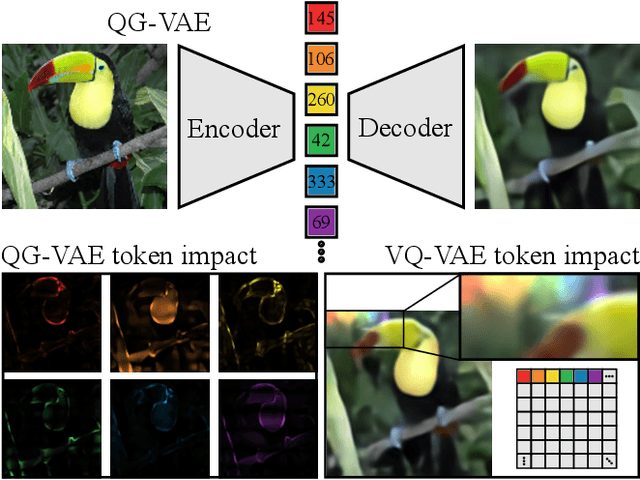

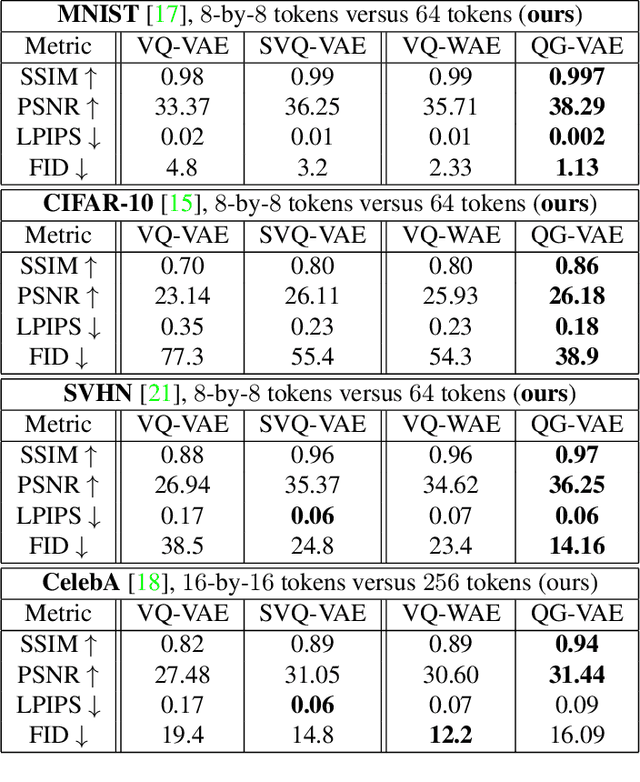

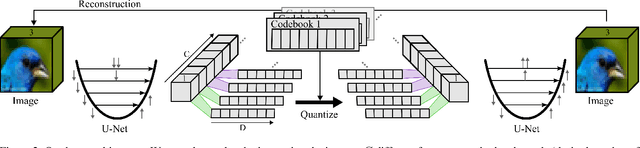

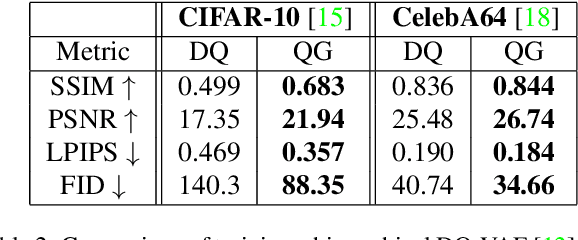

Quantised Global Autoencoder: A Holistic Approach to Representing Visual Data

Jul 16, 2024

Abstract: In quantised autoencoders, images are usually split into local patches, each encoded by one token. This representation is redundant in the sense that the same number of tokens is spent per region, regardless of the visual information content in that region. Adaptive discretisation schemes like quadtrees are applied to allocate tokens for patches with varying sizes, but this just varies the region of influence for a token, which nevertheless remains a local descriptor. Modern architectures add an attention mechanism to the autoencoder, which infuses some degree of global information into the local tokens. Despite the global context, tokens are still associated with a local image region. In contrast, our method is inspired by spectral decompositions, which transform an input signal into a superposition of global frequencies. Taking the data-driven perspective, we learn custom basis functions corresponding to the codebook entries in our VQ-VAE setup. Furthermore, a decoder combines these basis functions in a non-linear fashion, going beyond the simple linear superposition of spectral decompositions. We can achieve this global description with an efficient transpose operation between features and channels and demonstrate our performance on compression.

Partial Symmetry Detection for 3D Geometry using Contrastive Learning with Geodesic Point Cloud Patches

Dec 13, 2023

Abstract: Symmetry detection, especially partial and extrinsic symmetry, is essential for various downstream tasks, like 3D geometry completion, segmentation, compression and structure-aware shape encoding or generation. In order to detect partial extrinsic symmetries, we propose to learn rotation, reflection, translation and scale invariant local shape features for geodesic point cloud patches via contrastive learning, which are robust across multiple classes and generalize over different datasets. We show that our approach is able to extract multiple valid solutions for this ambiguous problem. Furthermore, we introduce a novel benchmark test for partial extrinsic symmetry detection to evaluate our method. Lastly, we incorporate the detected symmetries together with a region growing algorithm to demonstrate a downstream task with the goal of computing symmetry-aware partitions of 3D shapes. To our knowledge, we are the first to propose a self-supervised data-driven method for partial extrinsic symmetry detection.

Octree Transformer: Autoregressive 3D Shape Generation on Hierarchically Structured Sequences

Nov 24, 2021

Abstract: Autoregressive models have proven to be very powerful in NLP text generation tasks and lately have gained popularity for image generation as well. However, they have seen limited use for the synthesis of 3D shapes so far. This is mainly due to the lack of a straightforward way to linearize 3D data as well as to scaling problems with the length of the resulting sequences when describing complex shapes. In this work we address both of these problems. We use octrees as a compact hierarchical shape representation that can be sequentialized by traversal ordering. Moreover, we introduce an adaptive compression scheme that significantly reduces sequence lengths and thus enables their effective generation with a transformer, while still allowing fully autoregressive sampling and parallel training. We demonstrate the performance of our model by comparing against the state-of-the-art in shape generation.
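As a rough illustration of how an octree can be turned into a token sequence by traversal ordering, the sketch below linearizes a binary voxel grid breadth-first. The token values (`EMPTY`, `MIXED`, `FULL`), the child ordering, and the function names are assumptions for this example, and the adaptive compression scheme mentioned in the abstract is not shown.

```python
from collections import deque

EMPTY, MIXED, FULL = 0, 1, 2  # assumed token values, not taken from the paper


def classify(vox, x, y, z, size):
    """Label a cubic region as EMPTY, FULL, or MIXED from its occupancy sum."""
    total = sum(
        vox[i][j][k]
        for i in range(x, x + size)
        for j in range(y, y + size)
        for k in range(z, z + size)
    )
    if total == 0:
        return EMPTY
    if total == size ** 3:
        return FULL
    return MIXED


def octree_sequence(vox):
    """Breadth-first token sequence for a cubic 0/1 grid indexed as vox[x][y][z].

    Assumes the side length is a power of two and at least 2. Only MIXED
    octants are subdivided further, so uniform regions cost a single token.
    """
    n = len(vox)
    tokens = []
    queue = deque([(0, 0, 0, n)])
    while queue:
        x, y, z, size = queue.popleft()
        half = size // 2
        for dx in (0, half):
            for dy in (0, half):
                for dz in (0, half):
                    cx, cy, cz = x + dx, y + dy, z + dz
                    token = classify(vox, cx, cy, cz, half)
                    tokens.append(token)
                    if token == MIXED:
                        queue.append((cx, cy, cz, half))
    return tokens
```

On a 4x4x4 grid with one fully occupied octant and a single extra voxel elsewhere, this yields 16 tokens instead of 64 voxel values, which hints at why hierarchical sequences are shorter for sparse shapes. Sequences for detailed shapes still grow quickly with resolution, which is the scaling problem the abstract addresses.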
