Grace Abuhamad

Can Active Learning Preemptively Mitigate Fairness Issues?

Apr 14, 2021

Abstract: Dataset bias is one of the prevailing causes of unfairness in machine learning. Addressing fairness at the data collection and dataset preparation stages therefore becomes an essential part of training fairer algorithms. In particular, active learning (AL) algorithms show promise for the task by drawing attention to the most informative training samples. However, the effect of existing AL algorithms on algorithmic fairness, and the interaction between the two, remain under-explored. In this paper, we study whether models trained with uncertainty-based AL heuristics such as BALD are fairer in their decisions with respect to a protected class than those trained with independent and identically distributed (i.i.d.) sampling. We found that BALD significantly improves predictive parity while also improving accuracy compared to i.i.d. sampling. We also explore the interaction between BALD and algorithmic fairness methods such as gradient reversal (GRAD). We found that, although they address different fairness issues, combining them further improves results on most of the benchmarks and metrics we explored.
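The abstract names BALD (Bayesian Active Learning by Disagreement) without spelling out how it ranks samples. As a rough illustration, here is a minimal sketch of the standard BALD score, assuming the model's uncertainty is approximated by several stochastic forward passes (for example, Monte Carlo dropout). The score is the entropy of the averaged prediction minus the average entropy of the individual passes, so it is high when passes disagree. The function names `bald_score` and `select_batch` are hypothetical, and the paper's actual configuration (number of passes, batch acquisition strategy) is not stated in the abstract.

```python
import math
from typing import List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def bald_score(mc_probs: List[List[float]]) -> float:
    """Hypothetical helper: BALD score for one unlabeled sample.

    mc_probs[t][c] is the probability of class c from the t-th stochastic
    forward pass (assumed here to come from something like MC dropout).
    Score = H[mean prediction] - mean(H[per-pass prediction]).
    """
    num_passes = len(mc_probs)
    num_classes = len(mc_probs[0])
    # Average the class probabilities over all stochastic passes.
    mean_probs = [sum(p[c] for p in mc_probs) / num_passes for c in range(num_classes)]
    predictive_entropy = entropy(mean_probs)
    # Average uncertainty of each individual pass.
    expected_entropy = sum(entropy(p) for p in mc_probs) / num_passes
    return predictive_entropy - expected_entropy


def select_batch(pool_mc_probs: List[List[List[float]]], k: int) -> List[int]:
    """Hypothetical helper: indices of the k pool samples with the highest BALD score."""
    scores = [bald_score(sample) for sample in pool_mc_probs]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


if __name__ == "__main__":
    pool = [
        [[0.9, 0.1], [0.9, 0.1]],  # passes agree and are confident
        [[0.9, 0.1], [0.1, 0.9]],  # passes confidently disagree
        [[0.5, 0.5], [0.5, 0.5]],  # passes agree but are uncertain
    ]
    print(select_batch(pool, 1))
```

In this toy pool, only the second sample has a positive score, because its passes disagree with each other. The third sample is uncertain, but every pass is uncertain in the same way, so BALD does not prioritize it. This is the distinction between BALD and plain predictive-entropy sampling.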

Like a Researcher Stating Broader Impact For the Very First Time

Nov 25, 2020

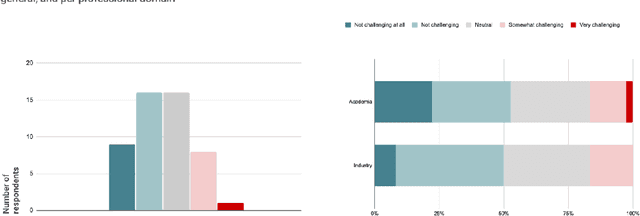

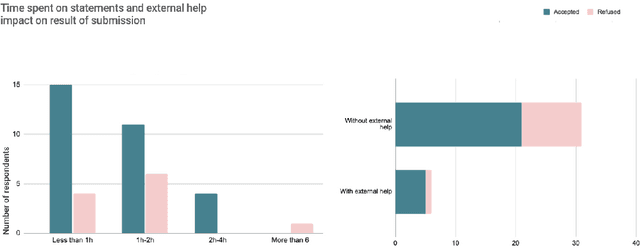

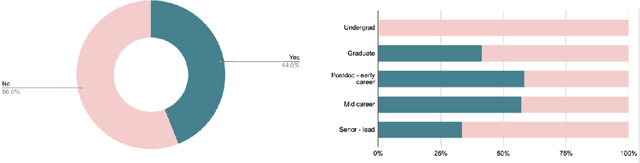

Abstract: In requiring that a statement of broader impact accompany all submissions for this year's conference, the NeurIPS program chairs made ethics part of the stakes in groundbreaking AI research. While there is precedent from other fields and increasing awareness within the NeurIPS community, this paper seeks to answer the question of how individual researchers reacted to the new requirement, including not just their views but also their experience in drafting the statement and their reflections after paper acceptances. We present survey results and considerations to inform the next iteration of the broader impact requirement, should it remain a requirement for future NeurIPS conferences.
