Giorgos Flouris

Linguistic Cues of Deception in a Multilingual April Fools' Day Context

Nov 09, 2021

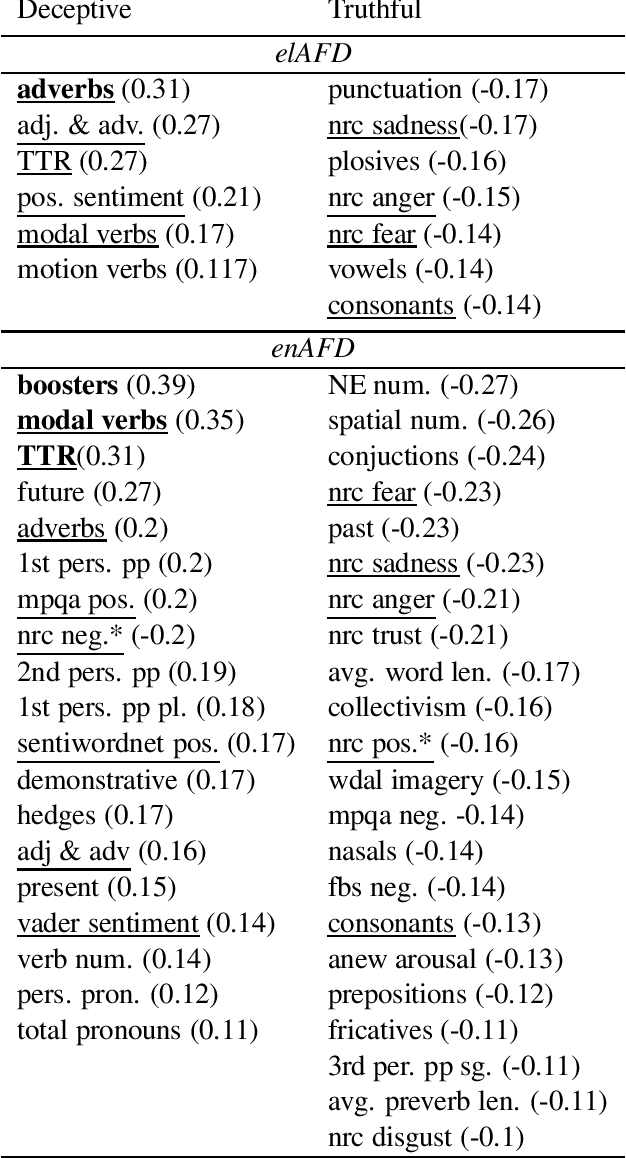

Abstract: In this work we consider the collection of deceptive April Fools' Day (AFD) news articles as a useful addition to existing datasets for deception detection tasks. Such collections have an established ground truth and are relatively easy to construct across languages. We therefore introduce a corpus that includes diachronic AFD and normal articles from Greek newspapers and news websites. On top of that, we build a rich linguistic feature set, and analyze and compare its deception cues with those of the only AFD collection currently available, which is in English. Following a current research thread, we also discuss the individualism/collectivism dimension in deception with respect to these two datasets. Lastly, we build classifiers and test them in various monolingual and cross-lingual settings. The results show that AFD datasets can be helpful in deception detection studies, and they align with the observations of other deception detection works.

Deception detection in text and its relation to the cultural dimension of individualism/collectivism

May 26, 2021

Abstract: Deception detection is a task with many applications both in direct physical and in computer-mediated communication. Our focus is on automatic deception detection in text across cultures. We view culture through the prism of the individualism/collectivism dimension and we approximate culture by using country as a proxy. Taking as a starting point recent conclusions drawn from the social psychology discipline, we explore whether differences in the usage of specific linguistic features of deception across cultures can be confirmed and attributed to norms with respect to the individualism/collectivism divide. We also investigate whether a universal feature set for cross-cultural text deception detection tasks exists. We evaluate the predictive power of different feature sets and approaches. We create culture/language-aware classifiers by experimenting with a wide range of n-gram features based on phonology, morphology and syntax, other linguistic cues such as word and phoneme counts and pronoun use, and token embeddings. We conducted our experiments over 11 datasets in five languages, i.e., English, Dutch, Russian, Spanish and Romanian, from six countries (US, Belgium, India, Russia, Mexico and Romania), and we applied two classification methods, i.e., logistic regression and fine-tuned BERT models. The results showed that our task is fairly complex and demanding. There are indications that some linguistic cues of deception have cultural origins and are consistent across diverse domains and dataset settings for the same language. This is more evident for the usage of pronouns and the expression of sentiment in deceptive language. The results of this work show that automatic deception detection across cultures and languages cannot be handled in a unified manner, and that such approaches should be augmented with knowledge about cultural differences and the domains of interest.
