Gillian Macnaught

Unsupervised learning for cross-domain medical image synthesis using deformation invariant cycle consistency networks

Aug 12, 2018

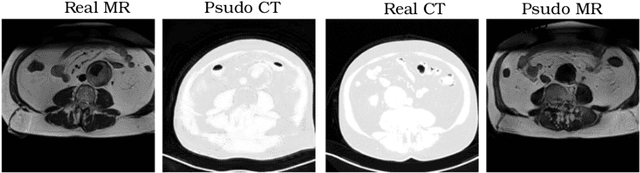

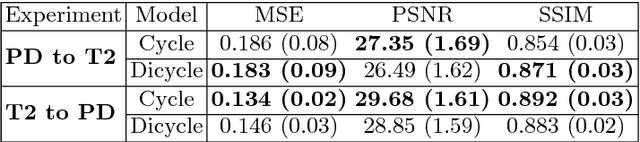

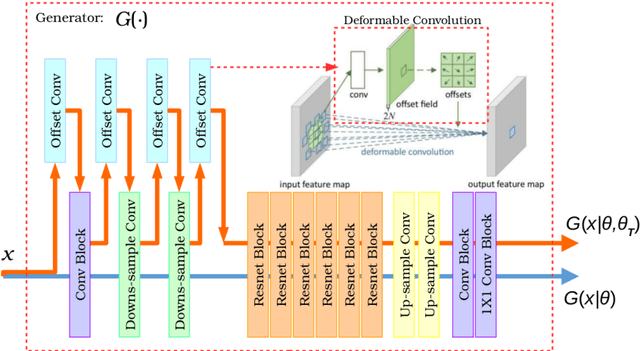

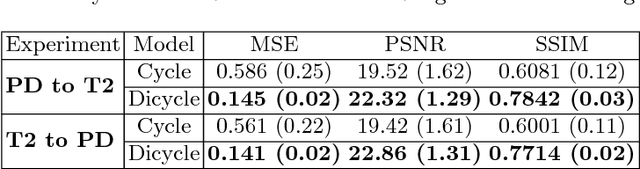

Abstract: Recently, the cycle-consistent generative adversarial network (CycleGAN) has been widely used for the synthesis of multi-domain medical images. The domain-specific nonlinear deformations captured by CycleGAN make the synthesized images difficult to use in some applications, for example, generating pseudo-CT for PET-MR attenuation correction. This paper presents a deformation-invariant CycleGAN (DicycleGAN) method that uses deformable convolutional layers and new cycle-consistency losses. Its robustness to data that suffer from domain-specific nonlinear deformations was evaluated through comparison experiments on a multi-sequence brain MR dataset and a multi-modality abdominal dataset. Our method generated synthesized data that are aligned with the source while maintaining adequate signal quality compared to CycleGAN-generated data. The proposed model also performed comparably to CycleGAN when data from the source and target domains can be aligned through simple affine transformations.
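
For readers unfamiliar with the cycle-consistency idea this abstract builds on, the following is a minimal sketch of the standard CycleGAN cycle-consistency term, not the new losses proposed in the paper (the abstract does not specify them). The names `l1_distance`, `cycle_consistency_loss`, `g_xy`, and `g_yx` are hypothetical, and images are represented as flat lists of floats purely for illustration.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized flat images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, g_xy, g_yx):
    """Standard CycleGAN cycle term (assumed form, not the paper's variant).

    x: image from domain X, y: image from domain Y.
    g_xy maps X -> Y and g_yx maps Y -> X; both are hypothetical callables.
    """
    # X -> Y -> X should reproduce x.
    forward = l1_distance(g_yx(g_xy(x)), x)
    # Y -> X -> Y should reproduce y.
    backward = l1_distance(g_xy(g_yx(y)), y)
    return forward + backward


# Toy usage: two "generators" that are exact inverses of each other.
x = [1.0, 2.0, 3.0]
y = [4.0, 6.0]
double = lambda img: [2 * v for v in img]
halve = lambda img: [v / 2 for v in img]
loss = cycle_consistency_loss(x, y, double, halve)
```

With exactly inverse mappings the term is zero; any mismatch between the round-trip image and the original increases it.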

A two-stage 3D Unet framework for multi-class segmentation on full resolution image

Apr 12, 2018

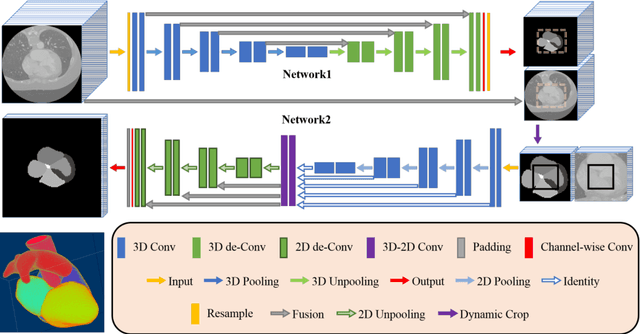

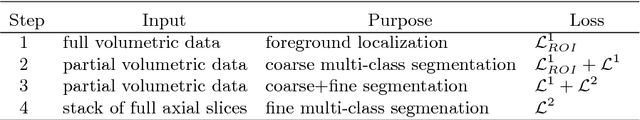

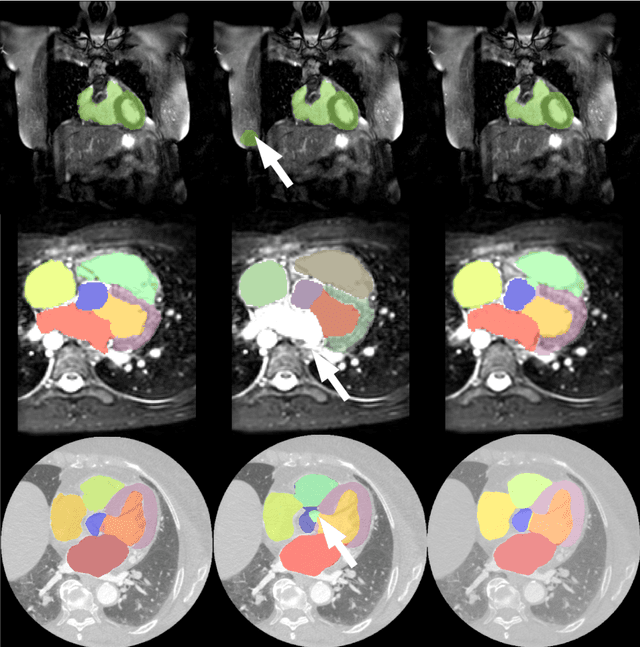

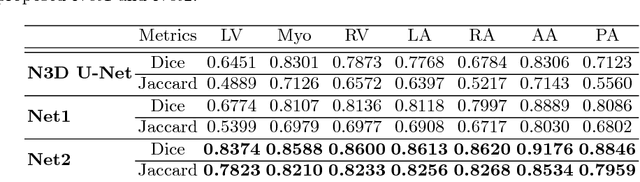

Abstract: Deep convolutional neural networks (CNNs) have been used intensively for multi-class segmentation of data from different modalities and have achieved state-of-the-art performance. However, a common problem when dealing with large, high-resolution 3D data is that the volumes input into deep CNNs have to be either cropped or downsampled because of the limited memory capacity of computing devices. These operations lead to a loss of resolution and increased class imbalance in the input data batches, which can degrade the performance of segmentation algorithms. Inspired by the architecture of the image super-resolution CNN (SRCNN) and the self-normalization network (SNN), we developed a two-stage modified Unet framework that simultaneously learns to detect a ROI within the full volume and to classify voxels without losing the original resolution. Experiments on a variety of multi-modal volumes demonstrated that, when trained with a simply weighted Dice coefficient and our customized learning procedure, this framework achieves better segmentation performance than state-of-the-art deep CNNs with advanced similarity metrics.
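
As an illustration of what a class-weighted Dice coefficient can look like, here is a minimal sketch. The abstract does not state how the weights are chosen or how the coefficient is aggregated, so the per-class weighting, the smoothing constant `eps`, and the function name `weighted_dice` are all assumptions made for this example.

```python
def weighted_dice(pred, target, weights, eps=1e-6):
    """Weighted average of per-class soft Dice scores (assumed formulation).

    pred, target: one flat list of voxel values in [0, 1] per class.
    weights: one non-negative weight per class (hypothetical choice).
    """
    total = 0.0
    for p_c, t_c, w in zip(pred, target, weights):
        intersection = sum(p * t for p, t in zip(p_c, t_c))
        denom = sum(p_c) + sum(t_c)
        # eps keeps the score defined when a class is absent from both inputs.
        dice_c = (2.0 * intersection + eps) / (denom + eps)
        total += w * dice_c
    return total / sum(weights)


# Toy usage: two classes over four voxels, rare class weighted more heavily.
pred = [[1, 0, 0, 1], [0, 1, 1, 0]]
target = [[1, 0, 0, 1], [0, 1, 1, 0]]
score = weighted_dice(pred, target, weights=[1.0, 3.0])
loss = 1.0 - score  # a training loss would typically minimize this
```

Giving larger weights to under-represented classes is one common way to counter the class imbalance the abstract describes.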
