Gianluigi Lauro

TrainSim: A Railway Simulation Framework for LiDAR and Camera Dataset Generation

Feb 28, 2023

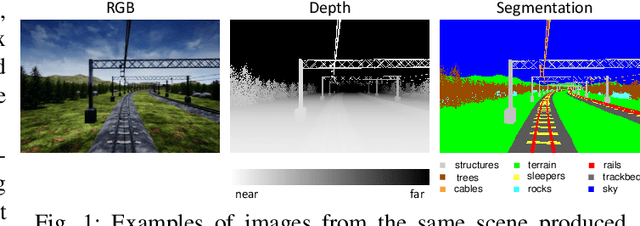

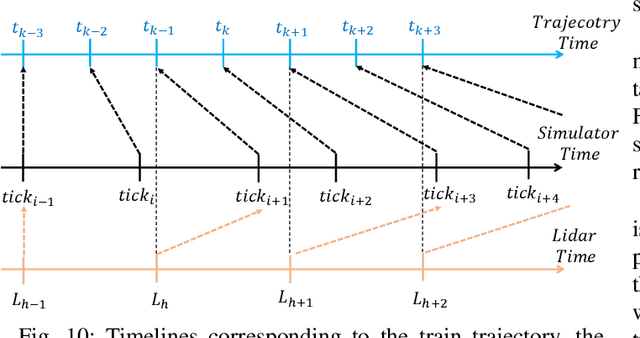

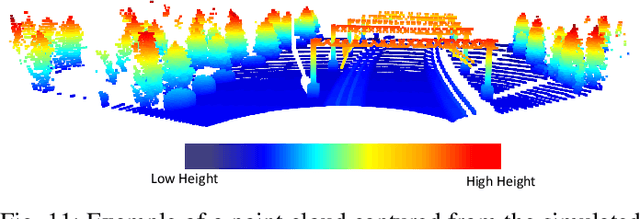

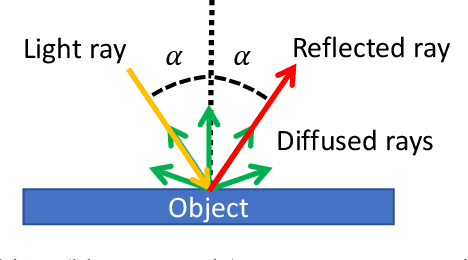

Abstract: The railway industry is searching for new ways to automate a number of complex train functions, such as object detection, track discrimination, and accurate train positioning, which require the artificial perception of the railway environment through different types of sensors, including cameras, LiDARs, wheel encoders, and inertial measurement units. A promising approach for processing such sensory data is the use of deep learning models, which have achieved excellent performance in other application domains, such as robotics and self-driving cars. However, testing new algorithms and solutions requires a large amount of labeled data, acquired in different scenarios and operating conditions, which is difficult to obtain in a real railway setting due to strict regulations and practical constraints in accessing the trackside infrastructure and equipping a train with the required sensors. To address these difficulties, this paper presents a visual simulation framework that generates realistic railway scenarios in a virtual environment and automatically produces inertial data and labeled datasets from emulated LiDARs and cameras, useful for training deep neural networks or testing innovative algorithms. A set of experimental results is reported to show the effectiveness of the proposed approach.
