Gianluca Carloni

Human-aligned Deep Learning: Explainability, Causality, and Biological Inspiration

Apr 18, 2025

Abstract: This work aligns deep learning (DL) with human reasoning capabilities and needs to enable more efficient, interpretable, and robust image classification. We approach this from three perspectives: explainability, causality, and biological vision. An introduction and background precede the operative chapters. First, we assess visualization techniques for neural networks on medical images and validate an explainable-by-design method for breast mass classification. A comprehensive review at the intersection of XAI and causality follows, where we introduce a general scaffold to organize past and future research, laying the groundwork for our second perspective. In the causality direction, we propose novel modules that exploit feature co-occurrence in medical images, leading to more effective and explainable predictions. We further introduce CROCODILE, a general framework that integrates causal concepts, contrastive learning, feature disentanglement, and prior knowledge to enhance generalization. Lastly, we explore biological vision, examining how humans recognize objects, and propose CoCoReco, a connectivity-inspired network with context-aware attention mechanisms. Overall, our key findings include: (i) simple activation maximization provides little insight for medical imaging DL models; (ii) prototypical-part learning is effective and radiologically aligned; (iii) XAI and causal ML are deeply connected; (iv) weak causal signals can be leveraged without a priori information to improve performance and interpretability; (v) our framework generalizes across medical domains and out-of-distribution data; (vi) incorporating biological circuit motifs improves human-aligned recognition. This work contributes toward human-aligned DL and highlights pathways to bridge the gap between research and clinical adoption, with implications for improved trust, diagnostic accuracy, and safe deployment.

Connectivity-Inspired Network for Context-Aware Recognition

Sep 06, 2024

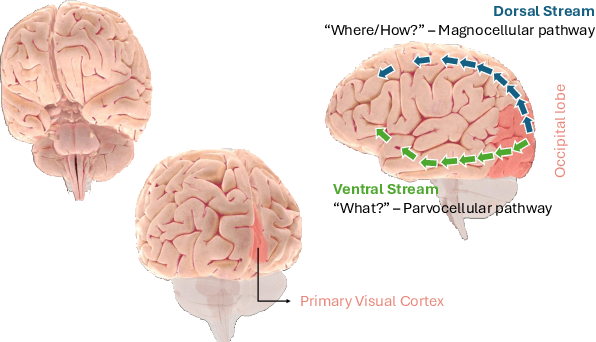

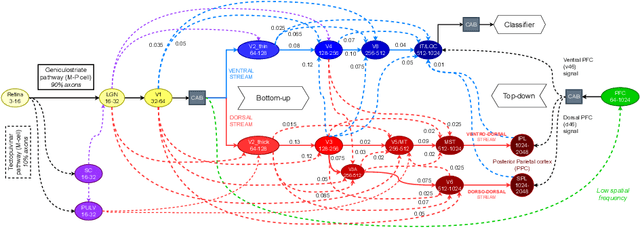

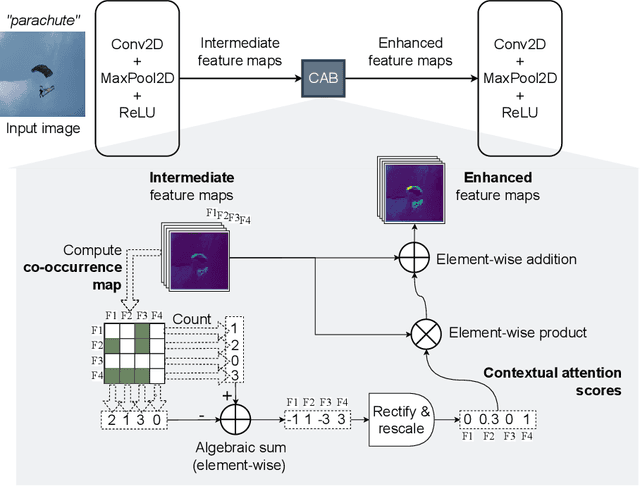

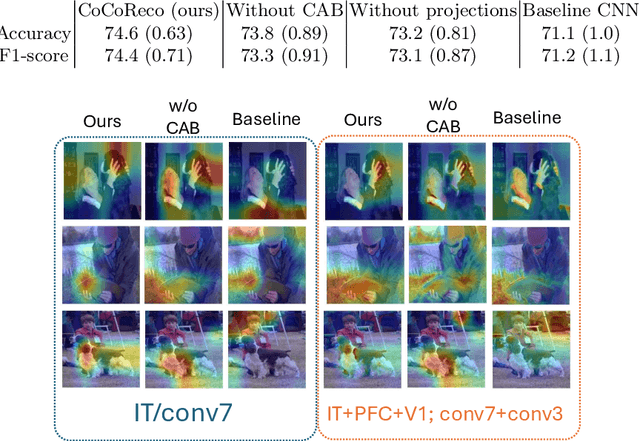

Abstract: The aim of this paper is threefold. We inform the AI practitioner about the human visual system with an extensive literature review; we propose a novel biologically motivated neural network for image classification; and, finally, we present a new plug-and-play module to model context awareness. We focus on the effect of incorporating circuit motifs found in biological brains to address visual recognition. Our convolutional architecture is inspired by the connectivity of human cortical and subcortical streams, and we implement bottom-up and top-down modulations that mimic the extensive afferent and efferent connections between visual and cognitive areas. Our Contextual Attention Block is simple and effective and can be integrated with any feed-forward neural network. It infers weights that multiply the feature maps according to their causal influence on the scene, modeling the co-occurrence of different objects in the image. We place our module at different bottlenecks to infuse a hierarchical context awareness into the model. We validated our proposals through image classification experiments on benchmark data and found consistent improvements in performance and in the robustness of the explanations produced via class activation. Our code is available at https://github.com/gianlucarloni/CoCoReco.

CROCODILE: Causality aids RObustness via COntrastive DIsentangled LEarning

Aug 09, 2024

Abstract: Due to domain shift, deep learning image classifiers perform poorly when applied to a domain different from the training one. For instance, a classifier trained on chest X-ray (CXR) images from one hospital may not generalize to images from another hospital due to variations in scanner settings or patient characteristics. In this paper, we introduce our CROCODILE framework, showing how tools from causality can foster a model's robustness to domain shift via feature disentanglement, contrastive learning losses, and the injection of prior knowledge. This way, the model relies less on spurious correlations, better learns the mechanism that leads from images to predictions, and outperforms baselines on out-of-distribution (OOD) data. We apply our method to multi-label lung disease classification from CXRs, utilizing over 750,000 images from four datasets. Our bias-mitigation method improves domain generalization and fairness, broadening the applicability and reliability of deep learning models for safer medical image analysis. Find our code at: https://github.com/gianlucarloni/crocodile.

Cine cardiac MRI reconstruction using a convolutional recurrent network with refinement

Sep 23, 2023

Abstract: Cine Magnetic Resonance Imaging (MRI) allows for understanding of the heart's function and condition in a non-invasive manner. Undersampling of the $k$-space is employed to reduce the scan duration, thus increasing patient comfort and reducing the risk of motion artefacts, at the cost of reduced image quality. In this challenge paper, we investigate the use of a convolutional recurrent neural network (CRNN) architecture to exploit temporal correlations in supervised cine cardiac MRI reconstruction. This is combined with a single-image super-resolution refinement module to improve single-coil reconstruction by 4.4% in structural similarity and 3.9% in normalised mean square error compared to a plain CRNN implementation. We apply a high-pass filter to our $\ell_1$ loss to place greater emphasis on high-frequency details, which are missing in the original data. The proposed model demonstrates considerable enhancements compared to the baseline case and holds promising potential for further improving cardiac MRI reconstruction.
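To make the idea of a high-pass filtered $\ell_1$ loss concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not say which filter is used or where it is applied, so the 3-tap moving-average subtraction, the 1-D signals, and the function names `high_pass` and `high_pass_l1` are all hypothetical choices made for brevity.

```python
def high_pass(signal):
    """Keep fast variations by subtracting a 3-tap moving average.

    Assumption: this simple filter stands in for whatever high-pass
    filter the paper actually uses. Edges are handled by replication.
    """
    padded = [signal[0]] + list(signal) + [signal[-1]]
    return [
        padded[i + 1] - (padded[i] + padded[i + 1] + padded[i + 2]) / 3.0
        for i in range(len(signal))
    ]


def high_pass_l1(pred, target):
    """Mean absolute error computed on the high-pass filtered signals."""
    hp_pred = high_pass(pred)
    hp_target = high_pass(target)
    return sum(abs(a - b) for a, b in zip(hp_pred, hp_target)) / len(pred)


# A constant (low-frequency) offset is almost invisible to this loss...
smooth_error = high_pass_l1([1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 2.0, 3.0])
# ...while a rapidly alternating (high-frequency) error is penalised.
sharp_error = high_pass_l1([1.0, -1.0, 1.0, -1.0], [0.0, 0.0, 0.0, 0.0])
```

In this toy setting a plain $\ell_1$ loss would score both errors equally, whereas the filtered version penalises only the alternating one. In practice such a term would more likely be combined with an unfiltered loss than used alone.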

Exploiting Causality Signals in Medical Images: A Pilot Study with Empirical Results

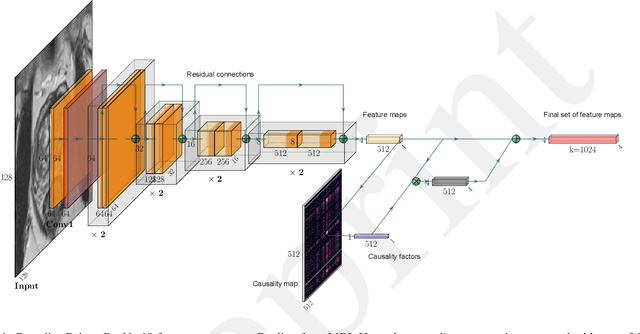

Sep 19, 2023

Abstract: We present a new method for automatically classifying medical images that uses weak causal signals in the scene to model how the presence of a feature in one part of the image affects the appearance of another feature in a different part of the image. Our method consists of two components: a convolutional neural network backbone and a causality-factors extractor module. The latter computes weights for the feature maps to enhance each feature map according to its causal influence in the image's scene. We can modify the functioning of the causality module by using two external signals, thus obtaining different variants of our method. We evaluate our method on a public dataset of prostate MRI images for prostate cancer diagnosis, using quantitative experiments, qualitative assessment, and ablation studies. Our results show that our method improves classification performance and produces more robust predictions, focusing on relevant parts of the image. That is especially important in medical imaging, where accurate and reliable classifications are essential for effective diagnosis and treatment planning.
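The reweighting step described above can be sketched roughly as follows. This is a toy stand-in, not the paper's module: the abstract does not give the formulas, so the pairwise score (product of peak activations divided by the total activation of the conditioning map), the "wins" convention for deciding which map of a pair is more influential, and the names `cooccurrence_scores`, `causality_factors`, and `reweight` are all assumptions made for illustration. Feature maps are represented as flat lists of non-negative activations.

```python
def cooccurrence_scores(maps):
    """score[i][j]: assumed proxy for 'map i is present given map j is present'."""
    peaks = [max(m) for m in maps]
    totals = [sum(m) for m in maps]
    k = len(maps)
    score = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(k):
            if i != j and totals[j] > 0:
                score[i][j] = peaks[i] * peaks[j] / totals[j]
    return score


def causality_factors(maps):
    """One weight per map: 1 plus the fraction of pairwise comparisons it wins.

    Assumed convention: map i wins against map j when the score is
    asymmetric in its favour, i.e. score[i][j] > score[j][i].
    """
    score = cooccurrence_scores(maps)
    k = len(maps)
    factors = []
    for i in range(k):
        wins = sum(1 for j in range(k) if j != i and score[i][j] > score[j][i])
        factors.append(1.0 + wins / max(k - 1, 1))
    return factors


def reweight(maps):
    """Multiply every activation of each map by that map's factor."""
    factors = causality_factors(maps)
    return [[f * v for v in m] for f, m in zip(factors, maps)]


# Three tiny 'feature maps': a sharp one, a diffuse one, and an empty one.
feature_maps = [[0.0, 1.0, 0.0, 0.0], [0.5, 0.5, 0.5, 0.5], [0.0, 0.0, 0.0, 0.0]]
weights = causality_factors(feature_maps)
enhanced = reweight(feature_maps)
```

The point of the sketch is the overall shape of the computation: an asymmetric pairwise matrix is reduced to one scalar per feature map, and that scalar multiplies the map before it is passed on to the classifier. The actual estimator and the two external signals mentioned in the abstract may differ substantially from this.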

Causality-Driven One-Shot Learning for Prostate Cancer Grading from MRI

Sep 19, 2023

Abstract: In this paper, we present a novel method to automatically classify medical images that learns and leverages weak causal signals in the image. Our framework consists of a convolutional neural network backbone and a causality-extractor module that extracts cause-effect relationships between feature maps. These relationships can inform the model about the appearance of a feature in one location of the image, given the presence of another feature in some other location. To evaluate the effectiveness of our approach in low-data scenarios, we train our causality-driven architecture in a one-shot learning scheme, where we propose a new meta-learning procedure entailing meta-training and meta-testing tasks that are designed using related classes but at different levels of granularity. We conduct binary and multi-class classification experiments on a publicly available dataset of prostate MRI images. To validate the effectiveness of the proposed causality-driven module, we perform an ablation study and conduct qualitative assessments using class activation maps to highlight regions strongly influencing the network's decision-making process. Our findings show that causal relationships among features play a crucial role in enhancing the model's ability to discern relevant information and in yielding more reliable and interpretable predictions. This would make it a promising approach for medical image classification tasks.

The role of causality in explainable artificial intelligence

Sep 18, 2023

Abstract: Causality and eXplainable Artificial Intelligence (XAI) have developed as separate fields in computer science, even though the underlying concepts of causation and explanation share common ancient roots. This separation is further reinforced by the lack of review works jointly covering these two fields. In this paper, we investigate the literature to try to understand how and to what extent causality and XAI are intertwined. More precisely, we seek to uncover what kinds of relationships exist between the two concepts and how one can benefit from them, for instance, in building trust in AI systems. As a result, three main perspectives are identified. In the first one, the lack of causality is seen as one of the major limitations of current AI and XAI approaches, and the "optimal" form of explanations is investigated. The second is a pragmatic perspective and considers XAI as a tool to foster scientific exploration for causal inquiry, via the identification of pursue-worthy experimental manipulations. Finally, the third perspective supports the idea that causality is propaedeutic to XAI in three possible manners: exploiting concepts borrowed from causality to support or improve XAI, utilizing counterfactuals for explainability, and treating access to a causal model as an explanation in itself. To complement our analysis, we also provide relevant software solutions used to automate causal tasks. We believe our work provides a unified view of the two fields of causality and XAI by highlighting potential domain bridges and uncovering possible limitations.
