Geesara Prathap

Ground and Non-Ground Separation Filter for UAV Lidar Point Cloud

Nov 16, 2019

Abstract: This paper proposes a novel filter for separating the ground plane from non-ground objects in a 3D Lidar point cloud. It is designed specifically for real-time applications on unmanned aerial vehicles and works on sparse Lidar point clouds without preliminary mapping. We use this filter as a crucial component of a fast obstacle avoidance system for an agricultural drone operating at low altitude. As the first step, the point cloud is transformed into a depth image, and high-density regions nearest to the vehicle (local maxima) are identified. We then merge the original depth image with the identified locations after maximizing the intensities of the pixels in which local maxima were found. The next step is to calculate a range angle image, which represents the angles between two consecutive laser beams, from the improved depth image. Once the range angle image is constructed, smoothing is applied to reduce noise. Finally, we find connected components in the improved depth image while incorporating the smoothed range angle image. This allows the non-ground objects to be separated; the remaining locations of the depth image belong to the ground plane. The filter has been tested in a simulated environment as well as on an actual drone and provides real-time performance. Source code and dataset are available at https://github.com/GPrathap/hagen.git
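The range angle step can be illustrated with a minimal sketch. The abstract does not give the formula, so the code below assumes a common formulation from depth-image segmentation: for two returns on vertically adjacent beams, it computes the angle at the first point between the line back to the sensor and the segment joining the two points. The function name `range_angle_image`, the constant beam spacing `row_step_rad`, and the convention that a range of 0.0 means "no return" are all assumptions for illustration, not taken from the authors' code.

```python
import math


def range_angle_image(depth, row_step_rad):
    """Angle between returns on vertically adjacent beams, per column.

    depth: list of rows, each a list of ranges in metres (0.0 = no return).
    row_step_rad: assumed constant angular spacing between adjacent beams.
    Returns a (rows - 1) x cols list of angles in radians.
    """
    rows, cols = len(depth), len(depth[0])
    angles = [[0.0] * cols for _ in range(rows - 1)]
    for r in range(rows - 1):
        for c in range(cols):
            d1, d2 = depth[r][c], depth[r + 1][c]
            if d1 <= 0.0 or d2 <= 0.0:
                continue  # missing return: leave the angle at 0.0
            # Angle at the first point between the beam back to the sensor
            # and the segment joining the two measured points.
            angles[r][c] = math.atan2(
                d2 * math.sin(row_step_rad),
                d1 - d2 * math.cos(row_step_rad),
            )
    return angles
```

In a pipeline like the one described, the resulting image would then be smoothed and consulted during connected-component labelling; the thresholds used for that decision are not stated in the abstract.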

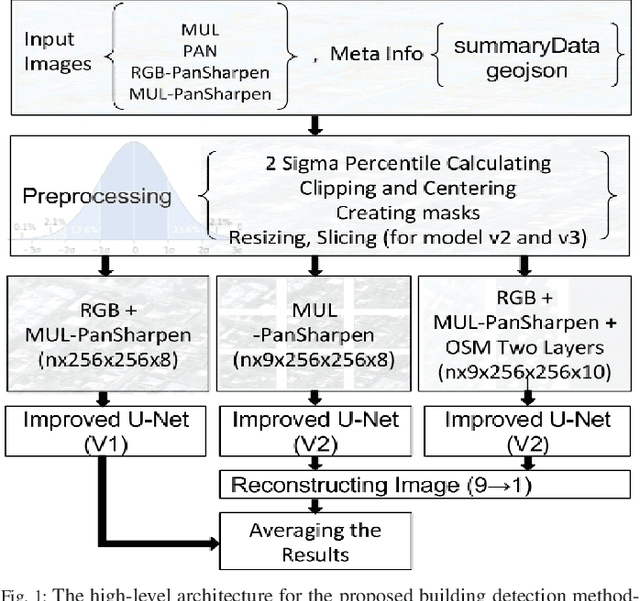

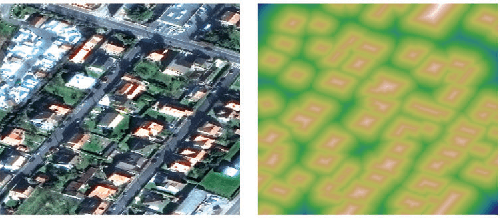

Deep Learning Approach for Building Detection in Satellite Multispectral Imagery

Nov 10, 2018

Abstract: Building detection from satellite multispectral imagery is a fundamental but challenging problem, mainly because it requires correct recovery of building footprints from high-resolution images. In this work, we propose a deep learning approach for building detection that applies numerous enhancements throughout the process. The initial dataset is preprocessed by 2-sigma percentile normalization. Data preparation then includes ensemble modelling, in which three models were created while incorporating OpenStreetMap data. Binary Distance Transformation (BDT) is used to improve the data labeling process, and the U-Net (Convolutional Networks for Biomedical Image Segmentation) is modified by adding batch normalization wrappers. Afterwards, we explain how each component of our approach correlates with the final detection accuracy. Finally, we compare our results with the winning solutions of the SpaceNet 2 competition for real satellite multispectral images of Vegas, Paris, Shanghai and Khartoum, demonstrating the importance of our solution for achieving higher building detection accuracy.
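As a rough illustration of the preprocessing step, the sketch below clips one image band to a lower and an upper percentile and rescales it to [0, 1]. The abstract does not define "2-sigma percentile normalization" precisely, so the 2nd and 98th percentile cut-offs used here are an assumption, as are the function names `percentile` and `percentile_normalize`.

```python
def percentile(sorted_vals, q):
    """Linearly interpolated percentile (q in [0, 100]) of a sorted list."""
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    frac = pos - lo
    return sorted_vals[lo] * (1 - frac) + sorted_vals[hi] * frac


def percentile_normalize(band, low_q=2.0, high_q=98.0):
    """Clip a flat list of pixel values to [low_q, high_q] percentiles
    and rescale to [0, 1]. The cut-offs are assumed, not from the paper."""
    vals = sorted(band)
    lo, hi = percentile(vals, low_q), percentile(vals, high_q)
    if hi <= lo:
        return [0.0 for _ in band]  # constant band: nothing to stretch
    return [min(1.0, max(0.0, (v - lo) / (hi - lo))) for v in band]
```

Each spectral band would typically be normalized independently so that a few saturated pixels do not compress the useful dynamic range.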

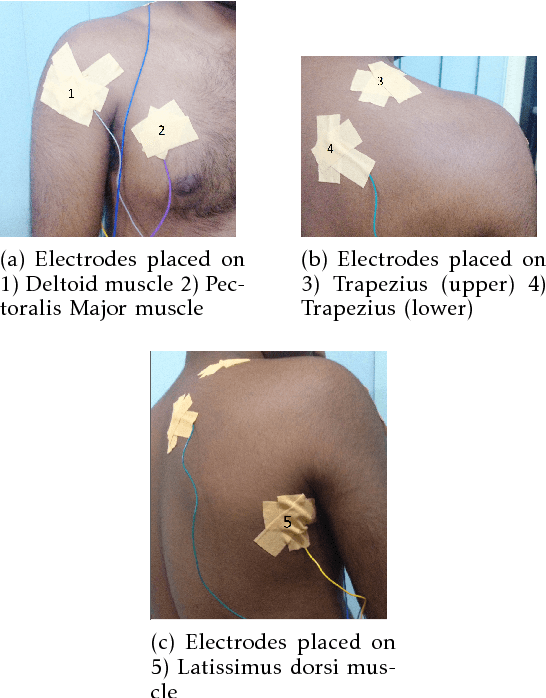

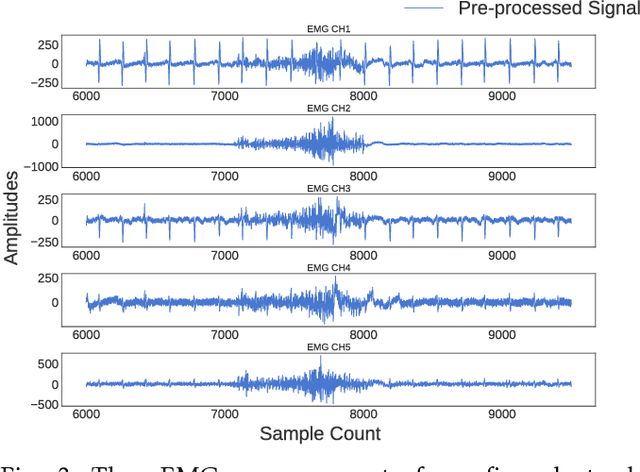

Near Real-Time Data Labeling Using a Depth Sensor for EMG Based Prosthetic Arms

Nov 10, 2018

Abstract: Automatically recognizing the sEMG (Surface Electromyography) signals that belong to a particular action (e.g., lateral arm raise) is a challenging task, because EMG signals vary considerably even for the same action due to several factors. To overcome this issue, the raw signals need a proper segmentation that repeatedly marks similar patterns for a particular action. A repetitive pattern does not always match, because the same action can be carried out over different time durations. Thus, a depth sensor (Kinect) was used for pattern identification: three joint angles, which are clearly separable for a particular action, were recorded continuously while recording the sEMG signals. To segment out a repetitive pattern in the angle data, the MDTW (Moving Dynamic Time Warping) approach is introduced. This technique allows the suspected motion of interest to be retrieved from the raw signals. MDTW is based on the DTW algorithm, but it moves through the whole dataset in a pre-defined manner, which makes it capable of picking up almost all the suspected segments inside a given dataset in an optimal way. Elevated bicep curl and lateral arm raise movements are taken as motions of interest to show how the proposed technique can be employed to achieve automatic identification and labelling. The full implementation is available at https://github.com/GPrathap/OpenBCIPython
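The idea of moving DTW over a recording can be sketched as follows. This is not the authors' MDTW implementation: it pairs a textbook DTW distance with a plain fixed-stride sliding window, and the parameters `window`, `stride` and `threshold`, as well as the function names, are hypothetical choices made for illustration.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed warping steps.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def moving_dtw(signal, template, window, stride, threshold):
    """Slide a window over `signal` and keep the start indices whose
    DTW distance to `template` is at most `threshold`."""
    matches = []
    for start in range(0, len(signal) - window + 1, stride):
        dist = dtw_distance(signal[start:start + window], template)
        if dist <= threshold:
            matches.append((start, dist))
    return matches
```

Because DTW tolerates stretching in time, a template of one repetition can match the same movement performed faster or slower, which is the property the abstract relies on when segmenting joint-angle data.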

Towards Human Pulse Rate Estimation from Face Video: Automatic Component Selection and Comparison of Blind Source Separation Methods

Oct 28, 2018

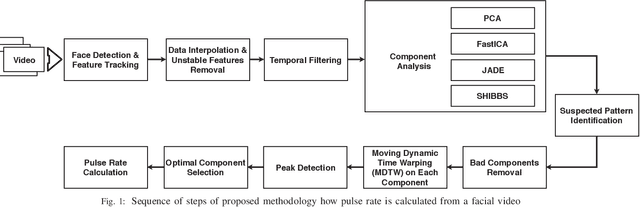

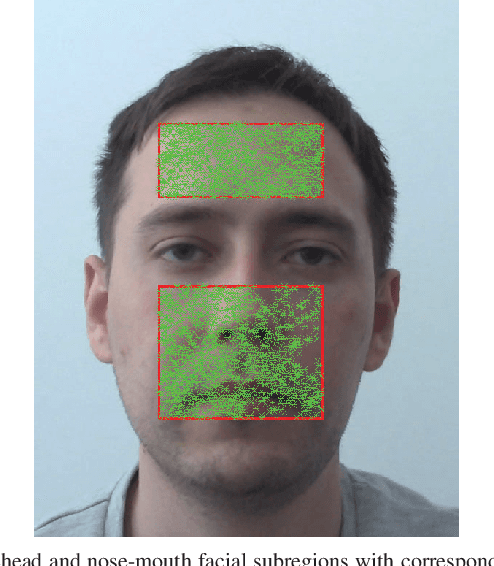

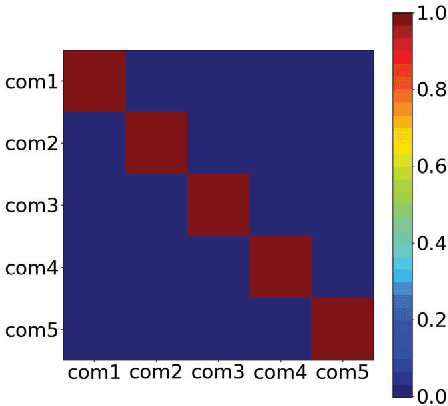

Abstract: Human heartbeat can be measured in several ways, chosen according to the patient's condition: contact-based methods, which use instruments, and non-contact methods, such as computer-vision-assisted techniques. Non-contact approaches are becoming popular because they can mitigate some of the limitations of contact-based techniques, especially in clinical settings. However, existing vision-guided approaches are not able to provide highly accurate results for various reasons, such as camera properties, illumination changes, and skin tones in the face image. We propose a technique that takes video as input and returns the pulse rate as output. Initially, key point detection is carried out on two facial subregions: forehead and nose-mouth. After removing unstable features, temporal filtering is applied to isolate the frequencies of interest. Then four component analysis methods are employed to distinguish the cardiovascular pulse signal from extraneous noise caused by respiration, vestibular activity and other changes in facial expression. Afterwards, the proposed peak detection technique is applied to each component extracted by one of the four component analysis methods, which locates the positions of the peaks in each component. The proposed automatic component selection technique is then employed to select an optimal component, which is used to calculate the heartbeat. Finally, we conclude with a comparison of the four component analysis methods (PCA, FastICA, JADE, SHIBBS), processing face video datasets of fifteen volunteers with verification by an ECG/EKG Workstation as ground truth.
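The final step, turning peaks in a selected component into a pulse rate, can be sketched as below. The abstract does not describe the proposed peak detector or the component selection rule, so this example substitutes a simple local-maximum detector and converts the mean peak-to-peak interval into beats per minute. The names `find_peaks` and `pulse_rate_bpm` and the `min_distance` parameter are assumptions for illustration.

```python
import math


def find_peaks(signal, min_distance=1):
    """Indices of local maxima that are at least `min_distance` samples apart."""
    peaks = []
    for i in range(1, len(signal) - 1):
        if signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]:
            if not peaks or i - peaks[-1] >= min_distance:
                peaks.append(i)
    return peaks


def pulse_rate_bpm(signal, fps, min_distance=1):
    """Estimate beats per minute from the mean interval between peaks.

    signal: one filtered component sampled at the video frame rate `fps`.
    Returns None when fewer than two peaks are found.
    """
    peaks = find_peaks(signal, min_distance)
    if len(peaks) < 2:
        return None
    mean_interval_s = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / fps
    return 60.0 / mean_interval_s


# Usage: a synthetic 1.2 Hz component sampled at 30 frames per second.
component = [math.sin(2 * math.pi * 1.2 * t / 30.0) for t in range(300)]
estimated_bpm = pulse_rate_bpm(component, fps=30.0, min_distance=10)
```

On real video the component would first pass through the temporal filter mentioned in the abstract, and `min_distance` would be set from the highest plausible heart rate to suppress spurious peaks.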
