Gérard Assayag

LTCI

Cross-modal variational inference for bijective signal-symbol translation

Feb 10, 2020

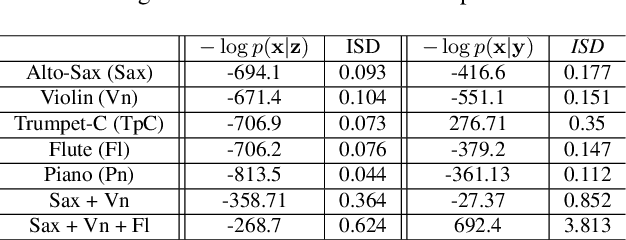

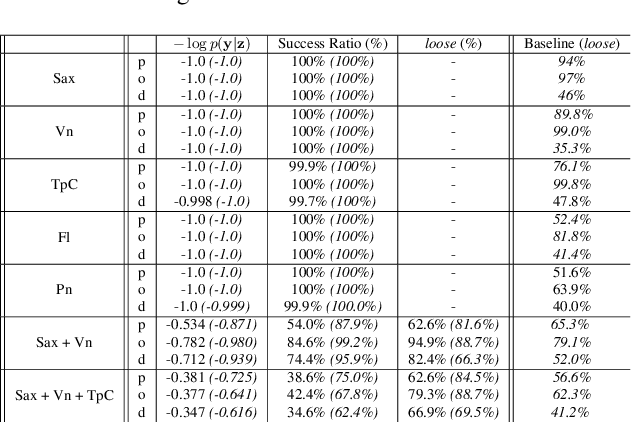

Abstract: Extracting symbolic information from signals is an active field of research that enables numerous applications, especially in the Musical Information Retrieval domain. This complex task, which is also related to other topics such as pitch extraction or instrument recognition, has given rise to numerous approaches, mostly based on advanced signal processing algorithms. However, these techniques are often non-generic: they allow the extraction of definite physical properties of the signal (pitch, octave), but not of arbitrary vocabularies or more general annotations. Moreover, these techniques are one-sided: they can extract symbolic data from an audio signal, but cannot perform the reverse process of symbol-to-signal generation. In this paper, we propose a bijective approach to signal/symbol translation by turning this problem into a density estimation task over the signal and symbolic domains, both considered as related random variables. We estimate this joint distribution with two different variational auto-encoders, one for each domain, whose inner representations are forced to match through an additive constraint, allowing both models to learn and generate separately while also allowing signal-to-symbol and symbol-to-signal inference. In this article, we test our models on pitch, octave and dynamics symbols, which constitute a fundamental step towards music transcription and label-constrained audio generation. In addition to its versatility, this system is rather light during training and generation, and it allows several interesting creative uses that we outline at the end of the article.
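One way to read the training objective described in this abstract is as the sum of two per-domain variational losses plus a term that pulls the two latent representations together. The following is only a sketch under stated assumptions: the symbols $x$ (signal), $y$ (symbol), $z$ (latent code), the weight $\lambda$, and the choice of divergence $D$ are illustrative notation, not taken from the paper.

```latex
\begin{aligned}
\mathcal{L}(x, y) &= \mathcal{L}_{\mathrm{VAE}}(x) + \mathcal{L}_{\mathrm{VAE}}(y)
  + \lambda \, D\big(q_\phi(z \mid x) \,\|\, q_\psi(z \mid y)\big), \\
\mathcal{L}_{\mathrm{VAE}}(x) &= -\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  + \mathrm{KL}\big(q_\phi(z \mid x) \,\|\, p(z)\big).
\end{aligned}
```

Here $\mathcal{L}_{\mathrm{VAE}}(y)$ is defined in the same way with its own encoder $q_\psi$ and decoder. Under this reading, signal-to-symbol inference amounts to encoding $x$ with the signal encoder and decoding the resulting $z$ with the symbol decoder, and symbol-to-signal generation runs the other way around.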

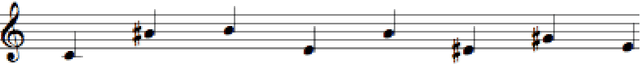

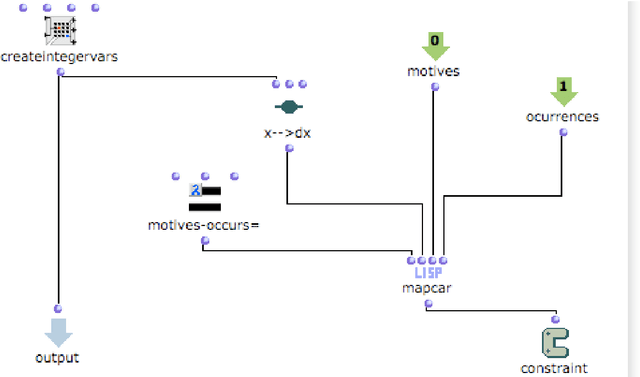

Gelisp: A Library to Represent Musical CSPs and Search Strategies

Oct 09, 2015

Abstract: In this paper, we present Gelisp, a new library to represent musical Constraint Satisfaction Problems and search strategies intuitively. Gelisp has two interfaces: a command-line one for Common Lisp and a graphical one for OpenMusic. Using Gelisp, we solved a problem of automatic music generation proposed by composer Michael Jarrell, and we found solutions for the All-interval series.
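For readers unfamiliar with the All-interval series mentioned above: it is a twelve-tone row in which the eleven intervals between consecutive pitch classes (taken modulo 12) are all different. The sketch below only checks a candidate row against that definition in plain Python; it does not use Gelisp or any constraint solver, and the function names are hypothetical.

```python
def interval_sequence(row):
    """Return the intervals (mod 12) between consecutive pitch classes."""
    return [(b - a) % 12 for a, b in zip(row, row[1:])]


def is_all_interval_series(row):
    """Check the two constraints that define an all-interval series."""
    # Constraint 1: the row uses each of the 12 pitch classes exactly once.
    if sorted(row) != list(range(12)):
        return False
    # Constraint 2: the 11 successive intervals are exactly 1..11, each once.
    return sorted(interval_sequence(row)) == list(range(1, 12))


if __name__ == "__main__":
    candidate = [0, 11, 7, 4, 2, 9, 3, 8, 10, 1, 5, 6]
    print(interval_sequence(candidate))
    print(is_all_interval_series(candidate))
```

A constraint solver would search for rows satisfying both constraints instead of testing a single given row.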

Emergence of synchrony in an Adaptive Interaction Model

Jun 18, 2015

Abstract: In a Human-Computer Interaction context, we aim to elaborate an adaptive and generic interaction model for two different use cases: Embodied Conversational Agents and Creative Musical Agents for musical improvisation. To reach this goal, we will try to use the concepts of adaptation and synchronization to enhance the interactive abilities of our agents and guide the development of our interaction model, and we will try to make synchrony emerge from non-verbal dimensions of interaction.
