Frédéric Bevilacqua

LTCI

Entangling Practice with Artistic and Educational Aims: Interviews on Technology-based Movement Sound Interactions

Sep 28, 2022

Abstract: Movement-sound interactive systems are at the interface of different artistic and educational practices. Within this multiplicity of uses, we examine common denominators in terms of learning, appropriation and relationship to technological systems. While these topics have previously been addressed at NIME, we wanted to investigate how practitioners coming from different perspectives relate to these questions. We conducted interviews with 6 artists who are engaged in movement-sound interactions: 1 performer, 1 performer/composer, 1 composer, 1 teacher/composer, 1 dancer/teacher and 1 dancer. Through a thematic analysis of the transcripts, we identified three main themes related to (1) the mediating role of technological tools, (2) usability and normativity, and (3) learning and practice. These results provide ground for discussion about the design and study of movement-sound interactive systems.

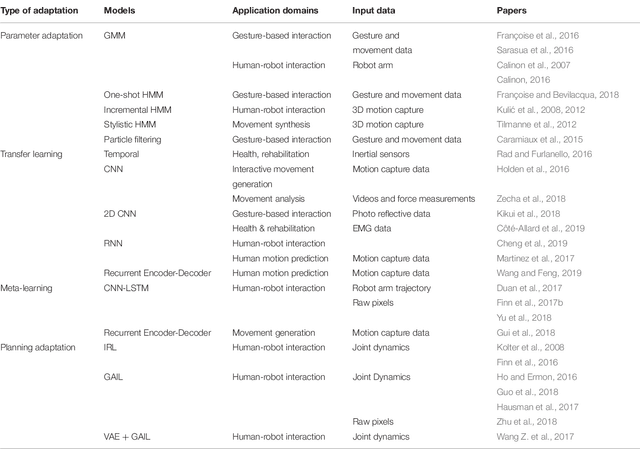

Machine Learning Approaches For Motor Learning: A Short Review

Feb 11, 2020

Abstract: The use of machine learning to model motor learning mechanisms is still limited, even though it could help to design novel interactive systems for movement learning or rehabilitation. This approach requires accounting for the motor variability induced by motor learning mechanisms. This poses specific challenges concerning the fast adaptability of the computational models, from small variations to more drastic changes, including new movement classes. We present a short review of machine-learning-based movement models and their existing adaptation mechanisms. We discuss the current challenges of applying these models in motor learning support systems, delineating promising research directions at the intersection of machine learning and motor learning.

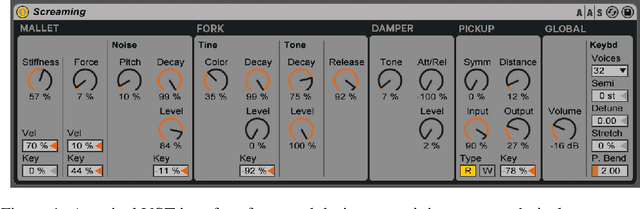

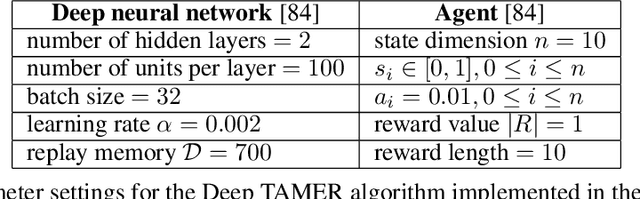

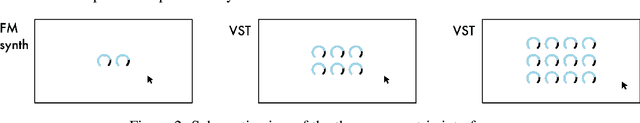

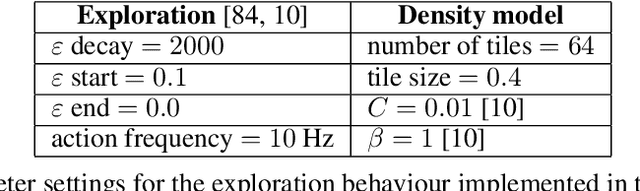

Designing Deep Reinforcement Learning for Human Parameter Exploration

Jul 01, 2019

Abstract: Software tools for generating digital sound often present users with high-dimensional parametric interfaces that may not facilitate the exploration of diverse sound designs. In this paper, we propose to investigate artificial agents that use deep reinforcement learning to explore parameter spaces in partnership with users for sound design. We describe a series of user-centred studies to probe the creative benefits of these agents and to adapt their design to exploration. Preliminary studies observing users' exploration strategies with parametric interfaces and testing different agent exploration behaviours led to the design of a fully functioning prototype, called Co-Explorer, which we evaluated in a workshop with professional sound designers. We found that the Co-Explorer enables a novel creative workflow centred on human-machine partnership, which was positively received by practitioners. We also highlight the varied exploration behaviours users displayed while partnering with our system. Finally, we frame design guidelines for enabling such co-exploration workflows in creative digital applications.

Emergence of synchrony in an Adaptive Interaction Model

Jun 18, 2015

Abstract: In a Human-Computer Interaction context, we aim to elaborate an adaptive and generic interaction model for two different use cases: Embodied Conversational Agents and Creative Musical Agents for musical improvisation. To reach this goal, we propose to use the concepts of adaptation and synchronization to enhance the interactive abilities of our agents and to guide the development of our interaction model, and we aim to make synchrony emerge from the non-verbal dimensions of interaction.