Fuzhang Wu

Image Retargetability

Feb 12, 2018

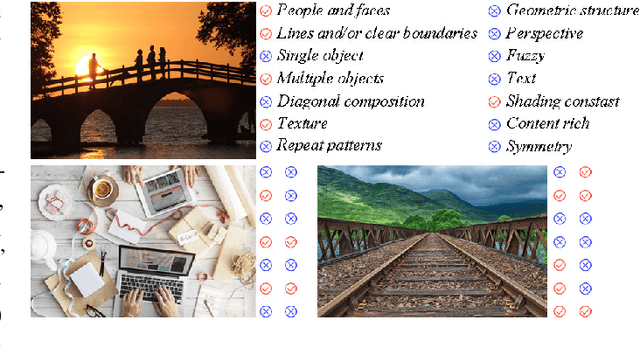

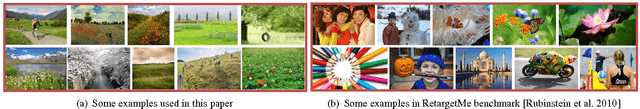

Abstract: Real-world applications could benefit from the ability to automatically retarget an image to different aspect ratios and resolutions while preserving its visually and semantically important content. However, not all images can be processed equally well in this way. In this work, we introduce the notion of image retargetability to describe how well a particular image can be handled by content-aware image retargeting. We propose to learn a deep convolutional neural network to rank photo retargetability, in which the relative ranking of photo retargetability is directly modeled in the loss function. Our model incorporates joint learning of meaningful photographic attributes and image content information, which can help regularize the complicated retargetability rating problem. To train and analyze this model, we have collected a database that contains retargetability scores and meaningful image attributes assigned by six expert raters. Experiments demonstrate that our unified model can generate retargetability rankings that are highly consistent with human labels. To further validate our model, we show applications of image retargetability in retargeting method selection, retargeting method assessment, and photo collage generation.
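The abstract says the relative ranking is modeled directly in the loss function but does not give the formula. As an illustration only, the sketch below uses a generic margin-based pairwise ranking (hinge) loss, which is one common way to do this; it is an assumption and not necessarily the loss used in the paper. The function names, the `margin` parameter, and the plain-float scores are all hypothetical.

```python
def pairwise_ranking_loss(score_a, score_b, label_a, label_b, margin=1.0):
    """Hinge loss for one image pair (generic sketch, not the paper's loss).

    score_*: predicted retargetability scores from the model.
    label_*: human-assigned retargetability scores.
    The loss is zero when the predicted order matches the labeled order
    by at least `margin`, and grows linearly otherwise.
    """
    if label_a == label_b:
        return 0.0  # a tied pair carries no ordering information
    sign = 1.0 if label_a > label_b else -1.0
    return max(0.0, margin - sign * (score_a - score_b))


def batch_ranking_loss(scores, labels, margin=1.0):
    """Average the pairwise loss over all non-tied pairs in a batch."""
    total, count = 0.0, 0
    for i in range(len(scores)):
        for j in range(i + 1, len(scores)):
            if labels[i] != labels[j]:
                total += pairwise_ranking_loss(
                    scores[i], scores[j], labels[i], labels[j], margin
                )
                count += 1
    return total / count if count else 0.0
```

A loss of this shape depends only on the order of the predictions within each pair, not on their absolute values, which is why it suits a ranking task. In a real training setup the scores would be network outputs and the same expression would be written with a differentiable tensor library.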

Image Retargeting by Content-Aware Synthesis

Aug 21, 2014

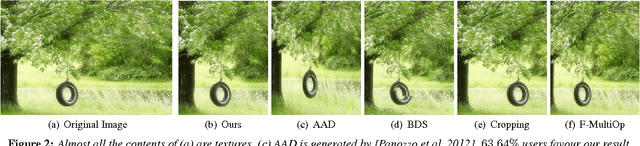

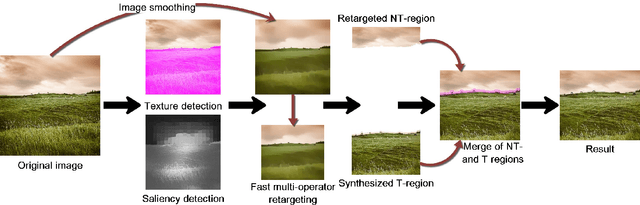

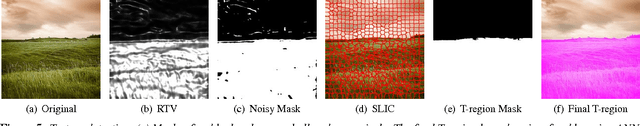

Abstract: Real-world images usually contain vivid content and rich textural details, which complicates their manipulation. In this paper, we design a new framework based on content-aware synthesis to enhance content-aware image retargeting. By detecting the textural regions in an image, the textural image content can be synthesized rather than simply distorted or cropped. This method enables the manipulation of textural and non-textural regions with different strategies, since they have different natures. We propose to retarget the textural regions by content-aware synthesis and the non-textural regions by fast multi-operators. To achieve practical retargeting applications for general images, we develop an automatic and fast texture detection method that can detect multiple disjoint textural regions. We adjust the saliency of the image according to the features of the textural regions. To validate the proposed method, comparisons with state-of-the-art image retargeting techniques and a user study were conducted. Convincing visual results are shown to demonstrate the effectiveness of the proposed method.
