Fuhao Shi

Deep Online Fused Video Stabilization

Feb 02, 2021

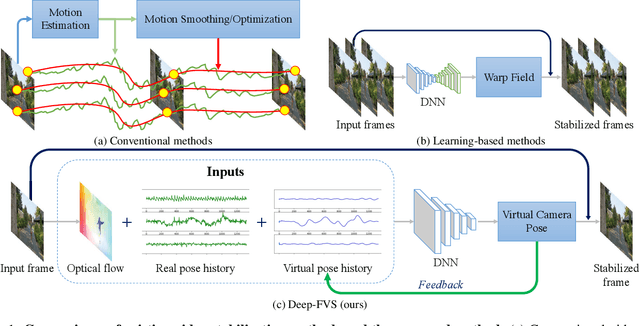

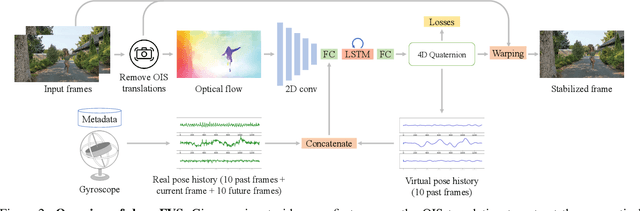

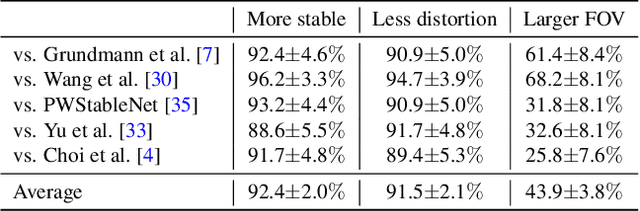

Abstract: We present a deep neural network (DNN) that uses both sensor data (gyroscope) and image content (optical flow) to stabilize videos through unsupervised learning. The network fuses optical flow with real and virtual camera pose histories into a joint motion representation. An LSTM block then infers the new virtual camera pose, which is used to generate a warping grid that stabilizes the frame. A novel relative motion representation and a multi-stage training process are presented to optimize our model without any supervision. To the best of our knowledge, this is the first DNN solution that uses both sensor data and image content for stabilization. We validate the proposed framework through ablation studies and demonstrate that the proposed method outperforms state-of-the-art alternatives in quantitative evaluations and a user study.

Steadiface: Real-Time Face-Centric Stabilization on Mobile Phones

May 03, 2019

Abstract: We present Steadiface, a new real-time face-centric video stabilization method that simultaneously removes hand shake and keeps the subject's head stable. We use a CNN to estimate the face landmarks and use them to optimize a stabilized head center. We then formulate an optimization problem to find a virtual camera pose that places the face at the stabilized head center while retaining smooth rotation and translation transitions across frames. We test the proposed method on field-test videos and show that it stabilizes both the head motion and the background. It is robust to large head poses, occlusion, facial appearance variations, and different kinds of camera motion. We show that our method advances the state of the art in selfie video stabilization by comparing it against alternative methods. The whole process runs very efficiently on a modern mobile phone (8.1 ms/frame).
