Frank Vetere

Directive Explanations for Actionable Explainability in Machine Learning Applications

Feb 03, 2021

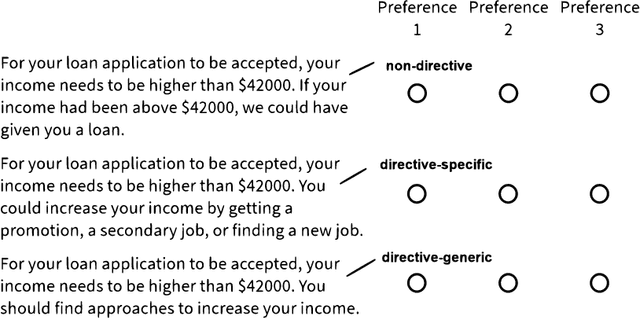

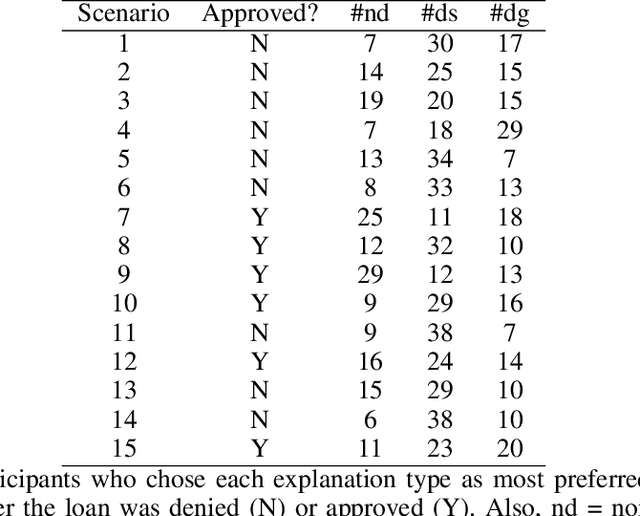

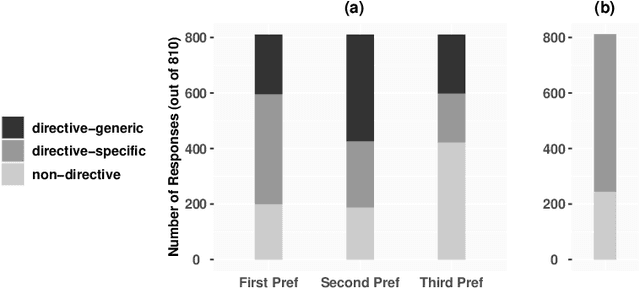

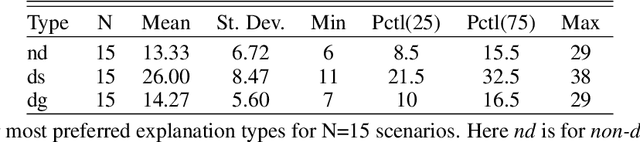

Abstract: This paper investigates the prospects of using directive explanations to assist people in achieving recourse against machine learning decisions. Directive explanations list the specific actions an individual needs to take to achieve their desired outcome. If a machine learning model makes a decision that is detrimental to an individual (e.g., denying a loan application), then it needs to explain both why it made that decision and how the individual could obtain their desired outcome (if possible). At present, this is often done using counterfactual explanations, but such explanations generally do not tell individuals how to act. We assert that counterfactual explanations can be improved by explicitly providing people with actions they could use to achieve their desired goal. This paper makes two contributions. First, we present the results of an online study investigating people's perception of directive explanations. Second, we propose a conceptual model to generate such explanations. Our online study showed a significant preference for directive explanations ($p<0.001$). However, the participants' preferred explanation type was affected by multiple factors, such as individual preferences, social factors, and the feasibility of the directives. Our findings highlight the need for a human-centred and context-specific approach for creating directive explanations.

Distal Explanations for Explainable Reinforcement Learning Agents

Jan 28, 2020

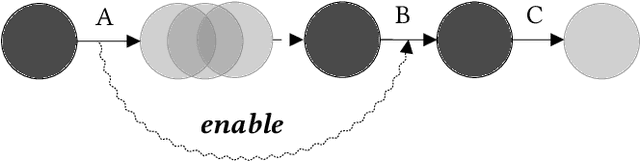

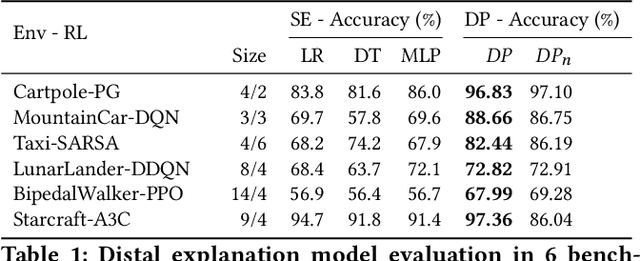

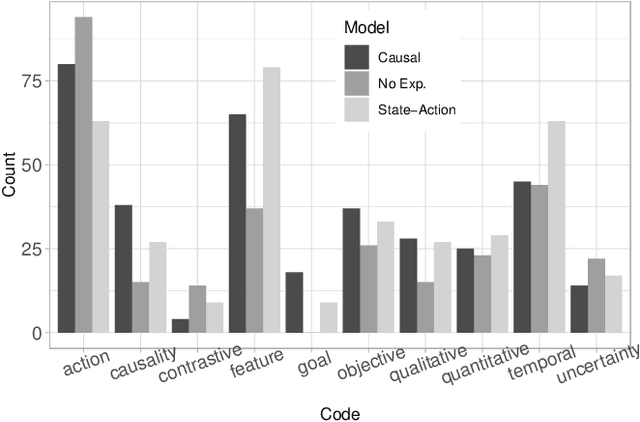

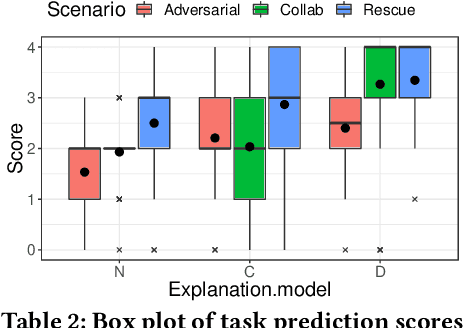

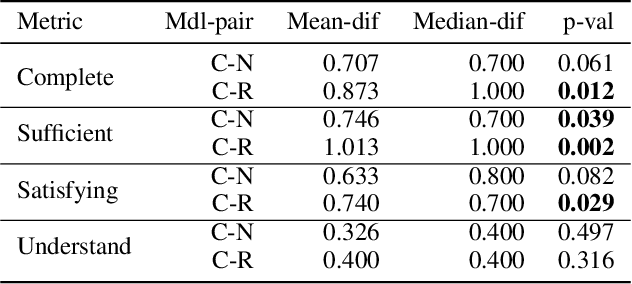

Abstract: Causal explanations present an intuitive way to understand the course of events through causal chains, and are widely accepted in cognitive science as the prominent model humans use for explanation. Importantly, causal models can generate opportunity chains, which take the form of 'A enables B and B causes C'. We ground the notion of opportunity chains in human-agent experimental data, where we present participants with explanations from different models and ask them to provide their own explanations for agent behaviour. Results indicate that humans do in fact use the concept of opportunity chains frequently for describing artificial agent behaviour. Recently, action influence models have been proposed to provide causal explanations for model-free reinforcement learning (RL). While these models can generate counterfactuals (things that did not happen but could have under different conditions), they lack the ability to generate explanations of opportunity chains. We introduce a distal explanation model that can analyse counterfactuals and opportunity chains using decision trees and causal models. We employ a recurrent neural network to learn opportunity chains and make use of decision trees to improve the accuracy of task prediction and the generated counterfactuals. We computationally evaluate the model in 6 RL benchmarks using different RL algorithms, and show that our model performs better in task prediction. We report on a study with 90 participants who receive explanations of RL agents' behaviour in solving three scenarios: 1) adversarial; 2) search and rescue; and 3) human-agent collaborative. We investigate the participants' understanding of the agent through task prediction and their subjective satisfaction with the explanations, and show that our distal explanation model results in improved outcomes over the three scenarios compared with two baseline explanation models.

Explainable Reinforcement Learning Through a Causal Lens

May 27, 2019

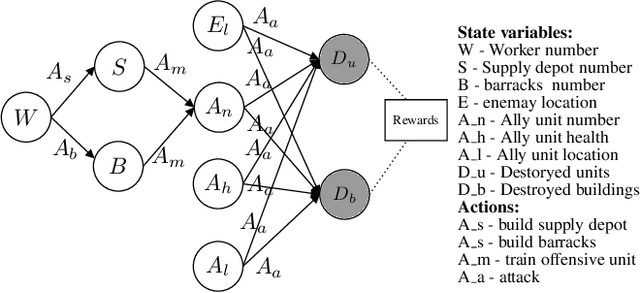

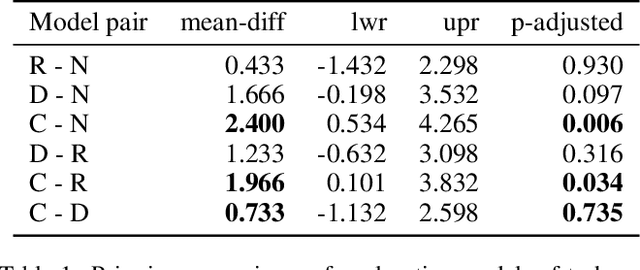

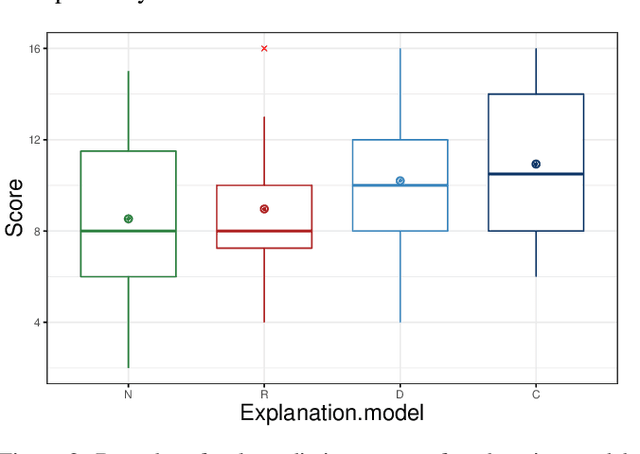

Abstract: Prevalent theories in cognitive science propose that humans understand and represent knowledge of the world through causal relationships. In making sense of the world, we build causal models in our mind to encode cause-effect relations of events and use these to explain why new events happen. In this paper, we use causal models to derive causal explanations of the behaviour of reinforcement learning agents. We present an approach that learns a structural causal model during reinforcement learning and encodes causal relationships between variables of interest. This model is then used to generate explanations of behaviour based on counterfactual analysis of the causal model. We report on a study with 120 participants who observe agents playing a real-time strategy game (Starcraft II) and then receive explanations of the agents' behaviour. We investigated: 1) participants' understanding gained from explanations, measured through task prediction; 2) explanation satisfaction; and 3) trust. Our results show that causal model explanations perform better on these measures compared to two other baseline explanation models.

A Grounded Interaction Protocol for Explainable Artificial Intelligence

Mar 05, 2019

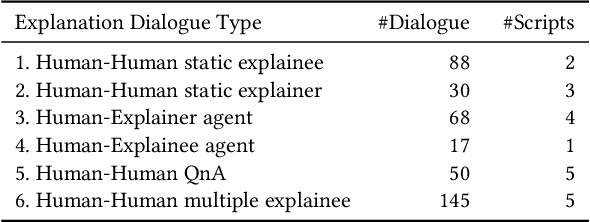

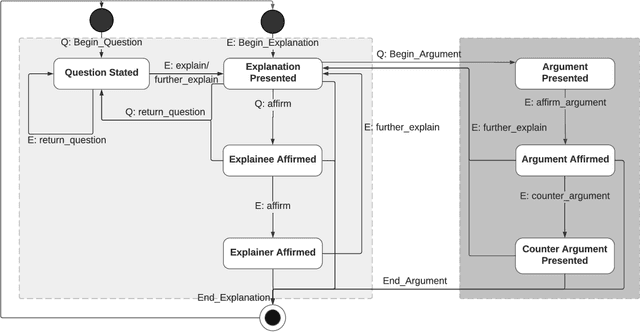

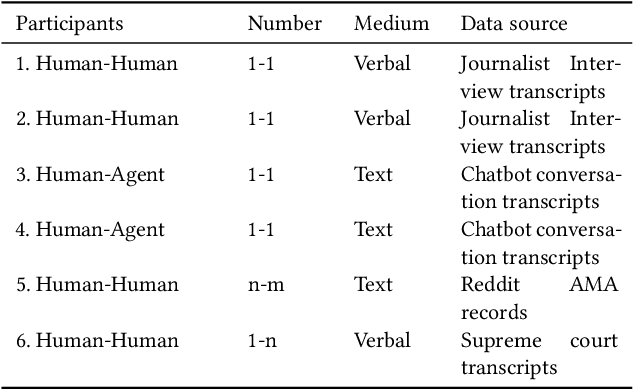

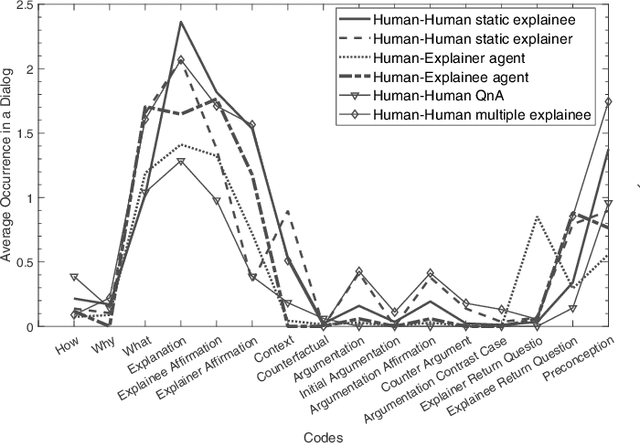

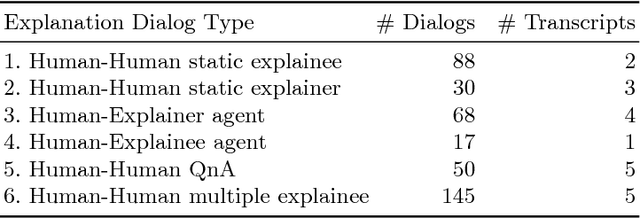

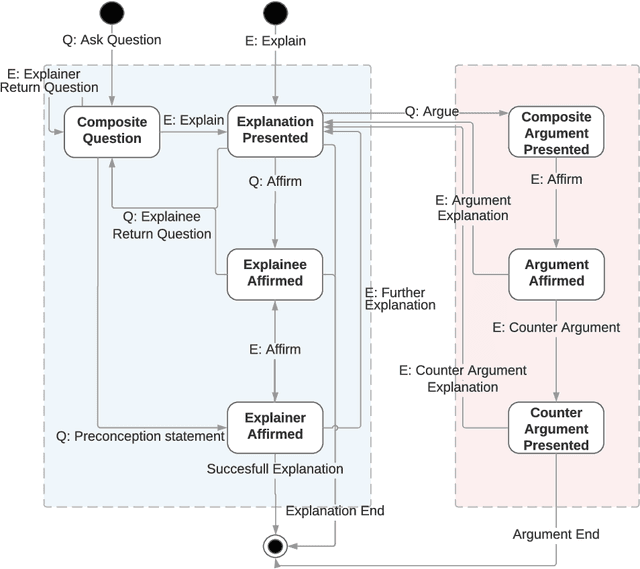

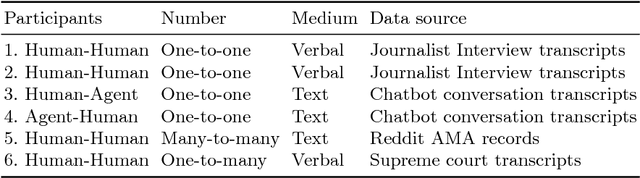

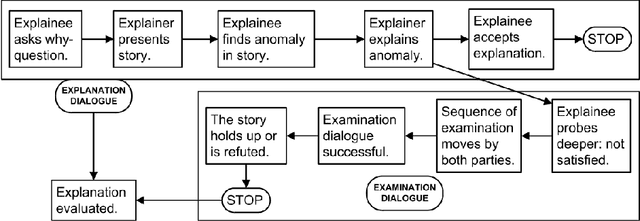

Abstract: Explainable Artificial Intelligence (XAI) systems need to include an explanation model to communicate their internal decisions, behaviours and actions to the humans interacting with them. Successful explanation involves both cognitive and social processes. In this paper we focus on the challenge of meaningful interaction between an explainer and an explainee and investigate the structural aspects of an interactive explanation to propose an interaction protocol. We follow a bottom-up approach to derive the model by analysing transcripts of different explanation dialogue types with 398 explanation dialogues. We use grounded theory to code and identify key components of an explanation dialogue. We formalize the model using the agent dialogue framework (ADF) as a new dialogue type and then evaluate it in a human-agent interaction study with 101 dialogues from 14 participants. Our results show that the proposed model can closely follow the explanation dialogues of human-agent conversations.

Interaction Design for Explainable AI: Workshop Proceedings

Dec 13, 2018

Abstract: As artificial intelligence (AI) systems become increasingly complex and ubiquitous, these systems will be responsible for making decisions that directly affect individuals and society as a whole. Such decisions will need to be justified due to ethical concerns as well as trust, but achieving this has become difficult due to the 'black-box' nature many AI models have adopted. Explainable AI (XAI) can potentially address this problem by explaining the actions, decisions and behaviours of the system to users. However, much research in XAI is done in a vacuum using only the researchers' intuition of what constitutes a 'good' explanation while ignoring the interaction and the human aspect. This workshop invites researchers in the HCI community and related fields to have a discourse about human-centred approaches to XAI rooted in interaction and to shed light and spark discussion on interaction design challenges in XAI.

Towards a Grounded Dialog Model for Explainable Artificial Intelligence

Jun 21, 2018

Abstract: To generate trust with their users, Explainable Artificial Intelligence (XAI) systems need to include an explanation model that can communicate their internal decisions, behaviours and actions to the humans interacting with them. Successful explanation involves both cognitive and social processes. In this paper we focus on the challenge of meaningful interaction between an explainer and an explainee and investigate the structural aspects of an explanation in order to propose a human explanation dialog model. We follow a bottom-up approach to derive the model by analysing transcripts of 398 different explanation dialog types. We use grounded theory to code and identify the key components of an explanation dialog. We carry out further analysis to identify the relationships between components, and the sequences and cycles that occur in a dialog. We present a generalized state model obtained from the analysis and compare it with an existing conceptual dialog model of explanation.
