Directive Explanations for Actionable Explainability in Machine Learning Applications

Feb 03, 2021

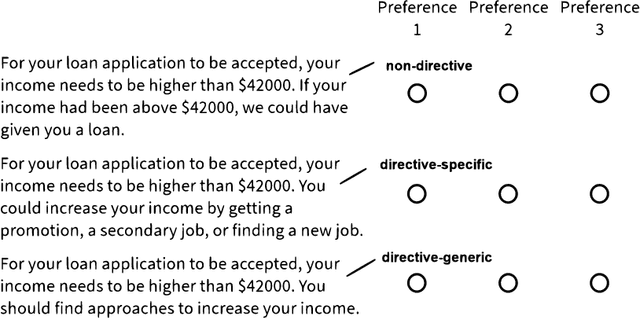

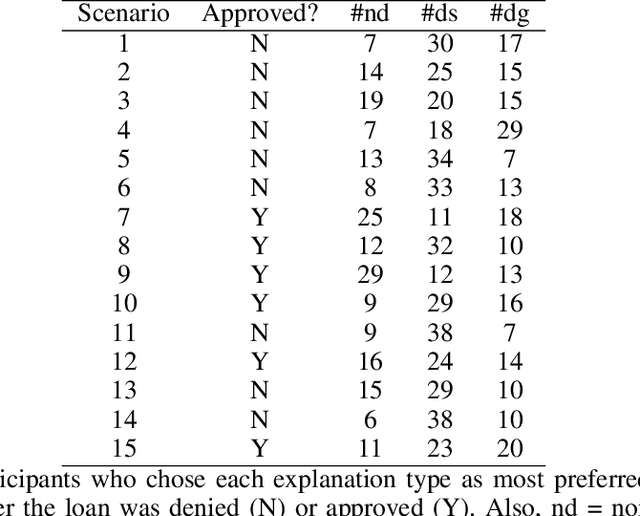

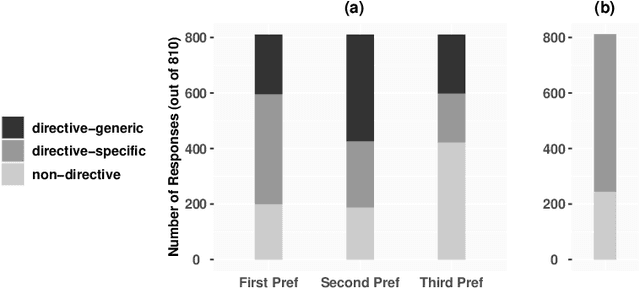

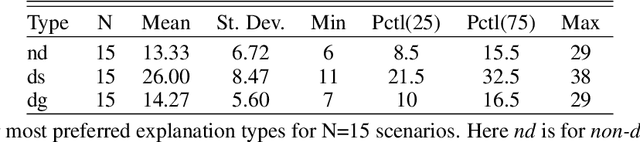

This paper investigates the prospects of using directive explanations to assist people in achieving recourse against machine learning decisions. Directive explanations list which specific actions an individual needs to take to achieve their desired outcome. If a machine learning model makes a decision that is detrimental to an individual (e.g., denying a loan application), then it needs to both explain why it made that decision and explain how the individual could obtain their desired outcome (if possible). At present, this is often done using counterfactual explanations, but such explanations generally do not tell individuals how to act. We assert that counterfactual explanations can be improved by explicitly providing people with actions they could use to achieve their desired goal. This paper makes two contributions. First, we present the results of an online study investigating people's perception of directive explanations. Second, we propose a conceptual model to generate such explanations. Our online study showed a significant preference for directive explanations ($p<0.001$). However, the participants' preferred explanation type was affected by multiple factors, such as individual preferences, social factors, and the feasibility of the directives. Our findings highlight the need for a human-centred and context-specific approach for creating directive explanations.
