Frank Sippel

Fast Edge-Aware Occlusion Detection in the Context of Multispectral Camera Arrays

Aug 26, 2024

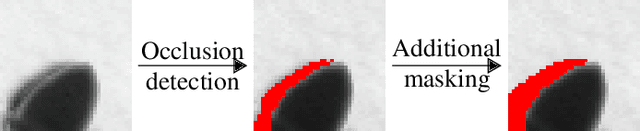

Abstract: Multispectral imaging is beneficial in diverse applications, such as healthcare and agriculture, since it can capture absorption bands of molecules in different spectral areas. A promising approach for multispectral snapshot imaging is the use of camera arrays. Image processing is necessary to warp all views to the same view in order to retrieve a consistent multispectral datacube. This process is also called multispectral image registration. After a cross spectral disparity estimation, an occlusion detection is required to find the pixels that were not recorded by the peripheral cameras. In this paper, a novel fast edge-aware occlusion detection is presented, which is shown to reduce the runtime by at least a factor of 12. Moreover, an evaluation on ground truth data reveals better performance in terms of precision and recall. Finally, the quality of a final multispectral datacube can be improved by more than 1.5 dB in terms of PSNR as well as in terms of SSIM in an existing multispectral registration pipeline. The source code is available at https://github.com/FAU-LMS/fast-occlusion-detection.

High-Resolution Hyperspectral Video Imaging Using A Hexagonal Camera Array

Jul 12, 2024

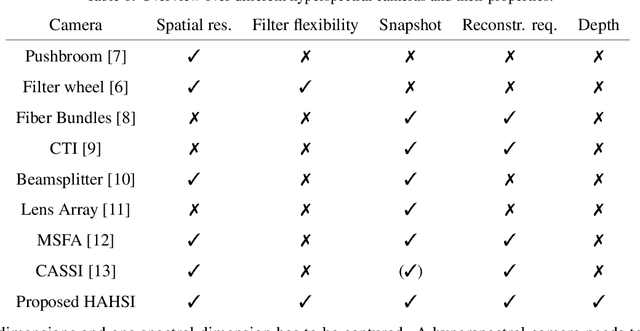

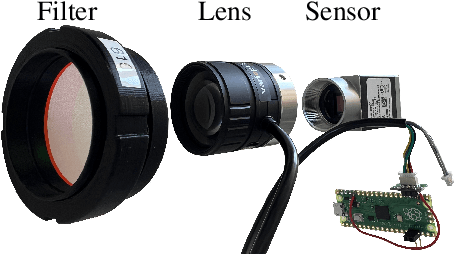

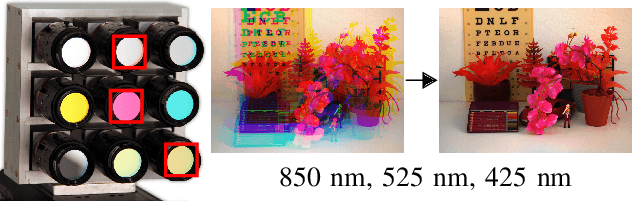

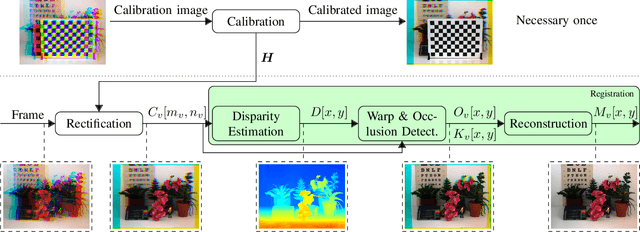

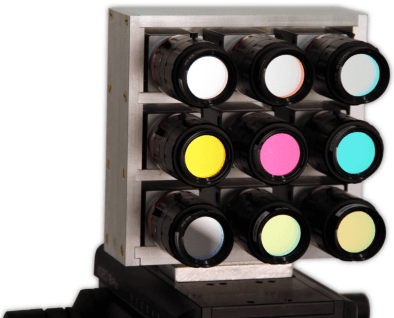

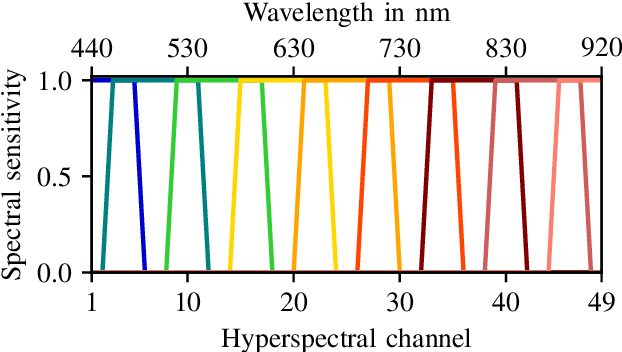

Abstract: Retrieving the reflectance spectrum from objects is an essential task for many classification and detection problems, since many materials and processes have a unique spectral behaviour. In many cases, it is highly desirable to capture hyperspectral images due to the high spectral flexibility. Often, it is even necessary to capture hyperspectral videos, or at least to record a hyperspectral image at once (also called snapshot hyperspectral imaging), to avoid spectral smearing. For this task, a high-resolution snapshot hyperspectral camera array using a hexagonal shape is introduced. The hexagonal array for hyperspectral imaging uses off-the-shelf hardware, which enables high flexibility regarding the employed cameras, lenses and filters. Hence, the spectral range can be easily varied by mounting a different set of filters. Moreover, the concept of using off-the-shelf hardware enables low prices in comparison to other approaches with highly specialized hardware. Since classical industrial cameras are used in this hyperspectral camera array, the spatial and temporal resolution is very high, while recording 37 hyperspectral channels in the range from 400 nm to 760 nm in 10 nm steps. A registration process is required for near-field imaging, which maps the peripheral camera views to the center view. It is shown that this combination of a hyperspectral camera array and the corresponding image registration pipeline is superior to other popular snapshot approaches. For this evaluation, a synthetic hyperspectral database is rendered. On the synthetic data, the novel approach outperforms its best competitor by more than 3 dB in reconstruction quality. This synthetic data is also used to show the superiority of the hexagonal shape in comparison to an orthogonally spaced one. Moreover, a real-world high-resolution hyperspectral video database is provided.
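
The channel count stated above can be checked with a few lines of Python. This is only an arithmetic sketch: the variable names are illustrative, and the remark on the array layout assumes one camera per channel, which the abstract does not state explicitly.

```python
# Centre wavelengths implied by the abstract: 400 nm to 760 nm in 10 nm steps.
wavelengths_nm = list(range(400, 761, 10))
num_channels = len(wavelengths_nm)  # (760 - 400) / 10 + 1


def centered_hexagonal_number(rings: int) -> int:
    """Number of points in a hexagonal grid with one centre and `rings` rings around it."""
    return 1 + 3 * rings * (rings + 1)


# Assumption: one camera per channel. Under it, the channel count would match
# a centre camera surrounded by three complete hexagonal rings (1 + 6 + 12 + 18).
cameras_for_three_rings = centered_hexagonal_number(3)
```

Both `num_channels` and `cameras_for_three_rings` evaluate to 37, which is consistent with the 37 channels named in the abstract.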

Multispectral Snapshot Image Registration Using Learned Cross Spectral Disparity Estimation and a Deep Guided Occlusion Reconstruction Network

Jun 17, 2024

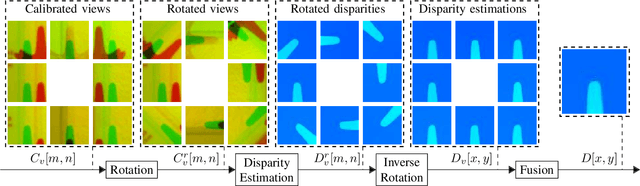

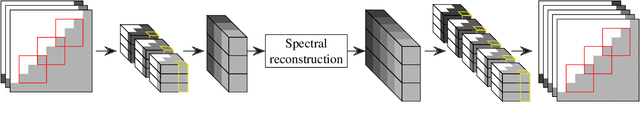

Abstract: Multispectral imaging aims at recording images in different spectral bands. This is extremely beneficial in diverse discrimination applications, for example in agriculture, recycling or healthcare. One approach for snapshot multispectral imaging, which is capable of recording multispectral videos, is to use camera arrays, where each camera records a different spectral band. Since the cameras are at different spatial positions, a registration procedure is necessary to map every camera to the same view. In this paper, we present a multispectral snapshot image registration with three novel components. First, a cross spectral disparity estimation network is introduced, which is trained on a popular stereo database using pseudo spectral data augmentation. Subsequently, this disparity estimation is used to accurately detect occlusions by warping the disparity map in a layer-wise manner. Finally, these detected occlusions are reconstructed by a learned deep guided neural network, which leverages the structure from other spectral components. It is shown that each element of this registration process as well as the final result is superior to the current state of the art. In terms of PSNR, our registration achieves an improvement of over 3 dB. At the same time, the runtime is decreased by a factor of over 3 on a CPU. Additionally, the registration is executable on a GPU, where the runtime can be decreased by a factor of 111. The source code and the data are available at https://github.com/FAU-LMS/MSIR.
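
To make the idea of disparity-based occlusion detection concrete, the following is a minimal sketch for a single image row. It is not the layer-wise warping described in the abstract, but a plain z-buffer check: every center-view pixel is shifted by its disparity, and a pixel is flagged when a closer pixel lands on the same target column or when it leaves the image. The function name, the shift direction (`x - d`) and the `tolerance` parameter are assumptions made for illustration.

```python
def detect_occlusions(disparity_row, tolerance=0.5):
    """Flag center-view pixels of one row that a horizontally shifted camera cannot see.

    disparity_row: non-negative disparities in pixels (larger means closer).
    Assumption: a center pixel at column x appears at column x - d in the other view.
    """
    width = len(disparity_row)
    # Largest disparity (closest surface) that lands on each target column.
    nearest = [float("-inf")] * width
    targets = []
    for x, d in enumerate(disparity_row):
        t = round(x - d)
        targets.append(t)
        if 0 <= t < width:
            nearest[t] = max(nearest[t], d)

    occluded = []
    for d, t in zip(disparity_row, targets):
        outside = not (0 <= t < width)  # falls outside the other camera's image
        hidden = (not outside) and d < nearest[t] - tolerance  # covered by a closer pixel
        occluded.append(outside or hidden)
    return occluded


# Background at disparity 0 with a foreground object (disparity 2) at columns 4 and 5.
mask = detect_occlusions([0, 0, 0, 0, 2, 2, 0, 0])
```

In this example, the background pixels at columns 2 and 3 are flagged, because the foreground object is shifted on top of them in the other view.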

A Guided Upsampling Network for Short Wave Infrared Images Using Graph Regularization

Dec 14, 2023

Abstract: Exploiting the infrared area of the spectrum for classification problems is getting increasingly popular, because many materials have characteristic absorption bands in this area. However, sensors in the short wave infrared (SWIR) area and at even higher wavelengths have a very low spatial resolution in comparison to classical cameras that operate in the visible wavelength area. Thus, in this paper an upsampling method for SWIR images guided by a visible image is presented. For that, the proposed guided upsampling network (GUNet) uses a graph-regularized optimization problem based on learned affinities. The evaluation is based on a novel synthetic near-field visible-SWIR stereo database. Different guided upsampling methods are evaluated, which shows an improvement of nearly 1 dB on this database for the proposed upsampling method in comparison to the second-best guided upsampling network. Furthermore, a visual example of an upsampled SWIR image of a real-world scene is depicted to show real-world applicability.

Color Agnostic Cross-Spectral Disparity Estimation

Dec 14, 2023

Abstract: Since camera modules have become more and more affordable, multispectral camera arrays have found their way from special applications to the mass market, e.g., in automotive systems, smartphones, or drones. Due to the multiple modalities, the registration of different viewpoints and the required cross-spectral disparity estimation remain extremely challenging. To overcome this problem, we introduce a novel spectral image synthesis in combination with a color agnostic transform. Thus, any recently published stereo matching network can be turned into a cross-spectral disparity estimator. Our novel algorithm requires only RGB stereo data to train a cross-spectral disparity estimator, and a generalization from artificial training data to camera-captured images is obtained. The theoretical examination of the novel color agnostic method is completed by an extensive evaluation against the state of the art, including self-recorded multispectral data and a reference implementation. The novel color agnostic disparity estimation improves cross-spectral as well as conventional color stereo matching by reducing the average end-point error by 41% for cross-spectral and by 22% for mono-modal content, respectively.

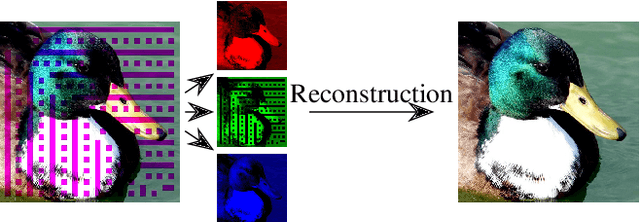

Cross Spectral Image Reconstruction Using a Deep Guided Neural Network

Jun 27, 2023

Abstract: Cross spectral camera arrays, where each camera records different spectral content, are becoming increasingly popular for RGB, multispectral and hyperspectral imaging, since they are capable of a high resolution in every dimension using off-the-shelf hardware. For these, it is necessary to build an image processing pipeline to calculate a consistent image data cube, i.e., it should look as if every camera records the scene from the position of the center camera. Since the cameras record the scene from different angles, this pipeline needs a reconstruction component for pixels that are not visible to peripheral cameras. For that, a novel deep guided neural network (DGNet) is presented. Since only little cross spectral data is available for training, this neural network is highly regularized. Furthermore, a new data augmentation process is introduced to generate the cross spectral content. On synthetic and real multispectral camera array data, the proposed network outperforms the state of the art by up to 2 dB in terms of PSNR on average. Besides, DGNet also tops its best competitor in terms of SSIM as well as in runtime by a factor of nearly 12. Moreover, a qualitative evaluation reveals visually more appealing results for real camera array data.

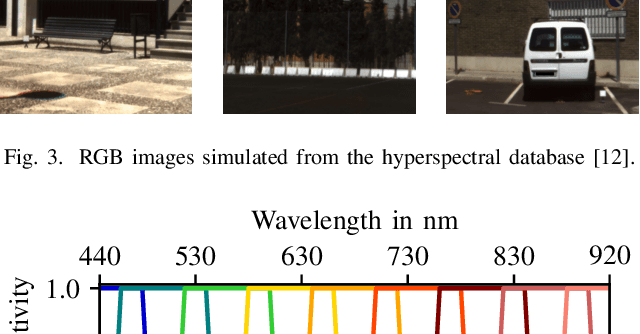

A Synthetic Hyperspectral Array Video Database with Applications to Cross-Spectral Reconstruction and Hyperspectral Video Coding

Jan 19, 2023

Abstract: In this paper, a synthetic hyperspectral video database is introduced. Since it is impossible to record ground truth hyperspectral videos, this database offers the possibility to leverage the evaluation of algorithms in diverse applications. For all scenes, depth maps are provided as well to yield the position of a pixel in all spatial dimensions as well as the reflectance in the spectral dimension. Two novel algorithms for two different applications are proposed to prove the diversity of applications that can be addressed by this novel database. First, a cross-spectral image reconstruction algorithm is extended to exploit the temporal correlation between two consecutive frames. The evaluation using this hyperspectral database shows an increase in PSNR of up to 5.6 dB depending on the scene. Second, a hyperspectral video coder is introduced which extends an existing hyperspectral image coder by exploiting temporal correlation. The evaluation shows rate savings of up to 10% depending on the scene. The novel hyperspectral video database and source code are available at https://github.com/FAU-LMS/HyViD for use by the research community.

Optimal Filter Selection for Multispectral Object Classification Using Fast Binary Search

Sep 16, 2022

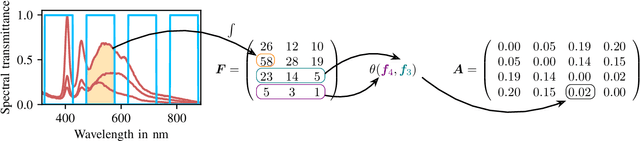

Abstract: When designing multispectral imaging systems for classifying different spectra, it is necessary to choose a small number of filters from a set of several hundred different ones. Tackling this problem by full search leads to a tremendous number of possibilities to check and is NP-hard. In this paper, we introduce a novel fast binary search for optimal filter selection that guarantees a minimum distance metric between the different spectra to classify. In our experiments, this procedure reaches the same optimal solution as full search at much lower complexity. The desired number of filters influences the full search in factorial order, while the fast binary search stays constant. Thus, fast binary search makes it possible to find the optimal solution among all combinations in an adequate amount of time and avoids prevailing heuristics. Moreover, our fast binary search algorithm outperforms other filter selection techniques in terms of misclassified spectra in a real-world classification problem.
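
The size of the full search space mentioned above can be illustrated by counting filter combinations. This sketch only counts candidates and does not implement the fast binary search from the paper. The pool size of 300 filters is a hypothetical value standing in for "several hundred".

```python
import math


def full_search_candidates(num_available: int, num_selected: int) -> int:
    """Number of distinct filter subsets a full search would have to evaluate."""
    return math.comb(num_available, num_selected)


# Hypothetical pool of 300 filters; the abstract only says "several hundred".
POOL_SIZE = 300
growth = {k: full_search_candidates(POOL_SIZE, k) for k in range(1, 7)}

for k, count in growth.items():
    print(f"{k} filters out of {POOL_SIZE}: {count:,} combinations")
```

Each additional filter multiplies the number of candidate subsets by roughly two orders of magnitude for a pool of this size, which is why an exhaustive search quickly becomes impractical.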

Hyperspectral Image Reconstruction from Multispectral Images Using Non-Local Filtering

Sep 16, 2022

Abstract: Using light spectra is an essential element in many applications, for example, in material classification. Often this information is acquired by using a hyperspectral camera. Unfortunately, these cameras have some major disadvantages, such as not being able to record videos. Therefore, multispectral cameras with wide-band filters are used, which are much cheaper and are often able to capture videos. However, using multispectral cameras requires an additional reconstruction step to yield spectral information. Usually, this reconstruction step has to be done in the presence of imaging noise, which degrades the reconstructed spectra severely. Typically, the same or similar pixels are found across the image, with the advantage of having independent noise. In contrast to state-of-the-art spectral reconstruction methods, which only exploit neighboring pixels by block-based processing, this paper introduces non-local filtering in spectral reconstruction. First, a block-matching procedure finds similar non-local multispectral blocks. Thereafter, the hyperspectral pixels are reconstructed by filtering the matched multispectral pixels collaboratively using a reconstruction Wiener filter. The proposed novel procedure even works under very strong noise. The method is able to lower the spectral angle by up to 18% and increase the peak signal-to-noise ratio by up to 1.1 dB in noisy scenarios compared to state-of-the-art methods. Moreover, the visual results are much more appealing.
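
For readers unfamiliar with the two quality measures named above, the following sketch shows their standard textbook definitions for flat lists of values. The function names and the default `peak=1.0` (values normalized to the range 0 to 1) are assumptions. The exact evaluation protocol of the paper is not given in the abstract.

```python
import math


def spectral_angle(reference, estimate):
    """Angle in radians between two spectra treated as vectors (0 means identical shape)."""
    dot = sum(r * e for r, e in zip(reference, estimate))
    norm_ref = math.sqrt(sum(r * r for r in reference))
    norm_est = math.sqrt(sum(e * e for e in estimate))
    # Clamp to [-1, 1] to guard against rounding errors before calling acos.
    cosine = max(-1.0, min(1.0, dot / (norm_ref * norm_est)))
    return math.acos(cosine)


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB for values in the range [0, peak]."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf
    return 10 * math.log10(peak ** 2 / mse)
```

The spectral angle ignores a global scaling of the spectrum, so it measures the spectral shape, while PSNR penalizes any absolute deviation. Reporting both therefore gives complementary views of reconstruction quality.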

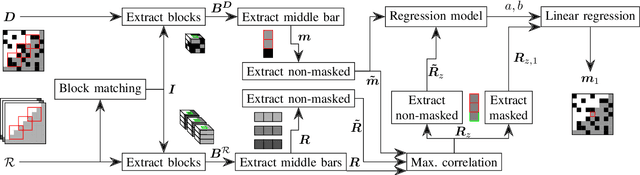

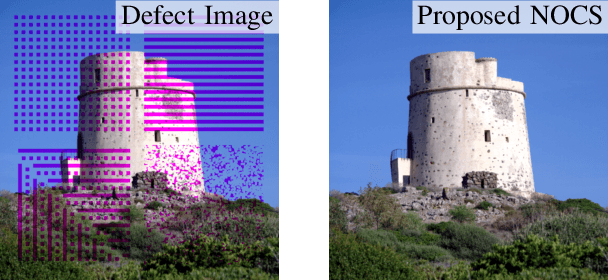

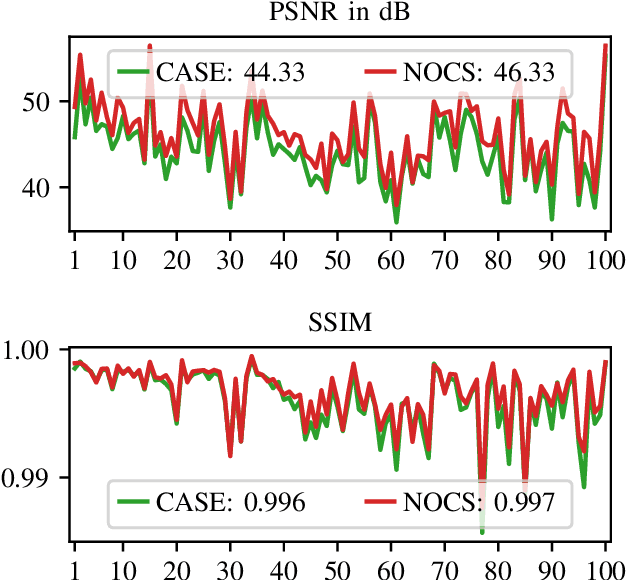

Spatio-spectral Image Reconstruction Using Non-local Filtering

Sep 16, 2022

Abstract: In many image processing tasks, pixels or blocks of pixels are missing or lost in only some channels. For example, during defective transmissions of RGB images, it may happen that one or more blocks in one color channel are lost. Nearly all modern applications in image processing and transmission use at least three color channels, and some applications employ even more bands, for example in the infrared and ultraviolet area of the light spectrum. Typically, only some pixels and blocks in a subset of color channels are distorted. Thus, other channels can be used to reconstruct the missing pixels, which is called spatio-spectral reconstruction. Current state-of-the-art methods rely purely on the local neighborhood, which works well for homogeneous regions. However, in high-frequency regions like edges or textures, these methods fail to properly model the relationship between color bands. Hence, this paper introduces non-local filtering for building a linear regression model that describes the inter-band relationship and is used to reconstruct the missing pixels. Our novel method is able to increase the PSNR on average by 2 dB and yields visually much more appealing images in high-frequency regions.
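
The inter-band linear regression idea can be sketched in a few lines. This is a deliberately simplified illustration, not the non-local method of the paper: it fits a single line between an intact guide channel and the known pixels of the damaged channel by ordinary least squares, then predicts the missing pixels. The function names and the use of `None` for missing pixels are assumptions.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        # Flat guide signal: fall back to the mean of the known values.
        return 0.0, mean_y
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x


def reconstruct_channel(guide, damaged):
    """Fill missing pixels (None) in `damaged` using the intact `guide` channel."""
    known = [(g, d) for g, d in zip(guide, damaged) if d is not None]
    slope, intercept = fit_line([g for g, _ in known], [d for _, d in known])
    return [d if d is not None else slope * g + intercept
            for g, d in zip(guide, damaged)]


# Toy example: the damaged channel follows 2 * guide + 1, one pixel is lost.
restored = reconstruct_channel([1, 2, 3, 4], [3, 5, None, 9])
```

In the toy example, the lost pixel is restored to approximately 7. In practice, the choice of pixels used for the fit matters most, and the abstract's contribution is to select them non-locally instead of from the immediate neighborhood only.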
