Francesco Fraternali

ACES -- Automatic Configuration of Energy Harvesting Sensors with Reinforcement Learning

Sep 04, 2019

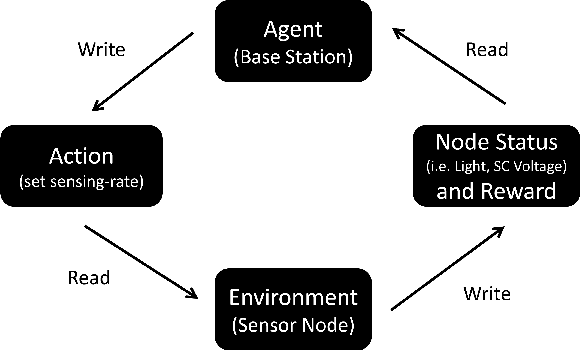

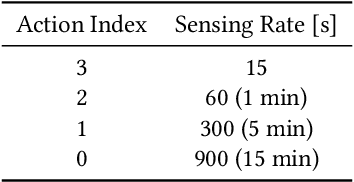

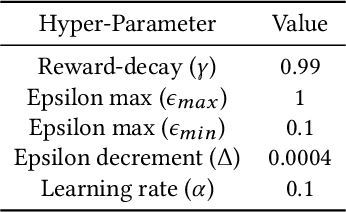

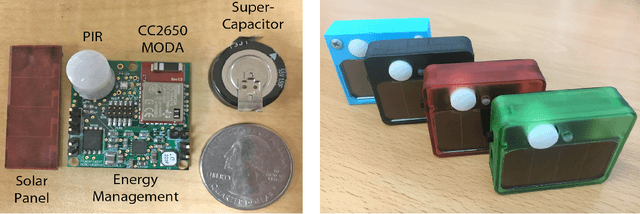

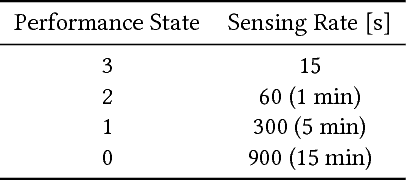

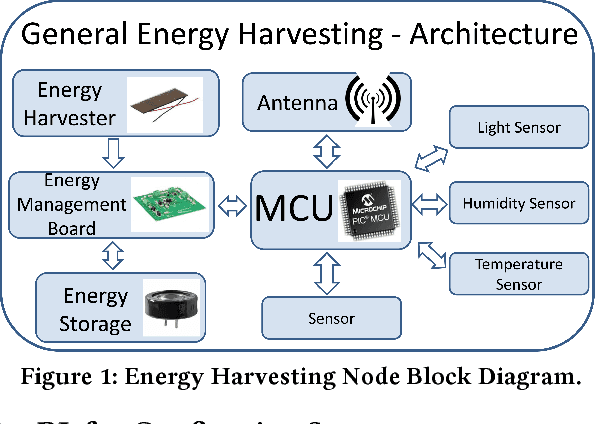

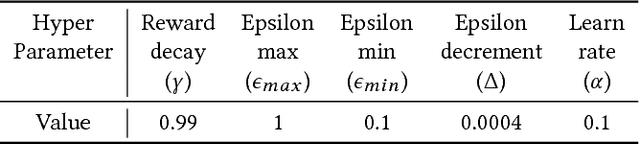

Abstract: The Internet of Things forms the backbone of modern building applications. Wireless sensors are increasingly adopted for their flexibility and reduced deployment cost. However, most wireless sensors today are battery-powered, and manual battery replacement inhibits large deployments. Energy harvesting sensors provide an attractive alternative, but they must deliver adequate quality of service to applications despite uncertain energy availability. We propose using reinforcement learning to optimize the operation of energy harvesting sensors so as to maximize sensing quality with the available energy. We present ACES, a system that uses reinforcement learning for periodic and event-driven sensing indoors with ambient light energy harvesting. Our custom-built board uses a supercapacitor to store energy temporarily, senses light and motion events, and relays them using Bluetooth Low Energy. Using simulations and real deployments, we show that our sensor nodes adapt to their lighting conditions and continuously send measurements and events across nights and weekends. We use deployment data to continually adapt sensing to changing environmental patterns, and transfer learning to reduce the training time in real deployments. In our 60-node deployment lasting two weeks, we observe a dead time of 0.1%. The periodic sensors that measure luminosity have a mean sampling period of 90 seconds, and the event sensors that detect motion with a passive infrared (PIR) sensor captured 86% of the events on average compared to a battery-powered node.

Resource-Efficient Computing in Wearable Systems

Jul 07, 2019

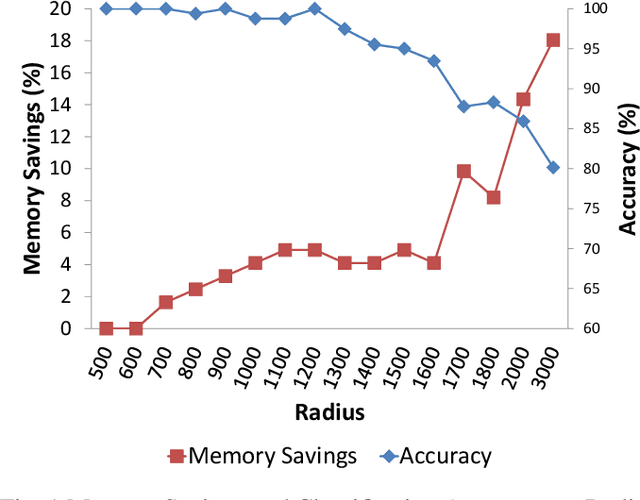

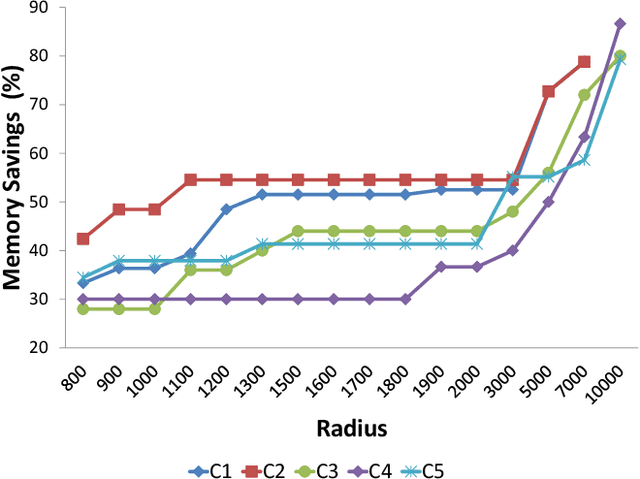

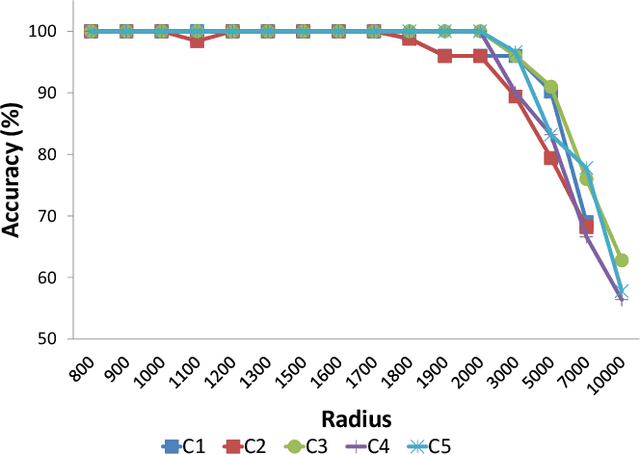

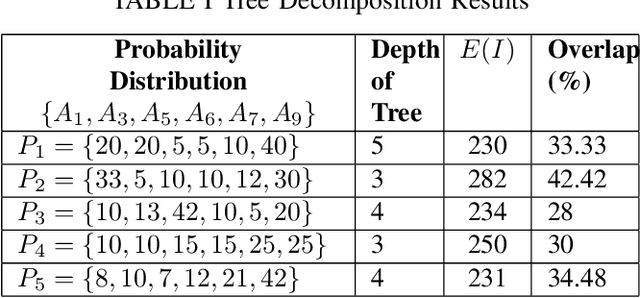

Abstract: We propose two optimization techniques that minimize memory usage and computation while meeting system timing constraints for real-time classification in wearable systems. The first derives a hierarchical classifier structure for Support Vector Machines (SVMs) from the probability distribution of output class occurrences, in order to reduce the amount of computation. The second is a memory optimization technique based on SVM parameters, which stores fewer support vectors and therefore requires less memory. To demonstrate the efficiency of the proposed techniques, we performed an activity recognition experiment and saved up to 35% and 56% of memory storage when classifying 14 and 6 different activities, respectively. In addition, we demonstrated a trade-off between classification accuracy and memory savings, which can be controlled according to application requirements.
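To illustrate why class frequencies can reduce computation, here is a minimal sketch that assumes the hierarchy is a simple one-vs-rest cascade with one binary classifier per stage. The abstract does not specify the actual structure, so the cascade, the function names, and the example priors are all hypothetical.

```python
def expected_evaluations(priors):
    """Expected number of binary classifiers evaluated per sample.

    Assumes a hypothetical one-vs-rest cascade: stage i tests for the class
    with prior priors[i] and stops on a match. The last class needs no
    classifier of its own because it is the fall-through case.
    """
    n = len(priors)
    total = 0.0
    for stage, p in enumerate(priors):
        # A sample of the class at this stage is resolved after stage + 1
        # evaluations, capped at n - 1 for the fall-through class.
        total += p * min(stage + 1, n - 1)
    return total


def order_by_prior(priors):
    """Place the most frequent classes first in the cascade."""
    return sorted(priors, reverse=True)


# Illustrative class priors only; they are not taken from the paper.
example_priors = [0.1, 0.2, 0.7]
print(expected_evaluations(example_priors))
print(expected_evaluations(order_by_prior(example_priors)))
```

With these illustrative priors, testing the most frequent class first lowers the expected cost from about 1.9 to about 1.3 classifier evaluations per sample.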

Scaling Configuration of Energy Harvesting Sensors with Reinforcement Learning

Nov 27, 2018

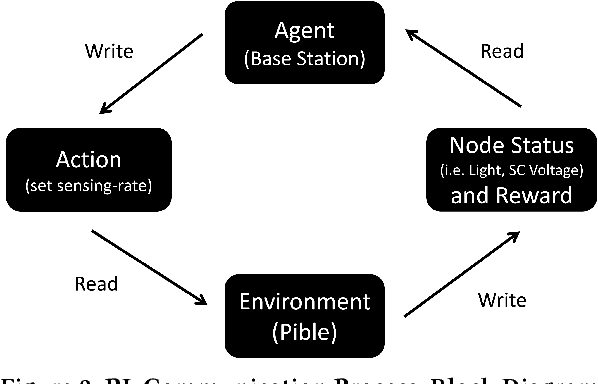

Abstract: With the advent of the Internet of Things (IoT), an increasing number of energy harvesting methods are being used to supplement or supplant battery-based sensors. Energy harvesting sensors need to be configured according to the application, hardware, and environmental conditions to maximize their usefulness. Today, sensor configuration is either manual or heuristic-based and requires valuable domain expertise. Reinforcement learning (RL) is a promising approach to automate configuration and efficiently scale IoT deployments, but it is not yet adopted in practice. We propose solutions to bridge this gap: we shorten the training phase of RL so that nodes are operational within a short time after deployment, and we reduce the computational requirements so that the approach scales to large deployments. We focus on configuring the sampling rate of indoor energy harvesting sensors powered by solar panels. We created a simulator based on 3 months of data collected from 5 sensor nodes subject to different lighting conditions. Our simulation results show that RL can effectively learn energy availability patterns and configure the sampling rate of the sensor nodes to maximize the amount of sensed data while ensuring that energy storage is not depleted. With our methods, the nodes can be operational within the first day. We also show that the number of RL policies can be reduced by using a single policy for nodes that share similar lighting conditions.

* 7 pages, 5 figures
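The sampling-rate configuration problem in the abstract above can be sketched with tabular Q-learning on a toy energy model. This is an illustration under stated assumptions, not the authors' simulator or algorithm: the abstract does not name the RL method, and every constant below (candidate periods, energy costs, storage capacity, lit hours, penalty) is hypothetical.

```python
import random

# All constants are hypothetical and chosen only for illustration.
PERIODS = [60, 300, 900]   # candidate sampling periods in seconds
COST = [4, 2, 1]           # energy units spent per hour at each period
CAPACITY = 12              # storage capacity in energy units
HARVEST = 3                # energy units harvested per lit hour
PENALTY = -100.0           # reward when the node runs out of energy


def is_lit(hour):
    """Toy lighting schedule: lights are on from 06:00 to 20:00."""
    return 6 <= hour < 20


def step(level, hour, action):
    """Advance one hour; returns (next_level, next_hour, reward)."""
    level = min(CAPACITY, level + (HARVEST if is_lit(hour) else 0))
    next_hour = (hour + 1) % 24
    if COST[action] > level:
        # Not enough stored energy: the node is dead for this hour.
        return 0, next_hour, PENALTY
    # Reward is the number of samples taken during the hour.
    return level - COST[action], next_hour, 3600 / PERIODS[action]


def train(episodes=2000, days=3, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Learn a Q-table indexed as q[level][hour][action]."""
    rng = random.Random(seed)
    n_actions = len(PERIODS)
    q = [[[0.0] * n_actions for _ in range(24)] for _ in range(CAPACITY + 1)]
    for _ in range(episodes):
        level, hour = rng.randint(0, CAPACITY), 0
        for _ in range(24 * days):
            if rng.random() < epsilon:
                action = rng.randrange(n_actions)       # explore
            else:
                values = q[level][hour]
                action = values.index(max(values))      # exploit
            next_level, next_hour, reward = step(level, hour, action)
            target = reward + gamma * max(q[next_level][next_hour])
            q[level][hour][action] += alpha * (target - q[level][hour][action])
            level, hour = next_level, next_hour
    return q


def policy(q, level, hour):
    """Greedy sampling period (seconds) for a given storage level and hour."""
    values = q[level][hour]
    return PERIODS[values.index(max(values))]


q_table = train()
print(policy(q_table, level=CAPACITY, hour=12))
print(policy(q_table, level=2, hour=22))
```

The reward mirrors the stated objective: more samples per hour are better, and a depleted store is penalized heavily. Sharing one policy among nodes with similar lighting would correspond to reusing a single `q_table` across those nodes.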
