ACES -- Automatic Configuration of Energy Harvesting Sensors with Reinforcement Learning

Paper and Code

Sep 04, 2019

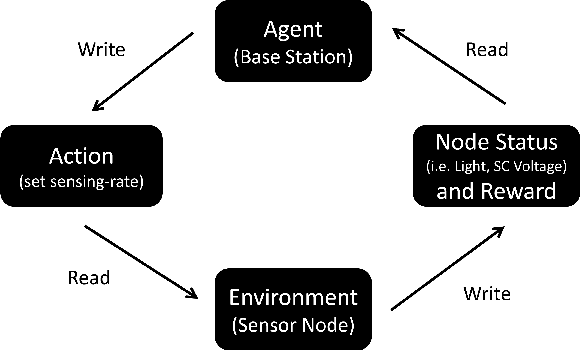

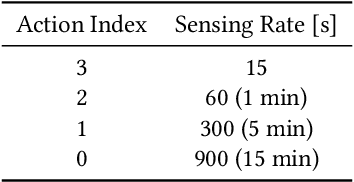

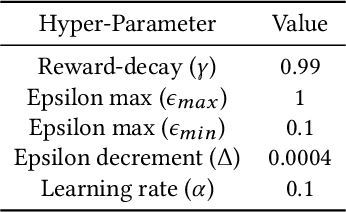

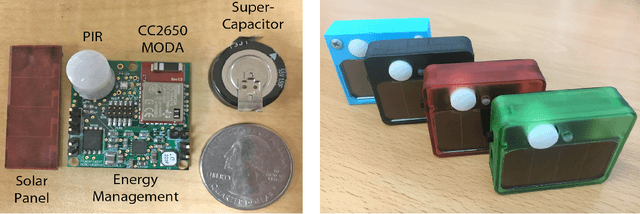

Internet of Things forms the backbone of modern building applications. Wireless sensors are being increasingly adopted for their flexibility and reduced cost of deployment. However, most wireless sensors are powered by batteries today and large deployments are inhibited by manual battery replacement. Energy harvesting sensors provide an attractive alternative, but they need to provide adequate quality of service to applications given uncertain energy availability. We propose using reinforcement learning to optimize the operation of energy harvesting sensors to maximize sensing quality with available energy. We present our system ACES that uses reinforcement learning for periodic and event-driven sensing indoors with ambient light energy harvesting. Our custom-built board uses a supercapacitor to store energy temporarily, senses light, motion events and relays them using Bluetooth Low Energy. Using simulations and real deployments, we show that our sensor nodes adapt to their lighting conditions and continuously sends measurements and events across nights and weekends. We use deployment data to continually adapt sensing to changing environmental patterns and transfer learning to reduce the training time in real deployments. In our 60 node deployment lasting two weeks, we observe a dead time of 0.1%. The periodic sensors that measure luminosity have a mean sampling period of 90 seconds and the event sensors that detect motion with PIR captured 86% of the events on average compared to a battery-powered node.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge