Florian Sobieczky

Reinforcement Learning for Accelerated Aerodynamic Shape Optimisation

Jul 23, 2025

Abstract: We introduce a reinforcement learning (RL) based adaptive optimization algorithm for aerodynamic shape optimization that focuses on dimensionality reduction. RL is applied here in the form of a surrogate-based, actor-critic policy-evaluation MCMC approach that allows some of the parameters to be optimized to be temporarily 'frozen'. The goals are to minimize computational effort and to use the observed optimization results to interpret the discovered extrema in terms of their role in achieving the desired flow field. By a sequence of locally optimized parameter changes around intermediate CFD simulations that act as ground truth, the global optimization can be sped up if (a) the local neighbourhoods in which the changed parameters must reside are large enough to compete with the steps of a grid search and its large number of simulations, and (b) the estimates of the rewards and costs on these neighbourhoods, on which a good step-wise parameter adaptation depends, are sufficiently accurate. We give an example of a simple fluid-dynamical problem on which the method allows interpretation in the sense of a feature importance scoring.
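The idea of temporarily 'freezing' parameters can be illustrated with a plain random-walk Metropolis search in which a boolean mask decides which parameters may move. This is a minimal sketch under stated assumptions, not the actor-critic surrogate method described above: the cheap quadratic cost stands in for a CFD evaluation, and `masked_metropolis`, `active`, `step` and `temperature` are hypothetical names.

```python
import numpy as np


def masked_metropolis(objective, x0, active, n_steps=2000, step=0.1,
                      temperature=0.01, seed=0):
    """Random-walk Metropolis minimisation that only perturbs `active` parameters.

    Hypothetical sketch: parameters with active[i] == False stay 'frozen' at x0[i].
    """
    rng = np.random.default_rng(seed)
    active = np.asarray(active, dtype=bool)
    x = np.asarray(x0, dtype=float).copy()
    fx = objective(x)
    best_x, best_f = x.copy(), fx
    for _ in range(n_steps):
        proposal = x.copy()
        # Perturb only the unfrozen coordinates.
        proposal[active] += step * rng.standard_normal(int(active.sum()))
        fp = objective(proposal)
        # Metropolis acceptance for the target density exp(-objective / temperature).
        if fp <= fx or rng.random() < np.exp((fx - fp) / temperature):
            x, fx = proposal, fp
            if fx < best_f:
                best_x, best_f = x.copy(), fx
    return best_x, best_f


if __name__ == "__main__":
    # Toy stand-in for an expensive CFD cost: squared distance to (1, 1, 1, 1).
    cost = lambda p: float(np.sum((p - 1.0) ** 2))
    x_best, f_best = masked_metropolis(cost, np.zeros(4), [True, True, False, False])
    print(x_best, f_best)  # the last two entries remain 0.0
```

Here the mask is fixed for the whole run for simplicity, whereas the abstract describes the freezing as temporary; each step then explores only the lower-dimensional space of unfrozen parameters.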

Surrogate Modeling for Explainable Predictive Time Series Corrections

Dec 27, 2024

Abstract: We introduce a local surrogate approach for explainable time-series forecasting. An initially non-interpretable predictive model is used to improve the forecast of a classical time-series 'base model'. 'Explainability' of the correction is provided by fitting the base model again to the data from which the error prediction has been removed (subtracted); the resulting difference in the model parameters can be interpreted. We provide illustrative examples to demonstrate the potential of the method to discover and explain underlying patterns in the data.
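The before/after parameter comparison can be sketched in a few lines. This is a minimal illustration under stated assumptions: a least-squares linear trend is used as the base model for brevity (rather than a classical time-series model such as ARIMA), `correction` is a plain array standing in for the non-interpretable model's error prediction, and `fit_trend` and `parameter_shift` are hypothetical names.

```python
import numpy as np


def fit_trend(y):
    """Hypothetical base model: least-squares linear trend y_t ~ a + b*t; returns [a, b]."""
    t = np.arange(len(y), dtype=float)
    design = np.column_stack([np.ones_like(t), t])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def parameter_shift(y, correction):
    """Refit the base model after subtracting the correction; return the parameter change."""
    before = fit_trend(y)                 # parameters fitted to the raw data
    after = fit_trend(y - correction)     # parameters fitted with the correction removed
    return after - before


if __name__ == "__main__":
    t = np.arange(20, dtype=float)
    y = 1.0 + 2.5 * t                     # observed series
    correction = 0.5 * t                  # stand-in for the black-box error prediction
    print(parameter_shift(y, correction)) # approximately [0.0, -0.5]
```

In this toy case the whole correction is absorbed by the slope, so the explanation reads as "the correction accounts for a slope of 0.5 per time step".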

Predictive change point detection for heterogeneous data

May 11, 2023

Abstract: A change point detection (CPD) framework assisted by a predictive machine learning model, called "Predict and Compare", is introduced and characterised in relation to other state-of-the-art online CPD routines, which it outperforms in terms of false positive rate and out-of-control average run length. The method focuses on improving standard methods from sequential analysis, such as the CUSUM rule, in terms of these quality measures. This is achieved by replacing commonly used trend estimation functionals such as the running mean with more sophisticated predictive models (Predict step) and comparing their forecasts with the actual data (Compare step). The two models used in the Predict step are the ARIMA model and the LSTM recurrent neural network. However, the framework is formulated in general terms, so as to allow the use of prediction or comparison methods other than those tested here. The power of the method is demonstrated in a tribological case study in which change points separating the run-in, steady-state, and divergent wear phases are detected with very few false positives.
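The structure of the Predict and Compare loop can be sketched as a pluggable one-step forecaster whose standardized residuals feed a two-sided CUSUM. This is a minimal sketch under stated assumptions: the moving-average predictor is only a placeholder (it is the running-mean baseline the method is meant to replace), and `predict_and_compare`, `sigma`, the reference value `k` and the threshold `h` are hypothetical names and defaults.

```python
import numpy as np


def moving_average(history):
    """Placeholder predictor; the Predict step described above uses ARIMA or an LSTM."""
    return float(np.mean(history))


def predict_and_compare(x, predictor=moving_average, window=10,
                        sigma=1.0, k=0.5, h=5.0):
    """Two-sided CUSUM on one-step forecast residuals; returns the first alarm index or None."""
    x = np.asarray(x, dtype=float)
    s_pos = s_neg = 0.0
    for t in range(window, len(x)):
        forecast = predictor(x[t - window:t])  # Predict step
        z = (x[t] - forecast) / sigma          # Compare step: standardized residual
        s_pos = max(0.0, s_pos + z - k)        # accumulates upward deviations
        s_neg = max(0.0, s_neg - z - k)        # accumulates downward deviations
        if s_pos > h or s_neg > h:
            return t
    return None


if __name__ == "__main__":
    # Level shift of size 3 at index 50 in an otherwise constant signal.
    x = np.r_[np.zeros(50), 3.0 * np.ones(50)]
    print(predict_and_compare(x))  # 52
```

Swapping `moving_average` for a fitted ARIMA or LSTM forecaster changes only the Predict step, which mirrors the abstract's point that the framework is formulated in general terms.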

Explainable AI by BAPC -- Before and After correction Parameter Comparison

Mar 12, 2021

Abstract: By means of a local surrogate approach, an analytical method for explaining AI predictions in the framework of regression models is defined. When the AI model produces additive corrections to the predictions of a base model, the explanations are delivered in the form of a shift of the base model's interpretable parameters, as long as the AI predictions are small in a rigorously defined sense. Criteria are formulated that give a precise relation between lost accuracy and lacking model fidelity. Two applications show how physical or econometric parameters may be used to interpret the action of neural network and random forest models in the sense of the underlying base model. This is an extended version of our paper presented at the ISM 2020 conference, where we first introduced our new approach BAPC.

Graph-based calibration transfer

May 29, 2020

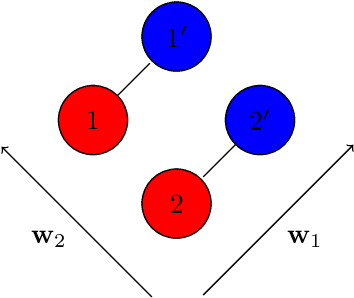

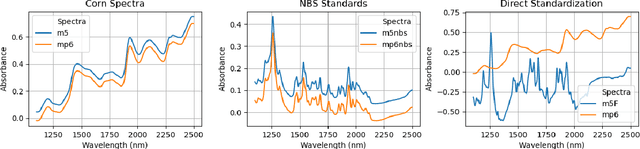

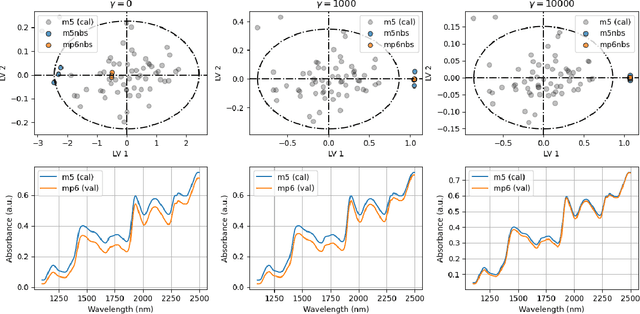

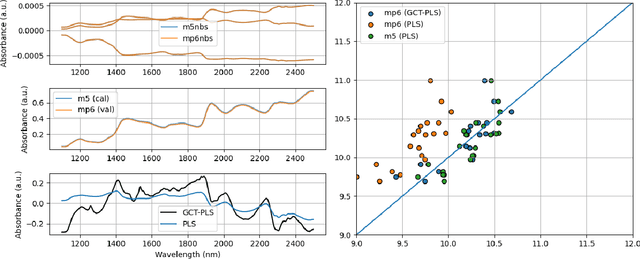

Abstract: The problem of transferring calibrations from a primary to a secondary instrument, i.e. calibration transfer (CT), has been a matter of considerable research in chemometrics over the past decades. Current state-of-the-art (SoA) methods like (piecewise) direct standardization perform well when suitable transfer standards are available. However, stable calibration standards that share similar (spectral) features with the calibration samples are not always available. Towards enabling CT with arbitrary calibration standards, we propose a novel CT technique that employs manifold regularization of the partial least squares (PLS) objective. In particular, our method enforces that calibration standards, measured on primary and secondary instruments, have (nearly) invariant projections in the latent variable space of the primary calibration model. Thereby, our approach implicitly removes inter-device variation in the predictive directions of X, in contrast to most SoA techniques, which employ explicit pre-processing of the input data. We test our approach on the well-known corn benchmark data set, employing the NBS glass standard spectra for instrument standardization, and compare the results with current SoA methods.
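To make the invariance constraint concrete, the sketch below computes a single PLS-like weight vector from a penalized objective. If each standard measured on the primary instrument is joined by a graph edge to its measurement on the secondary instrument, the graph-Laplacian (manifold) penalty on the latent scores reduces to the squared norm of the score differences, ||Dw||^2 with D = S_primary - S_secondary. The single-component eigenvector formulation, the penalty weight `lam` and the name `invariant_pls_weight` are our assumptions for illustration, not the algorithm of the paper.

```python
import numpy as np


def invariant_pls_weight(X, y, S_primary, S_secondary, lam=1.0):
    """Unit w maximising (w^T X^T y)^2 - lam * ||(S_primary - S_secondary) w||^2.

    X, y: calibration spectra and reference values from the primary instrument.
    S_primary, S_secondary: the same transfer standards measured on both
    instruments (row-aligned). Hypothetical single-component simplification.
    """
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    c = Xc.T @ yc                          # unpenalised first PLS direction (up to scale)
    D = S_primary - S_secondary            # inter-device differences of the standards
    M = np.outer(c, c) - lam * (D.T @ D)   # symmetric matrix of the penalised objective
    _, eigvecs = np.linalg.eigh(M)         # eigenvalues in ascending order
    return eigvecs[:, -1]                  # unit vector maximising w^T M w


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(30, 8))
    y = X @ rng.normal(size=8)
    S_p = rng.normal(size=(5, 8))
    S_s = S_p + 0.5 * rng.normal(size=(5, 8))  # simulated instrument shift
    for lam in (0.0, 10.0, 1000.0):
        w = invariant_pls_weight(X, y, S_p, S_s, lam)
        print(lam, np.linalg.norm((S_p - S_s) @ w))  # score mismatch on the standards
```

With `lam = 0` the result is the ordinary first PLS weight; as `lam` grows, the score mismatch on the standards is non-increasing, trading some covariance with y for invariance across instruments.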
