Florence Sèdes

IRIT

Are Large Language Models the New Interface for Data Pipelines?

Jun 06, 2024

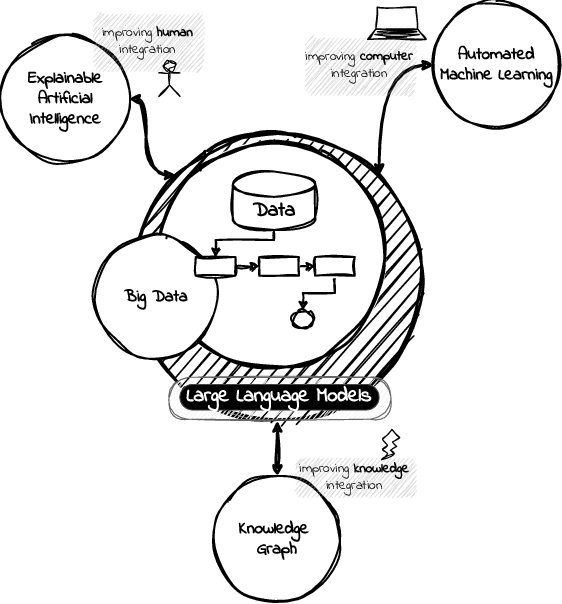

Abstract: A Language Model is a term that encompasses various types of models designed to understand and generate human communication. Large Language Models (LLMs) have gained significant attention due to their ability to process text with human-like fluency and coherence, making them valuable for a wide range of data-related tasks fashioned as pipelines. The capabilities of LLMs in natural language understanding and generation, combined with their scalability, versatility, and state-of-the-art performance, enable innovative applications across various AI-related fields, including eXplainable Artificial Intelligence (XAI), Automated Machine Learning (AutoML), and Knowledge Graphs (KG). Furthermore, we believe these models can extract valuable insights and make data-driven decisions at scale, a practice commonly referred to as Big Data Analytics (BDA). In this position paper, we provide some discussions in the direction of unlocking synergies among these technologies, which can lead to more powerful and intelligent AI solutions, driving improvements in data pipelines across a wide range of applications and domains integrating humans, computers, and knowledge.

An interdisciplinary conceptual study of Artificial Intelligence (AI) for helping benefit-risk assessment practices: Towards a comprehensive qualification matrix of AI programs and devices (pre-print 2020)

May 07, 2021

Abstract: This paper proposes a comprehensive analysis of existing concepts coming from different disciplines tackling the notion of intelligence, namely psychology and engineering, and from disciplines aiming to regulate AI innovations, namely AI ethics and law. The aim is to identify shared notions or discrepancies to consider for qualifying AI systems. Relevant concepts are integrated into a matrix intended to help define more precisely when and how computing tools (programs or devices) may be qualified as AI while highlighting critical features to serve a specific technical, ethical and legal assessment of challenges in AI development. Some adaptations of existing notions of AI characteristics are proposed. The matrix is a risk-based conceptual model designed to allow an empirical, flexible and scalable qualification of AI technologies in the perspective of benefit-risk assessment practices, technological monitoring and regulatory compliance: it offers a structured reflection tool for stakeholders in AI development that are engaged in responsible research and innovation. Pre-print version (achieved on May 2020)
