Florence Le Ber

ICube

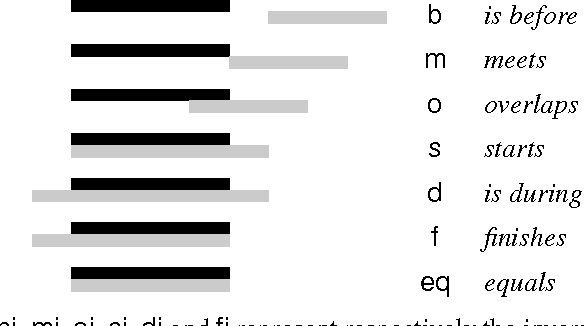

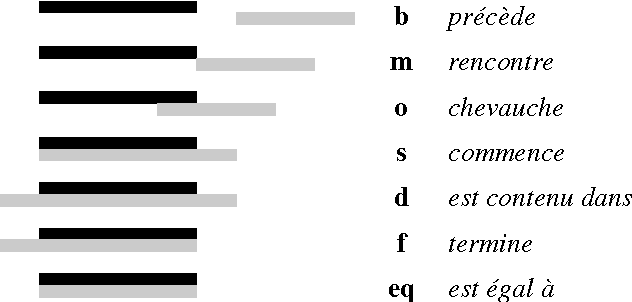

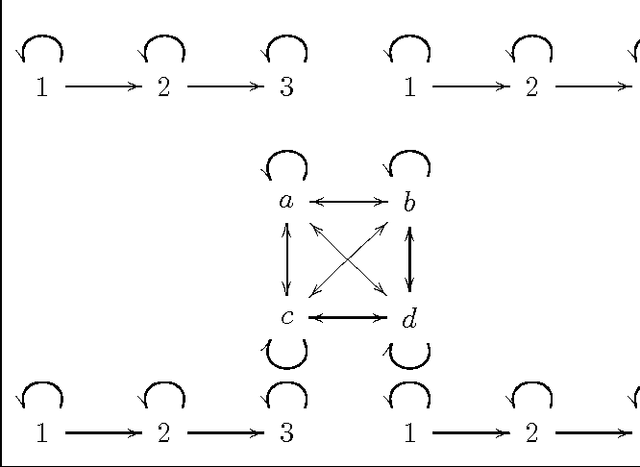

Belief revision in the propositional closure of a qualitative algebra

Dec 12, 2014

Abstract: Belief revision is an operation that aims at modifying old beliefs so that they become consistent with new ones. The issue of belief revision has been studied in various formalisms, in particular, in qualitative algebras (QAs) in which the result is a disjunction of belief bases that is not necessarily representable in a QA. This motivates the study of belief revision in formalisms extending QAs, namely, their propositional closures: in such a closure, the result of belief revision belongs to the formalism. Moreover, this makes it possible to define a contraction operator thanks to the Harper identity. Belief revision in the propositional closure of QAs is studied, an algorithm for a family of revision operators is designed, and an open-source implementation is made freely available on the web.

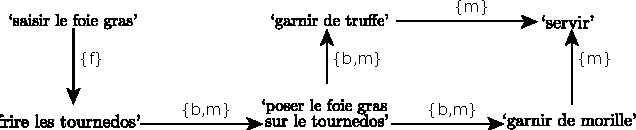

Case Adaptation with Qualitative Algebras

Oct 10, 2013

Abstract: This paper proposes an approach for the adaptation of spatial or temporal cases in a case-based reasoning system. Qualitative algebras are used as spatial and temporal knowledge representation languages. The intuition behind this adaptation approach is to apply a substitution and then repair potential inconsistencies, thanks to belief revision on qualitative algebras. A temporal example from the cooking domain is given. (The paper on which this extended abstract is based was the recipient of the best paper award of the 2012 International Conference on Case-Based Reasoning.)

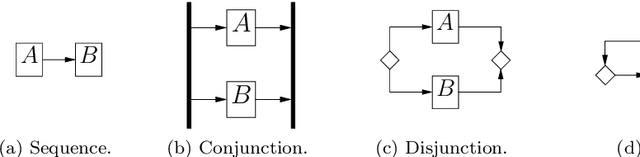

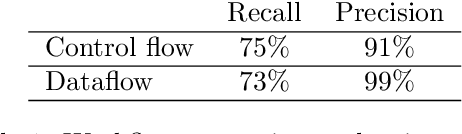

Automatic case acquisition from texts for process-oriented case-based reasoning

Apr 14, 2013

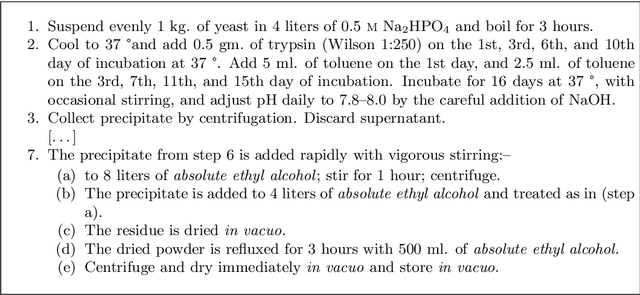

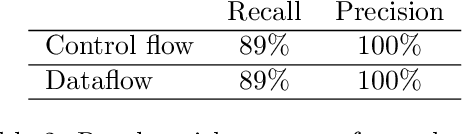

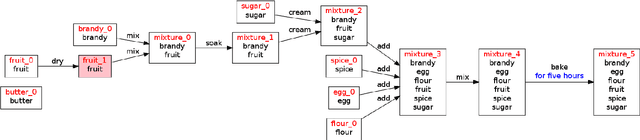

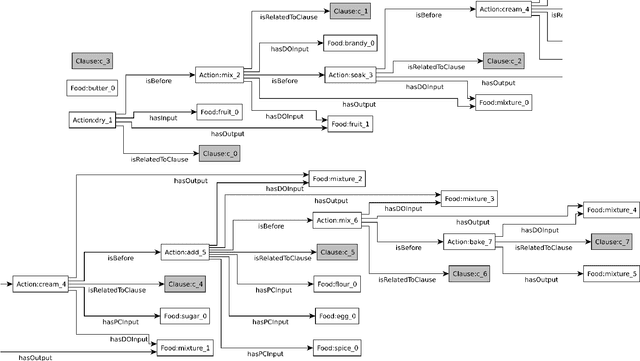

Abstract: This paper introduces a method for the automatic acquisition of a rich case representation from free text for process-oriented case-based reasoning. Case engineering is among the most complicated and costly tasks in implementing a case-based reasoning system. This is especially so for process-oriented case-based reasoning, where more expressive case representations are generally used and, in our opinion, actually required for satisfactory case adaptation. In this context, the ability to acquire cases automatically from procedural texts is a major step forward in order to reason on processes. We therefore detail a methodology that makes case acquisition from processes described as free text possible, with special attention given to assembly instruction texts. This methodology extends the techniques we used to extract actions from cooking recipes. We argue that techniques taken from natural language processing are required for this task, and that they give satisfactory results. An evaluation based on our implemented prototype extracting workflows from recipe texts is provided.

* In press, publication planned for 2013

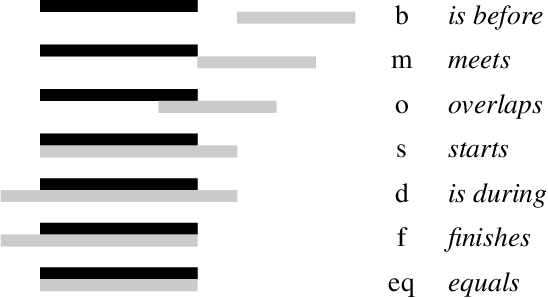

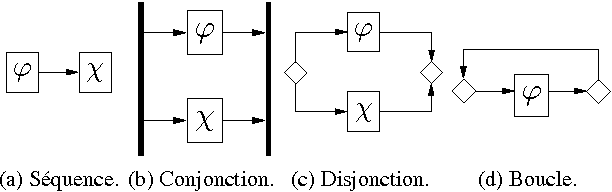

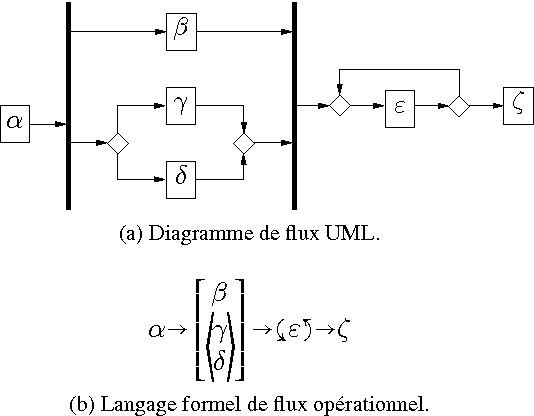

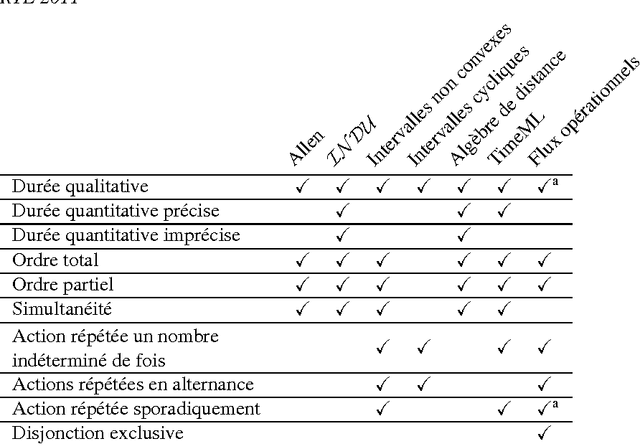

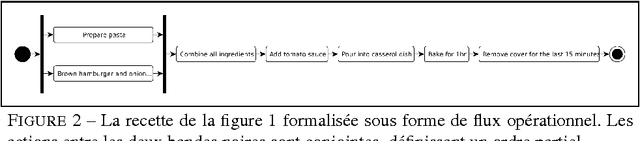

Extension du formalisme des flux opérationnels par une algèbre temporelle

Sep 25, 2012

Abstract: Workflows constitute an important language to represent knowledge about processes, but also increasingly to reason on such knowledge. However, there is a limit to the time constraints between activities that workflows can express. Qualitative interval algebras can model processes using finer temporal relations, but they cannot reproduce all workflow patterns. This paper defines a common ground model-theoretical semantics for both workflows and interval algebras, making it possible for reasoning systems working with either to interoperate. Thanks to this, interesting properties and inferences can be defined, both on workflows and on an extended formalism combining workflows with interval algebras. Finally, similar formalisms proposing a sound formal basis for workflows and extending them are discussed.

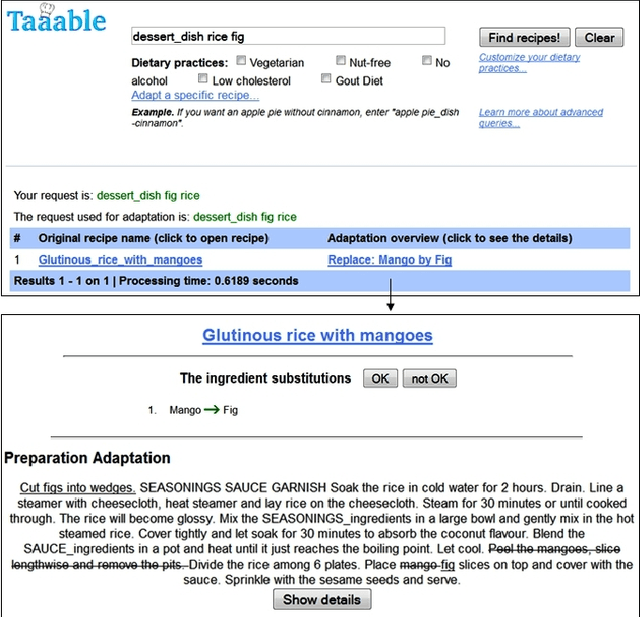

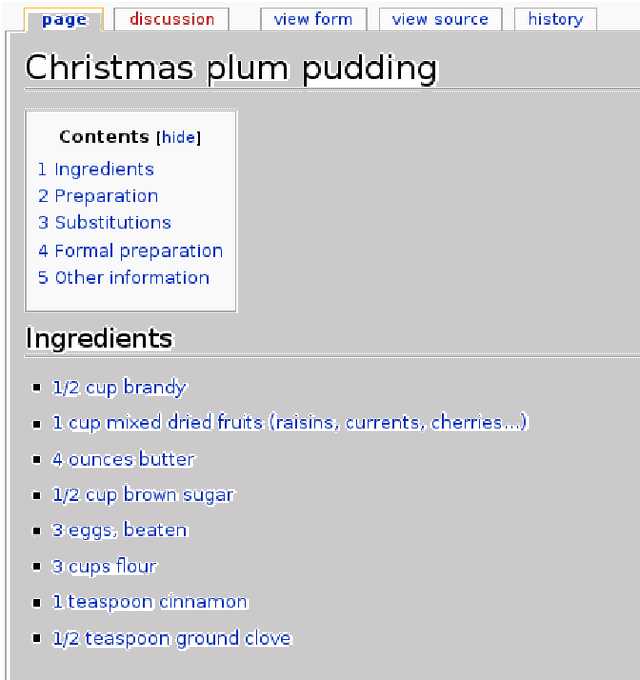

Semi-automatic annotation process for procedural texts: An application on cooking recipes

Sep 25, 2012

Abstract: Taaable is a case-based reasoning system that adapts cooking recipes to user constraints. Within it, the preparation part of recipes is formalised as a graph. This graph is a semantic representation of the sequence of instructions composing the cooking process and is used to compute the procedure adaptation, conjointly with the textual adaptation. It is composed of cooking actions and ingredients, among others, represented as vertices, and semantic relations between those, shown as arcs, and is built automatically thanks to natural language processing. The result of the automatic annotation process is often a disconnected graph, representing an incomplete annotation, or may contain errors. Therefore, a validating and correcting step is required. In this paper, we present an existing graphic tool named Kcatos, conceived for representing and editing decision trees, and show how it has been adapted and integrated in WikiTaaable, the semantic wiki in which the knowledge used by Taaable is stored. This interface provides the wiki users with a way to correct the case representation of the cooking process, improving at the same time the quality of the knowledge about cooking procedures stored in WikiTaaable.

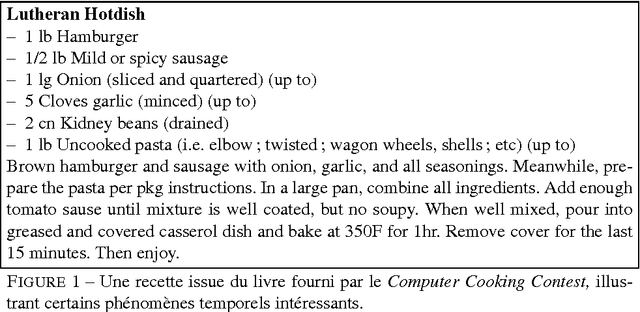

Quels formalismes temporels pour représenter des connaissances extraites de textes de recettes de cuisine ?

Oct 24, 2011

Abstract: The goal of the Taaable project is to create a case-based reasoning system for the retrieval and adaptation of cooking recipes. Within this framework, we discuss the temporal aspects of recipes and the means of representing them in order to adapt their text.

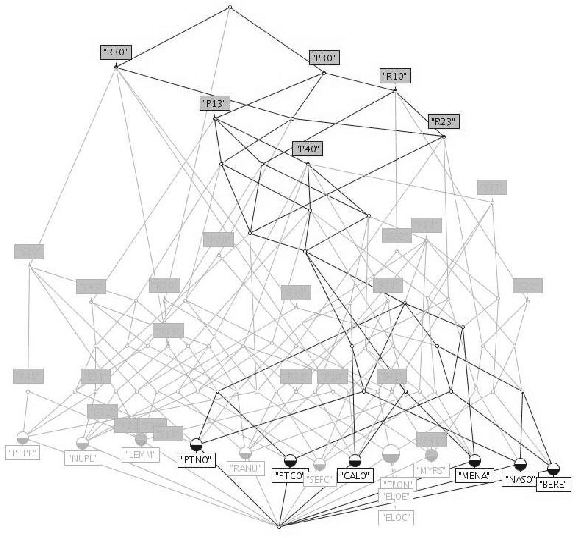

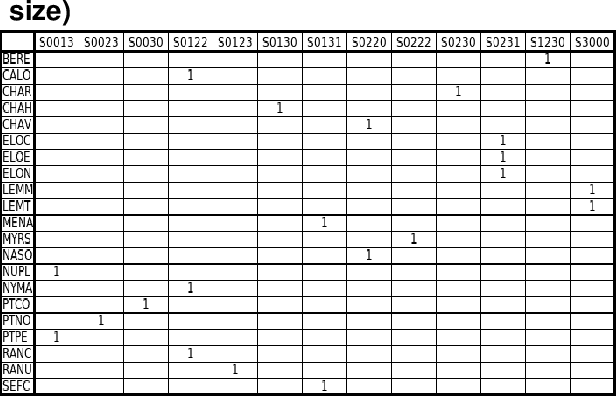

Mining Complex Hydrobiological Data with Galois Lattices

Nov 06, 2008

Abstract: We have used Galois lattices for mining hydrobiological data. These data are about macrophytes, which are macroscopic plants living in water bodies. These plants are characterized by several biological traits, each of which has several modalities. Our aim is to cluster the plants according to their common traits and modalities and to find out the relations between traits. Galois lattices are efficient methods for such an aim, but they apply to binary data. In this article, we detail a few approaches we used to transform complex hydrobiological data into binary data and compare the first results obtained thanks to Galois lattices.
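
As an illustration of the idea in this abstract, the following minimal sketch binarizes multi-valued traits and then enumerates the formal concepts (the nodes of a Galois lattice) of the resulting binary context. The plant names, traits, modalities, and the one-attribute-per-(trait, modality) encoding are hypothetical choices made for the example; the abstract does not specify which transformations the authors used.

```python
def binarize(table):
    """Turn {object: {trait: set of modalities}} into {object: set of 'trait=modality'}.

    Assumption: one binary attribute per (trait, modality) pair.
    """
    return {
        obj: frozenset(f"{trait}={m}" for trait, ms in traits.items() for m in ms)
        for obj, traits in table.items()
    }


def concepts(context):
    """Enumerate all formal concepts (extent, intent) of a binary context.

    Intents are exactly the intersections of object rows, plus the full
    attribute set (the intersection of no rows at all).
    """
    all_attrs = frozenset().union(*context.values())
    intents = {all_attrs}
    for row in context.values():
        intents |= {row & intent for intent in intents}
    result = []
    for intent in intents:
        extent = frozenset(o for o, row in context.items() if intent <= row)
        result.append((extent, intent))
    # Order from the smallest extent (bottom of the lattice) to the largest.
    return sorted(result, key=lambda c: (len(c[0]), sorted(c[0])))


# Hypothetical macrophyte data, invented for this example.
plants = {
    "plant_a": {"size": {"small"}, "leaf": {"floating"}},
    "plant_b": {"size": {"small", "medium"}, "leaf": {"submerged"}},
    "plant_c": {"size": {"medium"}, "leaf": {"submerged"}},
}

lattice = concepts(binarize(plants))
for extent, intent in lattice:
    print(sorted(extent), sorted(intent))
```

Each printed pair groups the plants that share a set of modalities, which is the kind of clustering the abstract describes. The enumeration is exponential in the worst case and is only meant for small contexts.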

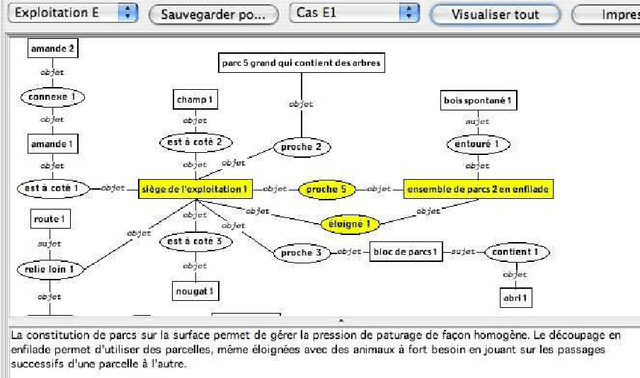

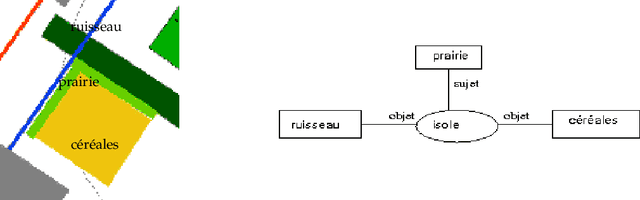

Étude longitudinale d'une procédure de modélisation de connaissances en matière de gestion du territoire agricole

Nov 06, 2008

Abstract: This paper gives an introduction to this issue, and presents the framework and the main steps of the Rosa project. Four teams of researchers (agronomists, computer scientists, psychologists and linguists) were involved for five years in this project, which aimed at the development of a knowledge-based system. The purpose of the Rosa system is the modelling and the comparison of farm spatial organizations. It relies on a formalization of agronomical knowledge and thus induces a joint knowledge building process involving both the agronomists and the computer scientists. The paper describes the steps of the modelling process as well as the filming procedures set up by the psychologists and linguists in order to make explicit and to analyze the underlying knowledge building process.

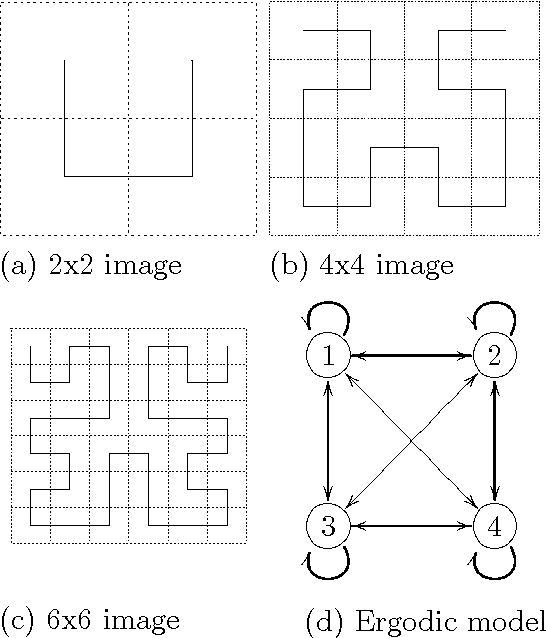

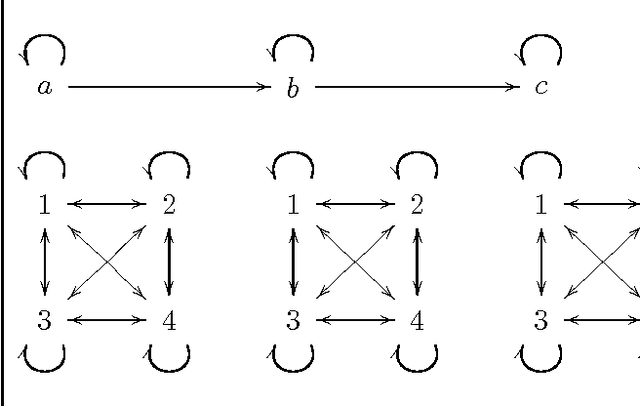

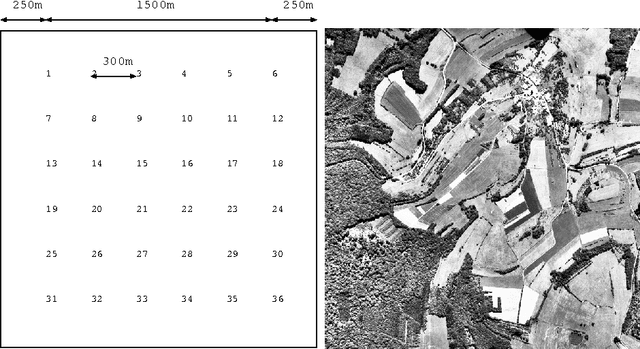

Temporal and Spatial Data Mining with Second-Order Hidden Models

May 09, 2005

Abstract: As part of designing a knowledge discovery system, we have developed stochastic models based on high-order hidden Markov models. These models are capable of mapping sequences of data into a Markov chain in which the transitions between the states depend on the n previous states, according to the order of the model. We study the process of achieving information extraction from spatial and temporal data by means of an unsupervised classification. We therefore use a French national database related to the land use of a region, named Teruti, which describes the land use both in the spatial and temporal domain. Land-use categories (wheat, corn, forest, ...) are logged every year on each site regularly spaced in the region. They constitute a temporal sequence of images in which we look for spatial and temporal dependencies. The temporal segmentation of the data is done by means of a second-order hidden Markov model (HMM2) that appears to have very good capabilities to locate stationary segments, as shown in our previous work in speech recognition. The spatial classification is performed by defining a fractal scanning of the images with the help of a Hilbert-Peano curve that introduces a total order on the sites, preserving the relation of neighborhood between the sites. We show that the HMM2 performs a classification that is meaningful for the agronomists. Spatial and temporal classification may be achieved simultaneously by means of a two-level HMM2 that measures the a posteriori probability to map a temporal sequence of images onto a set of hidden classes.
