Fernando Silva

Synthetic Time Series Generation via Complex Networks

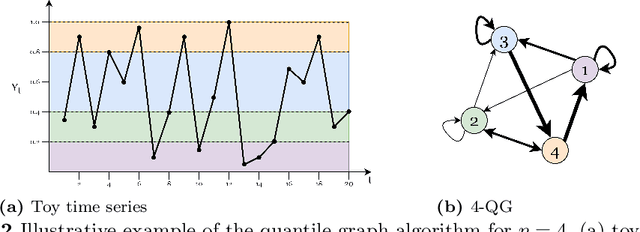

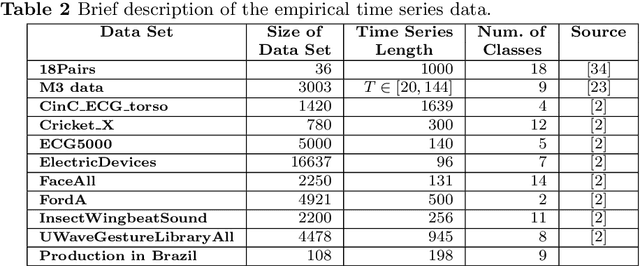

Jan 30, 2026

Abstract: Time series data are essential for a wide range of applications, particularly in developing robust machine learning models. However, access to high-quality datasets is often limited due to privacy concerns, acquisition costs, and labeling challenges. Synthetic time series generation has emerged as a promising solution to address these constraints. In this work, we present a framework for generating synthetic time series by leveraging complex network mappings. Specifically, we investigate whether time series transformed into Quantile Graphs (QG) -- and then reconstructed via inverse mapping -- can produce synthetic data that preserve the statistical and structural properties of the original. We evaluate the fidelity and utility of the generated data using both simulated and real-world datasets, and compare our approach against state-of-the-art Generative Adversarial Network (GAN) methods. Results indicate that our quantile graph-based methodology offers a competitive and interpretable alternative for synthetic time series generation.
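The abstract does not spell out the mapping or its inverse, so the following is only a minimal sketch under stated assumptions: nodes are sample quantile bins, edge weights count transitions between consecutive observations, and the "inverse mapping" is approximated by a weighted random walk that resamples an original value from each visited bin. The function names `quantile_graph` and `sample_series` are hypothetical and not taken from the paper.

```python
import bisect
import math
import random
import statistics


def quantile_graph(series, n_quantiles):
    """Return (counts, labels): transition counts between quantile bins
    and the bin index assigned to each observation."""
    # statistics.quantiles returns the n_quantiles - 1 interior cut points.
    cuts = statistics.quantiles(series, n=n_quantiles, method="inclusive")
    labels = [bisect.bisect_right(cuts, x) for x in series]  # 0 .. n_quantiles - 1
    counts = [[0] * n_quantiles for _ in range(n_quantiles)]
    for a, b in zip(labels, labels[1:]):
        counts[a][b] += 1  # edge weight = number of observed a -> b transitions
    return counts, labels


def sample_series(series, counts, labels, length, rng):
    """Assumed inverse mapping: random walk on the graph, emitting a value
    resampled from the original observations of the current bin."""
    members = {}
    for x, q in zip(series, labels):
        members.setdefault(q, []).append(x)
    occupied = sorted(members)
    state = labels[0]
    out = []
    for _ in range(length):
        out.append(rng.choice(members[state]))
        row = counts[state]
        if sum(row) > 0:
            state = rng.choices(range(len(row)), weights=row)[0]
        else:
            # Dead end (bin seen only at the final time step): restart the walk.
            state = rng.choice(occupied)
    return out


# Usage on a noisy sine wave (illustrative data, not from the paper).
rng = random.Random(0)
original = [math.sin(0.1 * t) + rng.gauss(0, 0.1) for t in range(500)]
counts, labels = quantile_graph(original, n_quantiles=10)
synthetic = sample_series(original, counts, labels, length=300, rng=rng)
```

Because every emitted value is drawn from the original observations, this sketch preserves the marginal range by construction; how well it preserves temporal structure depends on the number of quantiles chosen.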

Reconstructing Spatiotemporal Data with C-VAEs

Jul 12, 2023

Abstract: The continuous representation of spatiotemporal data commonly relies on using abstract data types, such as *moving regions*, to represent entities whose shape and position continuously change over time. Creating this representation from discrete snapshots of real-world entities requires using interpolation methods to compute in-between data representations and estimate the position and shape of the object of interest at arbitrary temporal points. Existing region interpolation methods often fail to generate smooth and realistic representations of a region's evolution. However, recent advancements in deep learning techniques have revealed the potential of deep models trained on discrete observations to capture spatiotemporal dependencies through implicit feature learning. In this work, we explore the capabilities of Conditional Variational Autoencoder (C-VAE) models to generate smooth and realistic representations of the spatiotemporal evolution of moving regions. We evaluate our proposed approach on a sparsely annotated dataset on the burnt area of a forest fire. We apply compression operations to sample from the dataset and use the C-VAE model and other commonly used interpolation algorithms to generate in-between region representations. To evaluate the performance of the methods, we compare their interpolation results with manually annotated data and regions generated by a U-Net model. We also assess the quality of generated data considering temporal consistency metrics. The proposed C-VAE-based approach demonstrates competitive results in geometric similarity metrics. It also exhibits superior temporal consistency, suggesting that C-VAE models may be a viable alternative for modelling the spatiotemporal evolution of 2D moving regions.

Novel Features for Time Series Analysis: A Complex Networks Approach

Oct 11, 2021

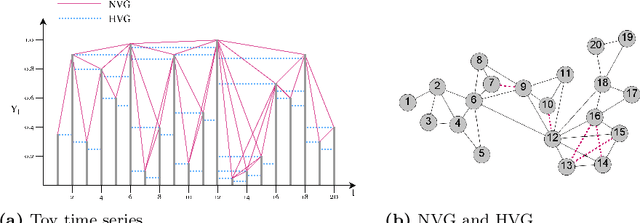

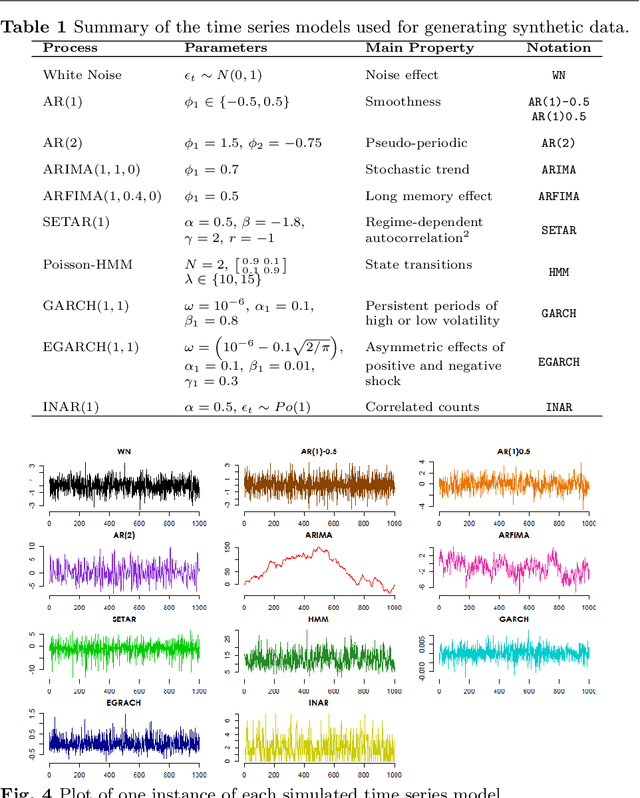

Abstract: Time series data are ubiquitous in several domains, such as climate, economics and health care. Mining features from these time series is a crucial task with a multidisciplinary impact. Usually, these features are obtained from structural characteristics of time series, such as trend, seasonality and autocorrelation, sometimes requiring data transformations and parametric models. A recent conceptual approach relies on time series mapping to complex networks, where the network science methodologies can help characterize time series. In this paper, we consider two mapping concepts, visibility and transition probability, and propose network topological measures as a new set of time series features. To evaluate the usefulness of the proposed features, we address the problem of time series clustering. More specifically, we propose a clustering method that consists of mapping the time series into visibility graphs and quantile graphs, calculating global topological metrics of the resulting networks, and using data mining techniques to form clusters. We apply this method to a data set of synthetic and empirical time series. The results indicate that network-based features capture the information encoded in each of the time series models, resulting in high accuracy in a clustering task. Our results are promising and show that network analysis can be used to characterize different types of time series and that different mapping methods capture different characteristics of the time series.
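As a rough illustration of the visibility mapping mentioned above, the sketch below builds a natural visibility graph with the standard criterion (two observations are linked if every observation between them lies strictly below the straight line joining them) and computes a few global measures. The abstract does not list which topological metrics the paper uses, so the ones in `global_features` are assumptions, and both function names are hypothetical.

```python
def natural_visibility_graph(series):
    """Return the undirected edge set {(a, b), a < b} of the natural
    visibility graph, using a simple O(n^3) worst-case check."""
    n = len(series)
    edges = set()
    for a in range(n - 1):
        for b in range(a + 1, n):
            # Each intermediate point c must lie strictly below the line a-b.
            visible = all(
                series[c] < series[b] + (series[a] - series[b]) * (b - c) / (b - a)
                for c in range(a + 1, b)
            )
            if visible:
                edges.add((a, b))
    return edges


def global_features(n_nodes, edges):
    """Illustrative global measures (assumed, not the paper's feature set)."""
    degree = [0] * n_nodes
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    return {
        "average_degree": sum(degree) / n_nodes,
        "max_degree": max(degree),
        "density": 2 * len(edges) / (n_nodes * (n_nodes - 1)),
    }


# Usage on a toy series; one feature vector like this per series could
# then be passed to any clustering routine.
toy = [3, 1, 2, 1, 4]
toy_edges = natural_visibility_graph(toy)
toy_features = global_features(len(toy), toy_edges)
```

Consecutive observations are always connected, since there is no intermediate point to block the view, so the resulting graph is connected for any series.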

Time Series Analysis via Network Science: Concepts and Algorithms

Oct 11, 2021

Abstract: There is nowadays a constant flux of data being generated and collected in all types of real world systems. These data sets are often indexed by time, space, or both, requiring appropriate approaches to analyze the data. In univariate settings, time series analysis is a mature and solid field. However, in multivariate contexts, time series analysis still presents many limitations. In order to address these issues, the last decade has brought approaches based on network science. These methods involve transforming an initial time series data set into one or more networks, which can be analyzed in depth to provide insight into the original time series. This review provides a comprehensive overview of existing mapping methods for transforming time series into networks for a wide audience of researchers and practitioners in machine learning, data mining and time series. Our main contribution is a structured review of existing methodologies, identifying their main characteristics and their differences. We describe the main conceptual approaches, provide authoritative references and give insight into their advantages and limitations in a unified notation and language. We first describe the case of univariate time series, which can be mapped to single layer networks, and we divide the current mappings based on the underlying concept: visibility, transition and proximity. We then proceed with multivariate time series discussing both single layer and multiple layer approaches. Although still very recent, this research area has much potential and with this survey we intend to pave the way for future research on the topic.

GoT-WAVE: Temporal network alignment using graphlet-orbit transitions

Aug 24, 2018

Abstract: Global pairwise network alignment (GPNA) aims to find a one-to-one node mapping between two networks that identifies conserved network regions. GPNA algorithms optimize node conservation (NC) and edge conservation (EC). NC quantifies topological similarity between nodes. Graphlet-based degree vectors (GDVs) are a state-of-the-art topological NC measure. Dynamic GDVs (DGDVs) were used as a dynamic NC measure within the first-ever algorithms for GPNA of temporal networks: DynaMAGNA++ and DynaWAVE. The latter is superior for larger networks. We recently developed a different graphlet-based measure of temporal node similarity, graphlet-orbit transitions (GoTs). Here, we use GoTs instead of DGDVs as a new dynamic NC measure within DynaWAVE, resulting in a new approach, GoT-WAVE. On synthetic networks, GoT-WAVE improves DynaWAVE's accuracy by 25% and speed by 64%. On real networks, when optimizing only dynamic NC, each method is superior ~50% of the time. While DynaWAVE benefits more from also optimizing dynamic EC, only GoT-WAVE can support directed edges. Hence, GoT-WAVE is a promising new temporal GPNA algorithm, which efficiently optimizes dynamic NC. Future work on better incorporating dynamic EC may yield further improvements.

Evolution of Collective Behaviors for a Real Swarm of Aquatic Surface Robots

Feb 02, 2016

Abstract: Swarm robotics is a promising approach for the coordination of large numbers of robots. While previous studies have shown that evolutionary robotics techniques can be applied to obtain robust and efficient self-organized behaviors for robot swarms, most studies have been conducted in simulation, and the few that have been conducted on real robots have been confined to laboratory environments. In this paper, we demonstrate for the first time a swarm robotics system with evolved control successfully operating in a real and uncontrolled environment. We evolve neural network-based controllers in simulation for canonical swarm robotics tasks, namely homing, dispersion, clustering, and monitoring. We then assess the performance of the controllers on a real swarm of up to ten aquatic surface robots. Our results show that the evolved controllers transfer successfully to real robots and achieve a performance similar to the performance obtained in simulation. We validate that the evolved controllers display key properties of swarm intelligence-based control, namely scalability, flexibility, and robustness on the real swarm. We conclude with a proof-of-concept experiment in which the swarm performs a complete environmental monitoring task by combining multiple evolved controllers.

* 31 pages, 15 figures, journal
