Fernando Moreu

Department of Civil, Construction and Environmental Engineering, University of New Mexico, New Mexico

Methodology to Deploy CNN-Based Computer Vision Models on Immersive Wearable Devices

Jun 28, 2024

Abstract: Convolutional Neural Network (CNN) models often lack the ability to incorporate human input, a gap that Augmented Reality (AR) headsets can help address. However, current AR headsets have limited processing power, which has prevented researchers from performing complex, real-time image recognition with CNNs on these devices. This paper presents a method to deploy CNN models on AR headsets by training them on computers and transferring the optimized weight matrices to the headset. The approach transforms the image data and CNN layers into a one-dimensional format suitable for the AR platform. We demonstrate this method by training the LeNet-5 CNN model on the MNIST dataset using PyTorch and deploying it on a HoloLens AR headset. The results show that the model maintains an accuracy of approximately 98%, similar to its performance on a computer. This integration of CNN and AR enables real-time image processing on AR headsets, allowing human input to be incorporated into AI models.
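The abstract says the image data and CNN layers are converted into a one-dimensional format for the headset. Below is a minimal sketch of that idea, not the authors' code. It assumes single-channel, row-major flattened arrays and shows two pieces evaluated directly on 1D buffers by index arithmetic: a valid (no padding, stride 1) 2D cross-correlation, which is what PyTorch's `Conv2d` computes, and a fully connected layer. The function names `conv2d_flat` and `dense_flat` are hypothetical.

```python
def conv2d_flat(image, height, width, kernel, k, bias=0.0):
    """Valid, stride-1 cross-correlation of a row-major flattened single-channel
    image (height x width) with a row-major flattened k x k kernel.
    Returns the flattened output and its (height, width)."""
    out_h = height - k + 1
    out_w = width - k + 1
    out = [0.0] * (out_h * out_w)
    for oy in range(out_h):
        for ox in range(out_w):
            acc = bias
            for ky in range(k):
                for kx in range(k):
                    # A 2D index (row, col) maps to the 1D index row * width + col
                    acc += image[(oy + ky) * width + (ox + kx)] * kernel[ky * k + kx]
            out[oy * out_w + ox] = acc
    return out, out_h, out_w


def dense_flat(x, weights, bias):
    """Fully connected layer y = W x + b, with W stored row-major as a
    flat list of len(bias) * len(x) values (one row per output unit)."""
    n_in = len(x)
    return [
        bias[o] + sum(weights[o * n_in + i] * x[i] for i in range(n_in))
        for o in range(len(bias))
    ]
```

For example, `conv2d_flat(list(range(1, 10)), 3, 3, [1, 0, 0, 1], 2)` returns `([6.0, 8.0, 12.0, 14.0], 2, 2)`. Under this assumed row-major layout, weight matrices exported from a trained model could be copied into flat arrays once, so no reshaping is needed at inference time on the headset.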

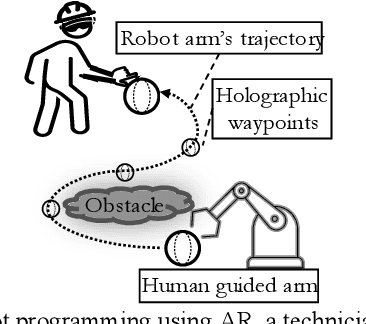

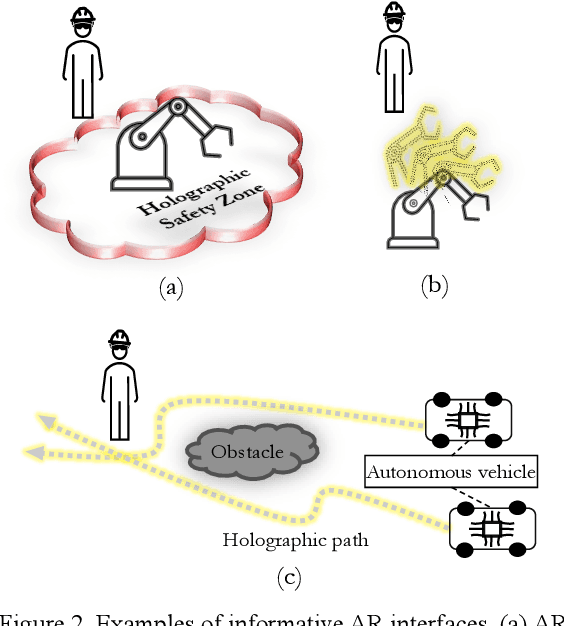

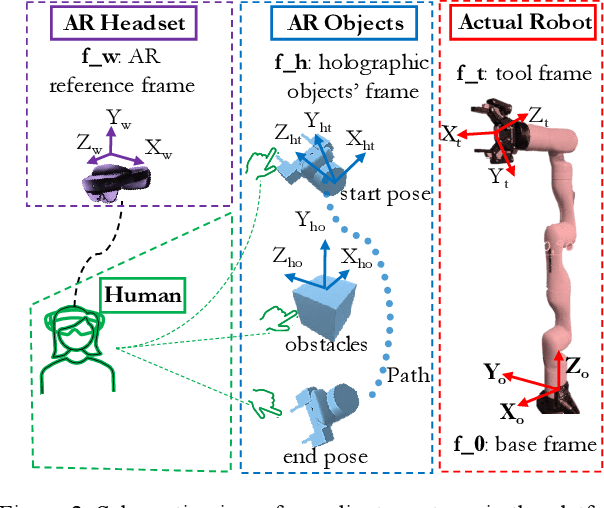

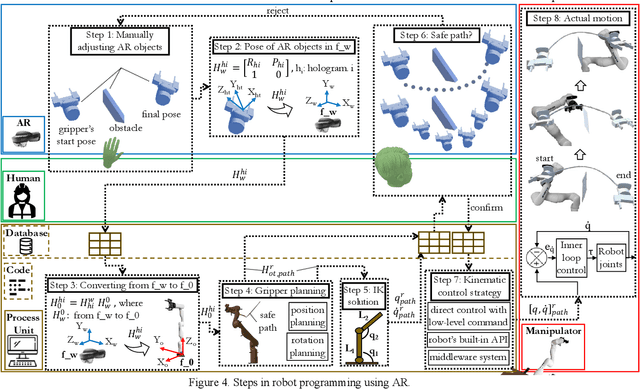

Immersive Robot Programming Interface for Human-Guided Automation and Randomized Path Planning

Jun 04, 2024

Abstract: Researchers are exploring Augmented Reality (AR) interfaces for online robot programming to streamline automation and user interaction in variable manufacturing environments. This study introduces an AR interface for online programming and data visualization that integrates the human into randomized robot path planning, using human intervention to reduce the inherent randomness of these methods. The interface uses holographic items that correspond to physical elements to interact with a redundant manipulator. Using the Rapidly-exploring Random Tree Star (RRT*) and Spherical Linear Interpolation (SLERP) algorithms, the interface moves the end-effector along a collision-free path with smooth rotation. Sequential Quadratic Programming (SQP) then computes the robot's configurations along this path. The platform executes the RRT* algorithm in a loop, with each iteration independently exploring the shortest path through random sampling, which produces variations among the optimized paths. These paths are then shown to AR users, who select the most appropriate path based on the environmental context and their intuition. The accuracy and effectiveness of the interface are validated through implementation and testing with a seven-Degree-of-Freedom (DOF) manipulator, indicating its potential to advance current practices in robot programming. The validation includes two implementations demonstrating the value of human-in-the-loop operation and context awareness in robotics.
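The abstract pairs RRT* for collision-free positions with SLERP for smooth end-effector rotation. As a minimal sketch, the following is the standard quaternion SLERP formula, assuming unit quaternions stored in (w, x, y, z) order. The function name and how it would be sampled along an RRT* path are assumptions, not the authors' implementation.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions q0 and q1,
    each a (w, x, y, z) tuple, for t in [0, 1]."""
    dot = sum(a * b for a, b in zip(q0, q1))
    # q and -q represent the same rotation; flip q1 to take the shorter arc
    if dot < 0.0:
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: normalized linear interpolation avoids
        # dividing by a sine that is close to zero
        q = tuple(a + t * (b - a) for a, b in zip(q0, q1))
        norm = math.sqrt(sum(c * c for c in q))
        return tuple(c / norm for c in q)
    theta = math.acos(dot)  # angle between the two quaternions
    sin_theta = math.sin(theta)
    w0 = math.sin((1.0 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
```

Halfway between the identity and a 90° rotation about z, `slerp` returns the 45° rotation about z, (cos 22.5°, 0, 0, sin 22.5°). To orient the end-effector over N ≥ 2 path waypoints (an assumed way of combining the two algorithms), one could evaluate `slerp(q_start, q_goal, i / (N - 1))` for each waypoint index i from 0 to N - 1, which gives constant angular velocity between the start and goal orientations.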

Feedback and Control of Dynamics and Robotics using Augmented Reality

Mar 23, 2023

Abstract: Human-machine interaction (HMI) and human-robot interaction (HRI) can assist structural monitoring and structural dynamics testing in the laboratory and field. In vibration experiments, one way to generate vibration is to use electrodynamic exciters. Operators commonly set the exciter input manually. To measure the structural responses to these generated vibrations, sensors are attached to the structure. These sensors can be deployed by repeatable, high-endurance robots, which require on-the-fly control. If the interface between operators and the controls were augmented, operators could visualize the experiments and exciter levels, and define robot input with better awareness of the area of interest. Robots can better aid humans if intelligent on-the-fly control of the robot is (1) quantified and presented to the human and (2) conducted in real time for human feedback informed by data. Operators would use the information provided by the new interface to change the control input based on their understanding of real-time parameters. This research proposes using Augmented Reality (AR) applications to provide humans with sensor feedback and control of actuators and robots. This method improves cognition by allowing the operator to maintain awareness of structures while adjusting conditions with the assistance of the new real-time interface. One interface application plots sensor data and provides voltage, frequency, and duration controls for vibration generation. Two more applications are developed under a similar framework: one to control the position of a mediating robot and one to control the frequency of the robot's movement. This paper presents the proposed model for the new control loop and then compares the new approach with a traditional method by measuring time delay in control input and user efficiency.

Measuring Total Transverse Reference-free Displacements of Railroad Bridges using 2 Degrees of Freedom (2DOF): Experimental Validation

Oct 17, 2021

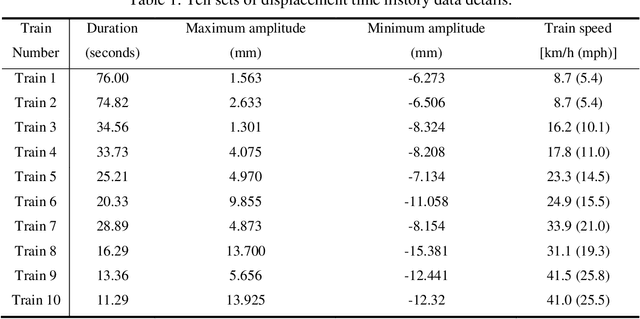

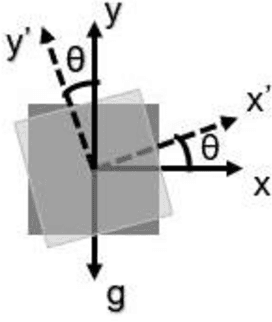

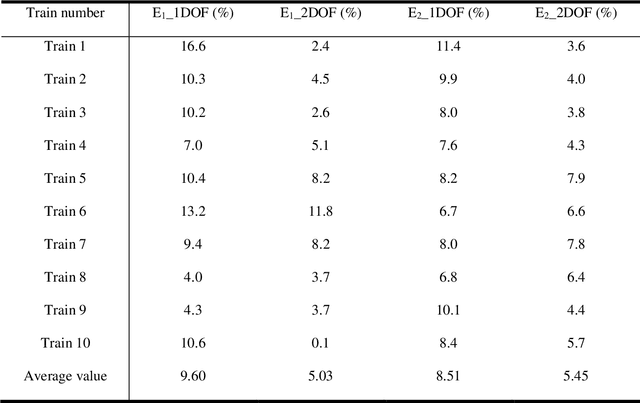

Abstract: Railroad bridge engineers are interested in bridge displacements under crossing trains to support engineering decisions about their assets. Measuring displacements under train crossing events is difficult. If simplified reference-free methods were accurate and validated, owners could conduct objective performance assessments of their bridge inventories under trains. Researchers have developed new reference-free sensing technologies to overcome the limitations of reference point-based displacement sensors. Reference-free methods use accelerometers to estimate displacements by decomposing the total displacement into two parts: a high-frequency dynamic displacement component and a low-frequency pseudo-static displacement component. In the past, researchers have used the Euler-Bernoulli beam theory formula to estimate the pseudo-static displacement, assuming railroad bridge piles and columns can be simplified as cantilever beams. However, according to railroad bridge managers, railroad bridges have a different degree of fixity for each pile of each bent. Displacements can be estimated assuming a similar degree of fixity for all deep foundations, but the resulting inherent errors affect the accuracy of the displacement estimate. This paper solves this problem by expanding the 1 Degree of Freedom (1DOF) solution to a new 2 Degrees of Freedom (2DOF) solution, to collect displacements under trains and enable cost-effective, condition-based information related to bridge safety. Researchers developed a simplified beam to demonstrate total displacement estimation using 2DOF and then validated it experimentally in the laboratory. The displacement estimated by the 2DOF model is more accurate than that of the 1DOF model for ten train crossing events. With only one additional sensor placed on the pile at ground level, this method provides owners with displacements approximately 40% more accurate.
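The decomposition described in this abstract can be written compactly as follows. The symbols are introduced here for illustration: u_d is the high-frequency dynamic component estimated from accelerometer data, and u_ps is the low-frequency pseudo-static component. The second expression is the textbook Euler-Bernoulli tip deflection of a cantilever of length L and flexural rigidity EI under a static lateral point load P at its free end. It is one common form of the cantilever simplification the abstract mentions, not necessarily the exact formula used in the paper, and its fully fixed base is the kind of idealized fixity the abstract identifies as a source of error.

```latex
u_{\mathrm{total}}(t) = u_{\mathrm{d}}(t) + u_{\mathrm{ps}}(t),
\qquad
u_{\mathrm{ps}} = \frac{P L^{3}}{3 E I}
```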

Crack detection using tap-testing and machine learning techniques to prevent potential rockfall incidents

Oct 10, 2021

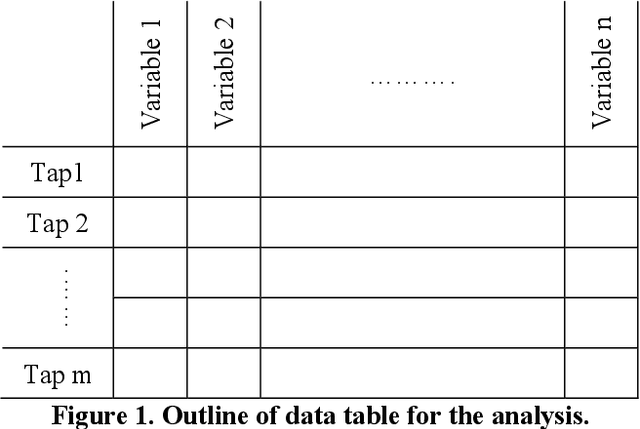

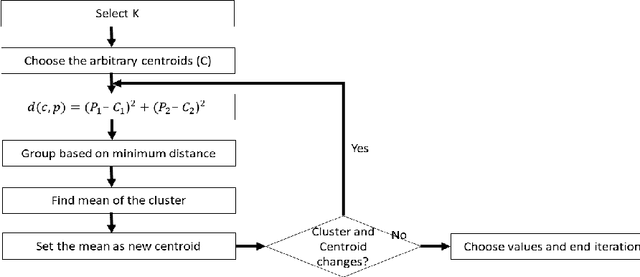

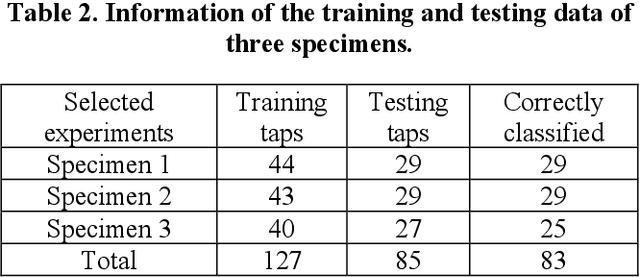

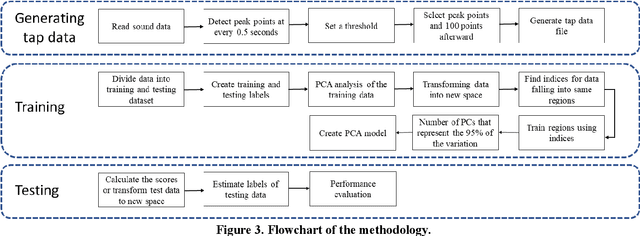

Abstract: Rockfalls are a hazard to the safety of infrastructure and people. Identifying loose rocks by inspecting slopes adjacent to roadways and other infrastructure, and removing them in advance, can be an effective way to prevent unexpected rockfall incidents. This paper proposes a system toward automated inspection for potential rockfalls. A robot repeatedly strikes, or taps, the rock surface. The robot collects the tapping sound, which is then classified with the intent of identifying rocks that are broken and prone to fall. Principal Component Analysis (PCA) of the collected acoustic data is used to recognize patterns associated with rocks in various conditions, including intact rock and rock with different types and locations of cracks. The PCA classification was first demonstrated on simulated sounds of different characteristics that were automatically trained and tested. Second, a laboratory test was conducted by tapping rock specimens with three different levels of discontinuity in depth and shape. A physical microphone mounted on the robot recorded the sound, and the data were classified into three clusters in 2D space. A model was created using the training data to classify the remainder of the data (the test data). The performance of the method is evaluated with a confusion matrix.
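The abstract describes projecting acoustic data onto principal components, classifying test data into three clusters in 2D with a model built from training data, and scoring the result with a confusion matrix. Below is a minimal, dependency-free sketch of that pipeline under stated assumptions: each recording has already been reduced to an equal-length numeric feature vector (how features are extracted is not specified here), PCA is computed by power iteration with deflation on the covariance matrix, and a nearest-centroid rule stands in for the paper's unspecified classifier. All function names are hypothetical.

```python
import random


def _mean(rows):
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def _covariance(rows, mu):
    d, n = len(mu), len(rows)
    centered = [[r[j] - mu[j] for j in range(d)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def pca_components(rows, k=2, iters=500, seed=0):
    """Top-k principal directions of the rows via power iteration with deflation."""
    mu = _mean(rows)
    cov = _covariance(rows, mu)
    d = len(mu)
    rng = random.Random(seed)
    comps = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = sum(x * x for x in w) ** 0.5
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue for the unit vector v
        lam = sum(v[i] * sum(cov[i][j] * v[j] for j in range(d)) for i in range(d))
        # Deflate: remove this direction so the next pass finds the next component
        cov = [[cov[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
        comps.append(v)
    return mu, comps


def project(rows, mu, comps):
    """Coordinates of each centered row along each principal direction."""
    return [[sum((r[j] - mu[j]) * c[j] for j in range(len(mu))) for c in comps]
            for r in rows]


def fit_centroids(points, labels):
    groups = {}
    for p, y in zip(points, labels):
        groups.setdefault(y, []).append(p)
    return {y: _mean(ps) for y, ps in groups.items()}


def predict(points, centroids):
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [min(centroids, key=lambda y: dist2(p, centroids[y])) for p in points]


def confusion_matrix(true, pred, classes):
    """Rows are true classes, columns are predicted classes."""
    idx = {c: i for i, c in enumerate(classes)}
    m = [[0] * len(classes) for _ in classes]
    for t, p in zip(true, pred):
        m[idx[t]][idx[p]] += 1
    return m
```

Mirroring the workflow in the abstract, one would fit `pca_components` and `fit_centroids` on the training recordings only, project the test recordings with the same mean and components, and pass the true and predicted labels to `confusion_matrix`. Reusing the training mean and components keeps the test data from influencing the projection.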

Reducing Gaze Distraction for Real-time Vibration Monitoring Using Augmented Reality

Oct 06, 2021

Abstract: Operators want to maintain awareness of the structure being tested while observing sensor data. Normally, the operator's gaze shifts to a separate device or screen during the experiment to read the data, missing the structure's physical response. Human-computer interaction provides valuable data and information but separates the human from reality. The sensor data do not capture experiment safety, quality, and other contextual information of critical value to the operator. To solve this problem, this research provides humans with real-time information about vibrations using an Augmented Reality (AR) application. An application is developed to overlay sensor data on the area of interest, allowing the user to perceive real-time changes that the data may not warn of. This paper presents the results of an experiment showing how AR can provide a channel for direct sensor feedback while increasing awareness of reality. In the experiment, a researcher attempts to closely follow a moving sensor with their own sensor while observing the moving sensor's data with and without AR. The results indicate that augmenting the information collected from sensors in real time narrows the operator's focus to the structure of interest, enabling more efficient and informed experimentation.
