Kaveh Malek

Department of Mechanical Engineering, University of New Mexico, New Mexico

Methodology to Deploy CNN-Based Computer Vision Models on Immersive Wearable Devices

Jun 28, 2024

Abstract:Convolutional Neural Network (CNN) models often lack the ability to incorporate human input, which can be addressed by Augmented Reality (AR) headsets. However, current AR headsets face limitations in processing power, which has prevented researchers from performing real-time, complex image recognition tasks using CNNs in AR headsets. This paper presents a method to deploy CNN models on AR headsets by training them on computers and transferring the optimized weight matrices to the headset. The approach transforms the image data and CNN layers into a one-dimensional format suitable for the AR platform. We demonstrate this method by training the LeNet-5 CNN model on the MNIST dataset using PyTorch and deploying it on a HoloLens AR headset. The results show that the model maintains an accuracy of approximately 98%, similar to its performance on a computer. This integration of CNN and AR enables real-time image processing on AR headsets, allowing for the incorporation of human input into AI models.
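The abstract does not spell out how the one-dimensional transformation works, so the following is only a minimal sketch of one plausible reading: the image and the kernel weights are stored as row-major 1D lists, and the convolution is computed with explicit index arithmetic. The function name `conv2d_flat` and the row-major layout are assumptions made for illustration, not the authors' implementation.

```python
def conv2d_flat(image, height, width, kernel, k):
    """Valid 2D cross-correlation computed on row-major 1D lists.

    image  : list of height*width floats (row-major, assumed layout)
    kernel : list of k*k floats (row-major, assumed layout)
    returns: list of (height-k+1)*(width-k+1) floats (row-major)
    """
    out_h, out_w = height - k + 1, width - k + 1
    out = [0.0] * (out_h * out_w)
    for r in range(out_h):
        for c in range(out_w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    # Map 2D coordinates to 1D offsets.
                    acc += image[(r + i) * width + (c + j)] * kernel[i * k + j]
            out[r * out_w + c] = acc
    return out


# Toy 3x3 image and 2x2 kernel, both flattened row by row.
image = [1.0, 2.0, 3.0,
         4.0, 5.0, 6.0,
         7.0, 8.0, 9.0]
kernel = [1.0, 0.0,
          0.0, 1.0]
result = conv2d_flat(image, 3, 3, kernel, 2)
print(result)
```

Under this assumption, weights trained on a computer would only need to be exported as flat arrays, and the device-side code would need nothing beyond loops and index arithmetic.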

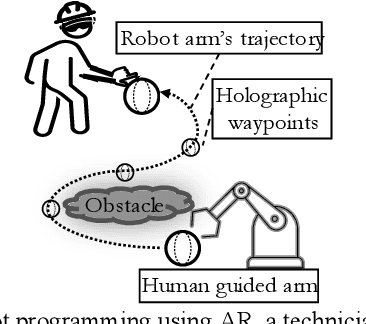

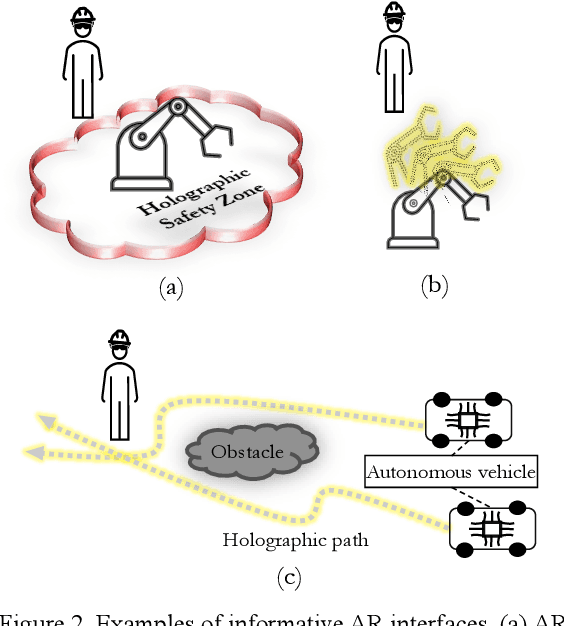

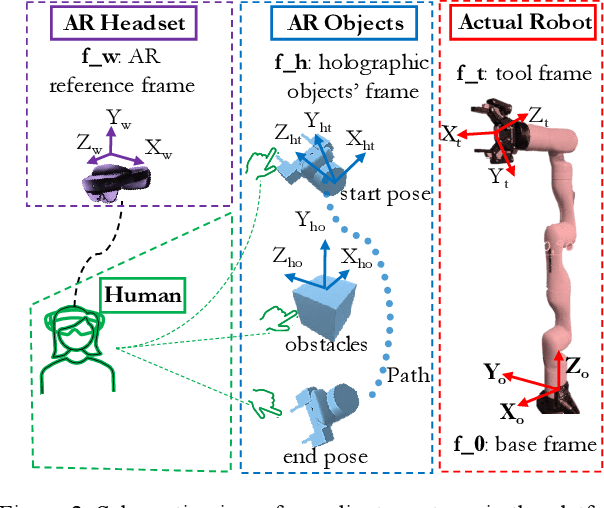

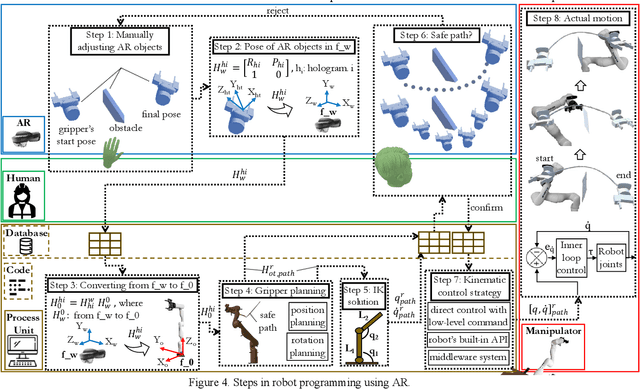

Immersive Robot Programming Interface for Human-Guided Automation and Randomized Path Planning

Jun 04, 2024

Abstract:Researchers are exploring Augmented Reality (AR) interfaces for online robot programming to streamline automation and user interaction in variable manufacturing environments. This study introduces an AR interface for online programming and data visualization that brings the human into randomized robot path planning, using human intervention to reduce the inherent randomness of these methods. The interface uses holographic items that correspond to physical elements to interact with a redundant manipulator. Using the Rapidly-exploring Random Tree Star (RRT*) and Spherical Linear Interpolation (SLERP) algorithms, the interface moves the end-effector along a collision-free path with smooth rotation. Sequential Quadratic Programming (SQP) then computes the robot's configurations for this progression. The platform executes the RRT* algorithm in a loop, with each iteration independently exploring the shortest path through random sampling, leading to variations in the optimized paths produced. These paths are then shown to AR users, who select the most appropriate path based on the environmental context and their intuition. The accuracy and effectiveness of the interface are validated through its implementation and testing with a seven-Degree-of-Freedom (DOF) manipulator, indicating its potential to advance current practices in robot programming. The validation in this paper includes two implementations demonstrating the value of human-in-the-loop operation and context awareness in robotics.
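To illustrate the smooth-rotation step, here is a minimal sketch of standard SLERP between two unit quaternions. The `(w, x, y, z)` component order, the function name `slerp`, and the near-parallel threshold are assumptions made for illustration; the abstract does not describe how the authors implement the interpolation.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    # q and -q encode the same rotation; flip one to take the shorter arc.
    if dot < 0.0:
        q1 = tuple(-c for c in q1)
        dot = -dot
    # Nearly parallel inputs: fall back to normalized linear interpolation
    # to avoid dividing by a very small sine (threshold is an assumption).
    if dot > 0.9995:
        q = tuple(a + t * (b - a) for a, b in zip(q0, q1))
        norm = math.sqrt(sum(c * c for c in q))
        return tuple(c / norm for c in q)
    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    w0 = math.sin((1.0 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return tuple(w0 * a + w1 * b for a, b in zip(q0, q1))


# Interpolate halfway between no rotation and a 90-degree turn about z.
identity = (1.0, 0.0, 0.0, 0.0)
quarter_turn_z = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
mid = slerp(identity, quarter_turn_z, 0.5)
print(mid)
```

The halfway result corresponds to a 45-degree rotation about z, which is the constant-angular-velocity behavior that makes SLERP suitable for interpolating end-effector orientation between waypoints.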
