Fazil Altinel

Spectral Transfer Guided Active Domain Adaptation For Thermal Imagery

Apr 14, 2023

Abstract: The exploitation of visible spectrum datasets has led deep networks to show remarkable success. However, real-world tasks include low-lighting conditions, which cause performance bottlenecks for models trained on large-scale RGB image datasets. Thermal IR cameras are more robust against such conditions, so thermal imagery can be useful in real-world applications. Unsupervised domain adaptation (UDA) allows transferring information from a source domain to a fully unlabeled target domain. Despite substantial improvements in UDA, the performance gap between UDA and its supervised learning counterpart remains significant. By picking a small number of target samples to annotate and using them in training, active domain adaptation tries to mitigate this gap with minimum annotation expense. We propose an active domain adaptation method in order to examine the efficiency of combining the visible spectrum and thermal imagery modalities. When the domain gap is considerably large, as in the visible-to-thermal task, we may conclude that methods without explicit domain alignment cannot achieve their full potential. To this end, we propose a spectral transfer guided active domain adaptation method that selects the most informative unlabeled target samples while aligning the source and target domains. We use the large-scale visible spectrum dataset MS-COCO as the source domain and the thermal dataset FLIR ADAS as the target domain to present the results of our method. Extensive experimental evaluation demonstrates that our proposed method outperforms state-of-the-art active domain adaptation methods. The code and models are publicly available.
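
To make the idea of "picking a small number of target samples to annotate" concrete, here is a minimal sketch of one common selection rule: rank unlabeled target samples by the entropy of the model's predicted class distribution and send the most uncertain ones to an annotator. The abstract does not state the paper's actual selection criterion, so entropy is a stand-in, and the names `entropy`, `select_for_annotation`, and `budget` are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_for_annotation(target_probs, budget):
    """Return indices of the `budget` most uncertain target samples.

    Assumption: uncertainty is measured by prediction entropy. This is an
    illustrative criterion, not necessarily the one used in the paper.
    """
    ranked = sorted(
        range(len(target_probs)),
        key=lambda i: entropy(target_probs[i]),
        reverse=True,  # highest entropy (most uncertain) first
    )
    return ranked[:budget]


# Toy softmax outputs for four unlabeled thermal images (made-up values).
target_probs = [
    [0.98, 0.01, 0.01],  # confident prediction
    [0.34, 0.33, 0.33],  # nearly uniform, very uncertain
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],
]
selected = select_for_annotation(target_probs, budget=2)
print(selected)
```

With these toy values the nearly uniform prediction ranks first, which is the behavior an uncertainty-based selection rule is meant to produce.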

Self-training Guided Adversarial Domain Adaptation For Thermal Imagery

Jun 14, 2021

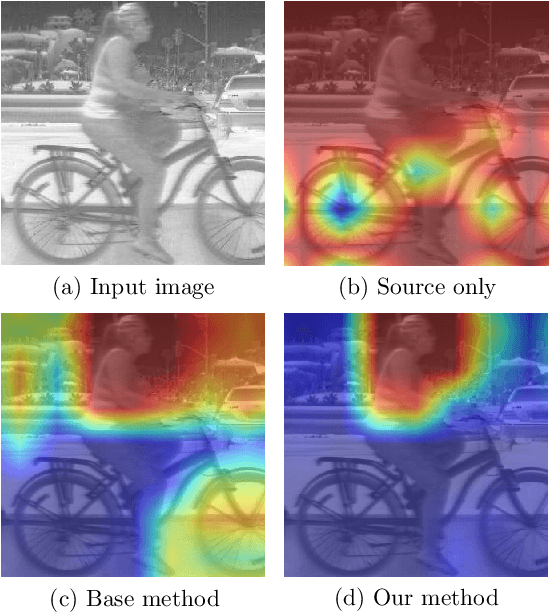

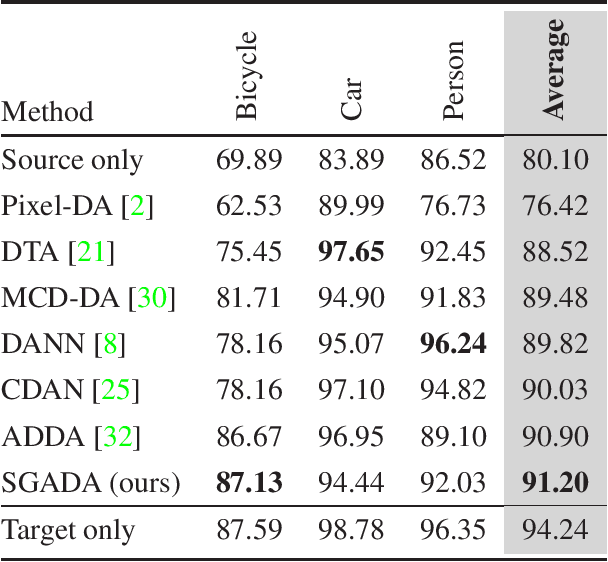

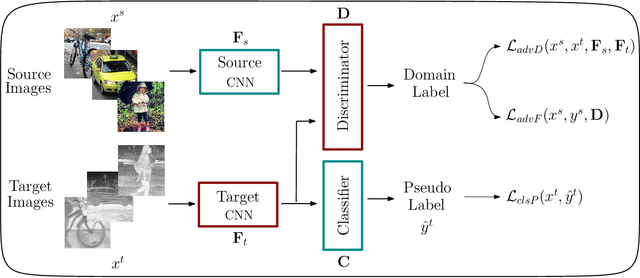

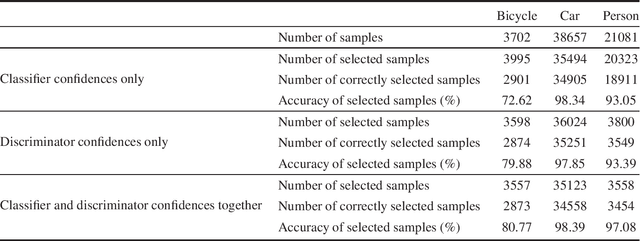

Abstract: Deep models trained on large-scale RGB image datasets have shown tremendous success. It is important to apply such deep models to real-world problems. However, these models suffer from a performance bottleneck under illumination changes. Thermal IR cameras are more robust against such changes, and thus can be very useful for real-world problems. In order to investigate the efficacy of combining feature-rich visible spectrum and thermal image modalities, we propose an unsupervised domain adaptation method which does not require RGB-to-thermal image pairs. We employ the large-scale RGB dataset MS-COCO as the source domain and the thermal dataset FLIR ADAS as the target domain to demonstrate the results of our method. Although adversarial domain adaptation methods aim to align the distributions of the source and target domains, simply aligning the distributions cannot guarantee perfect generalization to the target domain. To this end, we propose a self-training guided adversarial domain adaptation method to promote the generalization capabilities of adversarial domain adaptation methods. To perform self-training, pseudo labels are assigned to samples from the target thermal domain so that the model learns more generalized representations for the target domain. Extensive experimental analyses show that our proposed method achieves better results than state-of-the-art adversarial domain adaptation methods. The code and models are publicly available.
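
The pseudo-labeling step mentioned in the abstract can be sketched as follows. This is a generic confidence-thresholded variant, assuming the model outputs a softmax distribution per target sample. The threshold value and the name `assign_pseudo_labels` are hypothetical, and the paper's own assignment rule may differ.

```python
def assign_pseudo_labels(target_probs, threshold=0.9):
    """Map sample index -> pseudo label for confident target predictions.

    Assumption: a sample receives a pseudo label only when its highest
    class probability reaches `threshold`; other samples stay unlabeled.
    """
    pseudo = {}
    for idx, probs in enumerate(target_probs):
        confidence = max(probs)
        if confidence >= threshold:
            pseudo[idx] = probs.index(confidence)  # argmax class index
    return pseudo


# Toy softmax outputs for three unlabeled thermal images (made-up values).
target_probs = [
    [0.95, 0.03, 0.02],
    [0.40, 0.35, 0.25],
    [0.05, 0.92, 0.03],
]
pseudo_labels = assign_pseudo_labels(target_probs, threshold=0.9)
print(pseudo_labels)
```

Samples that receive a pseudo label can then be treated as labeled target data in the next training round, while low-confidence samples are skipped.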

Deep Structured Energy-Based Image Inpainting

Aug 30, 2018

Abstract: In this paper, we propose a structured image inpainting method employing an energy-based model. In order to learn the structural relationship between patterns observed in images and the missing regions of the images, we employ an energy-based structured prediction method. The structural relationship is learned by minimizing an energy function which is defined by a simple convolutional neural network. The experimental results on various benchmark datasets show that our proposed method significantly outperforms state-of-the-art methods which use Generative Adversarial Networks (GANs). We obtained a mean squared error (MSE) of 497.35 on the Olivetti face dataset, compared to 833.0 MSE for the state-of-the-art method. Moreover, we obtained a peak signal-to-noise ratio (PSNR) of 28.4 dB on the SVHN dataset and 23.53 dB on the CelebA dataset, compared to 22.3 dB and 21.3 dB, respectively, for the state-of-the-art methods. The code is publicly available.
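
For readers unfamiliar with the two metrics reported above, the sketch below computes MSE and PSNR between an original image and a reconstruction, using the standard definition PSNR = 10 · log10(MAX² / MSE). Images are represented as flat lists of pixel intensities, and an 8-bit range (MAX = 255) is assumed by default. The abstract does not specify the pixel range used in the paper, so that default is an assumption.

```python
import math


def mse(original, restored):
    """Mean squared error between two equally sized pixel sequences."""
    if len(original) != len(restored) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(original, restored)) / len(original)


def psnr(original, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB; higher means a closer match.

    Assumption: `max_value` is the largest possible pixel intensity
    (255 for 8-bit images).
    """
    err = mse(original, restored)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / err)


# Toy 4-pixel example (made-up values, not data from the paper).
original = [0, 255, 128, 64]
restored = [0, 250, 128, 69]
print(mse(original, restored))
print(round(psnr(original, restored), 2))
```

Lower MSE and higher PSNR both indicate a reconstruction closer to the original, which is why the abstract reports improvements in opposite directions for the two metrics.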
