Self-training Guided Adversarial Domain Adaptation For Thermal Imagery

Paper and Code

Jun 14, 2021

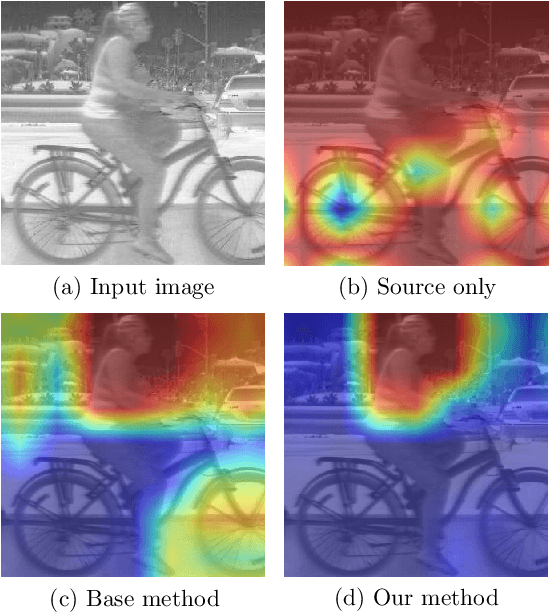

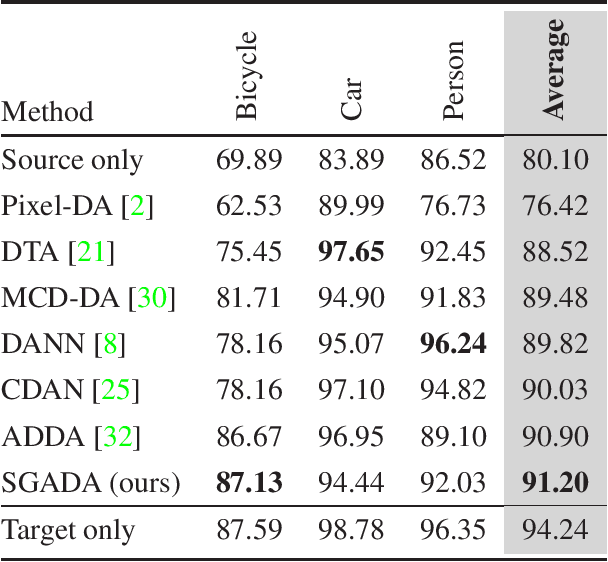

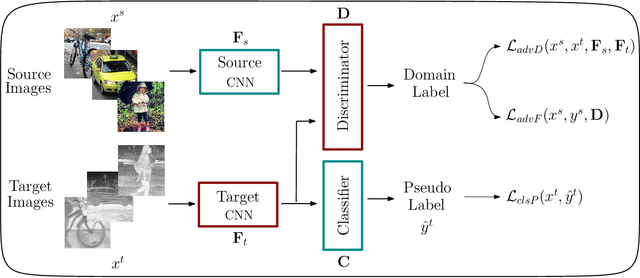

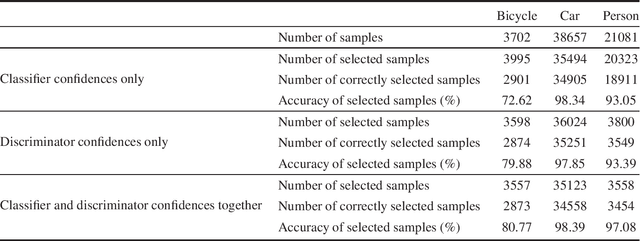

Deep models trained on large-scale RGB image datasets have shown tremendous success, and it is important to apply them to real-world problems. However, these models suffer from a performance bottleneck under illumination changes. Thermal IR cameras are more robust against such changes and can therefore be very useful for real-world problems. To investigate the efficacy of combining the feature-rich visible spectrum with the thermal image modality, we propose an unsupervised domain adaptation method that does not require RGB-to-thermal image pairs. We employ the large-scale RGB dataset MS-COCO as the source domain and the thermal dataset FLIR ADAS as the target domain to demonstrate the results of our method. Although adversarial domain adaptation methods aim to align the distributions of the source and target domains, simply aligning the distributions cannot guarantee perfect generalization to the target domain. To this end, we propose a self-training guided adversarial domain adaptation method that promotes the generalization capabilities of adversarial domain adaptation methods. To perform self-training, pseudo labels are assigned to samples in the target thermal domain so that the model learns more generalized representations for that domain. Extensive experimental analyses show that our proposed method achieves better results than state-of-the-art adversarial domain adaptation methods. The code and models are publicly available.
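The abstract does not say how pseudo labels are chosen, so the following is only a minimal sketch of one common approach: keep a target-domain prediction as a pseudo label when the classifier's softmax confidence exceeds a threshold. The function names, the threshold value of 0.9, and the example logits are all hypothetical and are not taken from the paper or its released code.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def assign_pseudo_labels(target_logits, threshold=0.9):
    """Return (sample_index, predicted_class) pairs for confident samples.

    The confidence threshold is an assumption of this sketch, not a value
    reported by the authors.
    """
    selected = []
    for index, logits in enumerate(target_logits):
        probs = softmax(logits)
        confidence = max(probs)
        if confidence >= threshold:
            selected.append((index, probs.index(confidence)))
    return selected


# Hypothetical classifier outputs for three unlabeled thermal images.
target_logits = [
    [4.0, 0.1, 0.2],  # confident prediction for class 0
    [1.0, 1.1, 0.9],  # ambiguous, so no pseudo label is assigned
    [0.0, 0.3, 5.0],  # confident prediction for class 2
]
pseudo_labels = assign_pseudo_labels(target_logits, threshold=0.9)
print(pseudo_labels)  # [(0, 0), (2, 2)]
```

In a self-training setup of this kind, the selected pairs would typically be added to the supervised loss alongside the labeled source samples, while the adversarial objective continues to align source and target features. How the paper combines the two objectives is not stated in the abstract.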
