Fatma Arslan

A Benchmark Dataset of Check-worthy Factual Claims

Apr 29, 2020

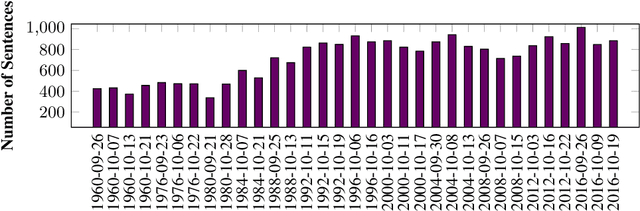

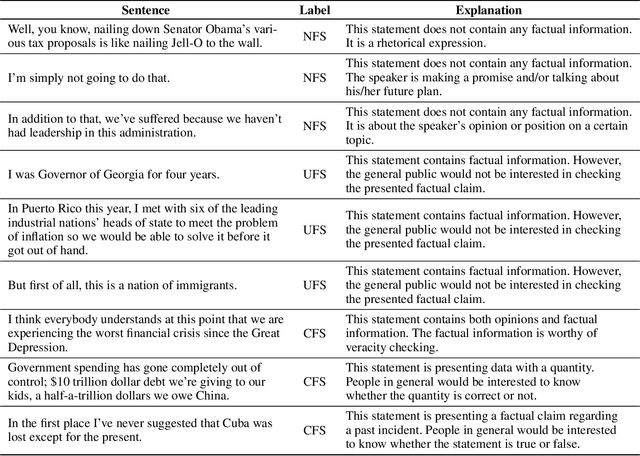

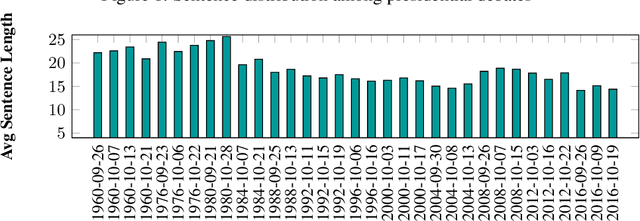

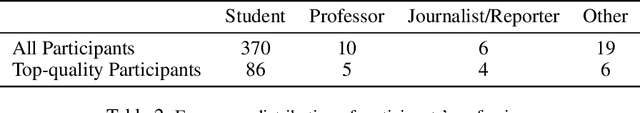

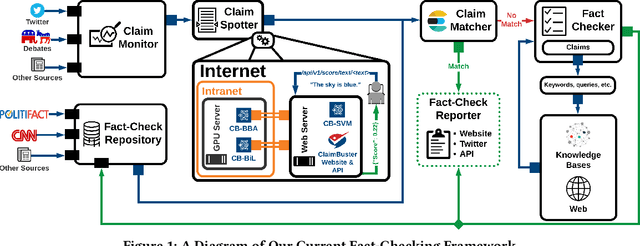

Abstract:In this paper we present the ClaimBuster dataset of 23,533 statements extracted from all U.S. general election presidential debates and annotated by human coders. The ClaimBuster dataset can be leveraged in building computational methods to identify claims that are worth fact-checking from the myriad of sources of digital or traditional media. The ClaimBuster dataset is publicly available to the research community, and it can be found at http://doi.org/10.5281/zenodo.3609356.

Gradient-Based Adversarial Training on Transformer Networks for Detecting Check-Worthy Factual Claims

Feb 18, 2020

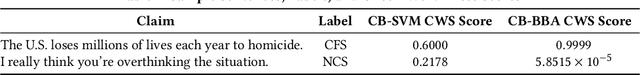

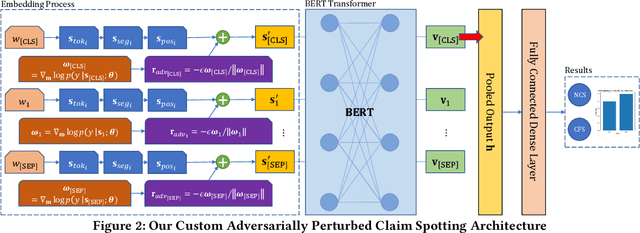

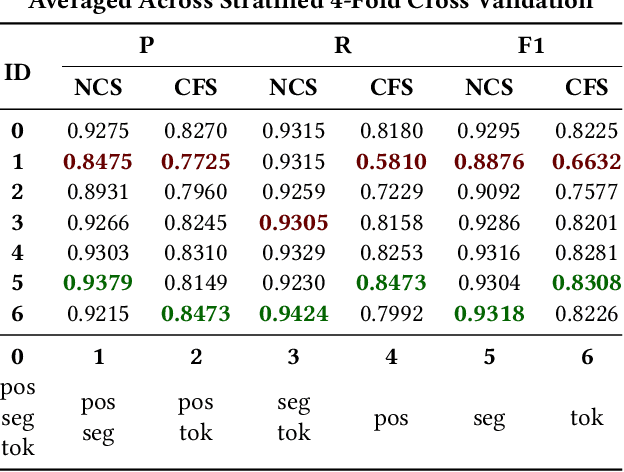

Abstract:We present a study on the efficacy of adversarial training on transformer neural network models, with respect to the task of detecting check-worthy claims. In this work, we introduce the first adversarially-regularized, transformer-based claim spotter model that achieves state-of-the-art results on multiple challenging benchmarks. We obtain a 4.31 point F1-score improvement and a 1.09 point mAP score improvement over current state-of-the-art models on the ClaimBuster Dataset and CLEF2019 Dataset, respectively. In the process, we propose a method to apply adversarial training to transformer models, which has the potential to be generalized to many similar text classification tasks. Along with our results, we are releasing our codebase and manually labeled datasets. We also showcase our models' real world usage via a live public API.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge