Farnoud Farahmand

Pyramid: Machine Learning Framework to Estimate the Optimal Timing and Resource Usage of a High-Level Synthesis Design

Jul 29, 2019

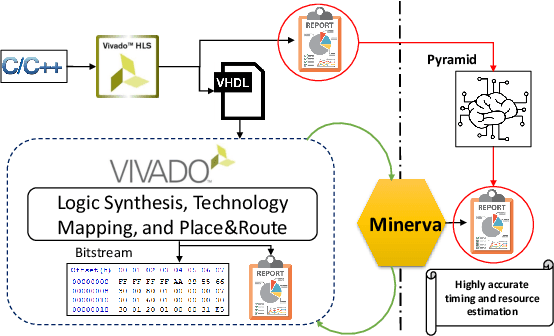

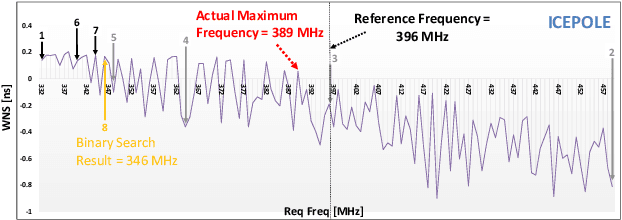

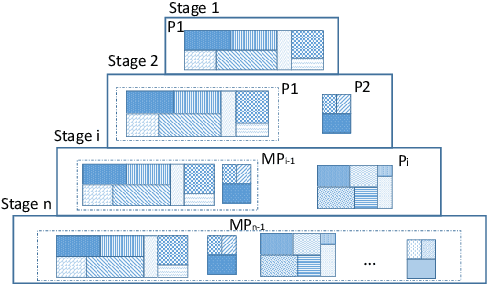

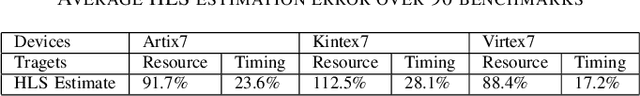

Abstract: The emergence of High-Level Synthesis (HLS) tools shifted the paradigm of hardware design by making it feasible to map high-level programming languages such as C to hardware descriptions in VHDL/Verilog. HLS tools offer a plethora of techniques to optimize designs for both area and performance, but the resource usage and timing figures they report usually deviate from the post-implementation results. In addition, to evaluate the performance of a hardware design, it is critical to determine the maximum achievable clock frequency. Obtaining this information from the static timing analysis provided by CAD tools is difficult because of the multitude of tool options. Moreover, a binary search for the maximum frequency is tedious and time-consuming, and it often does not find the optimal result. To address these challenges, we propose a framework, called Pyramid, that uses machine learning to accurately estimate the optimal performance and resource utilization of an HLS design. For this purpose, we first create a database of C-to-FPGA results from a diverse set of benchmarks. To find the maximum achievable clock frequency, we use Minerva, an automated hardware optimization tool. Minerva determines close-to-optimal tool settings using static timing analysis and a heuristic algorithm, and it targets either optimal throughput or optimal throughput-to-area ratio. Pyramid uses the database to train an ensemble machine learning model that maps the HLS-reported features to the results of Minerva. In this way, Pyramid re-calibrates the results of HLS to bridge the accuracy gap. It enables developers to estimate the throughput or throughput-to-area ratio of a hardware design with more than 95% accuracy and alleviates the need to perform the actual implementation for estimation.
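To make the cost of the binary-search baseline mentioned in the abstract concrete, the following is a minimal sketch, not the method used by Pyramid or Minerva. The names `max_frequency_binary_search` and `fake_implementation_run`, the 100 to 400 MHz range, and the 237.5 MHz threshold are all hypothetical. In a real flow, each call to `meets_timing` would stand for a full synthesis and place-and-route run followed by static timing analysis.

```python
from typing import Callable, Optional, Tuple


def max_frequency_binary_search(
    meets_timing: Callable[[float], bool],
    low_mhz: float,
    high_mhz: float,
    tolerance_mhz: float = 1.0,
) -> Tuple[Optional[float], int]:
    """Binary-search the target clock frequency.

    Returns the highest target frequency that was observed to meet timing
    (or None if no probe passed) and the number of implementation runs used.
    """
    runs = 0
    best: Optional[float] = None
    while high_mhz - low_mhz > tolerance_mhz:
        mid = (low_mhz + high_mhz) / 2.0
        runs += 1  # each probe is one full implementation run in practice
        if meets_timing(mid):
            best = mid
            low_mhz = mid  # timing met: try a higher target
        else:
            high_mhz = mid  # timing failed: try a lower target
    return best, runs


def fake_implementation_run(target_mhz: float) -> bool:
    """Hypothetical stand-in for a CAD tool run: passes up to 237.5 MHz."""
    return target_mhz <= 237.5


if __name__ == "__main__":
    best, runs = max_frequency_binary_search(fake_implementation_run, 100.0, 400.0)
    print(f"best passing target: {best:.2f} MHz after {runs} runs")
```

With this range and a 1 MHz tolerance the search needs 9 probes, which in practice means 9 complete implementation runs for a single design and a single set of tool options. The search also assumes that pass/fail is monotonic in the target frequency. Real tools need not behave that way, which is one plausible reason a binary search can miss the best achievable frequency.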
