Fardin Saad

Gricean Norms as a Basis for Effective Collaboration

Mar 18, 2025

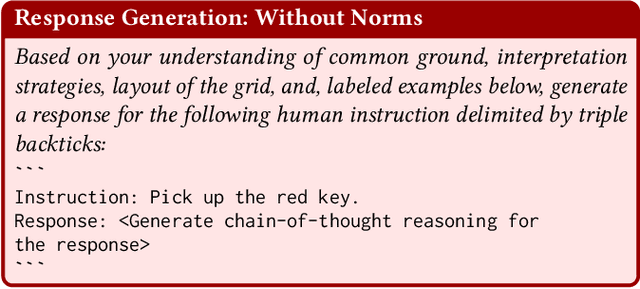

Abstract: Effective human-AI collaboration hinges not only on the AI agent's ability to follow explicit instructions but also on its capacity to navigate ambiguity, incompleteness, invalidity, and irrelevance in communication. Gricean conversational and inference norms facilitate collaboration by aligning unclear instructions with cooperative principles. We propose a normative framework that integrates Gricean norms and cognitive frameworks (common ground, relevance theory, and theory of mind) into large language model (LLM)-based agents. The framework adopts the Gricean maxims of quantity, quality, relation, and manner, along with inference, as Gricean norms for interpreting unclear instructions, that is, instructions that are ambiguous, incomplete, invalid, or irrelevant. Within this framework, we introduce Lamoids, GPT-4 powered agents designed to collaborate with humans. To assess the influence of Gricean norms on human-AI collaboration, we evaluate two versions of a Lamoid: one with norms and one without. In our experiments, a Lamoid collaborates with a human to achieve shared goals in a grid world (Doors, Keys, and Gems) by interpreting both clear and unclear natural language instructions. Our results reveal that the Lamoid with Gricean norms achieves higher task accuracy and generates clearer, more accurate, and more contextually relevant responses than the Lamoid without norms. This improvement stems from the normative framework, which enhances the agent's pragmatic reasoning, fostering effective human-AI collaboration and enabling context-aware communication in LLM-based agents.

Is Speech Emotion Recognition Language-Independent? Analysis of English and Bangla Languages using Language-Independent Vocal Features

Nov 21, 2021

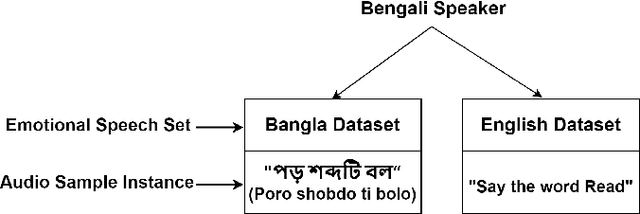

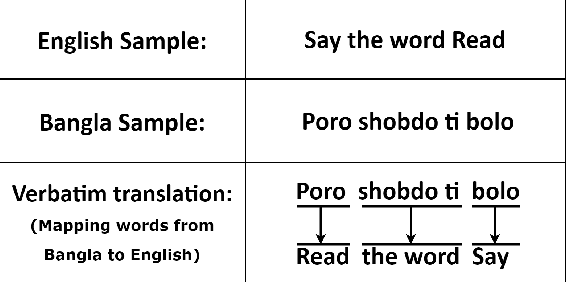

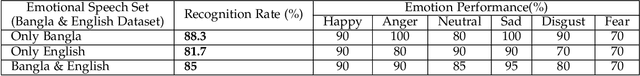

Abstract: A language-agnostic approach to recognizing emotions from speech remains an open and challenging problem. In this paper, we used Bangla and English to assess whether distinguishing emotions from speech is independent of language. The following emotions were categorized for this study: happiness, anger, neutral, sadness, disgust, and fear. We employed three emotional speech sets, of which the first two were developed by native Bengali speakers in Bangla and English separately. The third was the Toronto Emotional Speech Set (TESS), which was developed by native English speakers from Canada. We carefully selected language-independent prosodic features, adopted a Support Vector Machine (SVM) model, and conducted three experiments to test our proposition. In the first experiment, we measured the performance of the three speech sets individually. In the second experiment, we recorded the classification rate after combining the speech sets. Finally, in the third experiment, we measured the recognition rate when training and testing the model on different speech sets. Although this study reveals that Speech Emotion Recognition (SER) is mostly language-independent, there is some disparity in recognizing emotional states such as disgust and fear across these two languages. Moreover, our investigations suggest that non-native speakers convey emotions through speech much as they do in their native tongue.
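
The three-experiment design described in the abstract can be pictured as a list of train/test pairings. The sketch below only illustrates that layout and is not the authors' code: the corpus labels are hypothetical (the abstract names only TESS), and it assumes that experiment 2 pools all three sets and that experiment 3 trains on one set and tests on another.

```python
from itertools import permutations

# Hypothetical labels for the three speech sets; only TESS is named in the abstract.
CORPORA = ["bangla_set", "english_by_bengali_speakers_set", "tess"]


def experiment_plan(corpora):
    """Enumerate (train_sets, test_sets) pairings for the three experiments."""
    return {
        # Experiment 1: each speech set is evaluated on its own.
        "individual": [((c,), (c,)) for c in corpora],
        # Experiment 2 (assumed): all speech sets are pooled into one dataset.
        "combined": [(tuple(corpora), tuple(corpora))],
        # Experiment 3 (assumed): train on one set, test on a different one.
        "cross_corpus": [((a,), (b,)) for a, b in permutations(corpora, 2)],
    }


plan = experiment_plan(CORPORA)
for name, runs in plan.items():
    print(name, len(runs))
```

Where the train and test sets coincide (experiments 1 and 2), an actual evaluation would still need a held-out split or cross-validation within the data; the abstract does not say which was used.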
