Fanfan Ye

Self-distilled Knowledge Delegator for Exemplar-free Class Incremental Learning

May 23, 2022

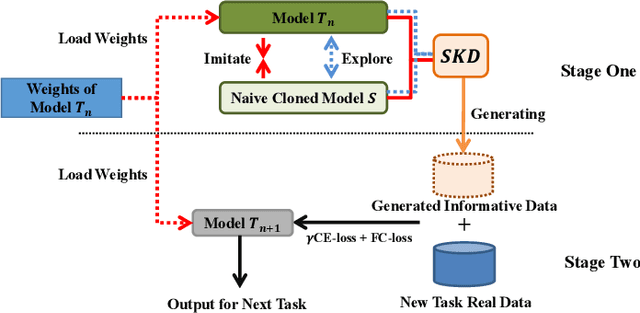

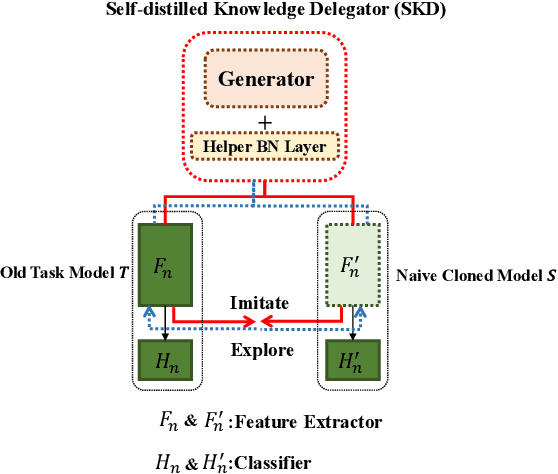

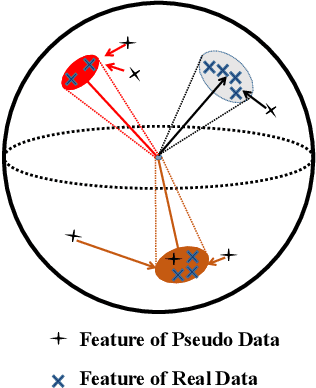

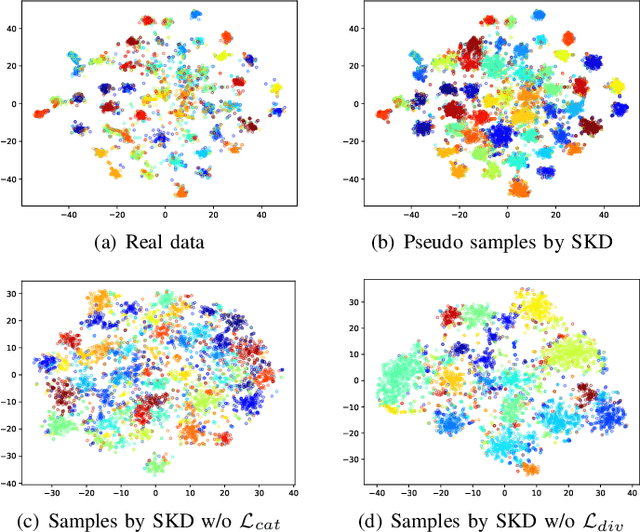

Abstract: Exemplar-free incremental learning is extremely challenging due to the inaccessibility of data from old tasks. In this paper, we attempt to exploit the knowledge encoded in a previously trained classification model to handle the catastrophic forgetting problem in continual learning. Specifically, we introduce a so-called knowledge delegator, which is capable of transferring knowledge from the trained model to a randomly re-initialized new model by generating informative samples. Given only the previous model, the delegator is effectively learned using a self-distillation mechanism in a data-free manner. The knowledge extracted by the delegator is then used to maintain the performance of the model on old tasks in incremental learning. This simple incremental learning framework surpasses existing exemplar-free methods by a large margin on four widely used class incremental benchmarks, namely CIFAR-100, ImageNet-Subset, Caltech-101 and Flowers-102. Notably, we achieve performance comparable to some exemplar-based methods without accessing any exemplars.
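The role of distillation in preserving old-task behaviour can be illustrated with a generic temperature-scaled distillation loss. This is a minimal sketch of the standard technique, not the loss used in the paper: the function names, the temperature value, and the example logits are all assumptions made for illustration. The loss is the KL divergence between the old model's softened predictions and the new model's predictions on the same (for example, generated) sample.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened class probabilities.

    Hypothetical helper: a generic distillation loss, assumed here
    only to illustrate how an old model can supervise a new one.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

# Illustrative logits for one sample over three old classes.
old_model_logits = [4.0, 1.0, 0.5]
matching_student = [4.0, 1.0, 0.5]   # new model agrees with the old one
drifted_student = [0.5, 1.0, 4.0]    # new model has "forgotten" the old task

loss_matching = distillation_loss(old_model_logits, matching_student)
loss_drifted = distillation_loss(old_model_logits, drifted_student)
```

The loss vanishes when the new model reproduces the old model's predictions and grows as they diverge, which is the property that makes it usable as a forgetting penalty.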

Topology-aware Convolutional Neural Network for Efficient Skeleton-based Action Recognition

Dec 09, 2021

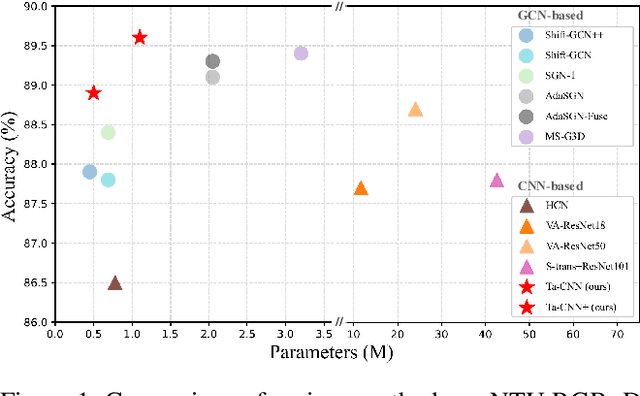

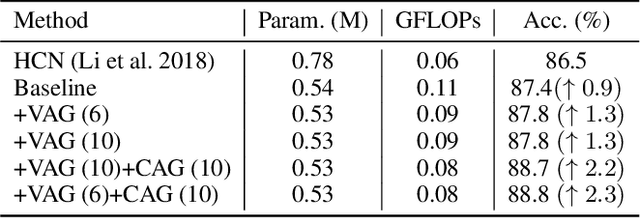

Abstract: In the context of skeleton-based action recognition, graph convolutional networks (GCNs) have been rapidly developed, whereas convolutional neural networks (CNNs) have received less attention. One reason is that CNNs are considered poor at modeling the irregular skeleton topology. To alleviate this limitation, we propose a pure CNN architecture named Topology-aware CNN (Ta-CNN) in this paper. In particular, we develop a novel cross-channel feature augmentation module, which is a combination of map-attend-group-map operations. By applying the module to the coordinate level and then to the joint level, the topology feature is effectively enhanced. Notably, we theoretically prove that graph convolution is a special case of normal convolution when the joint dimension is treated as channels. This confirms that the topology modeling power of GCNs can also be implemented by using a CNN. Moreover, we design a SkeletonMix strategy which mixes two persons in a unique manner and further boosts the performance. Extensive experiments are conducted on four widely used datasets, i.e., N-UCLA, SBU, NTU RGB+D and NTU RGB+D 120, to verify the effectiveness of Ta-CNN. We surpass existing CNN-based methods significantly. Compared with leading GCN-based methods, we achieve comparable performance with much lower complexity in terms of the required GFLOPs and parameters.
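The claim that graph convolution is a special case of normal convolution can be checked numerically for the aggregation step alone. The sketch below is an illustration under stated assumptions, not the paper's proof: it assumes features laid out as coordinates by joints, an arbitrary example adjacency matrix, and hypothetical helper names. Aggregating over neighbours with an adjacency matrix gives the same result as a 1x1 convolution whose input channels are the joints and whose weight matrix is the transposed adjacency.

```python
def matmul(a, b):
    """Plain matrix product of two nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def graph_aggregate(features, adjacency):
    """Graph-style neighbour aggregation: features (C x V) times adjacency (V x V)."""
    return matmul(features, adjacency)

def pointwise_conv_over_joints(features, weight):
    """1x1 convolution with joints as channels.

    The input is viewed as V channels over C positions; `weight` has
    shape V_out x V_in and mixes the joint channels at every position.
    """
    joints_as_channels = transpose(features)      # V x C
    mixed = matmul(weight, joints_as_channels)    # V_out x C
    return transpose(mixed)                       # C x V_out

# Illustrative data: 2 coordinates, 3 joints, an asymmetric adjacency.
adjacency = [[1, 2, 0],
             [0, 1, 3],
             [4, 0, 1]]
features = [[1.0, 2.0, 3.0],
            [4.0, 5.0, 6.0]]

gcn_out = graph_aggregate(features, adjacency)
cnn_out = pointwise_conv_over_joints(features, transpose(adjacency))
print(gcn_out == cnn_out)  # True
```

A learned 1x1 convolution is free to use any weight matrix, so the fixed adjacency is one point in the space of weights the convolution can represent.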

Dynamic GCN: Context-enriched Topology Learning for Skeleton-based Action Recognition

Jul 29, 2020

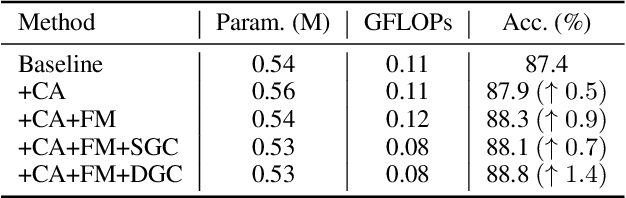

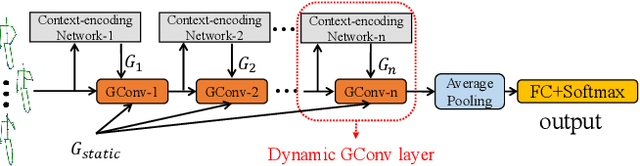

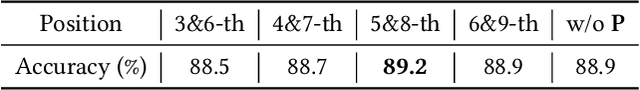

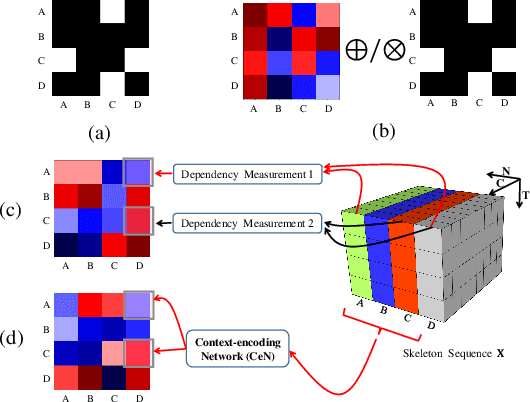

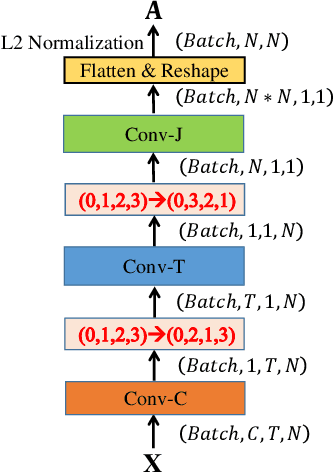

Abstract: Graph Convolutional Networks (GCNs) have attracted increasing interest for the task of skeleton-based action recognition. The key lies in the design of the graph structure, which encodes skeleton topology information. In this paper, we propose Dynamic GCN, in which a novel convolutional neural network named Context-encoding Network (CeN) is introduced to learn skeleton topology automatically. In particular, when learning the dependency between two joints, contextual features from the remaining joints are incorporated in a global manner. CeN is extremely lightweight yet effective, and can be embedded into a graph convolutional layer. By stacking multiple CeN-enabled graph convolutional layers, we build Dynamic GCN. Notably, as a merit of CeN, dynamic graph topologies are constructed for different input samples as well as for graph convolutional layers of various depths. Besides, three alternative context modeling architectures are explored, which may serve as a guideline for future research on graph topology learning. CeN brings only ~7% extra FLOPs for the baseline model, and Dynamic GCN achieves better performance with $2\times$ to $4\times$ fewer FLOPs than existing methods. By further combining static physical body connections and motion modalities, we achieve state-of-the-art performance on three large-scale benchmarks, namely NTU-RGB+D, NTU-RGB+D 120 and Skeleton-Kinetics.
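What "dynamic graph topologies for different input samples" means can be shown with a toy example. The sketch below is a deliberately simplified stand-in, not CeN: it builds the adjacency from pairwise dot-product similarity followed by a row-wise softmax, whereas the abstract describes CeN as also incorporating context from the remaining joints. The function name and the sample features are assumptions made for illustration.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def dynamic_adjacency(joint_features):
    """Build a sample-dependent V x V adjacency (each row sums to 1).

    Hypothetical stand-in for a learned topology module: entry (i, j)
    is the softmax-normalised similarity between joints i and j.
    """
    adjacency = []
    for f_i in joint_features:
        scores = [dot(f_i, f_j) for f_j in joint_features]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        adjacency.append([e / total for e in exps])
    return adjacency

# Two illustrative samples with 3 joints and 2-dimensional joint features.
sample_a = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # joint 0 resembles joint 1
sample_b = [[1.0, 0.0], [0.0, 1.0], [0.1, 0.9]]  # joint 0 resembles joint 2

adj_a = dynamic_adjacency(sample_a)
adj_b = dynamic_adjacency(sample_b)
```

In `adj_a` joint 0 attends more strongly to joint 1, while in `adj_b` it attends more strongly to joint 2, so the graph changes with the input rather than being fixed in advance.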
