Fabrice Benhamouda

FALCON: Honest-Majority Maliciously Secure Framework for Private Deep Learning

Apr 05, 2020

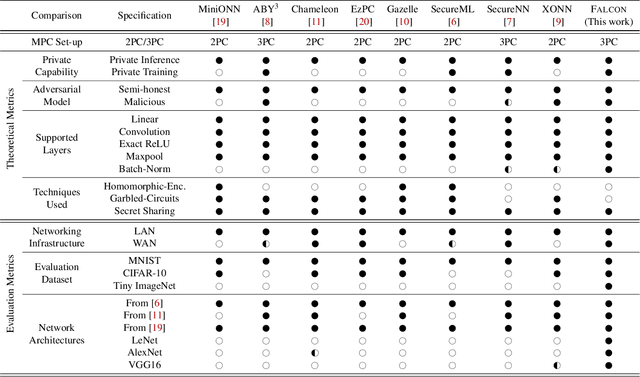

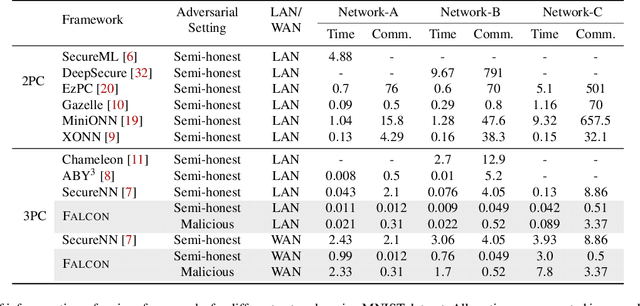

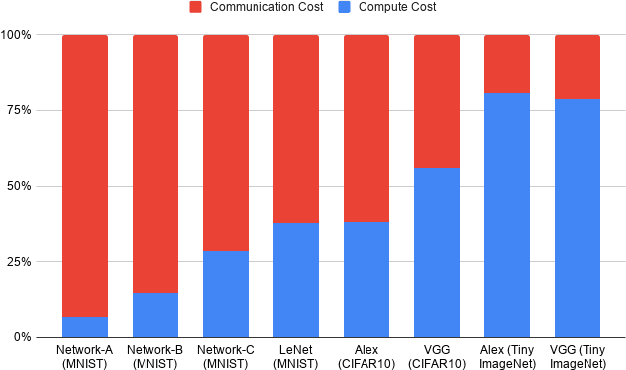

Abstract: This paper aims to enable training and inference of neural networks in a manner that protects the privacy of sensitive data. We propose FALCON, an end-to-end 3-party protocol for fast and secure computation of deep learning algorithms on large networks. FALCON presents three main advantages. First, it is highly expressive. To the best of our knowledge, it is the first secure framework to support high-capacity networks with over a hundred million parameters, such as VGG16, as well as the first to support batch normalization, a critical component of deep learning that enables training of complex network architectures such as AlexNet. Next, FALCON guarantees security with abort against malicious adversaries, assuming an honest majority. It ensures that the protocol either completes with correct output for honest participants or aborts when it detects the presence of a malicious adversary. Lastly, FALCON presents new theoretical insights for protocol design that make it highly efficient and allow it to outperform existing secure deep learning solutions. Compared to prior art for private inference, we are about 8x faster than SecureNN (PETS '19) on average and comparable to ABY3 (CCS '18). We are about 16-200x more communication efficient than either of these. For private training, we are about 6x faster than SecureNN, 4.4x faster than ABY3, and about 2-60x more communication efficient. This is the first paper to show, via experiments in the WAN setting, that for multi-party machine learning computations over large networks and datasets, compute operations, rather than communication, dominate the overall latency.

How to Profile Privacy-Conscious Users in Recommender Systems

Dec 01, 2018

Abstract: Matrix factorization is a popular method to build a recommender system. In such a system, existing users and items are each associated with a low-dimensional vector called a profile. The profiles of a user and of an item can be combined (via inner product) to predict the rating that the user would give to the item. One important issue of such a system is the so-called cold-start problem: how to allow a new user to learn her profile, so that she can then get accurate recommendations? While a profile can be computed if the user is willing to rate well-chosen items and/or provide supplemental attributes or demographics (such as gender), revealing this additional information is known to allow the analyst of the recommender system to infer much more sensitive personal information. We design a protocol to allow privacy-conscious users to benefit from matrix-factorization-based recommender systems while preserving their privacy. More precisely, our protocol enables a user to learn her profile, and from that to predict ratings, without the user revealing any personal information. The protocol is secure in the standard model against semi-honest adversaries.
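
The rating prediction described in the abstract, an inner product of a user profile and an item profile, can be sketched in a few lines of Python. This is only an illustration of the plaintext prediction step, not the paper's privacy-preserving protocol. The function name `predict_rating` and the profile values are hypothetical, chosen for the example.

```python
def predict_rating(user_profile, item_profile):
    """Predict a rating as the inner product of two profile vectors."""
    if len(user_profile) != len(item_profile):
        raise ValueError("profiles must have the same dimension")
    return sum(u * v for u, v in zip(user_profile, item_profile))


# Hypothetical 3-dimensional profiles (illustrative values, not from the paper).
user = [1.0, 0.5, -2.0]
item = [2.0, 4.0, 0.5]

rating = predict_rating(user, item)
print(rating)
```

In a real matrix-factorization system, the profiles would be learned from observed ratings. The cold-start problem in the abstract concerns obtaining `user` for someone who has no rating history yet.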
