Fabio Massacci

Repairing vulnerabilities without invisible hands. A differentiated replication study on LLMs

Jul 28, 2025

Abstract: Background: Automated Vulnerability Repair (AVR) is a fast-growing branch of program repair. Recent studies show that large language models (LLMs) outperform traditional techniques, extending their success beyond code generation and fault detection. Hypothesis: These gains may be driven by hidden factors ("invisible hands" such as training-data leakage or perfect fault localization) that let an LLM reproduce human-authored fixes for the same code. Objective: We replicate prior AVR studies under controlled conditions by deliberately adding errors to the reported vulnerability location in the prompt. If LLMs merely regurgitate memorized fixes, both small and large localization errors should yield the same number of correct patches, because any offset should divert the model from the original fix. Method: Our pipeline repairs vulnerabilities from the Vul4J and VJTrans benchmarks after shifting the fault location by n lines from the ground truth. A first LLM generates a patch, a second LLM reviews it, and we validate the result with regression and proof-of-vulnerability tests. Finally, we manually audit a sample of patches and estimate the error rate with the Agresti-Coull-Wilson method.
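The abstract above names the Agresti-Coull-Wilson method for estimating the error rate from a manually audited sample but does not spell out the formula. As a minimal sketch, the two standard binomial intervals that name suggests can be computed as follows. The function names, the 95% default (`z = 1.96`), and the sample counts in the usage note are illustrative assumptions, not values from the study.

```python
import math


def wilson_interval(errors: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (errors out of n audited)."""
    if n <= 0 or not 0 <= errors <= n:
        raise ValueError("need n > 0 and 0 <= errors <= n")
    p = errors / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def agresti_coull_interval(errors: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Agresti-Coull interval: a Wald interval around the Wilson center."""
    if n <= 0 or not 0 <= errors <= n:
        raise ValueError("need n > 0 and 0 <= errors <= n")
    n_adj = n + z * z
    p_adj = (errors + z * z / 2) / n_adj  # same center as the Wilson interval
    half = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    return max(0.0, p_adj - half), min(1.0, p_adj + half)
```

For a hypothetical audit that finds 5 wrong patches in a sample of 50, both functions return an interval around roughly 0.13, with the Agresti-Coull interval slightly wider than the Wilson one.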

Today's Cat Is Tomorrow's Dog: Accounting for Time-Based Changes in the Labels of ML Vulnerability Detection Approaches

Jun 13, 2025

Abstract: Vulnerability datasets used for ML testing implicitly contain retrospective information. When a model is tested in the field, one can only use the labels available at the time of training and testing (e.g. seen and assumed negatives). As vulnerabilities are discovered across calendar time, labels change and past performance is not necessarily aligned with future performance. Past works considered only slices of the whole history (e.g. DiverseVul) or individual differences between releases (e.g. Jimenez et al. ESEC/FSE 2019). Such approaches are either too optimistic in training (e.g. the whole history) or too conservative (e.g. consecutive releases). We propose a method to restructure a dataset into a series of datasets in which both training and testing labels change to account for the knowledge available at the time. If the model is actually learning, it should improve its performance over time as more data becomes available and data becomes more stable, an effect that can be checked with the Mann-Kendall test. We validate our methodology for vulnerability detection with 4 time-based datasets (3 projects from BigVul dataset + Vuldeepecker's NVD) and 5 ML models (Code2Vec, CodeBERT, LineVul, ReGVD, and Vuldeepecker). In contrast to the intuitive expectation (more retrospective information, better performance), the trend results show that performance changes inconsistently across the years, showing that most models are not learning.
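The trend check mentioned above can be illustrated with a plain-Python version of the Mann-Kendall test. This is a sketch of the textbook statistic (with tie correction and a normal approximation), not the authors' implementation; the function name and the yearly scores in the usage note are made up for illustration.

```python
import math
from collections import Counter


def mann_kendall(values: list[float]) -> tuple[int, float, float]:
    """Return (S, Z, two-sided p-value) for a monotonic trend in `values`."""
    n = len(values)
    if n < 3:
        raise ValueError("need at least 3 observations")
    # S counts later-minus-earlier pairs: +1 if increasing, -1 if decreasing.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = values[j] - values[i]
            s += (diff > 0) - (diff < 0)
    # Variance of S under the no-trend hypothesis, corrected for tied values.
    tie_sizes = Counter(values).values()
    var_s = (n * (n - 1) * (2 * n + 5)
             - sum(t * (t - 1) * (2 * t + 5) for t in tie_sizes)) / 18
    # Continuity-corrected normal approximation.
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return s, z, p_value
```

Calling `mann_kendall([0.50, 0.55, 0.61, 0.64, 0.70, 0.73, 0.80, 0.82])` on a hypothetical series of yearly F1 scores gives a positive S and a small p-value, which is the pattern one would expect from a model that keeps improving as labels accumulate. A series that moves up and down without direction yields an S near zero and a large p-value.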

Using ML filters to help automated vulnerability repairs: when it helps and when it doesn't

Apr 09, 2025

Abstract: [Context:] The acceptance of candidate patches in automated program repair has typically been based on testing oracles. Testing typically requires a costly process of building the application, while ML models can be used to quickly classify patches, thus allowing more candidate patches to be generated in a positive feedback loop. [Problem:] If the model predictions are unreliable (as in vulnerability detection), they can hardly replace the more reliable oracles based on testing. [New Idea:] We propose to use an ML model as a preliminary filter of candidate patches, placed in front of a traditional filter based on testing. [Preliminary Results:] We identify some theoretical bounds on the precision and recall of the ML algorithm that make such an operation meaningful in practice. With these bounds and the results published in the literature, we calculate how fast some state-of-the-art vulnerability detectors must be to be more effective than a traditional AVR pipeline, such as APR4Vuln, based just on testing.
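The abstract does not state the bounds themselves, so the following is only a toy cost model for the general idea, under stated assumptions: every candidate costs `t_ml` seconds to classify, every candidate the filter accepts costs `t_test` seconds to test, a fraction `base_rate` of candidates is actually correct, and the filter has the given `precision` and `recall`. All names and numbers are hypothetical and are not taken from the paper.

```python
def cost_per_correct_patch_testing_only(t_test: float, base_rate: float) -> float:
    """Expected testing time spent per correct patch when every candidate is tested."""
    return t_test / base_rate


def cost_per_correct_patch_with_filter(t_ml: float, t_test: float, base_rate: float,
                                       precision: float, recall: float) -> float:
    """Expected time per correct patch when an ML filter runs before testing."""
    kept_correct = base_rate * recall      # correct patches that survive the filter
    passed = kept_correct / precision      # all candidates forwarded to testing
    return (t_ml + passed * t_test) / kept_correct


def break_even_ml_cost(t_test: float, base_rate: float,
                       precision: float, recall: float) -> float:
    """Largest per-candidate ML cost for which the filter still pays off in this model."""
    return t_test * recall * (1 - base_rate / precision)
```

In this simplified model the filter can only help when its precision exceeds the base rate of correct candidates; otherwise `break_even_ml_cost` is zero or negative and no classifier speed makes up for it. With hypothetical values `t_test=60`, `base_rate=0.1`, `precision=0.5`, `recall=0.8`, the classifier must take less than about 38 seconds per candidate to beat the testing-only pipeline.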

Large Language Models are Unreliable for Cyber Threat Intelligence

Mar 29, 2025

Abstract: Several recent works have argued that Large Language Models (LLMs) can be used to tame the data deluge in the cybersecurity field by improving the automation of Cyber Threat Intelligence (CTI) tasks. This work presents an evaluation methodology that, besides testing LLMs on CTI tasks with zero-shot learning, few-shot learning and fine-tuning, also quantifies their consistency and their confidence level. We run experiments with three state-of-the-art LLMs and a dataset of 350 threat intelligence reports and present new evidence of potential security risks in relying on LLMs for CTI. We show that LLMs cannot guarantee sufficient performance on real-size reports while also being inconsistent and overconfident. Few-shot learning and fine-tuning only partially improve the results, thus casting doubt on the possibility of using LLMs for CTI scenarios, where labelled datasets are lacking and where confidence is a fundamental factor.

A Convolutional Transformation Network for Malware Classification

Sep 16, 2019

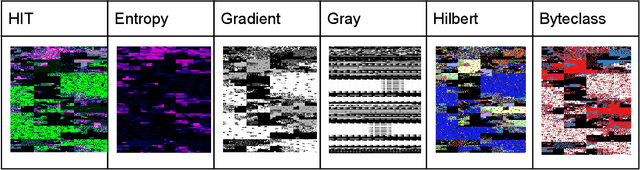

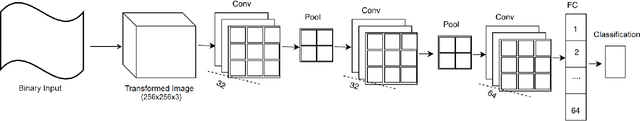

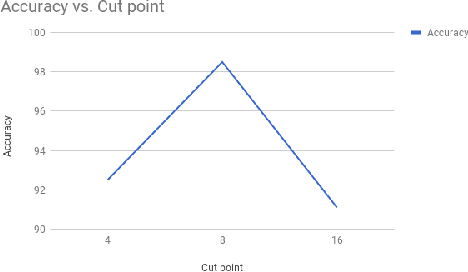

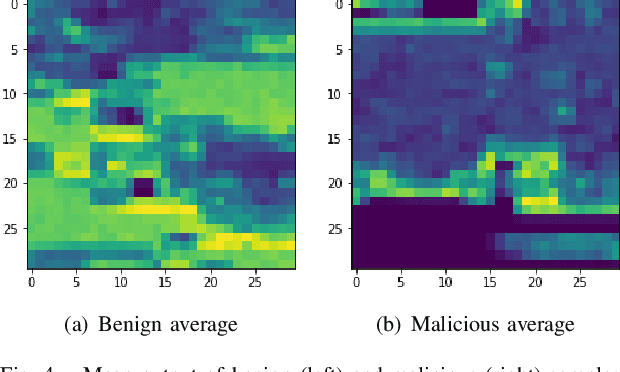

Abstract: Modern malware evolves various detection avoidance techniques to bypass state-of-the-art detection methods. An emerging trend to deal with this issue is the combination of image transformation and machine learning techniques to classify and detect malware. However, existing works in this field only perform simple image transformation methods that limit the accuracy of the detection. In this paper, we introduce a novel approach to classify malware by using a deep network on images transformed from binary samples. In particular, we first develop a novel hybrid image transformation method to convert binaries into color images that convey the binary semantics. A deep convolutional neural network is trained on these images and later classifies the test inputs into benign or malicious categories. In extensive experiments, our proposed method surpasses all baselines and achieves 99.14% accuracy on the testing set.
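The abstract does not describe the hybrid color transformation, so it cannot be reproduced here. For orientation, the kind of simple transformation it contrasts itself with can be sketched as a grayscale byte plot: each byte of the binary becomes one pixel intensity, and the byte stream is wrapped into rows of a fixed width. The function name and the default width are assumptions made for this example.

```python
def bytes_to_grayscale_rows(data: bytes, width: int = 16) -> list[list[int]]:
    """Wrap raw bytes into rows of `width` pixels with intensities 0..255.

    The last row is zero-padded so that every row has the same length.
    This is the simple baseline byte plot, not the paper's hybrid method.
    """
    if width <= 0:
        raise ValueError("width must be positive")
    padded_len = -(-len(data) // width) * width  # round up to a multiple of width
    padded = data + bytes(padded_len - len(data))
    return [list(padded[i:i + width]) for i in range(0, padded_len, width)]
```

For example, `bytes_to_grayscale_rows(b"\x00\x7f\xff\x10\x20", width=4)` yields two rows, the second padded with zeros. The resulting rows can be handed to any image library or array type before being fed to a convolutional network.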

DES: a Challenge Problem for Nonmonotonic Reasoning Systems

Mar 08, 2000

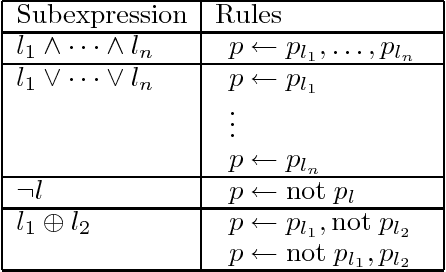

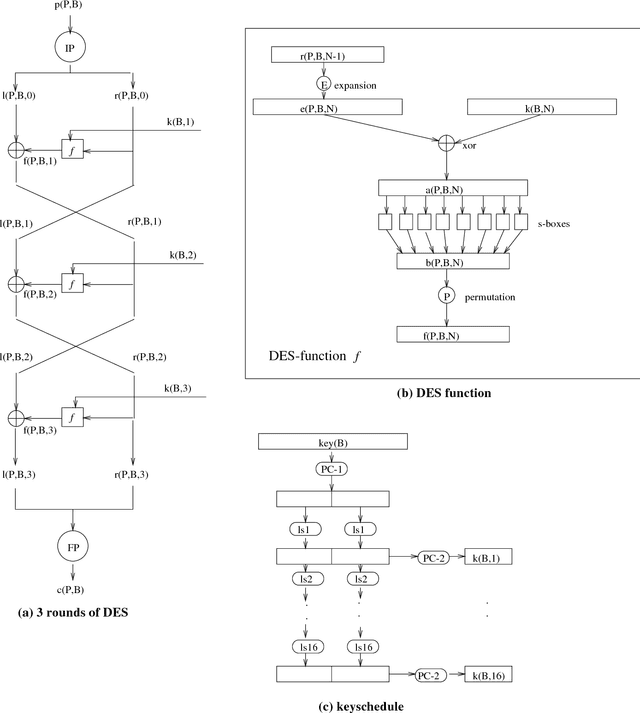

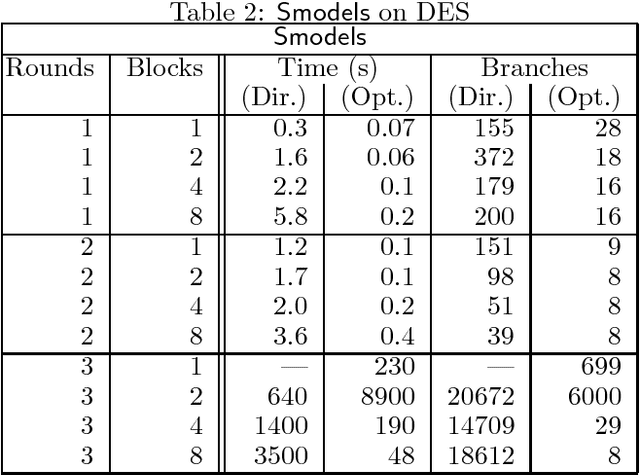

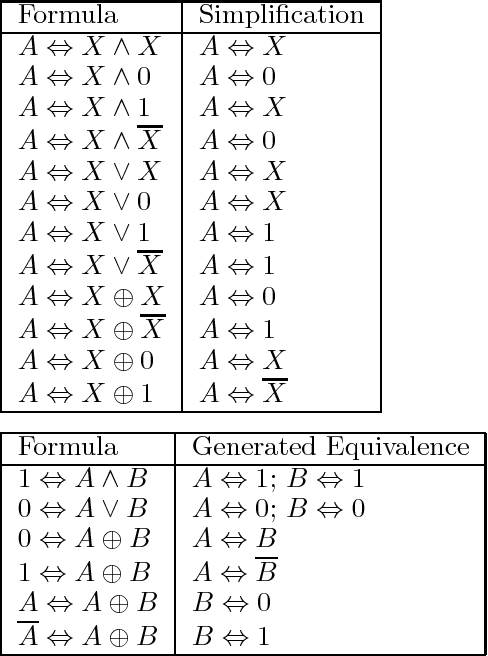

Abstract:The US Data Encryption Standard, DES for short, is put forward as an interesting benchmark problem for nonmonotonic reasoning systems because (i) it provides a set of test cases of industrial relevance which shares features of randomly generated problems and real-world problems, (ii) the representation of DES using normal logic programs with the stable model semantics is simple and easy to understand, and (iii) this subclass of logic programs can be seen as an interesting special case for many other formalizations of nonmonotonic reasoning. In this paper we present two encodings of DES as logic programs: a direct one out of the standard specifications and an optimized one extending the work of Massacci and Marraro. The computational properties of the encodings are studied by using them for DES key search with the Smodels system as the implementation of the stable model semantics. Results indicate that the encodings and Smodels are quite competitive: they outperform state-of-the-art SAT-checkers working with an optimized encoding of DES into SAT and are comparable with a SAT-checker that is customized and tuned for the optimized SAT encoding.
