Evan Kravitz

Adversarially-Trained Deep Nets Transfer Better

Jul 11, 2020

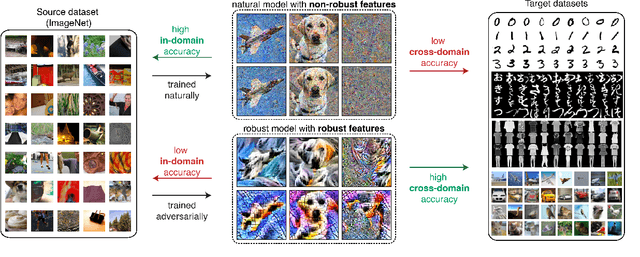

Abstract: Transfer learning has emerged as a powerful methodology for adapting pre-trained deep neural networks to new domains. The process consists of taking a neural network pre-trained on a large, feature-rich source dataset, freezing the early layers that encode essential generic image properties, and then fine-tuning the last few layers to capture information specific to the target task. This approach is particularly useful when only limited or weakly labelled data are available for the new task. In this work, we demonstrate that adversarially-trained models transfer better across new domains than naturally-trained models, even though adversarially-trained models are known to generalize less well than naturally-trained models on the source domain. We show that this behavior results from a bias, introduced by adversarial training, that pushes the learned inner layers toward more natural image representations, which in turn enables better transfer.
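The freeze-then-fine-tune recipe described in the abstract can be illustrated with a toy sketch. This is not the paper's code or experimental setup: the tiny two-unit "early layer", the weights in `frozen_w`, the four-point `target_data`, and all function names are hypothetical, chosen only to show that the frozen layer stays fixed while the final layer (the "head") is trained on the target task.

```python
import math

def features(x, frozen_w):
    """Frozen 'early layer': a fixed tanh projection that is never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in frozen_w]

def predict(x, frozen_w, head_w, head_b):
    """Full model: frozen features followed by a trainable linear head."""
    h = features(x, frozen_w)
    return sum(w * hi for w, hi in zip(head_w, h)) + head_b

def mse(data, frozen_w, head_w, head_b):
    """Mean squared error of the model on a list of (input, target) pairs."""
    return sum((predict(x, frozen_w, head_w, head_b) - y) ** 2
               for x, y in data) / len(data)

def fine_tune_head(data, frozen_w, head_w, head_b, lr=0.1, epochs=200):
    """Gradient descent on the head only; frozen_w is read but never written."""
    head_w = list(head_w)
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(head_w)
        grad_b = 0.0
        for x, y in data:
            h = features(x, frozen_w)
            err = sum(w * hi for w, hi in zip(head_w, h)) + head_b - y
            for j, hj in enumerate(h):
                grad_w[j] += 2 * err * hj / n
            grad_b += 2 * err / n
        head_w = [w - lr * g for w, g in zip(head_w, grad_w)]
        head_b -= lr * grad_b
    return head_w, head_b

# Hypothetical weights standing in for layers pre-trained on a source dataset.
frozen_w = [[0.5, -0.3], [0.2, 0.8]]
# Hypothetical small target dataset: (input, label) pairs.
target_data = [([0.0, 1.0], 1.0), ([1.0, 0.0], 0.0),
               ([1.0, 1.0], 1.0), ([0.0, 0.0], 0.0)]

loss_before = mse(target_data, frozen_w, [0.0, 0.0], 0.0)
head_w, head_b = fine_tune_head(target_data, frozen_w, [0.0, 0.0], 0.0)
loss_after = mse(target_data, frozen_w, head_w, head_b)
```

In a real setting the frozen part would be the early layers of a deep network (naturally or adversarially pre-trained) and the head would be its last few layers; the sketch only mirrors the division of labor between the two.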
