Etienne Corvee

INRIA Sophia Antipolis

Robust Mobile Object Tracking Based on Multiple Feature Similarity and Trajectory Filtering

Jun 14, 2011

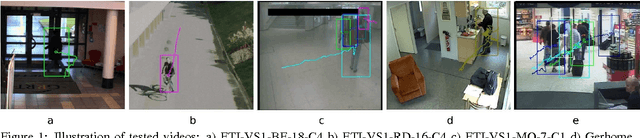

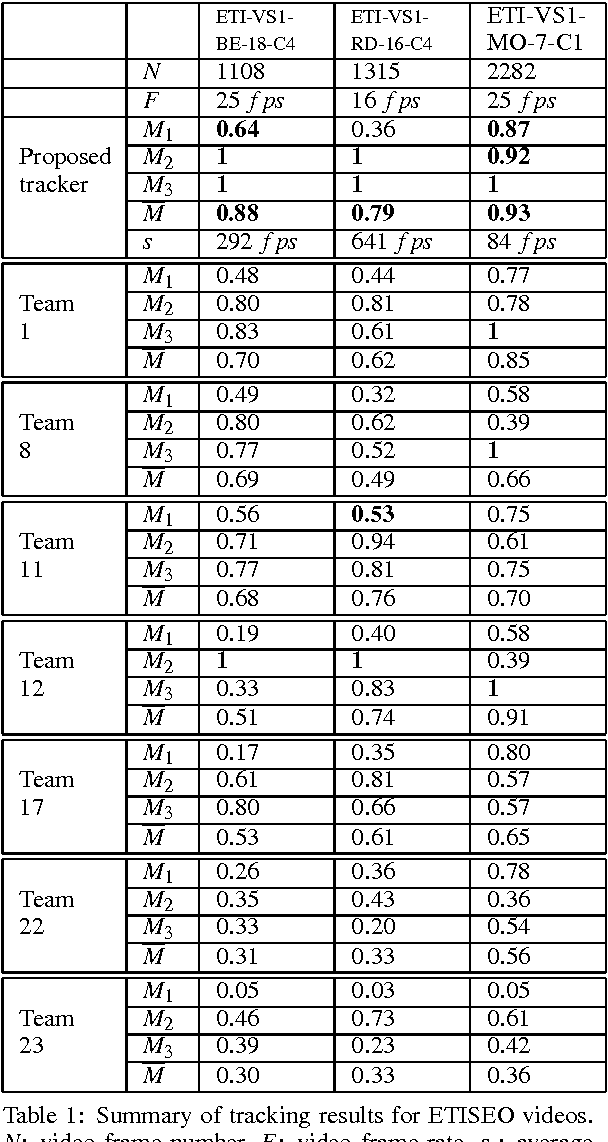

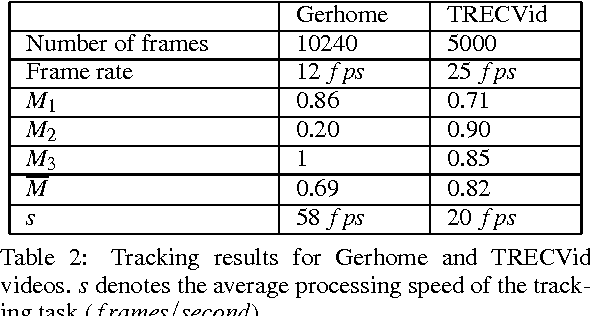

Abstract: This paper presents a new algorithm for tracking mobile objects under different scene conditions. The proposed tracker combines state estimation, multi-feature similarity measures and trajectory filtering. A feature set (distance, area, shape ratio, color histogram) is defined for each tracked object and used to search for its best matching object. The best match and the state estimated by a Kalman filter are then combined to update the position and size of the tracked object. However, mobile object trajectories are usually fragmented because of occlusions and misdetections. We therefore also propose a trajectory filter, called the global tracker, which removes noisy trajectories and fuses fragmented trajectories belonging to the same mobile object. The method has been tested on five videos with different scene conditions. Three of them are provided by the ETISEO benchmarking project (http://www-sop.inria.fr/orion/ETISEO), on which the proposed tracker has been compared with seven other tracking algorithms. The advantages of our approach over existing state-of-the-art ones are: (i) no prior knowledge is required (e.g. no calibration and no contextual models are needed), (ii) the tracker is more reliable because it combines multiple feature similarities, (iii) the tracker can operate in different scene conditions: single/several mobile objects, weak/strong illumination, indoor/outdoor scenes, (iv) a trajectory filter is defined and applied to improve tracker performance, (v) the tracker outperforms many state-of-the-art algorithms.
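As a rough illustration of how the four features named in the abstract (distance, area, shape ratio, color histogram) might be combined into one matching score, here is a minimal sketch. The abstract does not give the actual formulas, so everything here is an assumption made for illustration: the `Box` type, the min/max ratio similarity, the histogram intersection, the equal weights, and the `max_dist` and `min_score` thresholds. The Kalman filter update is not shown.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Box:
    """Hypothetical detection: centre, size and a normalised colour histogram."""
    x: float
    y: float
    w: float
    h: float
    hist: list


def ratio_sim(a, b):
    """Similarity in [0, 1] of two positive quantities (smaller over larger)."""
    return min(a, b) / max(a, b) if max(a, b) > 0 else 0.0


def distance_sim(p, q, max_dist=100.0):
    """1 at zero distance, falling linearly to 0 at max_dist (assumed scale)."""
    d = hypot(p.x - q.x, p.y - q.y)
    return max(0.0, 1.0 - d / max_dist)


def hist_sim(p, q):
    """Histogram intersection; in [0, 1] when both histograms sum to 1."""
    return sum(min(a, b) for a, b in zip(p.hist, q.hist))


def similarity(p, q, weights=(0.25, 0.25, 0.25, 0.25)):
    """Weighted sum of the four feature similarities (equal weights assumed)."""
    scores = (
        distance_sim(p, q),
        ratio_sim(p.w * p.h, q.w * q.h),   # area
        ratio_sim(p.w / p.h, q.w / q.h),   # shape ratio (width / height)
        hist_sim(p, q),
    )
    return sum(w * s for w, s in zip(weights, scores))


def best_match(tracked, detections, min_score=0.5):
    """Return (index, score) of the most similar detection, or None."""
    best = None
    for i, det in enumerate(detections):
        score = similarity(tracked, det)
        if score >= min_score and (best is None or score > best[1]):
            best = (i, score)
    return best


tracked = Box(10, 10, 4, 8, [0.5, 0.5])
detections = [Box(12, 10, 4, 8, [0.5, 0.5]), Box(80, 80, 10, 2, [1.0, 0.0])]
match = best_match(tracked, detections)
```

In this toy setup the nearby detection with the same size and colors is selected, while the distant, differently shaped one falls below the threshold. A real tracker would tune the weights and thresholds per feature.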

Repairing People Trajectories Based on Point Clustering

Jul 02, 2010

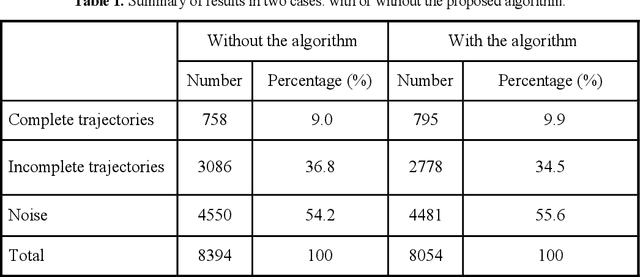

Abstract: This paper presents a method, based on machine learning, for improving any object tracking algorithm. During the training phase, important trajectory features are extracted and then used to calculate a confidence value for each trajectory. The positions at which objects are usually lost and found are clustered to construct the sets of 'lost zones' and 'found zones' in the scene. Using these zones, we construct a set of zone triplets, each consisting of three zones: an In/Out zone (a zone where an object can enter or exit the scene), a 'lost zone' and a 'found zone'. Thanks to these triplets, during the testing phase we can repair erroneous trajectories according to the triplet they most likely belong to. The advantages of our approach over existing state-of-the-art approaches are that (i) the method does not depend on a predefined contextual scene, (ii) we exploit the semantics of the scene and (iii) we propose a method to filter out noisy trajectories based on their confidence value.
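To make the zone construction step more concrete, the sketch below groups the positions where tracks were lost into rectangular 'lost zones'. The abstract does not say which clustering algorithm is used, so the greedy centroid-distance clustering here is a stand-in chosen for brevity, and the names `cluster_points` and `build_zones`, as well as the `radius` and `min_points` parameters, are hypothetical.

```python
from math import hypot


def cluster_points(points, radius=20.0):
    """Greedy clustering: a point joins the first cluster whose centroid
    lies within `radius`; otherwise it starts a new cluster."""
    clusters = []
    for x, y in points:
        for cluster in clusters:
            cx = sum(p[0] for p in cluster) / len(cluster)
            cy = sum(p[1] for p in cluster) / len(cluster)
            if hypot(x - cx, y - cy) <= radius:
                cluster.append((x, y))
                break
        else:
            clusters.append([(x, y)])
    return clusters


def build_zones(points, radius=20.0, min_points=2):
    """Return one bounding box (x_min, y_min, x_max, y_max) per cluster
    that has at least `min_points` members; smaller clusters are
    treated as noise and dropped."""
    zones = []
    for cluster in cluster_points(points, radius):
        if len(cluster) >= min_points:
            xs = [p[0] for p in cluster]
            ys = [p[1] for p in cluster]
            zones.append((min(xs), min(ys), max(xs), max(ys)))
    return zones


# Hypothetical positions at which tracked objects were lost during training.
lost_positions = [(10, 10), (12, 11), (11, 9), (200, 150), (202, 148), (400, 400)]
lost_zones = build_zones(lost_positions)
```

The same function could be applied to the positions where objects are found again to obtain 'found zones'. Pairing those with In/Out zones into triplets is not shown here.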
