Erik Larsson

CRPS-LAM: Regional ensemble weather forecasting from matching marginals

Oct 10, 2025

Abstract: Machine learning for weather prediction increasingly relies on ensemble methods to provide probabilistic forecasts. Diffusion-based models have shown strong performance in Limited-Area Modeling (LAM) but remain computationally expensive at sampling time. Building on the success of global weather forecasting models trained with the Continuous Ranked Probability Score (CRPS), we introduce CRPS-LAM, a probabilistic LAM forecasting model trained with a CRPS-based objective. By sampling and injecting a single latent noise vector into the model, CRPS-LAM generates ensemble members in a single forward pass, achieving sampling speeds up to 39 times faster than a diffusion-based model. We evaluate the model on the MEPS regional dataset, where CRPS-LAM matches the low errors of diffusion models. By also retaining fine-scale forecast details, the method stands out as an effective approach for probabilistic regional weather forecasting.
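For readers unfamiliar with the score named in this abstract, the following is a minimal sketch of the standard ensemble estimate of the CRPS for a single scalar variable, CRPS ≈ mean|x_i − y| − ½ · mean|x_i − x_j|. It is an illustration only: the function name `ensemble_crps` is hypothetical, and the abstract does not say which CRPS variant (for example, a "fair" estimator with a different normalization) or which aggregation over grid points and variables CRPS-LAM actually uses.

```python
from itertools import product


def ensemble_crps(members, obs):
    """Standard ensemble estimate of the CRPS for one scalar observation.

    members: list of ensemble member values for a single variable/location
    obs:     the verifying observation (float)
    Assumption: this is the plain (biased) estimator, not necessarily the
    objective used in the paper.
    """
    m = len(members)
    # Accuracy term: mean absolute error of the members against the observation.
    skill = sum(abs(x - obs) for x in members) / m
    # Spread term: mean absolute difference over all ordered member pairs.
    spread = sum(abs(a - b) for a, b in product(members, repeat=2)) / (m * m)
    return skill - 0.5 * spread
```

With a single member the spread term vanishes and the score reduces to the absolute error, which is why a CRPS objective rewards ensembles that are both accurate and appropriately dispersed.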

Diffusion-LAM: Probabilistic Limited Area Weather Forecasting with Diffusion

Feb 11, 2025

Abstract: Machine learning methods have been shown to be effective for weather forecasting, owing to their speed and accuracy relative to traditional numerical models. While early efforts primarily concentrated on deterministic predictions, the field has increasingly shifted toward probabilistic forecasting to better capture forecast uncertainty. Most machine learning-based models have been designed for global-scale predictions, with only limited work targeting regional or limited area forecasting, which allows more specialized and flexible modeling for specific locations. This work introduces Diffusion-LAM, a probabilistic limited area weather model leveraging conditional diffusion. By conditioning on boundary data from surrounding regions, our approach generates forecasts within a defined area. Experimental results on the MEPS limited area dataset demonstrate the potential of Diffusion-LAM to deliver accurate probabilistic forecasts, highlighting its promise for limited-area weather prediction.

Improving Relational Database Interactions with Large Language Models: Column Descriptions and Their Impact on Text-to-SQL Performance

Aug 08, 2024

Abstract: Relational databases often suffer from uninformative descriptors of table contents, such as ambiguous columns and hard-to-interpret values, impacting both human users and Text-to-SQL models. This paper explores the use of large language models (LLMs) to generate informative column descriptions as a semantic layer for relational databases. Using the BIRD-Bench development set, we created ColSQL, a dataset with gold-standard column descriptions generated and refined by LLMs and human annotators. We evaluated several instruction-tuned models, finding that GPT-4o and Command R+ excelled in generating high-quality descriptions. Additionally, we applied an LLM-as-a-judge to evaluate model performance. Although this method does not align well with human evaluations, we included it to explore its potential and to identify areas for improvement. More work is needed to improve the reliability of automatic evaluations for this task. We also find that detailed column descriptions significantly improve Text-to-SQL execution accuracy, especially when columns are uninformative. This study establishes LLMs as effective tools for generating detailed metadata, enhancing the usability of relational databases.

Enabling Image Recognition on Constrained Devices Using Neural Network Pruning and a CycleGAN

Sep 11, 2020

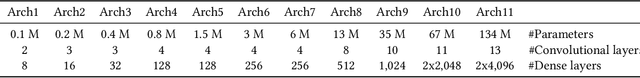

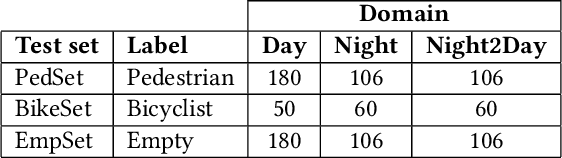

Abstract: Smart cameras are increasingly used in surveillance solutions in public spaces. Contemporary computer vision applications can be used to recognize events that require intervention by emergency services. Smart cameras can be mounted in locations where citizens feel particularly unsafe, e.g., pathways and underpasses with a history of incidents. One promising approach for smart cameras is edge AI, i.e., deploying AI technology on IoT devices. However, implementing resource-demanding technology such as image recognition using deep neural networks (DNN) on constrained devices is a substantial challenge. In this paper, we explore two approaches to reduce the need for compute in contemporary image recognition in an underpass. First, we showcase successful neural network pruning, i.e., we retain comparable classification accuracy with only 1.1% of the neurons remaining from the state-of-the-art DNN architecture. Second, we demonstrate how a CycleGAN can be used to transform out-of-distribution images to the operational design domain. We posit that both pruning and CycleGANs are promising enablers for efficient edge AI in smart cameras.
