Eric Q. Nguyen

A Domain-Agnostic Approach for Characterization of Lifelong Learning Systems

Jan 18, 2023

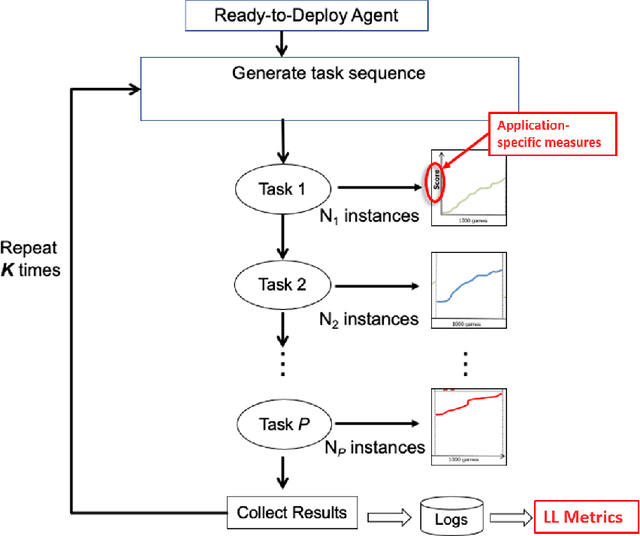

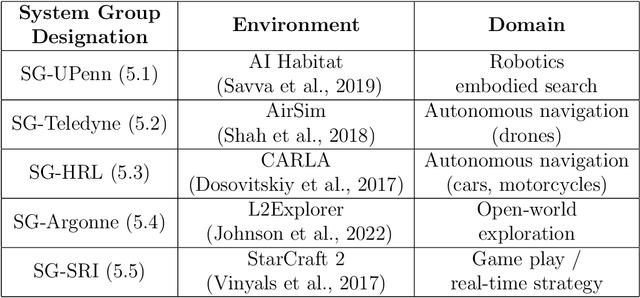

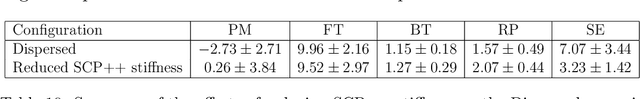

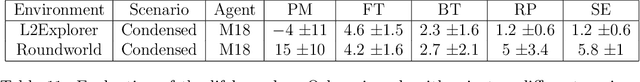

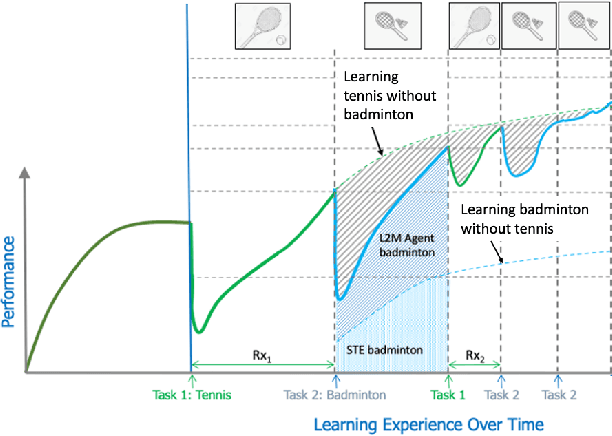

Abstract:Despite the advancement of machine learning techniques in recent years, state-of-the-art systems lack robustness to "real world" events, where the input distributions and tasks encountered by the deployed systems will not be limited to the original training context, and systems will instead need to adapt to novel distributions and tasks while deployed. This critical gap may be addressed through the development of "Lifelong Learning" systems that are capable of 1) Continuous Learning, 2) Transfer and Adaptation, and 3) Scalability. Unfortunately, efforts to improve these capabilities are typically treated as distinct areas of research that are assessed independently, without regard to the impact of each separate capability on other aspects of the system. We instead propose a holistic approach, using a suite of metrics and an evaluation framework to assess Lifelong Learning in a principled way that is agnostic to specific domains or system techniques. Through five case studies, we show that this suite of metrics can inform the development of varied and complex Lifelong Learning systems. We highlight how the proposed suite of metrics quantifies performance trade-offs present during Lifelong Learning system development - both the widely discussed Stability-Plasticity dilemma and the newly proposed relationship between Sample Efficient and Robust Learning. Further, we make recommendations for the formulation and use of metrics to guide the continuing development of Lifelong Learning systems and assess their progress in the future.

L2Explorer: A Lifelong Reinforcement Learning Assessment Environment

Mar 14, 2022

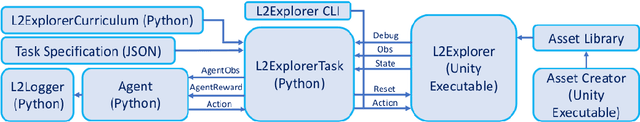

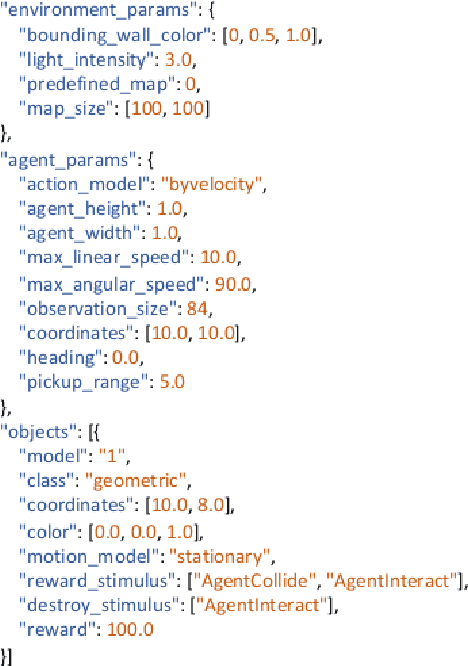

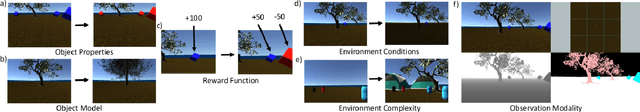

Abstract:Despite groundbreaking progress in reinforcement learning for robotics, gameplay, and other complex domains, major challenges remain in applying reinforcement learning to the evolving, open-world problems often found in critical application spaces. Reinforcement learning solutions tend to generalize poorly when exposed to new tasks outside of the data distribution they are trained on, prompting an interest in continual learning algorithms. In tandem with research on continual learning algorithms, there is a need for challenge environments, carefully designed experiments, and metrics to assess research progress. We address the latter need by introducing a framework for continual reinforcement-learning development and assessment using Lifelong Learning Explorer (L2Explorer), a new, Unity-based, first-person 3D exploration environment that can be continuously reconfigured to generate a range of tasks and task variants structured into complex and evolving evaluation curricula. In contrast to procedurally generated worlds with randomized components, we have developed a systematic approach to defining curricula in response to controlled changes with accompanying metrics to assess transfer, performance recovery, and data efficiency. Taken together, the L2Explorer environment and evaluation approach provides a framework for developing future evaluation methodologies in open-world settings and rigorously evaluating approaches to lifelong learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge