Eric Ng

EdgeConnect: Generative Image Inpainting with Adversarial Edge Learning

Jan 11, 2019

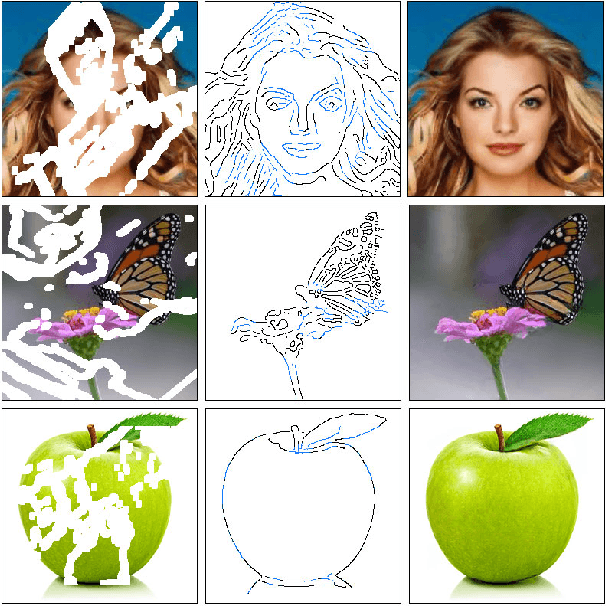

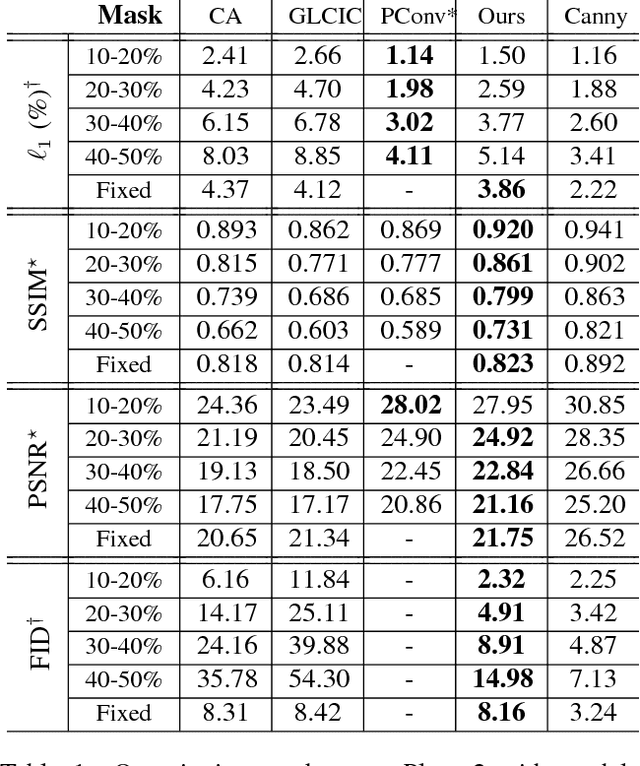

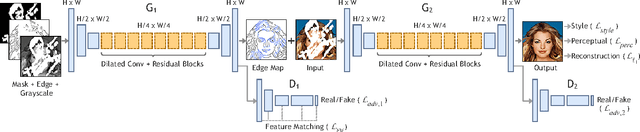

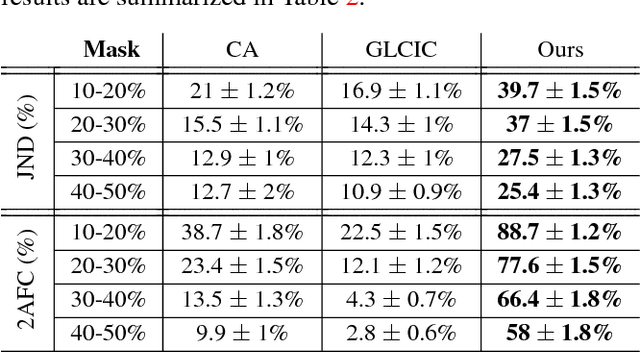

Abstract: Over the last few years, deep learning techniques have yielded significant improvements in image inpainting. However, many of these techniques fail to reconstruct reasonable structures, and their results are commonly over-smoothed and/or blurry. This paper develops a new approach for image inpainting that does a better job of reproducing filled regions exhibiting fine details. We propose a two-stage adversarial model, EdgeConnect, that comprises an edge generator followed by an image completion network. The edge generator hallucinates edges of the missing region (both regular and irregular) of the image, and the image completion network fills in the missing regions using the hallucinated edges as a prior. We evaluate our model end-to-end over the publicly available datasets CelebA, Places2, and Paris StreetView, and show that it outperforms current state-of-the-art techniques quantitatively and qualitatively. Code and models are available at: https://github.com/knazeri/edge-connect

Image Colorization with Generative Adversarial Networks

May 16, 2018

Abstract: Over the last decade, the process of automatic image colorization has been of significant interest for several application areas, including restoration of aged or degraded images. This problem is highly ill-posed due to the large degrees of freedom during the assignment of color information. Many of the recent developments in automatic colorization involve images that contain a common theme or require highly processed data such as semantic maps as input. In our approach, we attempt to fully generalize the colorization procedure using a conditional Deep Convolutional Generative Adversarial Network (DCGAN), extend current methods to high-resolution images, and suggest training strategies that speed up the process and greatly stabilize it. The network is trained over publicly available datasets such as CIFAR-10 and Places365. The results of the generative model and traditional deep neural networks are compared.

* Lecture Notes in Computer Science, Proceedings of the Tenth International Conference on Articulated Motion and Deformable Objects (AMDO), Palma, Mallorca, Spain, 12-13 July 2018
