Eric Grinstein

Binaural Localization Model for Speech in Noise

Jul 26, 2025

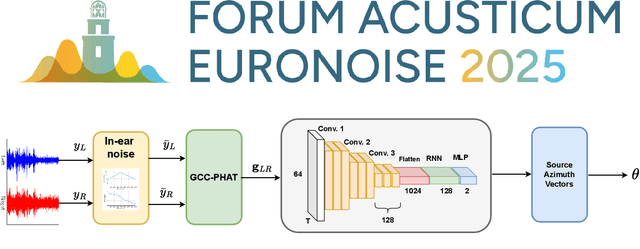

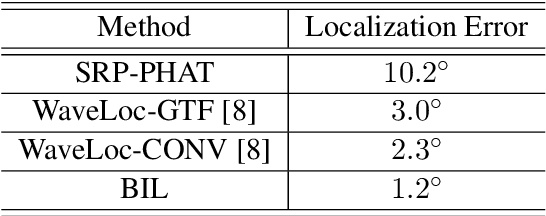

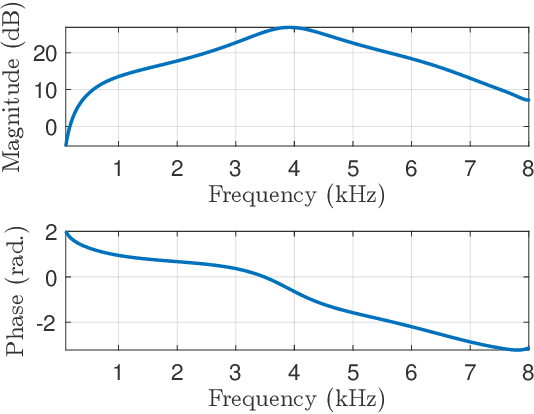

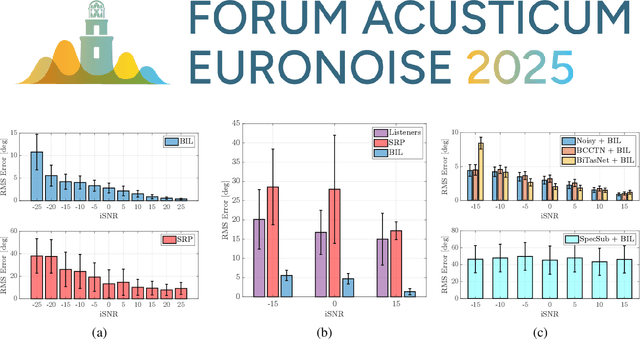

Abstract: Binaural acoustic source localization is important to human listeners for spatial awareness, communication and safety. In this paper, an end-to-end binaural localization model for speech in noise is presented. A lightweight convolutional recurrent network that localizes sound in the frontal azimuthal plane for noisy reverberant binaural signals is introduced. The model incorporates additive internal ear noise to represent the frequency-dependent hearing threshold of a typical listener. The localization performance of the model is compared with the steered response power algorithm, and the use of the model as a measure of interaural cue preservation for binaural speech enhancement methods is studied. A listening test was performed to compare the performance of the model with human localization of speech in noisy conditions.

Steered Response Power for Sound Source Localization: A Tutorial Review

May 05, 2024

Abstract: In the last three decades, the Steered Response Power (SRP) method has been widely used for the task of Sound Source Localization (SSL), due to its satisfactory localization performance on moderately reverberant and noisy scenarios. Many works have analyzed and extended the original SRP method to reduce its computational cost, to allow it to locate multiple sources, or to improve its performance in adverse environments. In this work, we review over 200 papers on the SRP method and its variants, with emphasis on the SRP-PHAT method. We also present eXtensible-SRP, or X-SRP, a generalized and modularized version of the SRP algorithm which allows the reviewed extensions to be implemented. We provide a Python implementation of the algorithm which includes selected extensions from the literature.
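For readers unfamiliar with SRP-PHAT, the following is a minimal sketch of the basic idea: for each candidate source position, sum the PHAT-weighted cross-correlations of all microphone pairs at the delays that position would produce, then pick the candidate with the largest sum. It is not the X-SRP implementation mentioned in the abstract. The sampling rate, microphone layout, candidate grid, integer-sample delays and all function names are assumptions made for illustration.

```python
import cmath
import math
import random

FS = 16000.0  # sampling rate in Hz (assumed for this toy example)
C = 343.0     # speed of sound in m/s (assumed)

def dft(x):
    """Naive O(n^2) discrete Fourier transform; adequate for short frames."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def mic_delays(pos, mics):
    """Integer propagation delays (in samples) at each mic, relative to mic 0."""
    dists = [math.dist(pos, m) for m in mics]
    return [round((d - dists[0]) * FS / C) for d in dists]

def gcc_phat(X1, X2, lag):
    """PHAT-weighted cross-correlation of two spectra at an integer lag."""
    n = len(X1)
    total = 0.0
    for k in range(n):
        cross = X2[k] * X1[k].conjugate()
        mag = abs(cross)
        if mag > 1e-12:  # PHAT weighting: keep only the phase of each bin
            total += (cross / mag * cmath.exp(2j * math.pi * k * lag / n)).real
    return total / n

def srp_phat(spectra, mics, candidates):
    """Return the candidate position with the largest steered response power."""
    def power(pos):
        d = mic_delays(pos, mics)
        return sum(gcc_phat(spectra[i], spectra[j], d[j] - d[i])
                   for i in range(len(mics)) for j in range(i + 1, len(mics)))
    return max(candidates, key=power)

# Toy anechoic scene: three microphones, four candidate positions (metres).
mics = [(0.0, 0.0), (0.3, 0.0), (0.0, 0.3)]
candidates = [(1.0, 1.0), (2.0, 0.5), (0.5, 2.0), (-1.0, 1.0)]
true_pos = (2.0, 0.5)

# Simulate each mic signal as a circularly delayed copy of a noise source.
rng = random.Random(0)
n = 64
source = [rng.gauss(0.0, 1.0) for _ in range(n)]
signals = [[source[(t - d) % n] for t in range(n)]
           for d in mic_delays(true_pos, mics)]

spectra = [dft(x) for x in signals]
best = srp_phat(spectra, mics, candidates)
```

In this idealized setting `best` should coincide with `true_pos`. Real recordings add reverberation, noise and fractional delays, which is where the extensions surveyed in the review come in.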

The Neural-SRP method for positional sound source localization

Mar 14, 2024

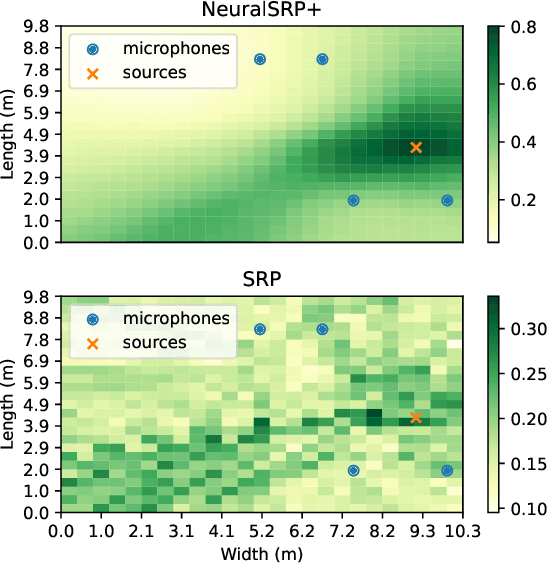

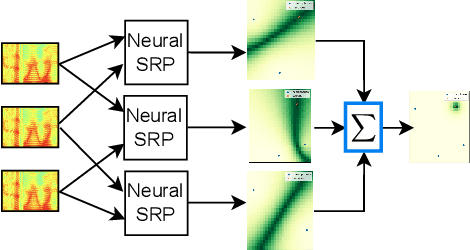

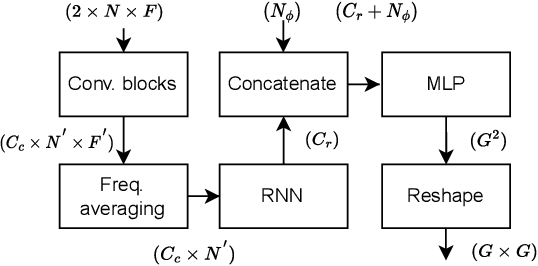

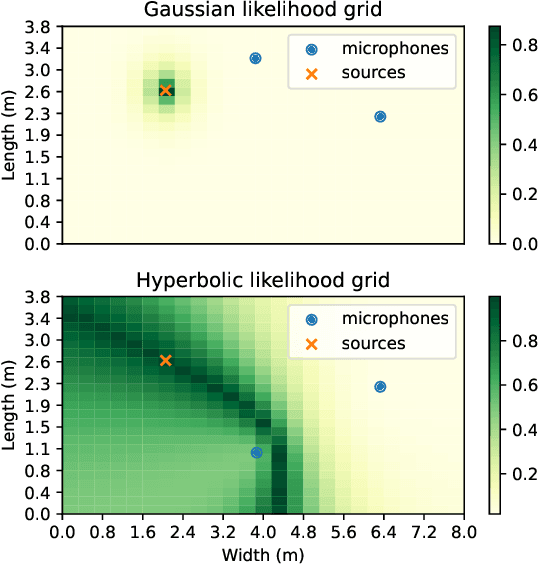

Abstract: Steered Response Power (SRP) is a widely used method for the task of sound source localization using microphone arrays, showing satisfactory localization performance on many practical scenarios. However, its performance is diminished under highly reverberant environments. Although Deep Neural Networks (DNNs) have been previously proposed to overcome this limitation, most are trained for a specific number of microphones with fixed spatial coordinates. This restricts their practical application on scenarios frequently observed in wireless acoustic sensor networks, where each application has an ad-hoc microphone topology. We propose Neural-SRP, a DNN which combines the flexibility of SRP with the performance gains of DNNs. We train our network using simulated data and transfer learning, and evaluate our approach on recorded and simulated data. Results verify that Neural-SRP's localization performance significantly outperforms the baselines.

Binaural Speech Enhancement Using Deep Complex Convolutional Transformer Networks

Mar 08, 2024

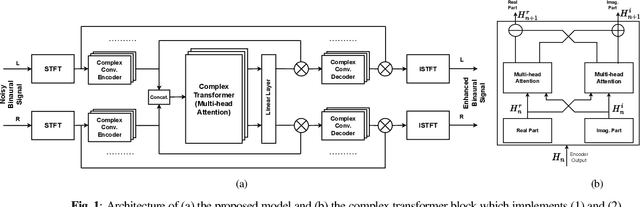

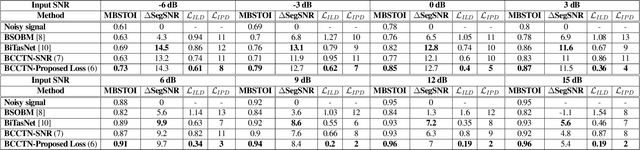

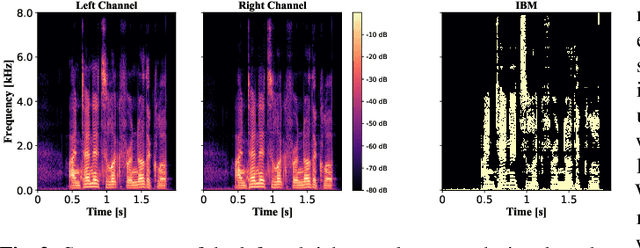

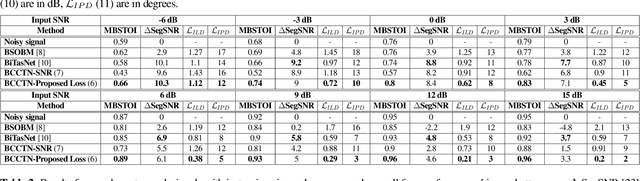

Abstract: Studies have shown that in noisy acoustic environments, providing binaural signals to the user of an assistive listening device may improve speech intelligibility and spatial awareness. This paper presents a binaural speech enhancement method using a complex convolutional neural network with an encoder-decoder architecture and a complex multi-head attention transformer. The model is trained to estimate individual complex ratio masks in the time-frequency domain for the left and right-ear channels of binaural hearing devices. The model is trained using a novel loss function that incorporates the preservation of spatial information along with speech intelligibility improvement and noise reduction. Simulation results for acoustic scenarios with a single target speaker and isotropic noise of various types show that the proposed method improves the estimated binaural speech intelligibility and preserves the binaural cues better in comparison with several baseline algorithms.
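To make the terms concrete, here is a small sketch of how a complex ratio mask acts on a single time-frequency bin per ear, and how two common interaural cues (level and phase difference) can be read off the result. The masks below are ideal ones computed from a known clean signal; in the paper they are estimated by the network, and the paper's loss function is not reproduced here. All names and numeric values are illustrative assumptions.

```python
import cmath
import math

def apply_mask(mask, noisy):
    """Apply a complex ratio mask: one complex multiplication per bin."""
    return mask * noisy

def ild_db(left, right):
    """Interaural level difference of one bin, in dB."""
    return 20.0 * math.log10(abs(left) / abs(right))

def ipd_rad(left, right):
    """Interaural phase difference of one bin, in radians."""
    return cmath.phase(left * right.conjugate())

# One time-frequency bin per ear (made-up values).
clean_left, clean_right = 1.0 + 0.5j, 0.4 - 0.2j
noise_left, noise_right = 0.3 - 0.1j, -0.2 + 0.25j
noisy_left = clean_left + noise_left
noisy_right = clean_right + noise_right

# Ideal complex ratio masks, available only because the clean bins are known.
mask_left = clean_left / noisy_left
mask_right = clean_right / noisy_right

enhanced_left = apply_mask(mask_left, noisy_left)
enhanced_right = apply_mask(mask_right, noisy_right)

# With ideal masks the enhanced bins carry the same cues as the clean ones.
ild_error = abs(ild_db(enhanced_left, enhanced_right) - ild_db(clean_left, clean_right))
ipd_error = abs(ipd_rad(enhanced_left, enhanced_right) - ipd_rad(clean_left, clean_right))
```

Because each ear has its own mask, nothing forces the two outputs to keep their relative level and phase. A loss term on quantities like `ild_error` and `ipd_error` is one plausible way to encourage that, though whether the paper uses these exact cues is an assumption.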

Dual input neural networks for positional sound source localization

Aug 08, 2023

Abstract: In many signal processing applications, metadata may be advantageously used in conjunction with a high-dimensional signal to produce a desired output. In the case of classical Sound Source Localization (SSL) algorithms, information from high-dimensional, multichannel audio signals received by many distributed microphones is combined with information describing acoustic properties of the scene, such as the microphones' coordinates in space, to estimate the position of a sound source. We introduce Dual Input Neural Networks (DI-NNs) as a simple and effective way to model these two data types in a neural network. We train and evaluate our proposed DI-NN on scenarios of varying difficulty and realism and compare it against an alternative architecture, a classical Least-Squares (LS) method, and a classical Convolutional Recurrent Neural Network (CRNN). Our results show that the DI-NN significantly outperforms the baselines, achieving a five times lower localization error than the LS method and two times lower than the CRNN in a test dataset of real recordings.

Graph neural networks for sound source localization on distributed microphone networks

Jun 28, 2023

Abstract: Distributed Microphone Arrays (DMAs) present many challenges compared with centralized microphone arrays. An important requirement of applications on these arrays is handling a variable number of input channels. We consider the use of Graph Neural Networks (GNNs) as a solution to this challenge. We present a localization method using the Relation Network GNN, which we show shares many similarities with classical signal processing algorithms for Sound Source Localization (SSL). We apply our method to the task of SSL and validate it experimentally using an unseen number of microphones. We test different feature extractors and show that our approach significantly outperforms classical baselines.
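The property that lets a Relation Network accept any number of microphones can be sketched in a few lines: a pairwise function is applied to every pair of inputs, the results are pooled, and a second function maps the pooled vector to the output. The functions `g` and `f` below are toy stand-ins for learned networks, and mean pooling is an assumption chosen for this example rather than a detail taken from the paper.

```python
import math
from itertools import permutations

def g(a, b):
    """Hypothetical pairwise function; a trained network would replace it."""
    return [x * y for x, y in zip(a, b)]

def f(v):
    """Hypothetical output function applied to the pooled pair features."""
    return [math.tanh(x) for x in v]

def relation_network(nodes):
    """Pool g over all ordered pairs of nodes, then apply f."""
    pairs = list(permutations(nodes, 2))
    pooled = [sum(vals) / len(pairs)
              for vals in zip(*(g(a, b) for a, b in pairs))]
    return f(pooled)

# Each "microphone" is represented by a made-up two-dimensional feature vector.
three_mics = [[0.1, 0.9], [0.4, -0.2], [-0.3, 0.5]]
five_mics = three_mics + [[0.7, 0.1], [-0.6, -0.8]]

out_three = relation_network(three_mics)
out_five = relation_network(five_mics)
out_reversed = relation_network(list(reversed(three_mics)))
```

The output size is the same for three or five inputs, and reordering the inputs leaves the result unchanged up to floating-point rounding, since the pooling runs over all pairs.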
