Erdi Sarıtaş

A Survey on Class-Agnostic Counting: Advancements from Reference-Based to Open-World Text-Guided Approaches

Jan 31, 2025

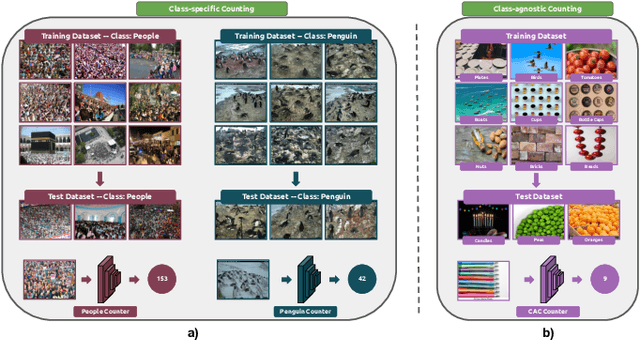

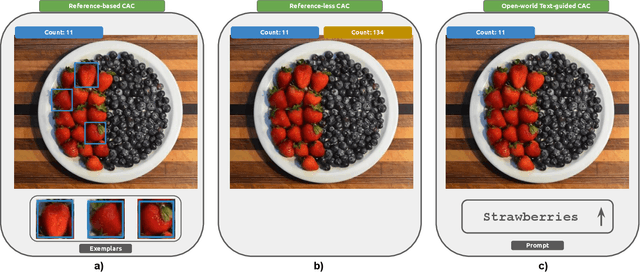

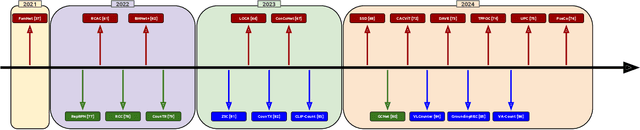

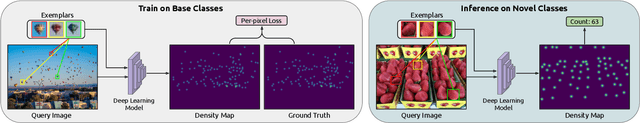

Abstract: Object counting has recently shifted towards class-agnostic counting (CAC), which addresses the challenge of counting objects across arbitrary categories, a critical need in versatile counting systems. While humans effortlessly identify and count objects from diverse categories without prior knowledge, most counting methods remain restricted to enumerating instances of known classes, require extensive labeled datasets for training, and struggle under open-vocabulary settings. In contrast, CAC aims to count objects belonging to classes never seen during training, typically operating in a few-shot setting. In this paper, for the first time, we review advancements in CAC methodologies, categorizing them into three paradigms based on how target object classes can be specified: reference-based, reference-less, and open-world text-guided. Reference-based approaches have set performance benchmarks using exemplar-guided mechanisms. Reference-less methods eliminate exemplar dependency by leveraging inherent image patterns. Finally, open-world text-guided methods utilize vision-language models, enabling object class descriptions through textual prompts, and represent a flexible and appealing solution. We analyze state-of-the-art techniques and report their results on existing gold-standard benchmarks, comparing their performance and discussing their strengths and limitations. Persistent challenges, such as annotation dependency, scalability, and generalization, are discussed alongside future directions. We believe this survey serves as a valuable resource for researchers to understand the progressive developments in CAC and its current state of the art, and offers insights into future directions and open challenges.

Impact of Face Alignment on Face Image Quality

Dec 16, 2024

Abstract: Face alignment is a crucial step in preparing face images for feature extraction in facial analysis tasks. For applications such as face recognition, facial expression recognition, and facial attribute classification, alignment is widely utilized during both training and inference to standardize the positions of key landmarks in the face. It is well known that the application and method of face alignment significantly affect the performance of facial analysis models. However, the impact of alignment on face image quality has not been thoroughly investigated. Current FIQA studies often assume alignment as a prerequisite but do not explicitly evaluate how alignment affects quality metrics, especially with the advent of modern deep learning-based detectors that integrate detection and landmark localization. To address this need, our study examines the impact of face alignment on face image quality scores. We conducted experiments on the LFW, IJB-B, and SCFace datasets, employing MTCNN and RetinaFace models for face detection and alignment. To evaluate face image quality, we utilized several assessment methods, including SER-FIQ, FaceQAN, DifFIQA, and SDD-FIQA. Our analysis included examining quality score distributions for the LFW and IJB-B datasets and analyzing average quality scores at varying distances in the SCFace dataset. Our findings reveal that face image quality assessment methods are sensitive to alignment. Moreover, this sensitivity increases under challenging real-life conditions, highlighting the importance of evaluating alignment's role in quality assessment.

Analyzing the Effect of Combined Degradations on Face Recognition

Jun 04, 2024

Abstract: A face recognition model is typically trained on large datasets of images that may be collected from controlled environments. This results in performance discrepancies when the model is applied to real-world scenarios, due to the domain gap between clean and in-the-wild images. Therefore, some researchers have investigated the robustness of these models by analyzing synthetic degradations. Yet existing studies have mostly focused on single degradation factors, which may not fully capture the complexity of real-world degradations. This work addresses this problem by analyzing the impact of both single and combined degradations using a real-world degradation pipeline extended with under/over-exposure conditions. We use the LFW dataset for our experiments and assess the model's performance based on verification accuracy. Results reveal that the model behaves differently under single and combined degradations. A degradation can significantly lower performance when combined with others even if its effect in isolation is negligible. This work emphasizes the importance of accounting for real-world complexity when assessing the robustness of face recognition models. The code is publicly available at https://github.com/ThEnded32/AnalyzingCombinedDegradations.
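
As a rough illustration of the verification accuracy mentioned in this abstract, the sketch below scores a list of face pairs by thresholding a similarity value. The function names, the similarity scale, and the threshold search are assumptions made for this example and are not taken from the paper or its repository.

```python
def verification_accuracy(similarities, same_identity, threshold):
    """Fraction of pairs whose thresholded decision matches the ground truth.

    similarities  -- one similarity score per image pair (higher = more alike)
    same_identity -- one boolean per pair, True if both images show one person
    threshold     -- hypothetical decision boundary: score >= threshold => "same"
    """
    if len(similarities) != len(same_identity):
        raise ValueError("similarities and same_identity must have equal length")
    if not similarities:
        raise ValueError("at least one pair is required")
    correct = sum(
        (score >= threshold) == bool(label)
        for score, label in zip(similarities, same_identity)
    )
    return correct / len(similarities)


def best_threshold(similarities, same_identity):
    """Return the observed score that maximizes accuracy on the given pairs."""
    candidates = sorted(set(similarities))
    return max(
        candidates,
        key=lambda t: verification_accuracy(similarities, same_identity, t),
    )
```

In practice the threshold is usually chosen on held-out pairs rather than on the pairs being scored, so that the reported accuracy is not optimistically biased.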

Analyzing the Feature Extractor Networks for Face Image Synthesis

Jun 04, 2024

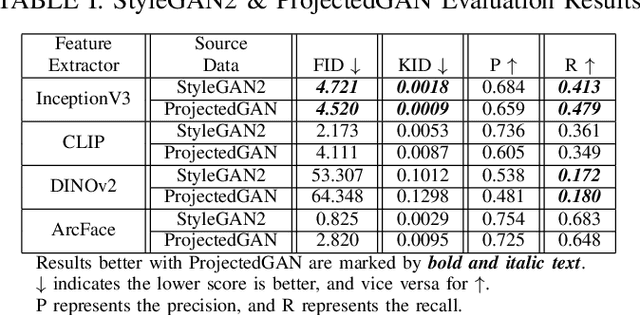

Abstract: Advancements such as Generative Adversarial Networks have drawn researchers' attention toward face image synthesis, with the aim of generating ever more realistic images. Consequently, the need for evaluation criteria that assess the realism of generated images has become apparent. While FID computed with InceptionV3 features is one of the primary choices for benchmarking, concerns about InceptionV3's limitations for face images have emerged. This study investigates the behavior of diverse feature extractors (InceptionV3, CLIP, DINOv2, and ArcFace) across a variety of metrics (FID, KID, and Precision & Recall). The FFHQ dataset is used as the target domain, while the CelebA-HQ dataset and synthetic datasets generated using StyleGAN2 and Projected FastGAN serve as the source domains. Experiments include an in-depth analysis of the features: $L_2$ normalization, model attention during extraction, and domain distributions in the feature space. We aim to give valuable insights into the behavior of feature extractors for evaluating face image synthesis methodologies. The code is publicly available at https://github.com/ThEnded32/AnalyzingFeatureExtractors.
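
For reference, the FID named in this abstract is conventionally defined as the Fréchet distance between two Gaussians fitted to the extracted features. The notation below is introduced here for illustration and is not taken from the paper: $\mu_t, \Sigma_t$ denote the mean and covariance of the target-domain features, and $\mu_s, \Sigma_s$ those of the source-domain features.

$$
\mathrm{FID} = \lVert \mu_t - \mu_s \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_t + \Sigma_s - 2\left( \Sigma_t \Sigma_s \right)^{1/2} \right)
$$

Because the means and covariances are computed in the feature space of the chosen extractor, the same pair of image sets can receive different scores under different extractors.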
