Eoin M Kenny

Twin Systems for DeepCBR: A Menagerie of Deep Learning and Case-Based Reasoning Pairings for Explanation and Data Augmentation

Apr 29, 2021

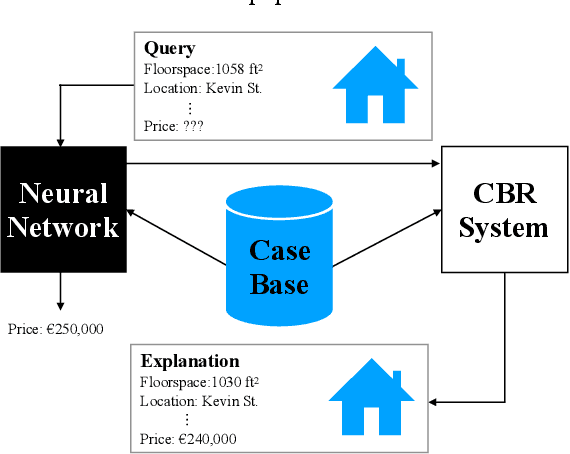

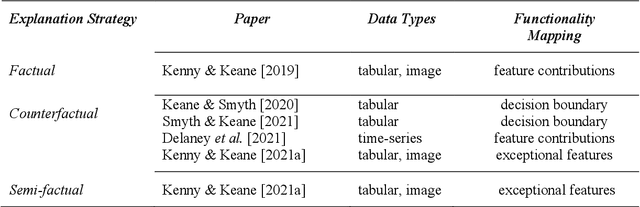

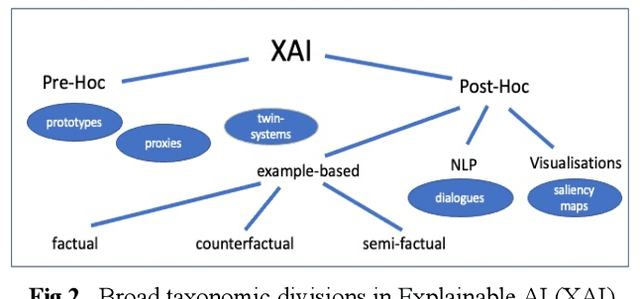

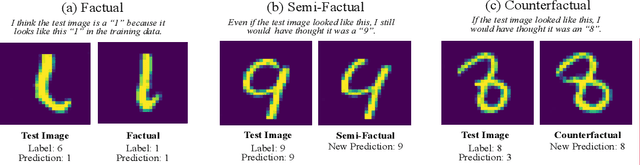

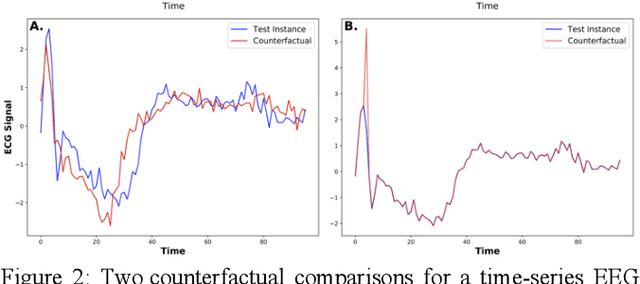

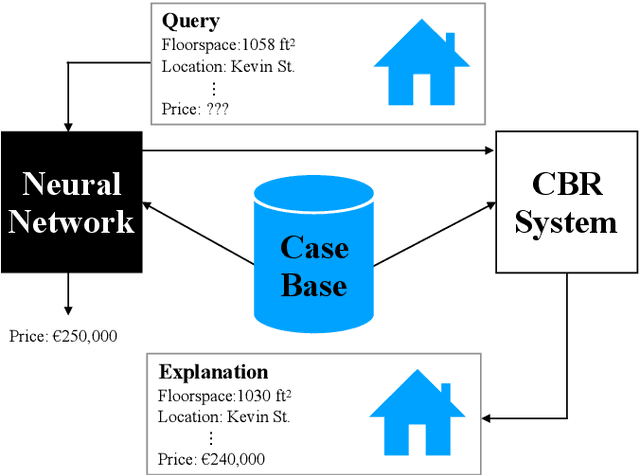

Abstract: Recently, it has been proposed that fruitful synergies may exist between Deep Learning (DL) and Case Based Reasoning (CBR); that there are insights to be gained by applying CBR ideas to problems in DL (what could be called DeepCBR). In this paper, we report on a program of research that applies CBR solutions to the problem of Explainable AI (XAI) in DL. We describe a series of twin-systems pairings of opaque DL models with transparent CBR models that allow the latter to explain the former using factual, counterfactual and semi-factual explanation strategies. This twinning shows that functional abstractions of DL (e.g., feature weights, feature importance and decision boundaries) can be used to drive these explanatory solutions. We also raise the prospect that this research applies to the problem of Data Augmentation in DL, underscoring the fecundity of these DeepCBR ideas.

If Only We Had Better Counterfactual Explanations: Five Key Deficits to Rectify in the Evaluation of Counterfactual XAI Techniques

Feb 26, 2021

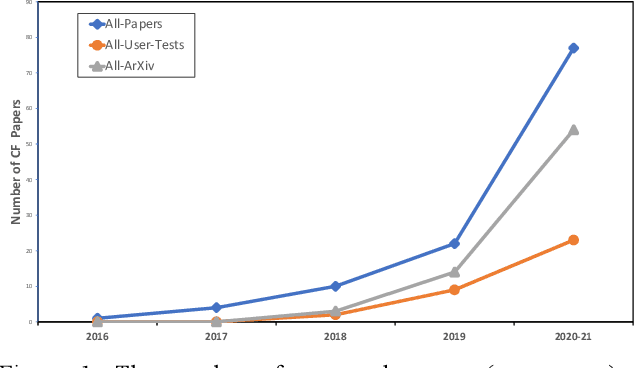

Abstract: In recent years, there has been an explosion of AI research on counterfactual explanations as a solution to the problem of eXplainable AI (XAI). These explanations seem to offer technical, psychological and legal benefits over other explanation techniques. We survey 100 distinct counterfactual explanation methods reported in the literature. This survey addresses the extent to which these methods have been adequately evaluated, both psychologically and computationally, and quantifies the shortfalls occurring. For instance, only 21% of these methods have been user tested. Five key deficits in the evaluation of these methods are detailed and a roadmap, with standardised benchmark evaluations, is proposed to resolve the issues arising; issues that currently block scientific progress in this field.

How Case Based Reasoning Explained Neural Networks: An XAI Survey of Post-Hoc Explanation-by-Example in ANN-CBR Twins

May 17, 2019

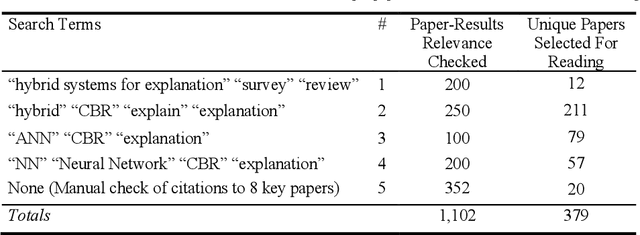

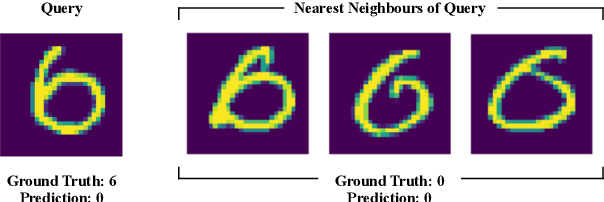

Abstract: This paper surveys an approach to the XAI problem, using post-hoc explanation by example, that hinges on twinning Artificial Neural Networks (ANNs) with Case-Based Reasoning (CBR) systems, so-called ANN-CBR twins. A systematic survey of 1100+ papers was carried out to identify the fragmented literature on this topic and to trace its influence through to more recent work involving Deep Neural Networks (DNNs). The paper argues that this twin-system approach, especially using ANN-CBR twins, presents one possible coherent, generic solution to the XAI problem (and, indeed, XCBR problem). The paper concludes by road-mapping some future directions for this XAI solution involving (i) further tests of feature-weighting techniques, (ii) explorations of how explanatory cases might best be deployed (e.g., in counterfactuals, near-miss cases, a fortiori cases), and (iii) the raising of the unwelcome, and much ignored, issue of human user evaluation.
