Emmanuel Martinez

LD-GAN: Low-Dimensional Generative Adversarial Network for Spectral Image Generation with Variance Regularization

Apr 29, 2023

Abstract: Deep learning methods are state-of-the-art for spectral image (SI) computational tasks. However, their performance is constrained because available datasets are limited by the high cost and long duration of acquisition. Data augmentation techniques are usually employed to mitigate the lack of data. Surpassing classical augmentation methods, such as geometric transformations, GANs enable diverse augmentation by learning and sampling from the data distribution. Nevertheless, GAN-based SI generation is challenging, since the high dimensionality of this kind of data hinders the convergence of GAN training and leads to suboptimal generation. To surmount this limitation, we propose the low-dimensional GAN (LD-GAN), where we train the GAN on a low-dimensional representation of the dataset obtained from the latent space of a pretrained autoencoder network. We thus generate new low-dimensional samples, which are then mapped to the SI dimension with the pretrained decoder network. In addition, we propose a statistical regularization that controls the variance of the low-dimensional representation during autoencoder training and achieves high diversity in the samples generated with the GAN. We validate LD-GAN as a data augmentation (DA) strategy for compressive spectral imaging, SI super-resolution, and RGB-to-spectral tasks, with improvements ranging from 0.5 to 1 dB across these tasks. We compare against training without data augmentation, traditional DA, and the same GAN adjusted and trained to generate the full-sized SIs. The code for this paper can be found at https://github.com/romanjacome99/LD_GAN.git
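The data flow described in this abstract (encode the dataset, generate in the latent space, decode back to the SI dimension) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation, which lives in the linked repository. The array sizes, the random linear maps standing in for the pretrained autoencoder and the generator, and the function names are all hypothetical, and the form of `variance_penalty` is only an assumed example of a variance-controlling term, since the abstract does not give the regularizer's expression.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: a 31-band 8x8 spectral patch, a 16-dim latent code.
BANDS, H, W = 31, 8, 8
SI_DIM = BANDS * H * W
LATENT_DIM = 16
NOISE_DIM = 4

# Stand-ins (assumption): random linear maps replace the pretrained
# encoder/decoder and the trained generator, only to show the data flow.
W_enc = rng.standard_normal((SI_DIM, LATENT_DIM)) / np.sqrt(SI_DIM)
W_dec = rng.standard_normal((LATENT_DIM, SI_DIM)) / np.sqrt(LATENT_DIM)
W_gen = rng.standard_normal((NOISE_DIM, LATENT_DIM))


def encode(si_batch):
    """Map spectral images (N, BANDS, H, W) to latent codes (N, LATENT_DIM)."""
    return si_batch.reshape(si_batch.shape[0], -1) @ W_enc


def decode(z_batch):
    """Map latent codes (N, LATENT_DIM) back to the SI dimension."""
    return (z_batch @ W_dec).reshape(-1, BANDS, H, W)


def generate_latent(n_samples):
    """Toy generator: noise in, low-dimensional sample out."""
    noise = rng.standard_normal((n_samples, NOISE_DIM))
    return np.tanh(noise @ W_gen)


def variance_penalty(z_batch, target=1.0):
    """Assumed regularizer: push each latent feature's variance to `target`."""
    return float(np.mean((z_batch.var(axis=0) - target) ** 2))


real_si = rng.random((5, BANDS, H, W))   # placeholder "dataset"
z_real = encode(real_si)                 # what the GAN would be trained on
z_fake = generate_latent(3)              # new low-dimensional samples
fake_si = decode(z_fake)                 # mapped to the SI dimension
```

In this sketch the GAN only ever sees arrays of size `LATENT_DIM`, which is the point of the low-dimensional formulation; the decoder is applied once, after sampling.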

Computational Spectral Imaging: A Contemporary Overview

Mar 08, 2023

Abstract: Spectral imaging collects and processes information along spatial and spectral coordinates quantified in discrete voxels, which can be treated as a 3D spectral data cube. Spectral images (SIs) allow objects, crops, and materials in the scene to be identified through their spectral behavior. Since most spectral optical systems can only employ 1D or, at most, 2D sensors, it is challenging to acquire the 3D information directly with available commercial sensors. As an alternative, computational spectral imaging (CSI) has emerged as a sensing tool in which the 3D data are obtained from 2D encoded projections. A computational recovery process must then be employed to retrieve the SI. CSI enables the development of snapshot optical systems that reduce acquisition time and lower storage costs compared to conventional scanning systems. Recent advances in deep learning (DL) have allowed the design of data-driven CSI that improves SI reconstruction or, further, performs high-level tasks such as classification, unmixing, or anomaly detection directly from the 2D encoded projections. This work summarises the advances in CSI, starting with SI and its relevance and continuing with the most relevant compressive spectral optical systems. It then introduces CSI with DL and the recent advances in combining physical optical design with computational DL algorithms to solve high-level tasks.
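To make the idea of a 2D encoded projection of a 3D cube concrete, the sketch below implements one commonly used toy forward model: each spectral band is multiplied by a coded aperture, shifted by one pixel per band to mimic dispersion, and summed on the sensor. The abstract does not specify a particular architecture, so the single-pixel shift per band, the binary mask, and the function name `coded_snapshot` are assumptions chosen for illustration.

```python
import numpy as np


def coded_snapshot(cube, mask):
    """Project a (H, W, BANDS) cube onto a 2D (H, W + BANDS - 1) measurement.

    Assumed model: the same coded aperture `mask` (H, W) modulates every
    band, and band k lands on the sensor shifted k columns to the right.
    """
    h, w, bands = cube.shape
    measurement = np.zeros((h, w + bands - 1))
    for k in range(bands):
        measurement[:, k:k + w] += mask * cube[:, :, k]
    return measurement


rng = np.random.default_rng(0)
cube = rng.random((4, 5, 3))                     # toy spectral data cube
mask = (rng.random((4, 5)) > 0.5).astype(float)  # toy binary coded aperture
y = coded_snapshot(cube, mask)                   # single 2D snapshot
```

The measurement has far fewer entries than the cube, which is why a separate recovery step (optimization-based or learned) is needed to retrieve the SI.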

Fast Disparity Estimation from a Single Compressed Light Field Measurement

Sep 22, 2022

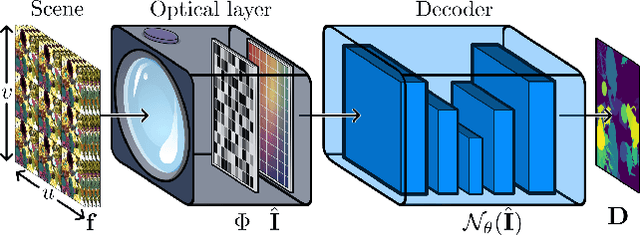

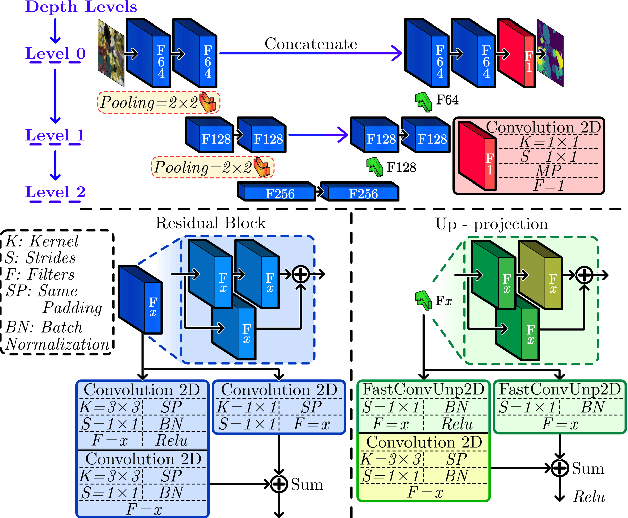

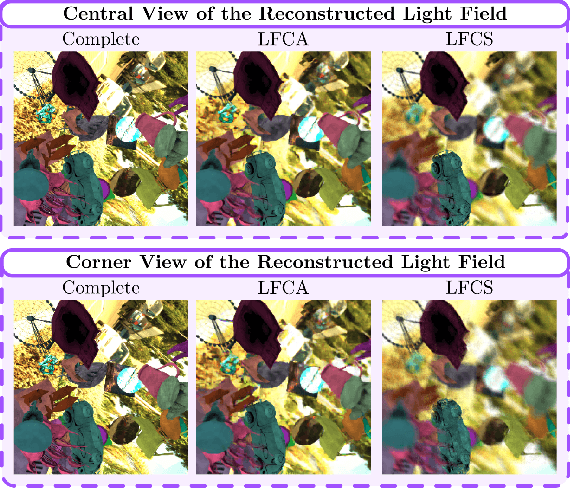

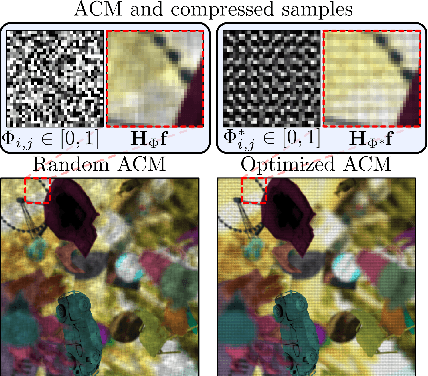

Abstract: The abundant spatial and angular information in light fields has allowed the development of multiple disparity estimation approaches. However, the acquisition of light fields requires high storage and processing costs, limiting the use of this technology in practical applications. To overcome these drawbacks, compressive sensing (CS) theory has allowed the development of optical architectures that acquire a single coded light field measurement. This measurement is decoded using an optimization algorithm or a deep neural network, both of which incur high computational costs. The traditional approach to disparity estimation from compressed light fields first recovers the entire light field and then applies a post-processing step, which is time-consuming. In contrast, this work proposes fast disparity estimation from a single compressed measurement by omitting the recovery step required in traditional approaches. Specifically, we propose to jointly optimize an optical architecture for acquiring a single coded light field snapshot and a convolutional neural network (CNN) for estimating the disparity maps. Experimentally, the proposed method estimates disparity maps comparable with those obtained from light fields reconstructed using deep learning approaches. Furthermore, the proposed method is 20 times faster in training and inference than the best method that estimates the disparity from reconstructed light fields.
