Emmanouil Panagiotou

MMM-fair: An Interactive Toolkit for Exploring and Operationalizing Multi-Fairness Trade-offs

Sep 09, 2025

Abstract: Fairness-aware classification requires balancing performance and fairness, a tension often intensified by intersectional biases. Conflicting fairness definitions further complicate the task, making it difficult to identify universally fair solutions. Despite growing regulatory and societal demands for equitable AI, popular toolkits offer limited support for exploring multi-dimensional fairness and related trade-offs. To address this, we present mmm-fair, an open-source toolkit leveraging boosting-based ensemble approaches that dynamically optimize model weights to jointly minimize classification errors and diverse fairness violations, enabling flexible multi-objective optimization. The system empowers users to deploy models that align with their context-specific needs while reliably uncovering intersectional biases often missed by state-of-the-art methods. In a nutshell, mmm-fair combines in-depth multi-attribute fairness, multi-objective optimization, a no-code, chat-based interface, LLM-powered explanations, interactive Pareto exploration for model selection, custom fairness constraint definition, and deployment-ready models in a single open-source toolkit, a combination rarely found in existing fairness tools. Demo walkthrough available at: https://youtu.be/_rcpjlXFqkw.
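
The Pareto exploration step can be illustrated with a minimal, hypothetical sketch: given candidate models scored on classification error and one or more fairness violations (lower is better for every objective), keep only the models that no other candidate dominates. The function names, objective layout, and scores below are illustrative assumptions, not mmm-fair's API or results.

```python
from typing import Dict, List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if `a` is no worse than `b` on every objective and strictly better on at least one.

    All objectives are assumed to be "lower is better" (error, fairness violations).
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(scores: Dict[str, Sequence[float]]) -> List[str]:
    """Return the sorted names of models whose objective vectors no other model dominates."""
    return sorted(
        name
        for name, s in scores.items()
        if not any(dominates(other, s) for other in scores.values())
    )


if __name__ == "__main__":
    # Hypothetical (error, violation on attribute A, violation on attribute B) scores.
    candidates = {
        "m1": (0.10, 0.30, 0.25),
        "m2": (0.12, 0.15, 0.10),
        "m3": (0.15, 0.05, 0.12),
        "m4": (0.14, 0.20, 0.15),  # dominated by m2 on all three objectives
    }
    print(pareto_front(candidates))  # prints ['m1', 'm2', 'm3']
```

A user would then pick one model from this front according to how much error they are willing to trade for lower violations; because that choice is context-dependent, the front is explored rather than collapsed into a single score.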

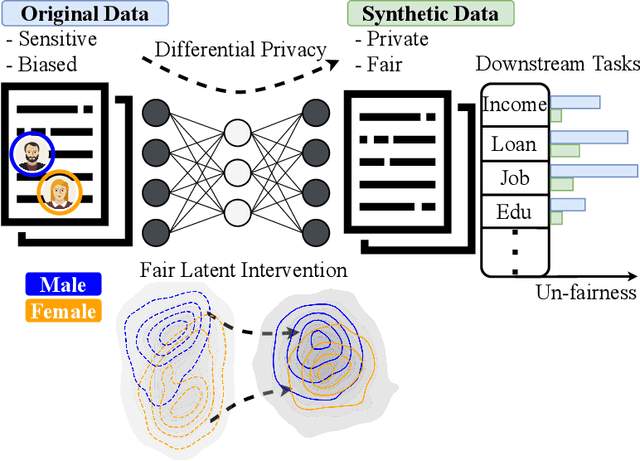

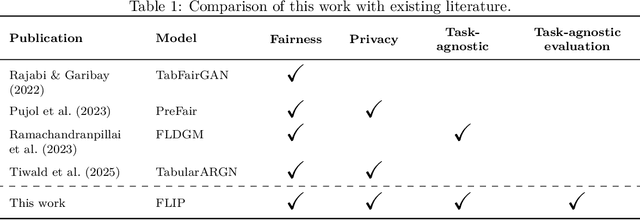

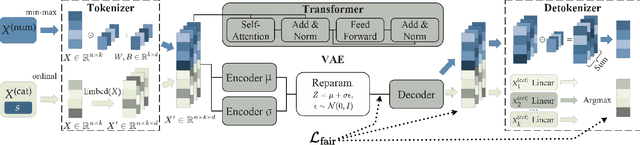

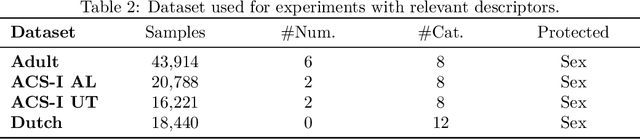

Achieving Hilbert-Schmidt Independence Under Rényi Differential Privacy for Fair and Private Data Generation

Aug 29, 2025

Abstract: As privacy regulations such as the GDPR and HIPAA and responsibility frameworks for artificial intelligence such as the AI Act gain traction, the ethical and responsible use of real-world data faces increasing constraints. Synthetic data generation has emerged as a promising solution to risk-aware data sharing and model development, particularly for tabular datasets that are foundational to sensitive domains such as healthcare. To address both privacy and fairness concerns in this setting, we propose FLIP (Fair Latent Intervention under Privacy guarantees), a transformer-based variational autoencoder augmented with latent diffusion to generate heterogeneous tabular data. Unlike the typical setup in fairness-aware data generation, we assume a task-agnostic setup, not reliant on a fixed, defined downstream task, thus offering broader applicability. To ensure privacy, FLIP employs Rényi differential privacy (RDP) constraints during training and addresses fairness in the input space with RDP-compatible balanced sampling that accounts for group-specific noise levels across multiple sampling rates. In the latent space, we promote fairness by aligning neuron activation patterns across protected groups using Centered Kernel Alignment (CKA), a similarity measure extending the Hilbert-Schmidt Independence Criterion (HSIC). This alignment encourages statistical independence between latent representations and the protected feature. Empirical results demonstrate that FLIP effectively provides significant fairness improvements for task-agnostic fairness and across diverse downstream tasks under differential privacy constraints.
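
CKA normalizes HSIC: CKA(K, L) = HSIC(K, L) / sqrt(HSIC(K, K) HSIC(L, L)). With linear kernels on column-centered activation matrices X and Y whose rows are the same n inputs, this reduces to `||Y^T X||_F^2 / (||X^T X||_F ||Y^T Y||_F)` (the 1/(n-1)^2 factors in HSIC cancel). The pure-Python sketch below computes only this linear variant. It is an assumption that FLIP uses the linear kernel, and the abstract does not say how activations from different protected groups are paired into matrices, so how FLIP turns the similarity into a training loss is not reproduced here.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = inputs, columns = neurons


def center_columns(m: Matrix) -> Matrix:
    """Subtract each column's mean so every neuron has zero mean activation."""
    n = len(m)
    means = [sum(row[j] for row in m) / n for j in range(len(m[0]))]
    return [[v - mu for v, mu in zip(row, means)] for row in m]


def cross_product(a: Matrix, b: Matrix) -> Matrix:
    """Compute a^T b for two matrices with the same number of rows."""
    return [
        [sum(a[i][p] * b[i][q] for i in range(len(a))) for q in range(len(b[0]))]
        for p in range(len(a[0]))
    ]


def frobenius_sq(m: Matrix) -> float:
    """Squared Frobenius norm: sum of squared entries."""
    return sum(v * v for row in m for v in row)


def linear_cka(x: Matrix, y: Matrix) -> float:
    """Linear CKA in [0, 1]; assumes neither matrix is constant after centering."""
    xc, yc = center_columns(x), center_columns(y)
    hsic_xy = frobenius_sq(cross_product(yc, xc))            # ||Y^T X||_F^2
    norm_x = math.sqrt(frobenius_sq(cross_product(xc, xc)))  # ||X^T X||_F
    norm_y = math.sqrt(frobenius_sq(cross_product(yc, yc)))  # ||Y^T Y||_F
    return hsic_xy / (norm_x * norm_y)


if __name__ == "__main__":
    acts = [[1.0, 2.0], [2.0, 0.5], [3.0, 5.0], [4.0, 3.0]]
    swapped = [[r[1], r[0]] for r in acts]  # same representation, neurons permuted
    print(linear_cka(acts, swapped))        # 1.0 up to floating-point error
```

Because CKA is invariant to neuron permutations and isotropic scaling, it compares the structure of two representations rather than individual neuron values, which is what makes it usable for aligning activation patterns.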

Explanations as Bias Detectors: A Critical Study of Local Post-hoc XAI Methods for Fairness Exploration

May 01, 2025

Abstract: As Artificial Intelligence (AI) is increasingly used in areas that significantly impact human lives, concerns about fairness and transparency have grown, especially regarding AI's impact on protected groups. Recently, the intersection of explainability and fairness has emerged as an important area to promote responsible AI systems. This paper explores how explainability methods can be leveraged to detect and interpret unfairness. We propose a pipeline that integrates local post-hoc explanation methods to derive fairness-related insights. During the pipeline design, we identify and address critical questions arising from the use of explanations as bias detectors, such as the relationship between distributive and procedural fairness, the effect of removing the protected attribute, the consistency and quality of results across different explanation methods, the impact of various aggregation strategies of local explanations on group fairness evaluations, and the overall trustworthiness of explanations as bias detectors. Our results show the potential of explanation methods used for fairness while highlighting the need to carefully consider the aforementioned critical aspects.
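
The abstract notes that the strategy used to aggregate local explanations affects group-level fairness evaluations. The hypothetical sketch below illustrates one reason why. It assumes each instance already has a local attribution score for a single feature (for example from a method such as SHAP or LIME; the paper's actual explainers and aggregation strategies are not reproduced here) and compares a signed mean with a mean of absolute values per protected group.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def aggregate_by_group(attributions: Sequence[float], groups: Sequence[str],
                       strategy: str = "mean") -> Dict[str, float]:
    """Aggregate per-instance attributions of one feature within each protected group.

    "mean" keeps the sign, so positive and negative contributions can cancel;
    "mean_abs" measures how strongly the model relies on the feature, in either direction.
    """
    buckets: Dict[str, List[float]] = defaultdict(list)
    for value, group in zip(attributions, groups):
        buckets[group].append(value)
    if strategy == "mean":
        return {g: sum(vals) / len(vals) for g, vals in buckets.items()}
    if strategy == "mean_abs":
        return {g: sum(abs(v) for v in vals) / len(vals) for g, vals in buckets.items()}
    raise ValueError(f"unknown strategy: {strategy!r}")


if __name__ == "__main__":
    # Hypothetical local attributions for one feature, with each instance's protected group.
    attributions = [0.4, -0.4, 0.1, 0.1]
    groups = ["a", "a", "b", "b"]
    print(aggregate_by_group(attributions, groups, "mean"))      # {'a': 0.0, 'b': 0.1}
    print(aggregate_by_group(attributions, groups, "mean_abs"))  # {'a': 0.4, 'b': 0.1}
```

In this toy example the signed mean suggests the feature plays no role for group "a", while the absolute mean shows that group "a" is the group whose predictions depend on it most.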

TABCF: Counterfactual Explanations for Tabular Data Using a Transformer-Based VAE

Oct 14, 2024

Abstract: In the field of Explainable AI (XAI), counterfactual (CF) explanations are one prominent method to interpret a black-box model by suggesting changes to the input that would alter a prediction. In real-world applications, the input is predominantly in tabular form and composed of mixed data types and complex feature interdependencies. These unique data characteristics are difficult to model, and we empirically show that they lead to bias towards specific feature types when generating CFs. To overcome this issue, we introduce TABCF, a CF explanation method that leverages a transformer-based Variational Autoencoder (VAE) tailored for modeling tabular data. Our approach uses transformers to learn a continuous latent space and a novel Gumbel-Softmax detokenizer that enables precise categorical reconstruction while preserving end-to-end differentiability. Extensive quantitative evaluation on five financial datasets demonstrates that TABCF does not exhibit bias toward specific feature types, and outperforms existing methods in producing effective CFs that align with common CF desiderata.
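
TABCF's detokenizer is described as a Gumbel-Softmax detokenizer. The generic Gumbel-Softmax relaxation makes sampling from a categorical distribution differentiable by adding Gumbel(0, 1) noise to the logits and applying a temperature-scaled softmax. The plain-Python sketch below shows only that generic relaxation; the detokenizer architecture itself is not reproduced, and the temperature value is an illustrative assumption.

```python
import math
import random
from typing import List, Optional


def gumbel_softmax(logits: List[float], tau: float = 0.5,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Draw a relaxed one-hot sample over categories from unnormalized logits."""
    rng = rng or random.Random()
    noisy = []
    for logit in logits:
        # Gumbel(0, 1) noise via inverse CDF: g = -log(-log(u)), u ~ Uniform(0, 1).
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep u away from 0 and 1
        noisy.append((logit - math.log(-math.log(u))) / tau)
    # Numerically stable softmax over the perturbed, temperature-scaled logits.
    m = max(noisy)
    exps = [math.exp(v - m) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    probs = gumbel_softmax([2.0, 0.5, -1.0], tau=0.5, rng=random.Random(0))
    print(probs, "-> category", probs.index(max(probs)))
```

Lower temperatures push the output toward a one-hot vector (a crisp category), while higher temperatures give smoother outputs; in an autograd framework the same arithmetic would be written with tensor operations so gradients can flow through the sample.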

Synthetic Tabular Data Generation for Class Imbalance and Fairness: A Comparative Study

Sep 08, 2024

Abstract: Due to their data-driven nature, Machine Learning (ML) models are susceptible to bias inherited from data, especially in classification problems where class and group imbalances are prevalent. Class imbalance (in the classification target) and group imbalance (in protected attributes like sex or race) can undermine both ML utility and fairness. Although class and group imbalances commonly coincide in real-world tabular datasets, few methods address this scenario. While most methods use oversampling techniques, like interpolation, to mitigate imbalances, recent advancements in synthetic tabular data generation offer promise but have not been adequately explored for this purpose. To this end, this paper conducts a comparative analysis of state-of-the-art models for synthetic tabular data generation, combined with various sampling strategies, for addressing class and group imbalances. Experimental results on four datasets demonstrate the effectiveness of generative models for bias mitigation, creating opportunities for further exploration in this direction.
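
For reference, the interpolation-based oversampling that the abstract contrasts with generative models can be sketched in the style of SMOTE: each synthetic point lies on the segment between a minority sample and its nearest minority neighbor. This is a simplified, hypothetical illustration (one nearest neighbor, plain Python lists), not the study's experimental setup.

```python
import random
from typing import List, Optional

Point = List[float]


def interpolate_oversample(minority: List[Point], n_new: int,
                           rng: Optional[random.Random] = None) -> List[Point]:
    """SMOTE-style oversampling: x_new = x + lam * (nn - x) with lam ~ Uniform[0, 1).

    `nn` is the single nearest neighbor of `x` (squared Euclidean distance)
    among the other minority samples.
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = rng or random.Random()
    synthetic: List[Point] = []
    for _ in range(n_new):
        idx = rng.randrange(len(minority))
        x = minority[idx]
        others = [p for j, p in enumerate(minority) if j != idx]
        nn = min(others, key=lambda p: sum((a - b) ** 2 for a, b in zip(x, p)))
        lam = rng.random()
        synthetic.append([a + lam * (b - a) for a, b in zip(x, nn)])
    return synthetic


if __name__ == "__main__":
    minority = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
    print(interpolate_oversample(minority, 3, rng=random.Random(42)))
```

Interpolation only produces points between existing minority samples, which is one motivation for trying generative models that learn the full data distribution instead.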

Procedural 3D Terrain Generation using Generative Adversarial Networks

Oct 13, 2020

Abstract: Procedural 3D terrain generation has become a necessity in open-world games, as it can provide unlimited content, through a functionally infinite number of different areas, for players to explore. In our approach, we use Generative Adversarial Networks (GANs) to yield realistic 3D environments based on the distribution of remotely sensed images of landscapes, captured by satellites or drones. Our task consists of synthesizing a random but plausible RGB satellite image and generating a corresponding height map in the form of a 3D point cloud that will serve as an appropriate mesh of the landscape. For the first step, we utilize a GAN trained on satellite images that learns the distribution of the dataset and creates novel satellite images. For the second step, we need a one-to-one mapping from RGB images to Digital Elevation Models (DEMs). We deploy a Conditional Generative Adversarial Network (CGAN), which is the state-of-the-art approach to image-to-image translation, to generate a plausible height map for every randomly generated image of the first model. Combining the generated DEM and RGB image, we are able to construct 3D scenery consisting of a plausible height distribution and colorization, in relation to the remotely sensed landscapes provided during training.
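
The final step, combining the generated DEM with the generated RGB image, can be illustrated by turning an aligned height grid and color image into a colored 3D point cloud. This is a minimal sketch under stated assumptions: both inputs are equally sized grids, `cell_size` (horizontal spacing) and `z_scale` (vertical exaggeration) are hypothetical parameters, and the GAN and CGAN that would produce the inputs are not shown.

```python
from typing import List, Tuple

HeightMap = List[List[float]]                 # H x W elevations (the generated DEM)
RGBImage = List[List[Tuple[int, int, int]]]   # H x W pixels (the generated satellite image)
ColoredPoint = Tuple[float, float, float, int, int, int]


def heightmap_to_point_cloud(dem: HeightMap, rgb: RGBImage, cell_size: float = 1.0,
                             z_scale: float = 1.0) -> List[ColoredPoint]:
    """Convert an aligned DEM and RGB image into (x, y, z, r, g, b) points, one per pixel."""
    if len(dem) != len(rgb) or any(len(h) != len(c) for h, c in zip(dem, rgb)):
        raise ValueError("DEM and RGB image must have the same height and width")
    points: List[ColoredPoint] = []
    for row, (heights, colors) in enumerate(zip(dem, rgb)):
        for col, (z, (r, g, b)) in enumerate(zip(heights, colors)):
            # Grid position gives x/y; the DEM value gives the elevation z.
            points.append((col * cell_size, row * cell_size, z * z_scale, r, g, b))
    return points


if __name__ == "__main__":
    dem = [[0.0, 1.0], [2.0, 3.0]]
    rgb = [[(10, 20, 30), (40, 50, 60)], [(70, 80, 90), (100, 110, 120)]]
    for p in heightmap_to_point_cloud(dem, rgb, cell_size=2.0):
        print(p)
```

A mesh could then be formed by triangulating neighboring grid cells, though the abstract does not say how the paper builds its mesh.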