Emily Zimmerman

Subtle Signals: Video-based Detection of Infant Non-nutritive Sucking as a Neurodevelopmental Cue

Oct 24, 2023

Abstract: Non-nutritive sucking (NNS), which refers to the act of sucking on a pacifier, finger, or similar object without nutrient intake, plays a crucial role in assessing healthy early development. In the case of preterm infants, NNS behavior is a key component in determining their readiness for feeding. In older infants, the characteristics of NNS behavior offer valuable insights into neural and motor development. Additionally, NNS activity has been proposed as a potential safeguard against sudden infant death syndrome (SIDS). However, the clinical application of NNS assessment is currently hindered by labor-intensive and subjective finger-in-mouth evaluations. Consequently, researchers often resort to expensive pressure transducers for objective NNS signal measurement. To enhance the accessibility and reliability of NNS signal monitoring for both clinicians and researchers, we introduce a vision-based algorithm designed for non-contact detection of NNS activity using baby monitor footage in natural settings. Our approach involves a comprehensive exploration of optical flow and temporal convolutional networks, enabling the detection and amplification of subtle infant-sucking signals. We successfully classify short video clips of uniform length into NNS and non-NNS periods. Furthermore, we investigate manual and learning-based techniques to piece together local classification results, facilitating the segmentation of longer mixed-activity videos into NNS and non-NNS segments of varying duration. Our research introduces two novel datasets of annotated infant videos, including one sourced from our clinical study featuring 19 infant subjects and 183 hours of overnight baby monitor footage.

A Video-based End-to-end Pipeline for Non-nutritive Sucking Action Recognition and Segmentation in Young Infants

Mar 29, 2023

Abstract: We present an end-to-end computer vision pipeline to detect non-nutritive sucking (NNS), an infant sucking pattern with no nutrition delivered, as a potential biomarker for developmental delays, using off-the-shelf baby monitor video footage. One barrier to clinical (or algorithmic) assessment of NNS stems from its sparsity, requiring experts to wade through hours of footage to find minutes of relevant activity. Our NNS activity segmentation algorithm solves this problem by identifying periods of NNS with high certainty: up to 94.0% average precision and 84.9% average recall across 30 heterogeneous 60 s clips, drawn from our manually annotated NNS clinical in-crib dataset of 183 hours of overnight baby monitor footage from 19 infants. Our method is based on an underlying NNS action recognition algorithm, which uses spatiotemporal deep learning networks and infant-specific pose estimation, achieving 94.9% accuracy in binary classification of 960 2.5 s balanced NNS vs. non-NNS clips. Tested on our second, independent, and public NNS in-the-wild dataset, NNS recognition classification reaches 92.3% accuracy, and NNS segmentation achieves 90.8% precision and 84.2% recall.

Infant Contact-less Non-Nutritive Sucking Pattern Quantification via Facial Gesture Analysis

Jun 05, 2019

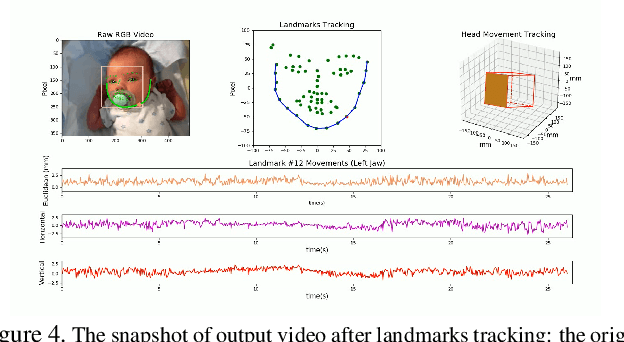

Abstract: Non-nutritive sucking (NNS) is defined as the sucking action that occurs when a finger, pacifier, or other object is placed in the baby's mouth but no nutrient is delivered. In addition to providing a sense of safety, NNS can be regarded as an indicator of an infant's central nervous system development. Rich data, such as the sucking frequency, the number of cycles, and their amplitude during non-nutritive sucking, are important clues for judging the brain development of infants, including preterm infants. Most researchers currently collect NNS data using contact devices such as pressure transducers. However, such invasive contact has a direct impact on the baby's natural sucking behavior, resulting in significant distortion in the collected data. Therefore, we propose a novel contact-less NNS data acquisition and quantification scheme, which leverages facial landmark tracking to extract the movement signals of the baby's jaw from recorded video of the baby sucking. Since completing the sucking action requires a large amount of synchronous coordination and neural integration of the facial muscles and the cranial nerves, the facial muscle movement signals that accompany the baby's sucking on a pacifier can serve as an indirect proxy for the NNS signal. We have evaluated our method on videos collected from several infants during their NNS behaviors and obtained quantified NNS patterns closely comparable to results from visual inspection as well as contact-based sensor readings.
