Elsa Andrea Kirchner

A Feature Extraction Pipeline for Enhancing Lightweight Neural Networks in sEMG-based Joint Torque Estimation

Jan 23, 2026

Abstract:Robot-assisted rehabilitation offers an effective approach, wherein exoskeletons adapt to users' needs and provide personalized assistance. However, to deliver such assistance, accurate prediction of the user's joint torques is essential. In this work, we propose a feature extraction pipeline using 8-channel surface electromyography (sEMG) signals to predict elbow and shoulder joint torques. For preliminary evaluation, this pipeline was integrated into two neural network models: the Multilayer Perceptron (MLP) and the Temporal Convolutional Network (TCN). Data were collected from a single subject performing elbow and shoulder movements under three load conditions (0 kg, 1.10 kg, and 1.85 kg) using three motion-capture cameras. Reference torques were estimated from center-of-mass kinematics under the assumption of static equilibrium. Our offline analyses showed that, with our feature extraction pipeline, the MLP model achieved a mean RMSE of 0.963 N m, 1.403 N m, and 1.434 N m (over five seeds) for the elbow, front-shoulder, and side-shoulder joints, respectively, which was comparable to the TCN performance. These results demonstrate that the proposed feature extraction pipeline enables a simple MLP to achieve performance comparable to that of a network designed explicitly for temporal dependencies. This finding is particularly relevant for applications with limited training data, a common scenario in patient care.
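To illustrate what a reference torque "under the assumption of static equilibrium" can look like, here is a minimal sketch for the elbow only. It is not the authors' implementation: the function name, the single-segment forearm model, and all masses and distances are hypothetical values chosen for illustration. The load of 1.10 kg is the only number taken from the abstract.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def static_elbow_torque(forearm_mass_kg, forearm_com_m,
                        load_mass_kg, load_dist_m,
                        angle_from_vertical_rad):
    """Gravity torque (N m) about the elbow for a forearm held still.

    Assumption: the forearm is one rigid segment and the load is a point
    mass at the hand. The angle is measured between the forearm and the
    downward vertical, so the horizontal lever arm of each mass is its
    distance from the elbow times sin(angle).
    """
    lever_factor = math.sin(angle_from_vertical_rad)
    moment_arm_mass = (forearm_mass_kg * forearm_com_m
                       + load_mass_kg * load_dist_m)
    return G * lever_factor * moment_arm_mass


# Hypothetical anthropometrics: 1.5 kg forearm with its center of mass
# 0.15 m from the elbow, and the 1.10 kg load held 0.35 m from the elbow.
torque_horizontal = static_elbow_torque(1.5, 0.15, 1.10, 0.35, math.pi / 2)
torque_hanging = static_elbow_torque(1.5, 0.15, 1.10, 0.35, 0.0)
```

With the forearm hanging straight down the lever arm vanishes and the torque is zero; with the forearm horizontal the torque is maximal. In a motion-capture setting, the angle and center-of-mass positions would come from the tracked markers rather than from fixed constants.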

Enhancing Robustness of Asynchronous EEG-Based Movement Prediction using Classifier Ensembles

Jan 07, 2026

Abstract:Objective: Stroke is one of the leading causes of disabilities. One promising approach is to extend the rehabilitation with self-initiated robot-assisted movement therapy. To enable this, it is required to detect the patient's intention to move to trigger the assistance of a robotic device. This intention to move can be detected from human surface electroencephalography (EEG) signals; however, it is particularly challenging to decode when classifications are performed online and asynchronously. In this work, the effectiveness of classifier ensembles and a sliding-window postprocessing technique was investigated to enhance the robustness of such asynchronous classification. Approach: To investigate the effectiveness of classifier ensembles and a sliding-window postprocessing, two EEG datasets with 14 healthy subjects who performed self-initiated arm movements were analyzed. Offline and pseudo-online evaluations were conducted to compare ensemble combinations of the support vector machine (SVM), multilayer perceptron (MLP), and EEGNet classification models. Results: The results of the pseudo-online evaluation show that the two model ensembles significantly outperformed the best single model for the optimal number of postprocessing windows. In particular, for single models, an increased number of postprocessing windows significantly improved classification performances. Interestingly, we found no significant improvements between performances of the best single model and classifier ensembles in the offline evaluation. Significance: We demonstrated that classifier ensembles and appropriate postprocessing methods effectively enhance the asynchronous detection of movement intentions from EEG signals. In particular, the classifier ensemble approach yields greater improvements in online classification than in offline classification, and reduces false detections, i.e., early false positives.
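The interplay of a classifier ensemble and sliding-window postprocessing can be sketched as follows. This is an illustrative assumption, not the method from the paper: the abstract does not state how ensemble outputs are combined or how the postprocessing windows are evaluated. The sketch averages per-model probabilities and reports a detection only when the last `n_windows` consecutive windows are all positive. The function names, the threshold, and the probability values are hypothetical.

```python
def ensemble_probability(model_probs):
    """Average the movement probabilities of the individual models."""
    return sum(model_probs) / len(model_probs)


def detect_movement(window_probs, n_windows, threshold=0.5):
    """Return the window indices at which a detection is raised.

    window_probs: one tuple per sliding window, holding the probability
    assigned to 'movement intention' by each model in the ensemble.
    A detection is raised at window i only if the ensemble output has
    exceeded the threshold in n_windows consecutive windows ending at i.
    """
    detections = []
    positive_run = 0
    for i, probs in enumerate(window_probs):
        if ensemble_probability(probs) > threshold:
            positive_run += 1
        else:
            positive_run = 0
        if positive_run >= n_windows:
            detections.append(i)
    return detections


# Hypothetical outputs of three models over five consecutive windows.
stream = [
    (0.9, 0.2, 0.3),  # one model fires alone; the ensemble mean stays below 0.5
    (0.6, 0.7, 0.8),
    (0.7, 0.9, 0.6),
    (0.2, 0.1, 0.3),
    (0.8, 0.9, 0.7),
]
print(detect_movement(stream, n_windows=1))  # prints [1, 2, 4]
print(detect_movement(stream, n_windows=2))  # prints [2]
```

Requiring more consecutive positive windows suppresses isolated positives at the cost of a later detection, which is one plausible way such postprocessing can reduce early false positives.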

EEG classifier cross-task transfer to avoid training sessions in robot-assisted rehabilitation

Feb 26, 2024

Abstract:Background: For an individualized support of patients during rehabilitation, learning of individual machine learning models from the human electroencephalogram (EEG) is required. Our approach allows labeled training data to be recorded without the need for a specific training session. For this, the planned exoskeleton-assisted rehabilitation enables bilateral mirror therapy, in which movement intentions can be inferred from the activity of the unaffected arm. During this therapy, labeled EEG data can be collected to enable movement predictions of only the affected arm of a patient. Methods: A study was conducted with 8 healthy subjects and the performance of the classifier transfer approach was evaluated. Each subject performed 3 runs of 40 self-intended unilateral and bilateral reaching movements toward a target while EEG data was recorded from 64 channels. A support vector machine (SVM) classifier was trained under both movement conditions to make predictions for the same type of movement. Furthermore, the classifier was evaluated to predict unilateral movements by only being trained on the data of the bilateral movement condition. Results: The results show that the performance of the classifier trained on selected EEG channels evoked by bilateral movement intentions is not significantly reduced compared to a classifier trained directly on EEG data including unilateral movement intentions. Moreover, the results show that our approach also works with only 8 or even 4 channels. Conclusion: It was shown that the proposed classifier transfer approach enables motion prediction without explicit collection of training data. Since the approach can be applied even with a small number of EEG channels, this speaks for the feasibility of the approach in real therapy sessions with patients and motivates further investigations with stroke patients.

EEG and EMG dataset for the detection of errors introduced by an active orthosis device

May 25, 2023

Abstract:This paper presents a dataset containing recordings of the electroencephalogram (EEG) and the electromyogram (EMG) from eight subjects who were assisted in moving their right arm by an active orthosis device. The supported movements were elbow joint movements, i.e., flexion and extension of the right arm. While the orthosis was actively moving the subject's arm, some errors were deliberately introduced for a short duration of time. During this time, the orthosis moved in the opposite direction. In this paper, we explain the experimental setup and present some behavioral analyses across all subjects. Additionally, we present an average event-related potential analysis for one subject to offer insights into the data quality and the EEG activity caused by the error introduction. The dataset described herein is openly accessible. The aim of this study was to provide a dataset to the research community, particularly for the development of new methods in the asynchronous detection of erroneous events from the EEG. We are especially interested in the tactile and haptic-mediated recognition of errors, which has not yet been sufficiently investigated in the literature. We hope that the detailed description of the orthosis and the experiment will enable its reproduction and facilitate a systematic investigation of the influencing factors in the detection of erroneous behavior of assistive systems by a large community.

Continuous ErrP detections during multimodal human-robot interaction

Jul 25, 2022

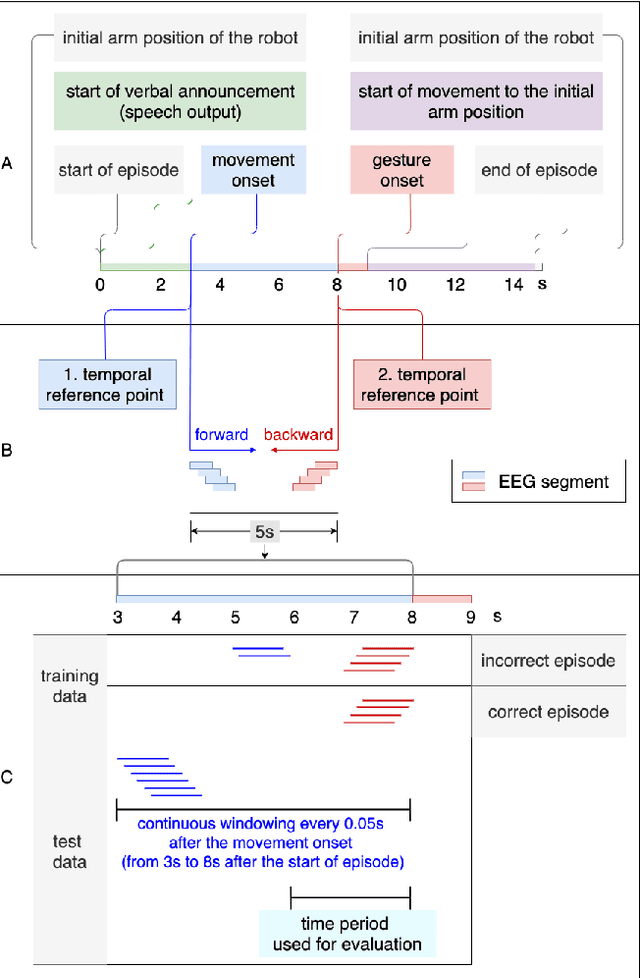

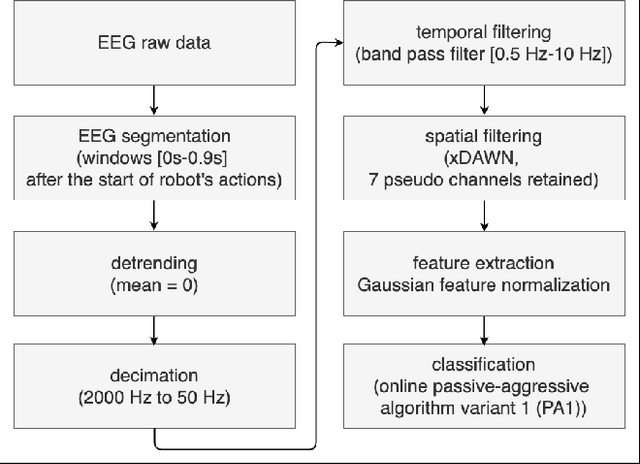

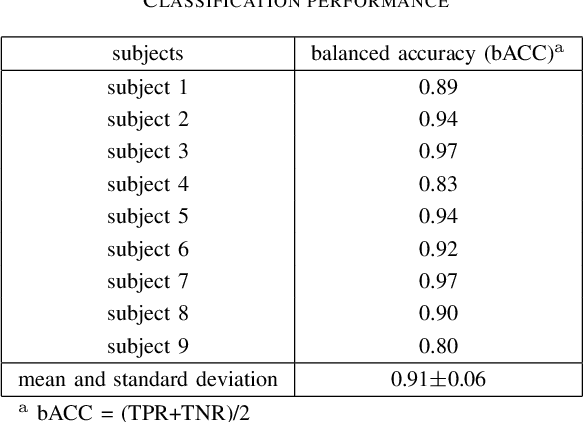

Abstract:Human-in-the-loop approaches are of great importance for robot applications. In the presented study, we implemented a multimodal human-robot interaction (HRI) scenario, in which a simulated robot communicates with its human partner through speech and gestures. The robot announces its intention verbally and selects the appropriate action using pointing gestures. The human partner, in turn, evaluates whether the robot's verbal announcement (intention) matches the action (pointing gesture) chosen by the robot. For cases where the verbal announcement of the robot does not match the corresponding action choice of the robot, we expect error-related potentials (ErrPs) in the human electroencephalogram (EEG). These intrinsic evaluations of robot actions by humans, evident in the EEG, were recorded in real time, continuously segmented online and classified asynchronously. For feature selection, we propose an approach that allows the combinations of forward and backward sliding windows to train a classifier. We achieved an average classification performance of 91% across 9 subjects. As expected, we also observed a relatively high variability between the subjects. In the future, the proposed feature selection approach will be extended to allow for customization of feature selection. To this end, the best combinations of forward and backward sliding windows will be automatically selected to account for inter-subject variability in classification performance. In addition, we plan to use the intrinsic human error evaluation evident in the error case by the ErrP in interactive reinforcement learning to improve multimodal human-robot interaction.
