Elisabeth Moyer

Insight into cloud processes from unsupervised classification with a rotationally invariant autoencoder

Nov 20, 2022

Abstract: Clouds play a critical role in the Earth's energy budget, and their potential changes are one of the largest uncertainties in future climate projections. However, the use of satellite observations to understand cloud feedbacks in a warming climate has been hampered by the simplicity of existing cloud classification schemes, which are based on single-pixel cloud properties rather than on spatial structures and textures. Recent advances in computer vision enable the grouping of image patterns without human-predefined labels, providing a novel means of automated cloud classification. This unsupervised learning approach allows both the discovery of unknown climate-relevant cloud patterns and the automated processing of large datasets. We describe here the use of such methods to generate a new AI-driven Cloud Classification Atlas (AICCA), which leverages 22 years and 800 terabytes of MODIS satellite observations over the global ocean. We use a rotation-invariant cloud clustering (RICC) method to classify those observations into 42 AI-generated cloud class labels at ~100 km spatial resolution. As a case study, we use AICCA to examine a recent finding of decreasing cloudiness in a critical part of the subtropical stratocumulus deck, and show that the change is accompanied by strong trends in cloud classes.

Cloud Classification with Unsupervised Deep Learning

Sep 30, 2022

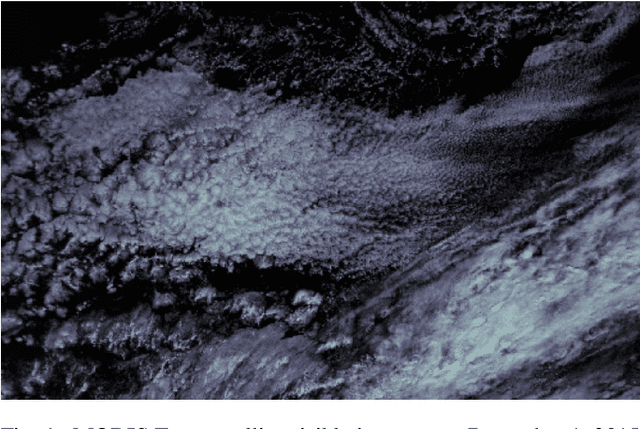

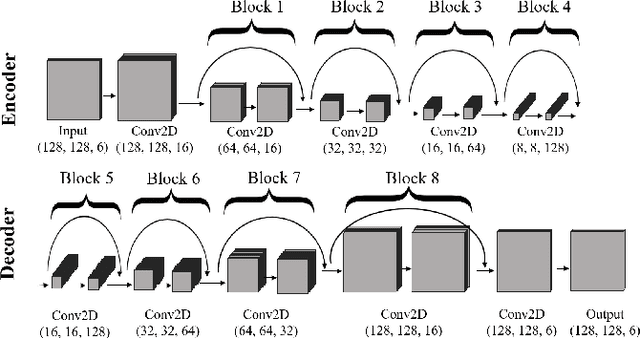

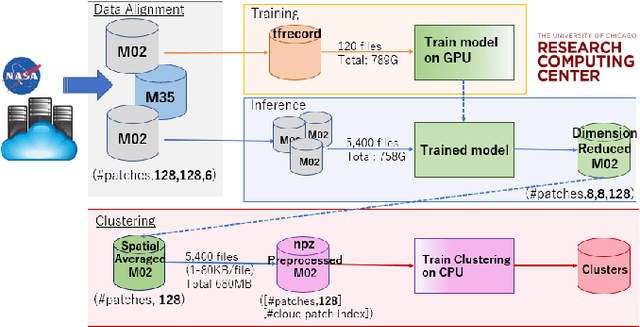

Abstract: We present a framework for cloud characterization that leverages modern unsupervised deep learning technologies. While previous neural network-based cloud classification models have used supervised learning methods, unsupervised learning avoids restricting the model to artificial categories based on historical cloud classification schemes and enables the discovery of novel, more detailed classifications. Our framework learns cloud features directly from radiance data produced by NASA's Moderate Resolution Imaging Spectroradiometer (MODIS) satellite instrument, deriving cloud characteristics from millions of images without relying on pre-defined cloud types during the training process. We present preliminary results showing that our method extracts physically relevant information from radiance data and produces meaningful cloud classes.

AICCA: AI-driven Cloud Classification Atlas

Sep 29, 2022

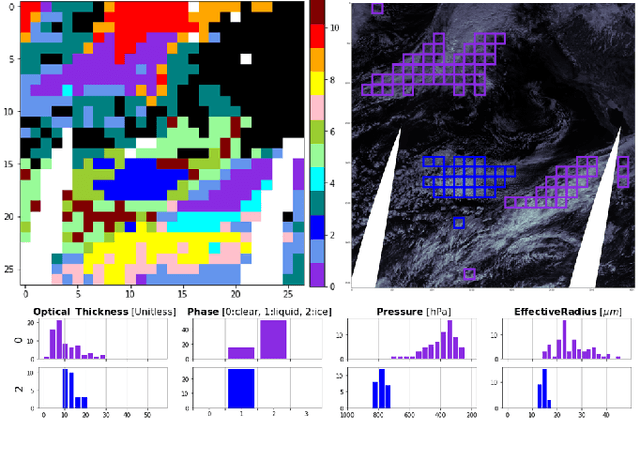

Abstract: Clouds play an important role in the Earth's energy budget, and their behavior is one of the largest uncertainties in future climate projections. Satellite observations should help in understanding cloud responses, but decades and petabytes of multispectral cloud imagery have to date received only limited use. This study reduces the dimensionality of satellite cloud observations by grouping them with a novel automated, unsupervised cloud classification technique based on a convolutional neural network. Our technique combines a rotation-invariant autoencoder with hierarchical agglomerative clustering to generate cloud clusters that capture meaningful distinctions among cloud textures, using only raw multispectral imagery as input. Thus, cloud classes are defined without reliance on location, time/season, derived physical properties, or pre-designated class definitions. We use this approach to generate a unique new cloud dataset, the AI-driven Cloud Classification Atlas (AICCA), which clusters 22 years of ocean images from the Moderate Resolution Imaging Spectroradiometer (MODIS) instruments on NASA's Aqua and Terra satellites (800 TB of data, or 198 million patches of roughly 100 km x 100 km, i.e., 128 x 128 pixels) into 42 AI-generated cloud classes. We show that AICCA classes involve meaningful distinctions that employ spatial information and result in distinct geographic distributions, capturing, for example, stratocumulus decks along the west coasts of North and South America. AICCA delivers the information in multispectral images in a compact form, enables data-driven diagnosis of patterns of cloud organization, provides insight into cloud evolution on timescales of hours to decades, and helps democratize climate research by facilitating access to core data.
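The clustering stage described in this abstract can be illustrated with a minimal sketch. It assumes that each patch has already been reduced to a latent vector by the autoencoder, and it groups those vectors by hierarchical agglomerative clustering. The Ward-style merge cost, the function names, and the toy two-dimensional latent vectors are all assumptions made for illustration; the abstract does not specify the linkage criterion or the latent dimension, and a naive loop like this one would not scale to millions of patches.

```python
from itertools import combinations


def centroid(points):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dim)]


def merge_cost(a, b):
    """Ward-style cost: growth in within-cluster variance if a and b merge.

    The choice of Ward linkage is an assumption for this sketch.
    """
    ca, cb = centroid(a), centroid(b)
    dist2 = sum((x - y) ** 2 for x, y in zip(ca, cb))
    return len(a) * len(b) / (len(a) + len(b)) * dist2


def agglomerate(latents, n_clusters):
    """Merge the cheapest pair of clusters until n_clusters remain.

    Returns one integer label per input vector.
    """
    clusters = [[i] for i in range(len(latents))]  # start with singletons
    while len(clusters) > n_clusters:
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: merge_cost(
                [latents[k] for k in clusters[ij[0]]],
                [latents[k] for k in clusters[ij[1]]],
            ),
        )
        clusters[i] = clusters[i] + clusters[j]  # i < j, so deleting j is safe
        del clusters[j]
    labels = [0] * len(latents)
    for label, members in enumerate(clusters):
        for k in members:
            labels[k] = label
    return labels


# Hypothetical latent vectors standing in for encoded cloud patches.
toy_latents = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [10.0, 0.0], [10.0, 0.2]]
toy_labels = agglomerate(toy_latents, n_clusters=3)
```

In this toy setting, nearby vectors end up sharing a label, which mirrors how patches with similar textures would fall into the same cloud class.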

Data-driven Cloud Clustering via a Rotationally Invariant Autoencoder

Mar 08, 2021

Abstract: Advanced satellite-borne remote sensing instruments produce high-resolution multispectral data for much of the globe at a daily cadence. These datasets open up the possibility of improved understanding of cloud dynamics and feedbacks, which remain the biggest source of uncertainty in global climate model projections. As a step towards answering these questions, we describe an automated rotation-invariant cloud clustering (RICC) method that leverages deep learning autoencoder technology to organize cloud imagery within large datasets in an unsupervised fashion, free from assumptions about predefined classes. We describe both the design and implementation of this method and its evaluation, which uses a sequence of testing protocols to determine whether the resulting clusters: (1) are physically reasonable (i.e., embody scientifically relevant distinctions); (2) capture information on spatial distributions, such as textures; (3) are cohesive and separable in latent space; and (4) are rotationally invariant (i.e., insensitive to the orientation of an image). Results obtained when these evaluation protocols are applied to RICC outputs suggest that the resulting novel cloud clusters capture meaningful aspects of cloud physics, are appropriately spatially coherent, and are invariant to the orientation of input images. Our results support the possibility of using an unsupervised data-driven approach for automated clustering and pattern discovery in cloud imagery.
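Criterion (4) can be made concrete with a small sketch of a rotation-sensitivity check: encode an image and its rotated copies, then measure how far the embeddings move. This is not the paper's test protocol. The two stand-in encoders, the function names, and the restriction to 90-degree rotations (which avoids interpolation) are assumptions made for illustration.

```python
import math


def rot90(img):
    """Rotate a square 2-D list of values by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def rotation_sensitivity(encoder, img):
    """Largest embedding distance between img and its 90/180/270-degree rotations.

    A value near zero suggests the encoder ignores orientation.
    """
    base = encoder(img)
    worst = 0.0
    rotated = img
    for _ in range(3):
        rotated = rot90(rotated)
        worst = max(worst, math.dist(base, encoder(rotated)))
    return worst


def invariant_encoder(img):
    """Hypothetical encoder: mean brightness and total neighbor contrast.

    Both statistics are unchanged by 90-degree rotations.
    """
    n = len(img)
    mean = sum(v for row in img for v in row) / (n * n)
    contrast = 0.0
    for r in range(n):
        for c in range(n):
            if r + 1 < n:
                contrast += abs(img[r][c] - img[r + 1][c])
            if c + 1 < n:
                contrast += abs(img[r][c] - img[r][c + 1])
    return [mean, contrast]


def oriented_encoder(img):
    """Hypothetical encoder that depends on orientation: top vs. bottom mean."""
    n = len(img)
    half = n // 2
    top = sum(sum(row) for row in img[:half]) / (half * n)
    bottom = sum(sum(row) for row in img[half:]) / ((n - half) * n)
    return [top, bottom]


# Toy 4 x 4 "image" with a vertical brightness gradient.
gradient = [[r] * 4 for r in range(4)]
invariant_score = rotation_sensitivity(invariant_encoder, gradient)
oriented_score = rotation_sensitivity(oriented_encoder, gradient)
```

Here `invariant_score` stays at zero while `oriented_score` does not, which is the kind of contrast such a protocol is meant to expose. A real evaluation would apply the trained autoencoder to actual cloud patches and would likely consider arbitrary rotation angles.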
